forked from apache/spark


Merge master #2


… avoid CCE

### What changes were proposed in this pull request?

[SPARK-27122](#24088) fixes `ClassCastException` at `yarn` module by introducing `DelegatingServletContextHandler`. Initially, this was discovered with JDK9+, but the class path issues affected JDK8 environment, too. After [SPARK-28709](#25439), I also hit the similar issue at `streaming` module.

This PR aims to fix `streaming` module by adding `getContextPath` to `DelegatingServletContextHandler` and using it.

### Why are the changes needed?

Currently, when we test `streaming` module independently, it fails like the following.

```
$ build/mvn test -pl streaming
...
UISeleniumSuite:
- attaching and detaching a Streaming tab *** FAILED ***
  java.lang.ClassCastException: org.sparkproject.jetty.servlet.ServletContextHandler cannot be cast to org.eclipse.jetty.servlet.ServletContextHandler
  ...
Tests: succeeded 337, failed 1, canceled 0, ignored 1, pending 0
*** 1 TEST FAILED ***
[INFO] ------------------------------------------------------------------------
[INFO] BUILD FAILURE
[INFO] ------------------------------------------------------------------------
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with the modified tests. And do the following manually. Since you can observe this when you run `streaming` module test only (instead of running all), you need to install the changed `core` module and use it.

```
$ java -version
openjdk version "1.8.0_222"
OpenJDK Runtime Environment (AdoptOpenJDK)(build 1.8.0_222-b10)
OpenJDK 64-Bit Server VM (AdoptOpenJDK)(build 25.222-b10, mixed mode)

$ build/mvn install -DskipTests
$ build/mvn test -pl streaming
```

Closes #25791 from dongjoon-hyun/SPARK-29087.

Authored-by: Dongjoon Hyun <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…f date-time expressions ### What changes were proposed in this pull request? In the PR, I propose to fix comments of date-time expressions, and replace the `yyyy` pattern by `uuuu` when the implementation supposes the former one. ### Why are the changes needed? To make comments consistent to implementations. ### Does this PR introduce any user-facing change? No ### How was this patch tested? By running Scala Style checker. Closes #25796 from MaxGekk/year-pattern-uuuu-followup. Authored-by: Maxim Gekk <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

… stopping

### What changes were proposed in this pull request?

This patch fixes an NPE in SQLConf.get, which can only occur when SparkContext._dagScheduler is null because the SparkContext is stopping. The existing logic does not consider that an active SparkContext could be in the process of stopping.

Note that this is not easy to hit, because SparkContext.stop() blocks the main thread. Still, there are many cases in which SQLConf.get is accessed concurrently while SparkContext.stop() is executing: users run other threads, or a listener accesses SQLConf.get after dagScheduler is set to null (this is the case I encountered).

### Why are the changes needed?

The bug causes an NPE.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

The previous patch #25753 was tested with a new UT. Because that test disrupted other tests in concurrent test runs, it is excluded from this patch.

Closes #25790 from HeartSaVioR/SPARK-29046-v2.

Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>
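The race described in this commit can be illustrated with a minimal Python sketch. It is not Spark's code: `FakeContext`, `FakeScheduler`, `get_conf`, and the fallback to `DEFAULT_CONF` are all hypothetical names and choices, assumed here only to show why a field that another thread may clear should be read once and checked before use.

```python
from typing import Optional

# Hypothetical fallback used when no scheduler is available.
DEFAULT_CONF = {"source": "fallback"}


class FakeScheduler:
    """Hypothetical stand-in for a scheduler that carries a conf."""

    def __init__(self, conf):
        self.conf = conf


class FakeContext:
    """Hypothetical stand-in for a context whose scheduler is cleared by stop()."""

    def __init__(self):
        self.dag_scheduler: Optional[FakeScheduler] = FakeScheduler({"source": "scheduler"})

    def stop(self):
        # Mirrors the situation in the commit message: the field becomes None
        # while other threads may still be reading it.
        self.dag_scheduler = None


def get_conf(ctx: FakeContext):
    # Read the field once into a local. Checking ctx.dag_scheduler and then
    # dereferencing it again would leave a window for stop() to clear it.
    scheduler = ctx.dag_scheduler
    if scheduler is None:
        return DEFAULT_CONF
    return scheduler.conf


ctx = FakeContext()
before = get_conf(ctx)
ctx.stop()
after = get_conf(ctx)
print(before["source"], after["source"])
```

The point of the sketch is the single read into `scheduler`; what the real fix returns in the stopping case is not shown in this commit message.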

…ableIdentifierParserSuite ### What changes were proposed in this pull request? This PR adds the `namespaces` keyword to `TableIdentifierParserSuite`. ### Why are the changes needed? To improve the test. ### Does this PR introduce any user-facing change? No ### How was this patch tested? N/A Closes #25758 from highmoutain/3.0bugfix. Authored-by: changchun.wang <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

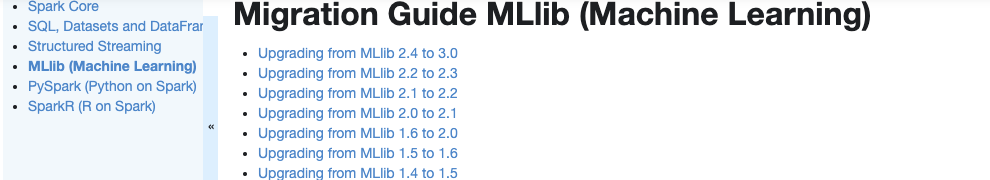

…Guide tab in Spark documentation

### What changes were proposed in this pull request?

Currently, there is no migration section for PySpark, SparkCore and Structured Streaming. It is difficult for users to know what to do when they upgrade.

This PR proposes to create a "Migration Guide" tab in the Spark documentation.

This page will contain migration guides for Spark SQL, PySpark, SparkR, MLlib, Structured Streaming and Core. Basically it is a refactoring.

Some new information is added, for which I will leave inline comments for easier review.

1. **MLlib**
   Merge [ml-guide.html#migration-guide](https://spark.apache.org/docs/latest/ml-guide.html#migration-guide) and [ml-migration-guides.html](https://spark.apache.org/docs/latest/ml-migration-guides.html)

   ```
   'docs/ml-guide.md'
           ↓ Merge new/old migration guides
   'docs/ml-migration-guide.md'
   ```

2. **PySpark**
   Extract PySpark specific items from https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html

   ```
   'docs/sql-migration-guide-upgrade.md'
           ↓ Extract PySpark specific items
   'docs/pyspark-migration-guide.md'
   ```

3. **SparkR**
   Move [sparkr.html#migration-guide](https://spark.apache.org/docs/latest/sparkr.html#migration-guide) into a separate file, and extract from [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html)

   ```
   'docs/sparkr.md'                     'docs/sql-migration-guide-upgrade.md'
    Move migration guide section ↘     ↙ Extract SparkR specific items
                        docs/sparkr-migration-guide.md
   ```

4. **Core**
   Newly created at `'docs/core-migration-guide.md'`. I skimmed resolved JIRAs at 3.0.0 and found some items to note.

5. **Structured Streaming**
   Newly created at `'docs/ss-migration-guide.md'`. I skimmed resolved JIRAs at 3.0.0 and found some items to note.

6. **SQL**
   Merged [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html) and [sql-migration-guide-hive-compatibility.html](https://spark.apache.org/docs/latest/sql-migration-guide-hive-compatibility.html)

   ```
   'docs/sql-migration-guide-hive-compatibility.md'     'docs/sql-migration-guide-upgrade.md'
    Move Hive compatibility section ↘                   ↙ Left over after filtering PySpark and SparkR items
                                    'docs/sql-migration-guide.md'
   ```

### Why are the changes needed?

So that users in production can migrate to higher versions effectively, and detect behaviour or breaking changes before upgrading and/or migrating.

### Does this PR introduce any user-facing change?

Yes, this changes Spark's documentation at https://spark.apache.org/docs/latest/index.html.

### How was this patch tested?

Manually build the doc. This can be verified as below:

```bash
cd docs
SKIP_API=1 jekyll build
open _site/index.html
```

Closes #25757 from HyukjinKwon/migration-doc.

Authored-by: HyukjinKwon <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request? It's more clear to only do table lookup in `ResolveTables` rule (for v2 tables) and `ResolveRelations` rule (for v1 tables). `ResolveInsertInto` should only resolve the `InsertIntoStatement` with resolved relations. ### Why are the changes needed? to make the code simpler ### Does this PR introduce any user-facing change? no ### How was this patch tested? existing tests Closes #25774 from cloud-fan/simplify. Authored-by: Wenchen Fan <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

…ency for JDK11

### What changes were proposed in this pull request?

This is a follow-up of #25638 to switch `scala-library` from `test` dependency to `compile` dependency in `network-common` module.

### Why are the changes needed?

Previously, we added `scala-library` as a test dependency to resolve the following failure, but that was insufficient. This PR aims to switch it to a compile dependency.

```
$ java -version
openjdk version "11.0.3" 2019-04-16
OpenJDK Runtime Environment AdoptOpenJDK (build 11.0.3+7)
OpenJDK 64-Bit Server VM AdoptOpenJDK (build 11.0.3+7, mixed mode)

$ mvn clean install -pl common/network-common -DskipTests
...
[INFO] --- scala-maven-plugin:4.2.0:doc-jar (attach-scaladocs) spark-network-common_2.12 ---
error: fatal error: object scala in compiler mirror not found.
one error found
[INFO] ------------------------------------------------------------------------
[INFO] BUILD FAILURE
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually, run the following on JDK11.

```
$ mvn clean install -pl common/network-common -DskipTests
```

Closes #25800 from dongjoon-hyun/SPARK-28932-2.

Authored-by: Dongjoon Hyun <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request?

This PR proposes to print bytecode statistics (max class bytecode size, max method bytecode size, max constant pool size, and # of inner classes) for generated classes in the debug output of `debugCodegen`. Since these metrics are critical for codegen framework development, I think it's worth printing them there. This PR intends to enable `debugCodegen` to print these metrics as follows:

```
scala> sql("SELECT sum(v) FROM VALUES(1) t(v)").debugCodegen
Found 2 WholeStageCodegen subtrees.
== Subtree 1 / 2 (maxClassCodeSize:2693; maxMethodCodeSize:124; maxConstantPoolSize:130(0.20% used); numInnerClasses:0) ==
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
*(1) HashAggregate(keys=[], functions=[partial_sum(cast(v#0 as bigint))], output=[sum#5L])
+- *(1) LocalTableScan [v#0]

Generated code:
/* 001 */ public Object generate(Object[] references) {
/* 002 */   return new GeneratedIteratorForCodegenStage1(references);
/* 003 */ }
...
```

### Why are the changes needed?

For efficient developments

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually tested

Closes #25766 from maropu/PrintBytecodeStats.

Authored-by: Takeshi Yamamuro <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

…safeShuffleWriter and SortShuffleWriter ### What changes were proposed in this pull request? The previous refactors of the shuffle writers using the shuffle writer plugin resulted in shuffle write metric updates - particularly write times - being lost in particular situations. This patch restores the lost metric updates. ### Why are the changes needed? This fixes a regression. I'm pretty sure that without this, the Spark UI will lose shuffle write time information. ### Does this PR introduce any user-facing change? No change from Spark 2.4. Without this, there would be a user-facing bug in Spark 3.0. ### How was this patch tested? Existing unit tests. Closes #25780 from mccheah/fix-write-metrics. Authored-by: mcheah <[email protected]> Signed-off-by: Imran Rashid <[email protected]>

… create table through spark-sql or beeline

## What changes were proposed in this pull request?

Fix the table owner so that it uses the user name instead of the principal when a table is created through spark-sql. `private val userName = conf.getUser` gets the UGI's userName, which is the principal. I copied the source code into HiveClientImpl and used ugi.getShortUserName() instead of ugi.getUserName(). The owner is now displayed correctly.

## How was this patch tested?

1. Create a table in a Kerberos cluster.
2. Use "desc formatted tbName" to check the owner.

Closes #23952 from hddong/SPARK-26929-fix-table-owner.

Lead-authored-by: hongdd <[email protected]>

Co-authored-by: hongdongdong <[email protected]>

Signed-off-by: Marcelo Vanzin <[email protected]>
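To make the difference between a principal and a short user name concrete, here is a minimal Python sketch. It is a simplification and an assumption: Hadoop's real `getShortUserName()` applies configurable mapping rules, whereas the hypothetical `short_user_name` below only keeps the text before the first `/` or `@`.

```python
def short_user_name(principal: str) -> str:
    """Return the part of a Kerberos-style principal before the first '/' or '@'.

    Simplified illustration only; real deployments may map principals
    to local user names with custom rules.
    """
    for separator in ("/", "@"):
        principal = principal.split(separator, 1)[0]
    return principal


# Example principals are made up for illustration.
print(short_user_name("alice/host.example.com@EXAMPLE.COM"))
print(short_user_name("bob@EXAMPLE.COM"))
print(short_user_name("carol"))
```

With a rule like this, a table created by `alice/host.example.com@EXAMPLE.COM` would be owned by `alice` rather than by the full principal.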

…esn't break with default conf of includeHeaders ### What changes were proposed in this pull request? This patch adds new UT to ensure existing query (before Spark 3.0.0) with checkpoint doesn't break with default configuration of "includeHeaders" being introduced via SPARK-23539. This patch also modifies existing test which checks type of columns to also check headers column as well. ### Why are the changes needed? The patch adds missing tests which guarantees backward compatibility of the change of SPARK-23539. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? UT passed. Closes #25792 from HeartSaVioR/SPARK-23539-FOLLOWUP. Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]> Signed-off-by: Sean Owen <[email protected]>

…ression in HashAggregateExec

### What changes were proposed in this pull request?

This PR proposes to define an individual method for each common subexpression in HashAggregateExec. In the current master, the common subexpression elimination code in HashAggregateExec is expanded in a single method: https://github.com/apache/spark/blob/4664a082c2c7ac989e818958c465c72833d3ccfe/sql/core/src/main/scala/org/apache/spark/sql/execution/aggregate/HashAggregateExec.scala#L397

The method size can be too big for JIT compilation, so I believe splitting it is beneficial for performance. For example, in a query `SELECT SUM(a + b), AVG(a + b + c) FROM VALUES (1, 1, 1) t(a, b, c)`,

the current master generates:

```
/* 098 */   private void agg_doConsume_0(InternalRow localtablescan_row_0, int agg_expr_0_0, int agg_expr_1_0, int agg_expr_2_0) throws java.io.IOException {
/* 099 */     // do aggregate
/* 100 */     // common sub-expressions
/* 101 */     int agg_value_6 = -1;
/* 102 */
/* 103 */     agg_value_6 = agg_expr_0_0 + agg_expr_1_0;
/* 104 */
/* 105 */     int agg_value_5 = -1;
/* 106 */
/* 107 */     agg_value_5 = agg_value_6 + agg_expr_2_0;
/* 108 */     boolean agg_isNull_4 = false;
/* 109 */     long agg_value_4 = -1L;
/* 110 */     if (!false) {
/* 111 */       agg_value_4 = (long) agg_value_5;
/* 112 */     }
/* 113 */     int agg_value_10 = -1;
/* 114 */
/* 115 */     agg_value_10 = agg_expr_0_0 + agg_expr_1_0;
/* 116 */     // evaluate aggregate functions and update aggregation buffers
/* 117 */     agg_doAggregate_sum_0(agg_value_10);
/* 118 */     agg_doAggregate_avg_0(agg_value_4, agg_isNull_4);
/* 119 */
/* 120 */   }
```

On the other hand, this PR generates:

```
/* 121 */   private void agg_doConsume_0(InternalRow localtablescan_row_0, int agg_expr_0_0, int agg_expr_1_0, int agg_expr_2_0) throws java.io.IOException {
/* 122 */     // do aggregate
/* 123 */     // common sub-expressions
/* 124 */     long agg_subExprValue_0 = agg_subExpr_0(agg_expr_2_0, agg_expr_0_0, agg_expr_1_0);
/* 125 */     int agg_subExprValue_1 = agg_subExpr_1(agg_expr_0_0, agg_expr_1_0);
/* 126 */     // evaluate aggregate functions and update aggregation buffers
/* 127 */     agg_doAggregate_sum_0(agg_subExprValue_1);
/* 128 */     agg_doAggregate_avg_0(agg_subExprValue_0);
/* 129 */
/* 130 */   }
```

I ran some micro benchmarks for this PR:

```
(base) maropu~:$system_profiler SPHardwareDataType
Hardware:
    Hardware Overview:
      Processor Name: Intel Core i5
      Processor Speed: 2 GHz
      Number of Processors: 1
      Total Number of Cores: 2
      L2 Cache (per Core): 256 KB
      L3 Cache: 4 MB
      Memory: 8 GB

(base) maropu~:$java -version
java version "1.8.0_181"
Java(TM) SE Runtime Environment (build 1.8.0_181-b13)
Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

(base) maropu~:$ /bin/spark-shell --master=local[1] --conf spark.driver.memory=8g --conf spark.sql.shuffle.partitions=1 -v

val numCols = 40
val colExprs = "id AS key" +: (0 until numCols).map { i => s"id AS _c$i" }
spark.range(3000000).selectExpr(colExprs: _*).createOrReplaceTempView("t")
val aggExprs = (2 until numCols).map { i =>
  (0 until i).map(d => s"_c$d")
    .mkString("AVG(", " + ", ")")
}

// Drops the time of a first run then pick that of a second run
timer { sql(s"SELECT ${aggExprs.mkString(", ")} FROM t").write.format("noop").save() }

// the master
maxCodeGen: 12957
Elapsed time: 36.309858661s

// this pr
maxCodeGen=4184
Elapsed time: 2.399490285s
```

### Why are the changes needed?

To avoid the too-long-function issue in JVMs.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added tests in `WholeStageCodegenSuite`

Closes #25710 from maropu/SplitSubexpr.

Authored-by: Takeshi Yamamuro <[email protected]>

Signed-off-by: Takeshi Yamamuro <[email protected]>

…InSet expression

### What changes were proposed in this pull request?

When InSet generates Java switch-based code and the input set is empty, we no longer generate a switch statement, but a simple expression equivalent to the default case of the original switch.

### Why are the changes needed?

SPARK-26205 adds an optimization to InSet that generates a Java switch statement for certain cases. When the given set is empty, it is possible that codegen causes a compilation error:

```
[info] - SPARK-29100: InSet with empty input set *** FAILED *** (58 milliseconds)
[info]   Code generation of input[0, int, true] INSET () failed:
[info]   org.codehaus.janino.InternalCompilerException: failed to compile: org.codehaus.janino.InternalCompilerException: Compiling "GeneratedClass" in "generated.java": Compiling "apply(java.lang.Object _i)"; apply(java.lang.Object _i): Operand stack inconsistent at offset 45: Previous size 0, now 1
[info]   org.codehaus.janino.InternalCompilerException: failed to compile: org.codehaus.janino.InternalCompilerException: Compiling "GeneratedClass" in "generated.java": Compiling "apply(java.lang.Object _i)"; apply(java.lang.Object _i): Operand stack inconsistent at offset 45: Previous size 0, now 1
[info]         at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$.org$apache$spark$sql$catalyst$expressions$codegen$CodeGenerator$$doCompile(CodeGenerator.scala:1308)
[info]         at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$$anon$1.load(CodeGenerator.scala:1386)
[info]         at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator$$anon$1.load(CodeGenerator.scala:1383)
```

### Does this PR introduce any user-facing change?

Yes. Previously, when users had InSet against an empty set, the generated code caused a compilation error. This patch fixes it.

### How was this patch tested?

Unit test added.

Closes #25806 from viirya/SPARK-29100.

Authored-by: Liang-Chi Hsieh <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
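The idea of falling back to the default branch for an empty set can be sketched with a small Python code generator. This is an illustration under assumptions, not the InSet implementation: `gen_inset_switch` is a hypothetical helper, it emits Java-like text for integer values only, and it ignores null handling.

```python
def gen_inset_switch(value_var: str, result_var: str, values) -> str:
    """Emit Java-like source that sets result_var to whether value_var is in values."""
    distinct = sorted(set(values))
    if not distinct:
        # Empty set: skip the switch entirely and emit only what its
        # default branch would have done.
        return f"{result_var} = false;"
    labels = "\n".join(f"  case {v}:" for v in distinct)
    return (
        f"switch ({value_var}) {{\n"
        f"{labels}\n"
        f"    {result_var} = true;\n"
        f"    break;\n"
        f"  default:\n"
        f"    {result_var} = false;\n"
        f"}}"
    )


print(gen_inset_switch("v", "r", [2, 1]))
print(gen_inset_switch("v", "r", []))
```

Special-casing the empty input in the generator avoids emitting a switch with no case labels, which is the shape of code the commit message associates with the compilation failure.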

### What changes were proposed in this pull request? Add multi-column support to Bucketizer on the Python side. ### Why are the changes needed? Bucketizer should support multiple columns like the Scala side does. ### Does this PR introduce any user-facing change? Yes, this PR adds a new Python API. ### How was this patch tested? Added test suites. Closes #25801 from zhengruifeng/20542_py. Authored-by: zhengruifeng <[email protected]> Signed-off-by: zhengruifeng <[email protected]>

### What changes were proposed in this pull request? This patch proposes to add new tests to test the username of HiveClient to prevent changing the semantic unintentionally. The owner of Hive table has been changed back-and-forth, principal -> username -> principal, and looks like the change is not intentional. (Please refer [SPARK-28996](https://issues.apache.org/jira/browse/SPARK-28996) for more details.) This patch intends to prevent this. This patch also renames previous HiveClientSuite(s) to HivePartitionFilteringSuite(s) as it was commented as TODO, as well as previous tests are too narrowed to test only partition filtering. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Newly added UTs. Closes #25696 from HeartSaVioR/SPARK-28996. Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request?

In the PR, I propose to create an instance of `TimestampFormatter` only once at the initialization, and reuse it inside of `nullSafeEval()` and `doGenCode()` in the case when the `fmt` parameter is foldable.

### Why are the changes needed?

The changes improve performance of the `date_format()` function.

Before:

```
format date:                             Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
format date wholestage off                     7180 / 7181          1.4         718.0       1.0X
format date wholestage on                      7051 / 7194          1.4         705.1       1.0X
```

After:

```
format date:                             Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
format date wholestage off                     4787 / 4839          2.1         478.7       1.0X
format date wholestage on                      4736 / 4802          2.1         473.6       1.0X
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

By existing test suites `DateExpressionsSuite` and `DateFunctionsSuite`.

Closes #25782 from MaxGekk/date_format-foldable.

Authored-by: Maxim Gekk <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>

…UG in Executor Plugin API ### What changes were proposed in this pull request? Log levels in Executor.scala are changed from DEBUG to INFO. ### Why are the changes needed? Logging level DEBUG is too low here. These messages are simple acknowledgements of successful loading and initialization of plugins, so it's better to keep them at INFO level. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Manually tested. Closes #25634 from iRakson/ExecutorPlugin. Authored-by: iRakson <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request?

I fix the flaky test "barrier task killed":

* Split the interrupt/no-interrupt tests into separate SparkContexts, to prevent them from influencing each other.
* Only check the exception on partition-0. Partition-1 hangs on sleep and may throw a different exception.

### Why are the changes needed?

To make the test robust.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

N/A

Closes #25799 from WeichenXu123/oss_fix_barrier_test.

Authored-by: WeichenXu <[email protected]>

Signed-off-by: WeichenXu <[email protected]>

…heck thread termination

### What changes were proposed in this pull request?

`PipedRDD` will invoke `stdinWriterThread.interrupt()` at task completion, and `obj.wait` will get `InterruptedException`. However, there is a possibility that the thread termination is delayed, because the thread resumes from `obj.wait()` with that exception. To prevent test flakiness, we need to use `eventually`. Also, this PR fixes a typo in a code comment and a variable name.

### Why are the changes needed?

```
- stdin writer thread should be exited when task is finished *** FAILED ***
  Some(Thread[stdin writer for List(cat),5,]) was not empty (PipedRDDSuite.scala:107)
```

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-2.7/6867/testReport/junit/org.apache.spark.rdd/PipedRDDSuite/stdin_writer_thread_should_be_exited_when_task_is_finished/

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manual.

We can reproduce the same failure as in Jenkins if we catch `InterruptedException` and sleep longer than the `eventually` timeout inside the test code. The following is an example to reproduce it.

```scala
val nums = sc.makeRDD(Array(1, 2, 3, 4), 1).map { x =>
  try {
    obj.synchronized {
      obj.wait() // make the thread wait here.
    }
  } catch {
    case ie: InterruptedException =>
      // Sleep longer than the `eventually` timeout to delay termination.
      Thread.sleep(15000)
      throw ie
  }
  x
}
```

Closes #25808 from dongjoon-hyun/SPARK-29104.

Authored-by: Dongjoon Hyun <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>
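For readers unfamiliar with the `eventually` pattern used in the test, here is a minimal Python sketch of the same idea. It is not ScalaTest's API: the `eventually` helper, its `timeout` and `interval` parameters, and the `slow_worker` thread are all hypothetical and exist only to show why polling beats a one-shot assertion when thread termination can lag.

```python
import threading
import time


def eventually(condition, timeout=5.0, interval=0.05):
    """Poll condition() until it returns True or the timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return
        if time.monotonic() >= deadline:
            raise AssertionError("condition not met within timeout")
        time.sleep(interval)


def slow_worker():
    # Simulates a thread that exits a little after the task completes.
    time.sleep(0.2)


worker = threading.Thread(target=slow_worker, name="stdin writer (demo)")
worker.start()

# Asserting `not worker.is_alive()` immediately would be racy; polling is not.
eventually(lambda: not worker.is_alive(), timeout=5.0)
print("worker terminated:", not worker.is_alive())
```

The timeout still bounds the wait, which is why the reproduction in this commit sleeps longer than the timeout to force the failure.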

### What changes were proposed in this pull request?

This PR refines the code of DELETE, including:

1. make `whereClause` optional, in which case DELETE will delete all of the data of a table;
2. add more test cases;
3. some other refinements.

This is a follow-up of SPARK-28351.

### Why are the changes needed?

An optional where clause in DELETE respects the SQL standard.

### Does this PR introduce any user-facing change?

Yes. But since this is a non-released feature, this change does not have any end-user effects.

### How was this patch tested?

New test cases are added.

Closes #25652 from xianyinxin/SPARK-28950.

Authored-by: xy_xin <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
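The semantics of an optional where clause can be sketched over an in-memory list of rows. This is only an illustration: `delete_from` and the sample table are hypothetical, and SQL details such as NULL-valued predicates are not modeled.

```python
from typing import Callable, Dict, List, Optional

Row = Dict[str, int]


def delete_from(table: List[Row], where: Optional[Callable[[Row], bool]] = None) -> List[Row]:
    """Return the rows that remain after the delete.

    With no predicate, every row is deleted, which matches a DELETE
    statement that has no WHERE clause.
    """
    if where is None:
        return []
    return [row for row in table if not where(row)]


table = [{"id": 1}, {"id": 2}, {"id": 3}]
remaining_filtered = delete_from(table, where=lambda row: row["id"] >= 2)
remaining_all = delete_from(table)
print(remaining_filtered, remaining_all)
```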

### What changes were proposed in this pull request? Document the SET/RESET statements in the SQL Reference Guide. ### Why are the changes needed? Currently Spark lacks documentation on the supported SQL constructs, causing confusion among users who sometimes have to look at the code to understand the usage. This is aimed at addressing this issue. ### Does this PR introduce any user-facing change? Yes. #### Before: There was no documentation for this. #### After: **SET** **RESET** ### How was this patch tested? Manually reviewed and tested using jekyll build --serve Closes #25606 from sharangk/resetDoc. Authored-by: sharangk <[email protected]> Signed-off-by: Xiao Li <[email protected]>

…erence ### What changes were proposed in this pull request? Document the CREATE DATABASE statement in the SQL Reference Guide. ### Why are the changes needed? Currently Spark lacks documentation on the supported SQL constructs, causing confusion among users who sometimes have to look at the code to understand the usage. This is aimed at addressing this issue. ### Does this PR introduce any user-facing change? Yes. ### Before: There was no documentation for this. ### After: ### How was this patch tested? Manually reviewed and tested using jekyll build --serve Closes #25595 from sharangk/createDbDoc. Lead-authored-by: sharangk <[email protected]> Co-authored-by: Xiao Li <[email protected]> Signed-off-by: Xiao Li <[email protected]>

…stamp() ### What changes were proposed in this pull request? Added new benchmarks for `make_date()` and `make_timestamp()` to detect performance issues, and figure out functions speed on foldable arguments. - `make_date()` is benchmarked on fully foldable arguments. - `make_timestamp()` is benchmarked on corner case `60.0`, foldable time fields and foldable date. ### Why are the changes needed? To find out inputs where `make_date()` and `make_timestamp()` have performance problems. This should be useful in the future optimizations of the functions and users apps. ### Does this PR introduce any user-facing change? No ### How was this patch tested? By running the benchmark and manually checking generated dates/timestamps. Closes #25813 from MaxGekk/make_datetime-benchmark. Authored-by: Maxim Gekk <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…nical host name differs from localhost

### What changes were proposed in this pull request?

`KafkaDelegationTokenSuite` fails on different platforms with the following problem:

```
19/09/11 11:07:42.690 pool-1-thread-1-SendThread(localhost:44965) DEBUG ZooKeeperSaslClient: creating sasl client: Client=zkclient/localhostEXAMPLE.COM;service=zookeeper;serviceHostname=localhost.localdomain
...
NIOServerCxn.Factory:localhost/127.0.0.1:0: Zookeeper Server failed to create a SaslServer to interact with a client during session initiation:
javax.security.sasl.SaslException: Failure to initialize security context [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos credentails)]
    at com.sun.security.sasl.gsskerb.GssKrb5Server.<init>(GssKrb5Server.java:125)
    at com.sun.security.sasl.gsskerb.FactoryImpl.createSaslServer(FactoryImpl.java:85)
    at javax.security.sasl.Sasl.createSaslServer(Sasl.java:524)
    at org.apache.zookeeper.util.SecurityUtils$2.run(SecurityUtils.java:233)
    at org.apache.zookeeper.util.SecurityUtils$2.run(SecurityUtils.java:229)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.zookeeper.util.SecurityUtils.createSaslServer(SecurityUtils.java:228)
    at org.apache.zookeeper.server.ZooKeeperSaslServer.createSaslServer(ZooKeeperSaslServer.java:44)
    at org.apache.zookeeper.server.ZooKeeperSaslServer.<init>(ZooKeeperSaslServer.java:38)
    at org.apache.zookeeper.server.NIOServerCnxn.<init>(NIOServerCnxn.java:100)
    at org.apache.zookeeper.server.NIOServerCnxnFactory.createConnection(NIOServerCnxnFactory.java:186)
    at org.apache.zookeeper.server.NIOServerCnxnFactory.run(NIOServerCnxnFactory.java:227)
    at java.lang.Thread.run(Thread.java:748)
Caused by: GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos credentails)
    at sun.security.jgss.krb5.Krb5AcceptCredential.getInstance(Krb5AcceptCredential.java:87)
    at sun.security.jgss.krb5.Krb5MechFactory.getCredentialElement(Krb5MechFactory.java:127)
    at sun.security.jgss.GSSManagerImpl.getCredentialElement(GSSManagerImpl.java:193)
    at sun.security.jgss.GSSCredentialImpl.add(GSSCredentialImpl.java:427)
    at sun.security.jgss.GSSCredentialImpl.<init>(GSSCredentialImpl.java:62)
    at sun.security.jgss.GSSManagerImpl.createCredential(GSSManagerImpl.java:154)
    at com.sun.security.sasl.gsskerb.GssKrb5Server.<init>(GssKrb5Server.java:108)
    ... 13 more
NIOServerCxn.Factory:localhost/127.0.0.1:0: Client attempting to establish new session at /127.0.0.1:33742
SyncThread:0: Creating new log file: log.1
SyncThread:0: Established session 0x100003736ae0000 with negotiated timeout 10000 for client /127.0.0.1:33742
pool-1-thread-1-SendThread(localhost:35625): Session establishment complete on server localhost/127.0.0.1:35625, sessionid = 0x100003736ae0000, negotiated timeout = 10000
pool-1-thread-1-SendThread(localhost:35625): ClientCnxn:sendSaslPacket:length=0
pool-1-thread-1-SendThread(localhost:35625): saslClient.evaluateChallenge(len=0)
pool-1-thread-1-EventThread: zookeeper state changed (SyncConnected)
NioProcessor-1: No server entry found for kerberos principal name zookeeper/localhost.localdomainEXAMPLE.COM
NioProcessor-1: No server entry found for kerberos principal name zookeeper/localhost.localdomainEXAMPLE.COM
NioProcessor-1: Server not found in Kerberos database (7)
NioProcessor-1: Server not found in Kerberos database (7)
```

The problem is reproducible if the order of `localhost` and `localhost.localdomain` is exchanged:

```
[systestgsomogyi-build spark]$ cat /etc/hosts
127.0.0.1   localhost.localdomain localhost localhost4 localhost4.localdomain4
::1         localhost.localdomain localhost localhost6 localhost6.localdomain6
```

The main problem is that `ZkClient` connects to the canonical loopback address (which is not necessarily `localhost`).

### Why are the changes needed?

`KafkaDelegationTokenSuite` failed in some environments.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing unit tests on different platforms.

Closes #25803 from gaborgsomogyi/SPARK-29027.

Authored-by: Gabor Somogyi <[email protected]>

Signed-off-by: Marcelo Vanzin <[email protected]>
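To check which name the loopback address resolves to on a given machine, a small Python snippet may help. This is a diagnostic sketch, not part of the patch, and it is an assumption that Python's resolver lookup behaves like the one the test's ZooKeeper client relies on; the printed name depends entirely on the local resolver configuration, such as the order of names in `/etc/hosts`.

```python
import socket

# Ask the resolver for the fully qualified name of the loopback address.
# getfqdn() returns its argument unchanged if no name can be found.
canonical = socket.getfqdn("127.0.0.1")
print(canonical)
```

If this prints something other than `localhost`, the environment is likely one where a service principal registered for `localhost` would not match the host name used by the client.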

### What changes were proposed in this pull request? Until now, we are testing JDK8/11 with Hadoop-3.2. This PR aims to extend the test coverage for JDK8/Hadoop-2.7. ### Why are the changes needed? This will prevent Hadoop 2.7 compile/package issues at PR stage. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? GitHub Action on this PR shows all three combinations now. And, this is irrelevant to Jenkins test. Closes #25824 from dongjoon-hyun/SPARK-29125. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request?

Adds a new cogroup Pandas UDF. This allows two grouped dataframes to be cogrouped together, and a (pandas.DataFrame, pandas.DataFrame) -> pandas.DataFrame UDF to be applied to each cogroup.

**Example usage**

```
import pandas as pd
from pyspark.sql.functions import pandas_udf, PandasUDFType

df1 = spark.createDataFrame(
    [(20000101, 1, 1.0), (20000101, 2, 2.0), (20000102, 1, 3.0), (20000102, 2, 4.0)],
    ("time", "id", "v1"))

df2 = spark.createDataFrame(
    [(20000101, 1, "x"), (20000101, 2, "y")],
    ("time", "id", "v2"))

# Each call receives the rows of one cogroup from df1 and df2 as pandas DataFrames.
@pandas_udf("time int, id int, v1 double, v2 string", PandasUDFType.COGROUPED_MAP)
def asof_join(l, r):
    return pd.merge_asof(l, r, on="time", by="id")

df1.groupby("id").cogroup(df2.groupby("id")).apply(asof_join).show()
```

+--------+---+---+---+

| time| id| v1| v2|

+--------+---+---+---+

|20000101| 1|1.0| x|

|20000102| 1|3.0| x|

|20000101| 2|2.0| y|

|20000102| 2|4.0| y|

+--------+---+---+---+
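
To make the per-cogroup call more concrete, here is a minimal plain-Python sketch of what a backward as-of join computes for the rows of a single `id`. The function `asof_join_rows` and the list-of-dicts row format are hypothetical names used only for illustration; they are not part of PySpark or pandas, and unlike `pd.merge_asof` this sketch simply omits the right-hand columns when no match exists.

```python
from bisect import bisect_right

def asof_join_rows(left, right, on):
    """For each left row, attach the last right row whose key is <= the left key."""
    right_sorted = sorted(right, key=lambda row: row[on])
    right_keys = [row[on] for row in right_sorted]
    joined = []
    for row in sorted(left, key=lambda row: row[on]):
        pos = bisect_right(right_keys, row[on])
        # No earlier-or-equal right row: keep only the left columns.
        match = right_sorted[pos - 1] if pos > 0 else {}
        merged = dict(match)
        merged.update(row)  # left values win on shared column names
        joined.append(merged)
    return joined

# Rows of the cogroup with id == 1 from the example above.
left = [{"time": 20000101, "id": 1, "v1": 1.0}, {"time": 20000102, "id": 1, "v1": 3.0}]
right = [{"time": 20000101, "id": 1, "v2": "x"}]
result = asof_join_rows(left, right, on="time")
```

Both left rows pick up `v2 = "x"`, which matches the two `id = 1` rows in the output table.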

### How was this patch tested?

Added unit test test_pandas_udf_cogrouped_map

Closes #24981 from d80tb7/SPARK-27463-poc-arrow-stream.

Authored-by: Chris Martin <[email protected]>

Signed-off-by: Bryan Cutler <[email protected]>

…f `bytesHash(data)` ### What changes were proposed in this pull request? This PR replaces the `bytesHash(data)` API invocation with the underlying `bytesHash(data, arraySeed)` invocation. ```scala def bytesHash(data: Array[Byte]): Int = bytesHash(data, arraySeed) ``` ### Why are the changes needed? The original API changed between Scala versions in the following commit: from Scala 2.12.9, the semantics of the function are different. If we use the underlying form, we are safe during Scala version migration. - scala/scala@846ee2b#diff-ac889f851e109fc4387cd738d52ce177 ### Does this PR introduce any user-facing change? No. ### How was this patch tested? This is a kind of refactoring. Pass the Jenkins with the existing tests. Closes #25821 from dongjoon-hyun/SPARK-SCALA-HASH. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

…all clients **What changes were proposed in this pull request?** Issue 1: No modification is required, as these are different formats for the same information. In the case of a Spark DataFrame, null is correct. Issue 2 (mentioned in the JIRA): Spark SQL `desc formatted tablename` does not show the header `# col_name,data_type,comment`; the header appears to have been removed intentionally as part of SPARK-20954. Issue 3: Corrected the Last Access time. The value should display 'UNKNOWN' because the system currently does not support evaluating the last access time. Hive was setting the last access time to '0' in the metastore even though the Spark `CatalogTable` last access time was set to -1. This change makes the last access time validation work, in which Spark shows 'UNKNOWN' if the value is -1 (meaning not evaluated). **Does this PR introduce any user-facing change?** No **How was this patch tested?** Locally, and corrected a UT. A test report was attached as a screenshot. Closes #25720 from sujith71955/master_describe_info. Authored-by: s71955 <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

…annotations in project ### What changes were proposed in this pull request? In this PR, I fix some annotation errors and remove meaningless annotations in project. ### Why are the changes needed? There are some annotation errors and meaningless annotations in project. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Verified manually. Closes #25809 from turboFei/SPARK-29113. Authored-by: turbofei <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

…er faces a fatal exception

### What changes were proposed in this pull request?

In `ApplicationMaster.Reporter` thread, fatal exception information is swallowed. It's better to expose it.

We found that our Thrift server was shut down by a fatal exception, but the log contained no useful information:

> 19/09/16 06:59:54,498 INFO [Reporter] yarn.ApplicationMaster:54 : Final app status: FAILED, exitCode: 12, (reason: Exception was thrown 1 time(s) from Reporter thread.)

19/09/16 06:59:54,500 ERROR [Driver] thriftserver.HiveThriftServer2:91 : Error starting HiveThriftServer2

java.lang.InterruptedException: sleep interrupted

at java.lang.Thread.sleep(Native Method)

at org.apache.spark.sql.hive.thriftserver.HiveThriftServer2$.main(HiveThriftServer2.scala:160)

at org.apache.spark.sql.hive.thriftserver.HiveThriftServer2.main(HiveThriftServer2.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$4.run(ApplicationMaster.scala:708)

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manual test

Closes #25810 from LantaoJin/SPARK-29112.

Authored-by: LantaoJin <[email protected]>

Signed-off-by: jerryshao <[email protected]>

### What changes were proposed in this pull request? Currently the checks in the Analyzer require that V2 Tables have BATCH_WRITE defined for all tables that have V1 Write fallbacks. This is confusing as these tables may not have the V2 writer interface implemented yet. This PR adds this table capability to these checks. In addition, this allows V2 tables to leverage the V1 APIs for DataFrameWriter.save if they do extend the V1_BATCH_WRITE capability. This way, these tables can continue to receive partitioning information and also perform checks for the existence of tables, and support all SaveModes. ### Why are the changes needed? Partitioned saves through DataFrame.write are otherwise broken for V2 tables that support the V1 write API. ### Does this PR introduce any user-facing change? No ### How was this patch tested? V1WriteFallbackSuite Closes #25767 from brkyvz/bwcheck. Authored-by: Burak Yavuz <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

…est` and minor documentation correction ### What changes were proposed in this pull request? This PR is a followup of #25838 and proposes to create an actual test case under `src/test`. Previously, only a compile-only test existed under `src/main`. It also rewords the documentation of `SerializableConfiguration` so that it only describes what the class does. ### Why are the changes needed? Test code belongs in `src/test`, not `src/main`, and it should exercise at least the basic functionality. ### Does this PR introduce any user-facing change? No, except for a minor doc change. ### How was this patch tested? A unit test was added. Closes #25867 from HyukjinKwon/SPARK-29158. Authored-by: HyukjinKwon <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request? This PR uses `java-version` instead of `version` for GitHub Action. More details: actions/setup-java@204b974 actions/setup-java@ac25aee ### Why are the changes needed? The `version` property will not be supported after October 1, 2019. ### Does this PR introduce any user-facing change? No ### How was this patch tested? N/A Closes #25866 from wangyum/java-version. Authored-by: Yuming Wang <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…o be up in SparkContextSuite ### What changes were proposed in this pull request? This patch proposes to increase the timeout for waiting for executor(s) to be up in SparkContextSuite, as we observed these tests failing due to the wait timing out. ### Why are the changes needed? In some cases the CI build is extremely slow and needs 3x or more time to pass the test. (https://issues.apache.org/jira/browse/SPARK-29139?focusedCommentId=16934034&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-16934034) A higher timeout does not add latency, because the code checks the condition in a loop and sleeps only 10 ms per iteration. ### Does this PR introduce any user-facing change? No ### How was this patch tested? N/A, as the case is not likely to occur frequently. Closes #25864 from HeartSaVioR/SPARK-29139. Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>
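
The latency argument can be illustrated with a small polling helper. This is only a sketch in Python, not the code in `SparkContextSuite`; the name `wait_until` and its parameters are assumptions made for the example. The timeout only bounds the worst case, while a condition that is already satisfied returns within one polling interval.

```python
import time

def wait_until(condition, timeout_s, interval_s=0.01):
    """Poll `condition` until it returns True or `timeout_s` elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval_s)  # 10 ms between checks, as in the description above
    return condition()  # one final check at the deadline

# A generous timeout costs nothing when the condition holds immediately.
start = time.monotonic()
ready = wait_until(lambda: True, timeout_s=60.0)
elapsed = time.monotonic() - start
```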

### What changes were proposed in this pull request? This PR allows Python toLocalIterator to prefetch the next partition while the first partition is being collected. The PR also adds a demo micro benchmark in the examples directory, which we may or may not wish to keep. ### Why are the changes needed? In https://issues.apache.org/jira/browse/SPARK-23961 / 5e79ae3 we changed PySpark to only pull one partition at a time. This is memory efficient, but if partitions take time to compute this can mean we're spending more time blocking. ### Does this PR introduce any user-facing change? A new param is added to toLocalIterator ### How was this patch tested? A new unit test inside of `test_rdd.py` checks the time at which the elements are evaluated. Another test, checking that the results remain the same, is added to `test_dataframe.py`. I also ran a micro benchmark in the examples directory, `prefetch.py`, which shows an improvement of ~40% in this specific use case. > > 19/08/16 17:11:36 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable > Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties > Setting default log level to "WARN". > To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel). > Running timers: > > [Stage 32:> (0 + 1) / 1] > Results: > > Prefetch time: > > 100.228110831 > > > Regular time: > > 188.341721614 > > > Closes #25515 from holdenk/SPARK-27659-allow-pyspark-tolocalitr-to-prefetch. Authored-by: Holden Karau <[email protected]> Signed-off-by: Holden Karau <[email protected]>
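
The idea of prefetching can be sketched without Spark. The generator below is a hypothetical illustration, not the PySpark implementation: `iterate_with_prefetch` and the zero-argument "partition loader" callables are names assumed for the example. While the caller consumes one partition, the next one is already being loaded on a background thread.

```python
from concurrent.futures import ThreadPoolExecutor

def iterate_with_prefetch(partition_loaders):
    """Yield elements partition by partition, loading the next one in the background."""
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = None
        for loader in partition_loaders:
            upcoming = pool.submit(loader)  # start loading before yielding the previous one
            if pending is not None:
                yield from pending.result()
            pending = upcoming
        if pending is not None:
            yield from pending.result()

# Three fake partitions; each loader stands in for an expensive computation.
loaders = [lambda i=i: list(range(i * 3, i * 3 + 3)) for i in range(3)]
collected = list(iterate_with_prefetch(loaders))
```

The trade-off is the one described above: up to two partitions are held in memory at once in exchange for less time spent blocking.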

### What changes were proposed in this pull request? Switch from using a Thread sleep for waiting for commands to finish to just waiting for the command to finish with a watcher & improve the error messages in the SecretsTestsSuite. ### Why are the changes needed? Currently some of the Spark Kubernetes tests have race conditions with command execution, and the frequent use of eventually makes debugging test failures difficult. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Existing tests pass after removal of thread.sleep Closes #25765 from holdenk/SPARK-28937SPARK-28936-improve-kubernetes-integration-tests. Authored-by: Holden Karau <[email protected]> Signed-off-by: Holden Karau <[email protected]>

…from python execution in Python 2

### What changes were proposed in this pull request?

This PR allows non-ascii string as an exception message in Python 2 by explicitly en/decoding in case of `str` in Python 2.

### Why are the changes needed?

Previously, PySpark would hang when the `UnicodeDecodeError` occurred, and the real exception could not be passed to the JVM side.

See the reproducer as below:

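```python
from pyspark.sql import SparkSession

def f():
    # Non-ASCII exception message that used to trigger the hang in Python 2
    raise Exception("中")

spark = SparkSession.builder.master('local').getOrCreate()
spark.sparkContext.parallelize([1]).map(lambda x: f()).count()
```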

### Does this PR introduce any user-facing change?

Users should no longer observe hanging in similar cases.

### How was this patch tested?

Added a new test and checked manually.

This pr is based on #18324, credits should also go to dataknocker.

To make lint-python happy for python3, it also includes a followup fix for #25814

Closes #25847 from advancedxy/python_exception_19926_and_21045.

Authored-by: Xianjin YE <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request?

Modify the approach in `DataFrameNaFunctions.fillValue`: the new one uses `df.withColumns`, which only addresses the columns that need to be filled. After this change, ambiguous fields are no longer detected for a joined DataFrame.

### Why are the changes needed?

Before this change, when a joined table has the same field name from both original tables, fillna fails even if you specify a subset that does not include the 'ambiguous' fields.

```
scala> val df1 = Seq(("f1-1", "f2", null), ("f1-2", null, null), ("f1-3", "f2", "f3-1"), ("f1-4", "f2", "f3-1")).toDF("f1", "f2", "f3")
scala> val df2 = Seq(("f1-1", null, null), ("f1-2", "f2", null), ("f1-3", "f2", "f4-1")).toDF("f1", "f2", "f4")
scala> val df_join = df1.alias("df1").join(df2.alias("df2"), Seq("f1"), joinType="left_outer")
scala> df_join.na.fill("", cols=Seq("f4"))
org.apache.spark.sql.AnalysisException: Reference 'f2' is ambiguous, could be: df1.f2, df2.f2.;
```

### Does this PR introduce any user-facing change?

Yes, fillna operation will pass and give the right answer for a joined table.

### How was this patch tested?

Local test and newly added UT.

Closes #25768 from xuanyuanking/SPARK-29063.

Lead-authored-by: Yuanjian Li <[email protected]>

Co-authored-by: Xiao Li <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request? This PR adds the Java 11 version to the documentation. ### Why are the changes needed? Apache Spark 3.0.0 starts to support JDK11 officially. ### Does this PR introduce any user-facing change? Yes. ### How was this patch tested? Manually. Doc generation. Closes #25875 from dongjoon-hyun/SPARK-29196. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

…gative threshold ### What changes were proposed in this pull request? If `threshold<0`, convert implicit 0 to 1, although this will break sparsity. ### Why are the changes needed? If `threshold<0`, the current implementation handles sparse vectors incorrectly. See JIRA [SPARK-29144](https://issues.apache.org/jira/browse/SPARK-29144) and [Scikit-Learn's Binarizer](https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.Binarizer.html) ('Threshold may not be less than 0 for operations on sparse matrices.') for details. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Added a test suite. Closes #25829 from zhengruifeng/binarizer_throw_exception_sparse_vector. Authored-by: zhengruifeng <[email protected]> Signed-off-by: Sean Owen <[email protected]>
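
Why a negative threshold breaks sparsity can be seen in a small plain-Python sketch. It assumes the usual Binarizer rule (values strictly greater than the threshold become 1.0, all others 0.0); `binarize_sparse` and the `{index: value}` representation are hypothetical and are not the Spark ML API.

```python
def binarize_sparse(size, entries, threshold):
    """Binarize a sparse vector given as {index: value}; returns a dense list."""
    if threshold >= 0:
        # Implicit zeros stay 0.0, so only the stored entries need checking.
        out = [0.0] * size
        for i, v in entries.items():
            if v > threshold:
                out[i] = 1.0
        return out
    # threshold < 0: every implicit zero is greater than the threshold and becomes 1.0.
    out = [1.0] * size
    for i, v in entries.items():
        if v <= threshold:
            out[i] = 0.0
    return out

negative = binarize_sparse(4, {1: -2.0, 3: 5.0}, threshold=-1.0)
non_negative = binarize_sparse(4, {1: -2.0, 3: 5.0}, threshold=0.0)
```

With `threshold=-1.0` the two implicit zeros at indices 0 and 2 turn into 1.0, so the result is mostly non-zero even though the input stored only two entries.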

…rately ### What changes were proposed in this pull request? This PR aims to extend the existing benchmarks to save JDK9+ result separately. All `core` module benchmark test results are added. I'll run the other test suites in another PR. After regenerating all results, we will check JDK11 performance regressions. ### Why are the changes needed? From Apache Spark 3.0, we support both JDK8 and JDK11. We need to have a way to find the performance regression. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Manually run the benchmark. Closes #25873 from dongjoon-hyun/SPARK-JDK11-PERF. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request?

In this PR, I propose to change the behavior of the `date_part()` function when the field is `null`, and make it the same as in PostgreSQL. If the `field` parameter is `null`, the function should return `null` of the `double` type, as PostgreSQL does:

```sql

# select date_part(null, date '2019-09-20');

date_part

-----------

(1 row)

# select pg_typeof(date_part(null, date '2019-09-20'));

pg_typeof

------------------

double precision

(1 row)

```

### Why are the changes needed?

The `date_part()` function was added to maintain feature parity with PostgreSQL, but its current behavior differs when `field` is null.

### Does this PR introduce any user-facing change?

Yes.

Before:

```sql

spark-sql> select date_part(null, date'2019-09-20');

Error in query: null; line 1 pos 7

```

After:

```sql

spark-sql> select date_part(null, date'2019-09-20');

NULL

```

### How was this patch tested?

Added new tests to `DateFunctionsSuite` for 2 cases:

- `field` = `null`, `source` = a date literal

- `field` = `null`, `source` = a date column

Closes #25865 from MaxGekk/date_part-null.

Authored-by: Maxim Gekk <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request? Update breeze dependency to 1.0. ### Why are the changes needed? Breeze 1.0 supports Scala 2.13 and has a few bug fixes. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Existing tests. Closes #25874 from srowen/SPARK-28772. Authored-by: Sean Owen <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…rly in splitAggregateExpressions ### What changes were proposed in this pull request? This patch fixes the issue brought by [SPARK-21870](http://issues.apache.org/jira/browse/SPARK-21870): when generating code for a parameter type, it doesn't consider array types in javaType. There is at least one such case: Spark should generate code for BinaryType as `byte[]`, but it emits `[B` instead, and the generated code fails compilation. Below is the generated code which failed compilation (Line 380): ``` /* 380 */ private void agg_doAggregate_count_0([B agg_expr_1_1, boolean agg_exprIsNull_1_1, org.apache.spark.sql.catalyst.InternalRow agg_unsafeRowAggBuffer_1) throws java.io.IOException { /* 381 */ // evaluate aggregate function for count /* 382 */ boolean agg_isNull_26 = false; /* 383 */ long agg_value_28 = -1L; /* 384 */ if (!false && agg_exprIsNull_1_1) { /* 385 */ long agg_value_31 = agg_unsafeRowAggBuffer_1.getLong(1); /* 386 */ agg_isNull_26 = false; /* 387 */ agg_value_28 = agg_value_31; /* 388 */ } else { /* 389 */ long agg_value_33 = agg_unsafeRowAggBuffer_1.getLong(1); /* 390 */ /* 391 */ long agg_value_32 = -1L; /* 392 */ /* 393 */ agg_value_32 = agg_value_33 + 1L; /* 394 */ agg_isNull_26 = false; /* 395 */ agg_value_28 = agg_value_32; /* 396 */ } /* 397 */ // update unsafe row buffer /* 398 */ agg_unsafeRowAggBuffer_1.setLong(1, agg_value_28); /* 399 */ } ``` There wasn't any test for HashAggregateExec specifically covering this, but the randomized test in ObjectHashAggregateSuite could encounter it, and that's why ObjectHashAggregateSuite is flaky. ### Why are the changes needed? Without the fix, generated code from HashAggregateExec may fail compilation. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Added a new UT. Without the fix, the newly added UT fails. Closes #25830 from HeartSaVioR/SPARK-29140. Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]> Signed-off-by: Takeshi Yamamuro <[email protected]>

… stopped ### What changes were proposed in this pull request? `TransportClientFactory.createClient()` is called by tasks and `TransportClientFactory.close()` is called by the executor. When the executor stops, `close()` sets `workerGroup = null`, so an NPE occurs in `createClient`, which generates many exceptions in the log. An exception that occurs after `close()` is now treated as expected and transformed into an `InterruptedException`, which the Executor can process. ### Why are the changes needed? The change reduces exception stack traces in the log file, so users won't be confused by these expected exceptions. ### Does this PR introduce any user-facing change? N/A ### How was this patch tested? New tests are added in TransportClientFactorySuite and ExecutorSuite Closes #25759 from colinmjj/spark-19147. Authored-by: colinma <[email protected]> Signed-off-by: Sean Owen <[email protected]>

### What changes were proposed in this pull request? Set a custom sort key for the duration and execution time columns. ### Why are the changes needed? Sorting on the duration and execution time columns treats time as a string after it has been converted to a readable form, which causes the wrong sort results described in [SPARK-29053](https://issues.apache.org/jira/browse/SPARK-29053). ### Does this PR introduce any user-facing change? No ### How was this patch tested? Tested manually. Screenshots of the **Duration** and **Execution time** columns after the patch were attached. Closes #25855 from amanomer/SPARK29053. Authored-by: aman_omer <[email protected]> Signed-off-by: Sean Owen <[email protected]>
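
The sorting problem is easy to reproduce outside the UI. In the Python sketch below, the row format with a `label` and a `millis` field is an assumption made for illustration, not the structure used by the Spark UI tables. Sorting by the readable label compares characters, while sorting by the raw millisecond value gives the expected order.

```python
# Each row keeps the human-readable label and the underlying value in milliseconds.
rows = [
    {"label": "2 s", "millis": 2_000},
    {"label": "10 s", "millis": 10_000},
    {"label": "1.5 min", "millis": 90_000},
]

# String sort: "1.5 min" < "10 s" < "2 s", which is not chronological.
by_label = [r["label"] for r in sorted(rows, key=lambda r: r["label"])]

# Custom sort key: order by the numeric duration instead of the rendered text.
by_millis = [r["label"] for r in sorted(rows, key=lambda r: r["millis"])]
```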

…g file ### What changes were proposed in this pull request? Credit to vanzin as he found and commented on this while reviewing #25670 - [comment](#25670 (comment)). This patch proposes to specify UTF-8 explicitly while reading/writing event log files. ### Why are the changes needed? The event log file is read and written with the default character set of the JVM process, which may cause problems when reading event log files from other machines. Spark's de facto standard character set is UTF-8, so it should be set explicitly. ### Does this PR introduce any user-facing change? Yes, if end users have been running Spark processes, especially their driver JVM processes, with a default charset other than "UTF-8". No otherwise. ### How was this patch tested? Existing UTs, as ReplayListenerSuite contains "end-to-end" event logging/reading tests (both uncompressed/compressed). Closes #25845 from HeartSaVioR/SPARK-29160. Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>
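
The same principle applies in any language: name the charset on both the write and the read side instead of relying on the process default. A minimal Python sketch follows; the helper names and the sample event line are hypothetical and unrelated to Spark's actual event log format.

```python
import os
import tempfile

def write_event_log(path, lines):
    # The encoding is stated explicitly, so the platform default is never used.
    with open(path, "w", encoding="utf-8") as f:
        for line in lines:
            f.write(line + "\n")

def read_event_log(path):
    with open(path, "r", encoding="utf-8") as f:
        return [line.rstrip("\n") for line in f]

events = ['{"Event": "example", "note": "中"}']  # made-up event line
with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "eventlog")
    write_event_log(log_path, events)
    round_tripped = read_event_log(log_path)
```

Because both sides agree on UTF-8, the file reads back identically on a machine whose default charset differs.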

…itHub Action ### What changes were proposed in this pull request? This PR aims to add linters and license/dependency checkers to GitHub Action. This excludes `lint-r` intentionally because https://github.com/actions/setup-r is not ready. We can add that later when it becomes available. ### Why are the changes needed? This will help the PR reviews. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? See the GitHub Action result on this PR. Closes #25879 from dongjoon-hyun/SPARK-29199. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request? Support for dot product with: - `ml.linalg.Vector` - `ml.linalg.Vectors` - `mllib.linalg.Vector` - `mllib.linalg.Vectors` ### Why are the changes needed? Dot product is useful for feature engineering and scoring. BLAS routines are already there, just a wrapper is needed. ### Does this PR introduce any user-facing change? No user facing changes, just some new functionality. ### How was this patch tested? Tests were written and added to the appropriate `VectorSuites` classes. They can be quickly run with: ``` sbt "mllib-local/testOnly org.apache.spark.ml.linalg.VectorsSuite" sbt "mllib/testOnly org.apache.spark.mllib.linalg.VectorsSuite" ``` Closes #25818 from phpisciuneri/SPARK-29121. Authored-by: Patrick Pisciuneri <[email protected]> Signed-off-by: Sean Owen <[email protected]>
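
For readers unfamiliar with the operation, here is a plain-Python sketch of a dot product for dense and sparse inputs. The functions and the `(size, indices, values)` sparse layout are assumptions for illustration; they are not the `ml.linalg` API added by this commit, which wraps the existing BLAS routines.

```python
def dot_dense(x, y):
    """Dot product of two dense vectors given as lists of floats."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same size")
    return sum(a * b for a, b in zip(x, y))

def dot_sparse_dense(size, indices, values, y):
    """Dot product of a sparse vector (size, indices, values) with a dense list."""
    if size != len(y):
        raise ValueError("vectors must have the same size")
    # Only the stored entries contribute; implicit zeros are skipped.
    return sum(v * y[i] for i, v in zip(indices, values))

dense_result = dot_dense([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
sparse_result = dot_sparse_dense(3, [0, 2], [1.0, 3.0], [4.0, 5.0, 6.0])
```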

…s `field` ### What changes were proposed in this pull request? Changed the `DateTimeUtils.getMilliseconds()` by avoiding the decimal division, and replacing it by setting scale and precision while converting microseconds to the decimal type. ### Why are the changes needed? This improves performance of `extract` and `date_part()` by more than **50 times**: Before: ``` Invoke extract for timestamp: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative Invoke extract for timestamp: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative ------------------------------------------------------------------------------------------------------------------------ cast to timestamp 397 428 45 25.2 39.7 1.0X MILLISECONDS of timestamp 36723 36761 63 0.3 3672.3 0.0X ``` After: ``` Invoke extract for timestamp: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative ------------------------------------------------------------------------------------------------------------------------ cast to timestamp 278 284 6 36.0 27.8 1.0X MILLISECONDS of timestamp 592 606 13 16.9 59.2 0.5X ``` ### Does this PR introduce any user-facing change? No ### How was this patch tested? By existing test suite - `DateExpressionsSuite` Closes #25871 from MaxGekk/optimize-epoch-millis. Lead-authored-by: Maxim Gekk <[email protected]> Co-authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>
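
The difference between dividing a decimal and merely setting its scale can be sketched with Python's `decimal` module. This is an analogy rather than the Scala change itself: the function names are hypothetical, and the sketch assumes the result is the microseconds within the current minute expressed in milliseconds with three fractional digits.

```python
from decimal import Decimal

MICROS_PER_MINUTE = 60 * 1_000_000

def milliseconds_by_division(micros):
    # Arbitrary-precision division on every call.
    return Decimal(micros % MICROS_PER_MINUTE) / Decimal(1000)

def milliseconds_by_scale(micros):
    # Same value, obtained by shifting the decimal exponent by three digits.
    return Decimal(micros % MICROS_PER_MINUTE).scaleb(-3)

by_division = milliseconds_by_division(12_345_678)
by_scale = milliseconds_by_scale(12_345_678)
```

Both functions return the same number; the second only reinterprets the integer with a different scale, which is the kind of operation the commit substitutes for the division.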

### What changes were proposed in this pull request? Refactoring of the `DateTimeUtils.getEpoch()` function by avoiding decimal operations that are pretty expensive, and converting the final result to the decimal type at the end. ### Why are the changes needed? The changes improve performance of the `getEpoch()` method by about **20 times**. Before: ``` Invoke extract for timestamp: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative ------------------------------------------------------------------------------------------------------------------------ cast to timestamp 256 277 33 39.0 25.6 1.0X EPOCH of timestamp 23455 23550 131 0.4 2345.5 0.0X ``` After: ``` Invoke extract for timestamp: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative ------------------------------------------------------------------------------------------------------------------------ cast to timestamp 255 294 34 39.2 25.5 1.0X EPOCH of timestamp 1049 1054 9 9.5 104.9 0.2X ``` ### Does this PR introduce any user-facing change? No ### How was this patch tested? By the existing tests in `DateExpressionsSuite`. Closes #25881 from MaxGekk/optimize-extract-epoch. Authored-by: Maxim Gekk <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request? Correct a word in a log message. ### Why are the changes needed? The log message will be clearer. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? No test is needed. Closes #25880 from mdianjun/fix-a-word. Authored-by: madianjun <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request? Supported special string values for `DATE` type. They are simply notational shorthands that will be converted to ordinary date values when read. The following string values are supported: - `epoch [zoneId]` - `1970-01-01` - `today [zoneId]` - the current date in the time zone specified by `spark.sql.session.timeZone`. - `yesterday [zoneId]` - the current date -1 - `tomorrow [zoneId]` - the current date + 1 - `now` - the date of running the current query. It has the same notion as `today`. For example: ```sql spark-sql> SELECT date 'tomorrow' - date 'yesterday'; 2 ``` ### Why are the changes needed? To maintain feature parity with PostgreSQL, see [8.5.1.4. Special Values](https://www.postgresql.org/docs/12/datatype-datetime.html) ### Does this PR introduce any user-facing change? Previously, the parser fails on the special values with the error: ```sql spark-sql> select date 'today'; Error in query: Cannot parse the DATE value: today(line 1, pos 7) ``` After the changes, the special values are converted to appropriate dates: ```sql spark-sql> select date 'today'; 2019-09-06 ``` ### How was this patch tested? - Added tests to `DateFormatterSuite` to check parsing special values from regular strings. - Tests in `DateTimeUtilsSuite` check parsing those values from `UTF8String` - Uncommented tests in `date.sql` Closes #25708 from MaxGekk/datetime-special-values. Authored-by: Maxim Gekk <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>
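
The mapping from special strings to dates can be sketched in a few lines of Python. This is a simplified illustration, not Spark's parser: `parse_special_date` is a hypothetical name, the optional `[zoneId]` suffix is ignored, the current date is passed in explicitly, and case-insensitive matching is an assumption.

```python
from datetime import date, timedelta

def parse_special_date(text, today):
    """Return the date for a special value, or None if `text` is not one."""
    offsets = {"today": 0, "now": 0, "yesterday": -1, "tomorrow": 1}
    key = text.strip().lower()
    if key == "epoch":
        return date(1970, 1, 1)
    if key in offsets:
        return today + timedelta(days=offsets[key])
    return None  # not a special value; a regular date parser would take over

today = date(2019, 9, 6)
diff_days = (parse_special_date("tomorrow", today) - parse_special_date("yesterday", today)).days
```

`diff_days` is 2, matching the `date 'tomorrow' - date 'yesterday'` query above.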

### What changes were proposed in this pull request? This is a followup for #24981. It seems we mistakenly didn't add `test_pandas_udf_cogrouped_map` to `modules.py`, so we don't have official test results for that PR. ``` ... Starting test(python3.6): pyspark.sql.tests.test_pandas_udf ... Starting test(python3.6): pyspark.sql.tests.test_pandas_udf_grouped_agg ... Starting test(python3.6): pyspark.sql.tests.test_pandas_udf_grouped_map ... Starting test(python3.6): pyspark.sql.tests.test_pandas_udf_scalar ... Starting test(python3.6): pyspark.sql.tests.test_pandas_udf_window Finished test(python3.6): pyspark.sql.tests.test_pandas_udf (21s) ... Finished test(python3.6): pyspark.sql.tests.test_pandas_udf_grouped_map (49s) ... Finished test(python3.6): pyspark.sql.tests.test_pandas_udf_window (58s) ... Finished test(python3.6): pyspark.sql.tests.test_pandas_udf_scalar (82s) ... Finished test(python3.6): pyspark.sql.tests.test_pandas_udf_grouped_agg (105s) ... ``` If tests fail, we should revert that PR. ### Why are the changes needed? Relevant tests should be run. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Jenkins tests. Closes #25890 from HyukjinKwon/SPARK-28840. Authored-by: HyukjinKwon <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request? Rewrite ``` NOT isnull(x) -> isnotnull(x) NOT isnotnull(x) -> isnull(x) ``` ### Why are the changes needed? This makes the LogicalPlan more readable and more useful for query canonicalization: equivalent conditions become equal when canonicalized queries are compared. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Newly added UTs. Closes #25878 from AngersZhuuuu/SPARK-29162. Authored-by: angerszhu <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>
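
The two rewrite rules can be illustrated on a toy expression tree. The sketch below is in Python and uses nested tuples such as `("not", ("isnull", "x"))` as a stand-in for Catalyst expressions; `simplify_not_null` is a hypothetical name and this is not the optimizer rule added by the commit.

```python
def simplify_not_null(expr):
    """Rewrite NOT isnull(x) -> isnotnull(x) and NOT isnotnull(x) -> isnull(x), bottom-up."""
    if not isinstance(expr, tuple):
        return expr  # leaf: a column name or a literal
    op, *args = expr
    args = [simplify_not_null(a) for a in args]
    if op == "not" and isinstance(args[0], tuple):
        inner_op, *inner_args = args[0]
        if inner_op == "isnull":
            return ("isnotnull", *inner_args)
        if inner_op == "isnotnull":
            return ("isnull", *inner_args)
    return (op, *args)

simple = simplify_not_null(("not", ("isnull", "x")))
nested = simplify_not_null(("and", ("not", ("isnotnull", "a")), ("gt", "b", 1)))
```

After the rewrite, `NOT isnull(x)` and `isnotnull(x)` are represented by the same tree, which is what makes the two conditions compare as equal.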

### What changes were proposed in this pull request? This PR aims to add tag `ExtendedSQLTest` for `SQLQueryTestSuite`. This doesn't affect our Jenkins test coverage. Instead, this tag gives us an ability to parallelize them by splitting this test suite and the other suites. ### Why are the changes needed? `SQLQueryTestSuite` takes 45 mins alone because it has many SQL scripts to run. <img width="906" alt="time" src="https://user-images.githubusercontent.com/9700541/65353553-4af0f100-dba2-11e9-9f2f-386742d28f92.png"> ### Does this PR introduce any user-facing change? No. ### How was this patch tested? ``` build/sbt "sql/test-only *.SQLQueryTestSuite" -Dtest.exclude.tags=org.apache.spark.tags.ExtendedSQLTest ... [info] SQLQueryTestSuite: [info] ScalaTest [info] Run completed in 3 seconds, 147 milliseconds. [info] Total number of tests run: 0 [info] Suites: completed 1, aborted 0 [info] Tests: succeeded 0, failed 0, canceled 0, ignored 0, pending 0 [info] No tests were executed. [info] Passed: Total 0, Failed 0, Errors 0, Passed 0 [success] Total time: 22 s, completed Sep 20, 2019 12:23:13 PM ``` Closes #25872 from dongjoon-hyun/SPARK-29191. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

… for ThriftServerSessionPage ### What changes were proposed in this pull request? This PR adds support for sorting the `Execution Time` and `Duration` columns in `ThriftServerSessionPage`. ### Why are the changes needed? Previously, they were not sorted correctly. ### Does this PR introduce any user-facing change? Yes. ### How was this patch tested? Manually do the following and test sorting on those columns in the Spark Thrift Server Session Page. ``` $ sbin/start-thriftserver.sh $ bin/beeline -u jdbc:hive2://localhost:10000 0: jdbc:hive2://localhost:10000> create table t(a int); +---------+--+ | Result | +---------+--+ +---------+--+ No rows selected (0.521 seconds) 0: jdbc:hive2://localhost:10000> select * from t; +----+--+ | a | +----+--+ +----+--+ No rows selected (0.772 seconds) 0: jdbc:hive2://localhost:10000> show databases; +---------------+--+ | databaseName | +---------------+--+ | default | +---------------+--+ 1 row selected (0.249 seconds) ``` Screenshots of the page sorted by the `Execution Time` column and by the `Duration` column were attached. Closes #25892 from wangyum/SPARK-28599. Authored-by: Yuming Wang <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…yTest ### What changes were proposed in this pull request? This pr proposes to check method bytecode size in `BenchmarkQueryTest`. This metric is critical for performance numbers. ### Why are the changes needed? For performance checks ### Does this PR introduce any user-facing change? No ### How was this patch tested? N/A Closes #25788 from maropu/CheckMethodSize. Authored-by: Takeshi Yamamuro <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

cozos pushed a commit that referenced this pull request Oct 26, 2019

### What changes were proposed in this pull request?

`org.apache.spark.sql.kafka010.KafkaDelegationTokenSuite` has been failing lately. The logs show only the following, without any details:

```
Caused by: sbt.ForkMain$ForkError: sun.security.krb5.KrbException: Server not found in Kerberos database (7) - Server not found in Kerberos database
```

Since the issue is intermittent and we have not been able to reproduce it, we should add more debug information and wait for it to recur with the extended logs.

### Why are the changes needed?

The failing test doesn't give enough debug information.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

I started the test manually and checked that additional debug messages such as the following show up:

```
>>> KrbApReq: APOptions are 00000000 00000000 00000000 00000000
>>> EType: sun.security.krb5.internal.crypto.Aes128CtsHmacSha1EType
Looking for keys for: kafka/localhostEXAMPLE.COM
Added key: 17version: 0
Added key: 23version: 0
Added key: 16version: 0
Found unsupported keytype (3) for kafka/localhostEXAMPLE.COM
>>> EType: sun.security.krb5.internal.crypto.Aes128CtsHmacSha1EType
Using builtin default etypes for permitted_enctypes
default etypes for permitted_enctypes: 17 16 23.
>>> EType: sun.security.krb5.internal.crypto.Aes128CtsHmacSha1EType
MemoryCache: add 1571936500/174770/16C565221B70AAB2BEFE31A83D13A2F4/client/localhostEXAMPLE.COM to client/localhostEXAMPLE.COM|kafka/localhostEXAMPLE.COM
MemoryCache: Existing AuthList:
#3: 1571936493/200803/8CD70D280B0862C5DA1FF901ECAD39FE/client/localhostEXAMPLE.COM
#2: 1571936499/985009/BAD33290D079DD4E3579A8686EC326B7/client/localhostEXAMPLE.COM
#1: 1571936499/995208/B76B9D78A9BE283AC78340157107FD40/client/localhostEXAMPLE.COM
```

Closes apache#26252 from gaborgsomogyi/SPARK-29580.

Authored-by: Gabor Somogyi <[email protected]>
Signed-off-by: Dongjoon Hyun <[email protected]>
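For readers unfamiliar with this kind of output: `>>> KrbApReq` and `>>> EType` lines like those in the SPARK-29580 message resemble what the JDK's Kerberos implementation prints when the `sun.security.krb5.debug` system property is set to `true`. Whether the patch enables the logging exactly this way is an assumption; the message does not say. A minimal sketch, where the class and method names are hypothetical and not part of Spark:

```java
// Hypothetical helper, not taken from the Spark code base.
class KrbDebugSketch {

    // Turns on the JDK's built-in Kerberos debug output.
    // Assumption: this runs before any JDK Kerberos class is first used,
    // because the JDK typically reads the property once and caches it.
    static void enableKerberosDebug() {
        System.setProperty("sun.security.krb5.debug", "true");
    }

    public static void main(String[] args) {
        enableKerberosDebug();
        // Show the value the JVM now holds for the property.
        System.out.println(System.getProperty("sun.security.krb5.debug"));
    }
}
```

The same property can also be passed on the command line as `-Dsun.security.krb5.debug=true`, which avoids any ordering concerns inside the test code.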
