[SPARK-29052][DOCS][ML][PYTHON][CORE][R][SQL][SS] Create a Migration Guide tap in Spark documentation #25757

| - In Spark 3.0, the deprecated methods `shuffleBytesWritten`, `shuffleWriteTime` and `shuffleRecordsWritten` in `ShuffleWriteMetrics` have been removed. Instead, use `bytesWritten`, `writeTime` and `recordsWritten` respectively. | ||
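For code that still calls the removed accessors, the renames in this bullet can be applied mechanically. Below is a minimal Python sketch of such a rewrite. The rename table is taken from the bullet; `rewrite_metric_names` is a hypothetical helper and not part of Spark, and a plain text substitution like this should still be reviewed by hand.

```python
import re

# Old accessor -> replacement, as listed in the migration note.
RENAMES = {
    "shuffleBytesWritten": "bytesWritten",
    "shuffleWriteTime": "writeTime",
    "shuffleRecordsWritten": "recordsWritten",
}

# Match whole identifiers only, so longer names that merely
# contain an old name are left untouched.
_PATTERN = re.compile(r"\b(" + "|".join(map(re.escape, RENAMES)) + r")\b")


def rewrite_metric_names(source):
    """Return `source` with the removed accessor names replaced (hypothetical helper)."""
    return _PATTERN.sub(lambda m: RENAMES[m.group(1)], source)
```

Running it over a source file's text and diffing the result is one way to find call sites that need attention before compiling against 3.0.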

| - In Spark 3.0, the deprecated method `AccumulableInfo.apply` has been removed because creating `AccumulableInfo` is disallowed. | ||

There's probably more we can or should add, given the number of deprecations and removals in 3.0. Is your theory that this should stick to changes that require user code to change, and ones that aren't obvious? Like, a missing method is obvious. A behavior change may not be.

Yea, I just quickly skimmed so that I can leave this page non empty. For migration guide, I think we should mention something that's not a corner case and when both previous and new behaviours make sense. My thought was that basically bug fixes or minor behaviour changes shouldn't come here

d468715 to

922a33e

Compare

| ## Upgrading from MLlib 2.4 to 3.0 | ||

| ### Breaking changes | ||

| {:.no_toc} |

| ## Upgrading from Structured Streaming 2.4 to 3.0 | ||

| - In Spark 3.0, Structured Streaming forces the source schema to be nullable when file-based data sources such as text, json, csv, parquet and orc are used via `spark.readStream(...)`. Previously, it respected the nullability in the source schema; however, that caused NPEs that were tricky to debug. To restore the previous behavior, set `spark.sql.streaming.fileSource.schema.forceNullable` to `false`. |

I skimmed and found one SS change to note in migration guide while I am adding this SS migration guide section cc @zsxwing
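As an illustration of the opt-out described in that bullet, here is a minimal Python sketch. It assumes an already created `SparkSession` is passed in and relies only on `spark.conf.set`; the helper name `restore_source_nullability` is hypothetical, and whether the setting must be applied before the stream is defined is not covered by this PR.

```python
# Configuration key quoted from the migration note.
FORCE_NULLABLE_KEY = "spark.sql.streaming.fileSource.schema.forceNullable"


def restore_source_nullability(spark):
    """Ask Structured Streaming to keep the nullability of the user-supplied schema.

    `spark` is expected to be a SparkSession (anything exposing `conf.set`).
    Hypothetical helper for illustration only.
    """
    spark.conf.set(FORCE_NULLABLE_KEY, "false")
    return spark
```

With PySpark installed, this could be called as `restore_source_nullability(SparkSession.builder.getOrCreate())` before building the streaming query.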

922a33e to

7a8d639

Compare

Test build #110475 has finished for PR 25757 at commit

For me, this looks like a good start. We can add more.

BTW, which version of Jekyll do you use, @HyukjinKwon ?

In the master branch (and this PR), I saw misaligned tabs when I use Jekyll 4.0.

Ur, it seems to be not a Jekyll issue. When I use BTW, this is irrelevant to this PR~

| - Since Spark 3.0, PySpark requires a Pandas version of 0.23.2 or higher to use Pandas related functionality, such as `toPandas`, `createDataFrame` from Pandas DataFrame, etc. | ||

| - Since Spark 3.0, PySpark requires a PyArrow version of 0.12.1 or higher to use PyArrow related functionality, such as `pandas_udf`, `toPandas` and `createDataFrame` with "spark.sql.execution.arrow.enabled=true", etc. |
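To make the two minimum versions concrete, the following Python sketch compares a version string against them. The minimums are the ones stated in the bullets (Pandas 0.23.2, PyArrow 0.12.1); `version_tuple` and `meets_minimum` are hypothetical helpers and are not how PySpark itself performs the check.

```python
import re

# Minimum versions quoted from the migration notes.
MIN_PANDAS = "0.23.2"
MIN_PYARROW = "0.12.1"


def version_tuple(version):
    """Turn '0.23.2' into (0, 23, 2), stopping at the first non-numeric piece."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Return True when `installed` is at least `minimum` (hypothetical helper)."""
    return version_tuple(installed) >= version_tuple(minimum)
```

In an environment with both libraries installed, the inputs would be `pandas.__version__` and `pyarrow.__version__`.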

"spark.sql.execution.arrow.enabled=true" => `spark.sql.execution.arrow.enabled`=true or `spark.sql.execution.arrow.enabled` set to `True`?

| access nested values. For example `df['table.column.nestedField']`. However, this means that if | ||

| your column name contains any dots you must now escape them using backticks (e.g., ``table.`column.with.dots`.nested``). | ||
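The escaping rule in this excerpt can be sketched as a small Python helper that builds a nested column path and backtick-quotes any part containing a dot. `quote_part` and `nested_path` are hypothetical names, and doubling an embedded backtick is an assumption based on how Spark SQL quotes identifiers; neither is taken from this PR.

```python
def quote_part(name):
    """Wrap one name in backticks when it contains a dot or a backtick.

    Embedded backticks are doubled (assumed Spark SQL identifier quoting).
    """
    if "." in name or "`" in name:
        return "`" + name.replace("`", "``") + "`"
    return name


def nested_path(*parts):
    """Join already-separated path parts with dots, quoting where needed."""
    return ".".join(quote_part(part) for part in parts)
```

For example, `nested_path("table", "column.with.dots", "nested")` yields the same string as the escaped example in the excerpt, which can then be used as `df[...]`.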

| - DataFrame.withColumn method in PySpark supports adding a new column or replacing existing columns of the same name. |

`DataFrame.withColumn`

| - text: SQL Reserved/Non-Reserved Keywords | ||

| url: sql-reserved-and-non-reserved-keywords.html | ||

| url: sql-migration-old.html |

Do we need to keep these old links?

Thanks for doing this. It looks good.

Merged to master.

Thanks @srowen @dongjoon-hyun @viirya. I was on vacation so the reaction was late.

What changes were proposed in this pull request?

Currently, there is no migration section for PySpark, SparkCore and Structured Streaming.

It is difficult for users to know what to do when they upgrade.

This PR proposes to create a "Migration Guide" tab in the Spark documentation.

This page will contain migration guides for Spark SQL, PySpark, SparkR, MLlib, Structured Streaming and Core. Basically it is a refactoring.

Some new information has been added as well; I will leave inline comments on it for easier review.

MLlib

Merge ml-guide.html#migration-guide and ml-migration-guides.html

PySpark

Extract PySpark specific items from https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html

SparkR

Move sparkr.html#migration-guide into a separate file, and extract from sql-migration-guide-upgrade.html

Core

Newly created at

'docs/core-migration-guide.md'. I skimmed resolved JIRAs at 3.0.0 and found some items to note.Structured Streaming

Newly created at

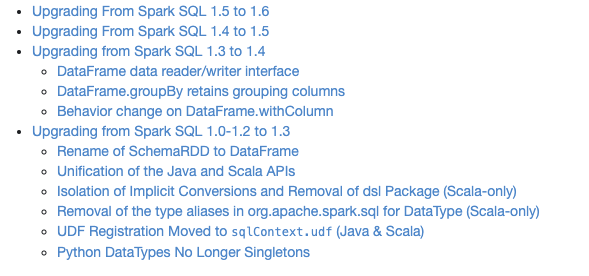

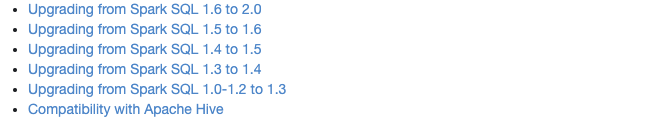

'docs/ss-migration-guide.md'. I skimmed resolved JIRAs at 3.0.0 and found some items to note.SQL

Merged sql-migration-guide-upgrade.html and sql-migration-guide-hive-compatibility.html

Why are the changes needed?

In order for users in production to effectively migrate to higher versions, and detect behaviour or breaking changes before upgrading and/or migrating.

Does this PR introduce any user-facing change?

Yes, this changes Spark's documentation at https://spark.apache.org/docs/latest/index.html.

How was this patch tested?

Manually build the doc. This can be verified as below:

    cd docs
    SKIP_API=1 jekyll build
    open _site/index.html