forked from apache/spark

Merge #3

…s are available

This PR is an enhanced version of #25805, so I've kept the original text. The problem with the original PR can be found in comment.

This situation can happen when an external system (e.g. Oozie) generates delegation tokens for a Spark application. The Spark driver will then run against secured services and have proper credentials (the tokens), but no Kerberos credentials, so trying to do anything that requires a Kerberos credential fails.

Instead, if no Kerberos credentials are detected, just skip the whole delegation token code.

Tested with an application that simulates Oozie; it fails before the fix and passes with the fix. Also tested with other DT-related tests to make sure other functionality keeps working.

Closes #25901 from gaborgsomogyi/SPARK-29082.

Authored-by: Gabor Somogyi <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request?

When I use `ProcfsMetricsGetterSuite` for testing, it always throws `java.lang.NullPointerException`. I think the problem is where `new ProcfsMetricsGetter` is located, which leads to `SparkEnv` not being initialized in time. This causes a `java.lang.NullPointerException` when the method is executed.

### Why are the changes needed?

For test.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Local testing

Closes #25918 from sev7e0/dev_0924.

Authored-by: sev7e0 <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…rabilities

### What changes were proposed in this pull request?

The current code uses com.fasterxml.jackson.core:jackson-databind:jar:2.9.9.3, which has known security vulnerabilities. Details are available at https://www.tenable.com/cve/CVE-2019-16335 and https://www.tenable.com/cve/CVE-2019-14540. These references recommend upgrading `jackson-databind` to 2.9.10 or later. This PR also upgrades the version of jackson to 2.9.10.

### Why are the changes needed?

This PR fixes the security vulnerabilities.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UT.

Closes #25912 from beliefer/upgrade-jackson.

Authored-by: gengjiaan <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…o hadoop conf as "hive.foo=bar"

### What changes were proposed in this pull request?

Copy any "spark.hive.foo=bar" Spark properties into the Hadoop conf as "hive.foo=bar".

### Why are the changes needed?

To provide a Spark-side config entry for Hive configurations.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

UT.

Closes #25661 from WeichenXu123/add_hive_conf.

Authored-by: WeichenXu <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
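The prefix rewrite described above can be sketched in plain Scala. This is only an illustration under assumptions: `HiveConfCopySketch` and `hiveEntries` are hypothetical names, and a `Map` stands in for both the Spark properties and the Hadoop configuration; it is not the code from the PR.

```scala
object HiveConfCopySketch {
  // Hypothetical helper: given Spark properties, return the entries that
  // would be copied into the Hadoop conf with the "spark." prefix removed.
  def hiveEntries(sparkProps: Map[String, String]): Map[String, String] =
    sparkProps.collect {
      // Only keys under "spark.hive." qualify; everything else is ignored.
      case (key, value) if key.startsWith("spark.hive.") =>
        key.stripPrefix("spark.") -> value
    }
}
```

With this sketch, `spark.hive.foo=bar` yields `hive.foo=bar`, while unrelated keys such as `spark.app.name` are left out.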

…log if the current catalog is session catalog

### What changes were proposed in this pull request?

When the current catalog is the session catalog, get/set the current namespace from/to the `SessionCatalog`.

### Why are the changes needed?

It's super confusing that we don't have a single source of truth for the current namespace of the session catalog. It can be in `CatalogManager` or `SessionCatalog`.

Ideally, we should always track the current catalog/namespace in `CatalogManager`. However, there are many commands that do not support the v2 catalog API. They ignore the current catalog in `CatalogManager` and blindly go to `SessionCatalog`. This means we must keep track of the current namespace of the session catalog even if the current catalog is not the session catalog. Thus, we can't use `CatalogManager` to track the current namespace of the session catalog, because it changes when the current catalog is changed. To keep a single source of truth, we should only track the current namespace of the session catalog in `SessionCatalog`.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Newly added and updated test cases.

Closes #25903 from cloud-fan/current.

Authored-by: Wenchen Fan <[email protected]>

Signed-off-by: Gengliang Wang <[email protected]>

…r removed on timeline view

### What changes were proposed in this pull request?

Changed the executor color settings in timeline-view.css.

### Why are the changes needed?

In the WebUI, the color of an executor changes to dark blue when you click it. The color might confuse users.

[ Before ]

[ After ]

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Manually tested.

Closes #25921 from TomokoKomiyama/fix-js-2.

Authored-by: Tomoko Komiyama <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…should be protected by lock

### What changes were proposed in this pull request?

Protected the `executorDataMap` under lock when accessing it outside of `DriverEndpoint`'s methods.

### Why are the changes needed?

Just as the comments say:

> // Accessing `executorDataMap` in `DriverEndpoint.receive/receiveAndReply` doesn't need any
> // protection. But accessing `executorDataMap` out of `DriverEndpoint.receive/receiveAndReply`
> // must be protected by `CoarseGrainedSchedulerBackend.this`. Besides, `executorDataMap` should
> // only be modified in `DriverEndpoint.receive/receiveAndReply` with protection by
> // `CoarseGrainedSchedulerBackend.this`.

`executorDataMap` is not thread-safe, so it should be protected by a lock when it is accessed outside of `DriverEndpoint`.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UT.

Closes #25922 from ConeyLiu/executorDataMap.

Authored-by: Xianyang Liu <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
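The locking rule quoted above can be illustrated with a toy class. This is a minimal sketch, not Spark's `CoarseGrainedSchedulerBackend`: `ToyBackend`, `register`, and `totalCores` are hypothetical names, and an `Int` core count stands in for the real executor data.

```scala
import scala.collection.mutable

final class ToyBackend {
  // A plain mutable map is not thread-safe on its own.
  private val executorDataMap = mutable.HashMap.empty[String, Int]

  // Writers modify the map while holding the backend's monitor.
  def register(executorId: String, cores: Int): Unit = this.synchronized {
    executorDataMap(executorId) = cores
  }

  // A reader running on another thread takes the same monitor before
  // touching the map, so it never observes a half-applied update.
  def totalCores: Int = this.synchronized {
    executorDataMap.values.sum
  }
}
```

The point of the sketch is that every access path outside the single-threaded endpoint goes through the same lock object.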

…e" to not rely on timing

### What changes were proposed in this pull request?

This patch changes ReceiverSuite."receiver_life_cycle" to record the actual calls, with timestamps, in FakeReceiver/FakeReceiverSupervisor, so the test no longer relies on the timing of stopping and starting the receiver during a restart. This lets us set a sufficiently large timeout on the restart verification, since we can verify both stopping and starting together.

### Why are the changes needed?

The test is flaky without this patch. We increased the timeout to fix the flakiness of this test (15adcc8), but even with the longer timeout it still fails intermittently.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

I've reproduced the test failure artificially via the diff below:

```
diff --git a/streaming/src/main/scala/org/apache/spark/streaming/receiver/ReceiverSupervisor.scala b/streaming/src/main/scala/org/apache/spark/streaming/receiver/ReceiverSupervisor.scala
index faf6db8..d8977543c0 100644
--- a/streaming/src/main/scala/org/apache/spark/streaming/receiver/ReceiverSupervisor.scala
+++ b/streaming/src/main/scala/org/apache/spark/streaming/receiver/ReceiverSupervisor.scala
@@ -191,9 +191,11 @@ private[streaming] abstract class ReceiverSupervisor(
       // thread pool.
       logWarning("Restarting receiver with delay " + delay + " ms: " + message,
         error.getOrElse(null))
+      Thread.sleep(1000)
       stopReceiver("Restarting receiver with delay " + delay + "ms: " + message, error)
       logDebug("Sleeping for " + delay)
       Thread.sleep(delay)
+      Thread.sleep(1000)
       logInfo("Starting receiver again")
       startReceiver()
       logInfo("Receiver started again")
```

and confirmed that the test does not fail with this patch applied on top of that change.

Closes #25862 from HeartSaVioR/SPARK-23197-v2.

Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…template

### What changes were proposed in this pull request?

In the PR, I propose to replace function names in some expression examples with `_FUNC_`, and to add a test that checks that `_FUNC_` is always present in all examples.

### Why are the changes needed?

Binding of a function name to an expression is performed in `FunctionRegistry`, which is the single source of truth. Expression examples should avoid using the function name directly because this can make the examples invalid in the future.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added a new test to `SQLQuerySuite` which analyses expression examples and checks for the presence of `_FUNC_`.

Closes #25924 from MaxGekk/fix-func-examples.

Authored-by: Maxim Gekk <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>
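The idea behind the `_FUNC_` placeholder can be sketched as follows. This is an illustration only: `FuncPlaceholderSketch`, `render`, and `usesPlaceholder` are hypothetical helpers, not the test added to `SQLQuerySuite` or Spark's own substitution code.

```scala
object FuncPlaceholderSketch {
  val Placeholder = "_FUNC_"

  // Substitute the name under which the expression is registered, so the
  // example text stays valid even if the function is registered differently.
  def render(example: String, registeredName: String): String =
    example.replace(Placeholder, registeredName)

  // The kind of check a test could apply to every example string.
  def usesPlaceholder(example: String): Boolean =
    example.contains(Placeholder)
}
```

An example written as `> SELECT _FUNC_('Spark');` passes the check and renders with whatever name is registered, whereas one that hard-codes the name fails the check.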

…tables

### What changes were proposed in this pull request?

Don't allow calling append, overwrite, or overwritePartitions after tableProperty is used in DataFrameWriterV2 because table properties are not set as part of operations on existing tables. Only tables that are created or replaced can set table properties.

### Why are the changes needed?

The properties are discarded otherwise, so this avoids confusing behavior.

### Does this PR introduce any user-facing change?

Yes, but to a new API, DataFrameWriterV2.

### How was this patch tested?

Removed test cases that used this method and the append, etc. methods because they no longer compile.

Closes #25931 from rdblue/fix-dfw-v2-table-properties.

Authored-by: Ryan Blue <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
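One way to get the "no longer compile" behavior described above is to have `tableProperty` return a narrower type that lacks the existing-table operations. The sketch below shows that pattern under assumptions: `WriterSketch`, `CreateWriter`, and `Writer` are hypothetical names, and the methods return plain values instead of executing anything.

```scala
object WriterSketch {
  // Operations that only make sense when creating or replacing a table.
  trait CreateWriter {
    def tableProperty(key: String, value: String): CreateWriter
    def create(): Map[String, String]
  }

  // The full writer additionally offers operations on existing tables.
  trait Writer extends CreateWriter {
    def append(): String
  }

  private final case class Impl(props: Map[String, String]) extends Writer {
    // Returning the narrower type hides `append` from the caller.
    def tableProperty(key: String, value: String): CreateWriter =
      copy(props = props + (key -> value))
    def create(): Map[String, String] = props
    def append(): String = "append"
  }

  def writer: Writer = Impl(Map.empty)
}
```

With this shape, `WriterSketch.writer.tableProperty("k", "v").append()` is rejected by the compiler, while `create()` after `tableProperty` and `append()` on a fresh writer both remain available.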

…iminating subexpression

### What changes were proposed in this pull request?

This patch proposes to skip PlanExpression when doing subexpression elimination on executors.

### Why are the changes needed?

Subexpression elimination can cause an NPE when it is applied on executors to an execution subquery expression such as ScalarSubquery. This is because PlanExpression wraps a query plan. Comparing query plans on an executor while eliminating subexpressions can cause unexpected errors, such as an NPE when accessing transient fields.

The NPE looks like:

```
[info] - SPARK-29239: Subquery should not cause NPE when eliminating subexpression *** FAILED *** (175 milliseconds)
[info] org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 1395.0 failed 1 times, most recent failure: Lost task 0.0 in stage 1395.0 (TID 3447, 10.0.0.196, executor driver): java.lang.NullPointerException
[info] at org.apache.spark.sql.execution.LocalTableScanExec.stringArgs(LocalTableScanExec.scala:62)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.argString(TreeNode.scala:506)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.simpleString(TreeNode.scala:534)
[info] at org.apache.spark.sql.catalyst.plans.QueryPlan.simpleString(QueryPlan.scala:179)
[info] at org.apache.spark.sql.catalyst.plans.QueryPlan.verboseString(QueryPlan.scala:181)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.generateTreeString(TreeNode.scala:647)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.generateTreeString(TreeNode.scala:675)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.generateTreeString(TreeNode.scala:675)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.treeString(TreeNode.scala:569)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.treeString(TreeNode.scala:559)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.treeString(TreeNode.scala:551)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.toString(TreeNode.scala:548)
[info] at org.apache.spark.sql.catalyst.errors.package$TreeNodeException.<init>(package.scala:36)
[info] at org.apache.spark.sql.catalyst.errors.package$.attachTree(package.scala:56)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.makeCopy(TreeNode.scala:436)
[info] at org.apache.spark.sql.catalyst.trees.TreeNode.makeCopy(TreeNode.scala:425)
[info] at org.apache.spark.sql.execution.SparkPlan.makeCopy(SparkPlan.scala:102)
[info] at org.apache.spark.sql.execution.SparkPlan.makeCopy(SparkPlan.scala:63)
[info] at org.apache.spark.sql.catalyst.plans.QueryPlan.mapExpressions(QueryPlan.scala:132)
[info] at org.apache.spark.sql.catalyst.plans.QueryPlan.doCanonicalize(QueryPlan.scala:261)
```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added unit test.

Closes #25925 from viirya/SPARK-29239.

Authored-by: Liang-Chi Hsieh <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request?

It is very confusing that the default save mode differs depending on the internal implementation of a data source. The reason we had to have saveModeForDSV2 was that there was no easy way to check the existence of a table in DataSource v2. Now we have catalogs for that, so we should be able to remove the different save modes. We also have a plan forward for `save`, where we can't really check the existence of a table, and therefore create one. That will come in a future PR.

### Why are the changes needed?

Because it is confusing that the internal implementation of a data source (which is generally non-obvious to users) decides which default save mode is used within Spark.

### Does this PR introduce any user-facing change?

It changes the default save mode for V2 tables in the DataFrameWriter APIs.

### How was this patch tested?

Existing tests

Closes #25876 from brkyvz/removeSM.

Lead-authored-by: Burak Yavuz <[email protected]>

Co-authored-by: Burak Yavuz <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request?

Rename the package pgSQL to postgreSQL

### Why are the changes needed?

To address the comment in #25697 (comment). The official full name seems more reasonable.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing unit tests.

Closes #25936 from gengliangwang/renamePGSQL.

Authored-by: Gengliang Wang <[email protected]>

Signed-off-by: Yuming Wang <[email protected]>

### What changes were proposed in this pull request?

After #25158 and #25458, SQL features of PostgreSQL are introduced into Spark. AFAIK, both features are implementation-defined behaviors, which are not specified in ANSI SQL.

In such a case, this proposal is to add a configuration `spark.sql.dialect` for choosing a database dialect.

After this PR, Spark supports two database dialects, `Spark` and `PostgreSQL`. With the `PostgreSQL` dialect, Spark will:

1. perform integral division with the / operator if both sides are integral types;
2. accept "true", "yes", "1", "false", "no", "0", and unique prefixes as input and trim input for the boolean data type.

### Why are the changes needed?

Unify the external database dialect with one configuration, instead of small flags.

### Does this PR introduce any user-facing change?

A new configuration `spark.sql.dialect` for choosing a database dialect.

### How was this patch tested?

Existing tests.

Closes #25697 from gengliangwang/dialect.

Authored-by: Gengliang Wang <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
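The boolean input rule in item 2 can be sketched as a small standalone parser. This is a hedged illustration, not Spark's cast implementation: `PgBooleanSketch` and `parse` are hypothetical names, the word lists are taken from the description above, and case-insensitive matching is an assumption.

```scala
object PgBooleanSketch {
  private val trueWords = Seq("true", "yes", "1")
  private val falseWords = Seq("false", "no", "0")

  // Returns Some(value) when the trimmed input is one of the accepted
  // words or a prefix that matches only one side; otherwise None.
  def parse(input: String): Option[Boolean] = {
    val s = input.trim.toLowerCase // lower-casing is an assumption here
    if (s.isEmpty) None
    else {
      val matchesTrue = trueWords.exists(_.startsWith(s))
      val matchesFalse = falseWords.exists(_.startsWith(s))
      if (matchesTrue && !matchesFalse) Some(true)
      else if (matchesFalse && !matchesTrue) Some(false)
      else None
    }
  }
}
```

Under these assumptions, inputs such as `" yes "`, `"tr"`, and `"1"` parse as true, `"f"` and `"no"` parse as false, and anything else is rejected.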

### What changes were proposed in this pull request?

Changed 'Phive-thriftserver' to ' -Phive-thriftserver'.

### Why are the changes needed?

Typo

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Manually tested.

Closes #25937 from TomokoKomiyama/fix-build-doc.

Authored-by: Tomoko Komiyama <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

…ug log

### What changes were proposed in this pull request?

This patch fixes the order of elements while logging a token. Header columns are printed as

```
"TOKENID", "HMAC", "OWNER", "RENEWERS", "ISSUEDATE", "EXPIRYDATE", "MAXDATE"
```

whereas the code prints out the actual information as

```
"HMAC"(redacted), "TOKENID", "OWNER", "RENEWERS", "ISSUEDATE", "EXPIRYDATE", "MAXDATE"
```

This patch fixes this.

### Why are the changes needed?

Not critical, but the output doesn't line up with the header columns.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A, as it's only logged at debug level and it's obvious what and where the problem is and how it can be fixed.

Closes #25935 from HeartSaVioR/SPARK-27748-FOLLOWUP.

Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]>

Signed-off-by: Marcelo Vanzin <[email protected]>

…ocess in Yarn client mode

### What changes were proposed in this pull request?

`--driver-java-options` is not passed to the driver process if the user runs the application in **Yarn client** mode.

Run the command below:

```
./bin/spark-sql --master yarn \
  --driver-java-options="-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=5555"
```

**In Spark 2.4.4**

```
java ... -agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=5555 org.apache.spark.deploy.SparkSubmit --master yarn --conf spark.driver.extraJavaOptions=-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=5555 ...
```

**In Spark 3.0**

```
java ... org.apache.spark.deploy.SparkSubmit --master yarn --conf spark.driver.extraJavaOptions=-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=5556 ...
```

This issue is caused by [SPARK-28980](https://github.com/apache/spark/pull/25684/files#diff-75e0f814aa3717db995fa701883dc4e1R395)

### Why are the changes needed?

Corrected the `isClientMode` API implementation

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually.

Closes #25889 from sandeep-katta/yarnmode.

Authored-by: sandeep katta <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request?

Call fs.exists only when necessary in InsertIntoHadoopFsRelationCommand.

### Why are the changes needed?

When saving a dataframe into Hadoop, Spark first checks whether the path exists before inspecting the SaveMode to determine whether it should actually insert data. However, the pathExists variable is not actually used in the case of SaveMode.Append. In some file systems the exists call can be expensive, so this PR makes that call only when necessary.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing unit tests should cover it since this doesn't change the behavior.

Closes #25928 from rahij/rr/exists-upstream.

Authored-by: Rahij Ramsharan <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>
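Deferring an expensive existence check until a save mode actually needs it can be sketched with a `lazy val`. This is a minimal illustration under assumptions: `LazyExistsSketch`, its `Mode` cases, and `shouldInsert` are hypothetical stand-ins, and whether the PR itself uses a `lazy val` is not stated in the text above.

```scala
object LazyExistsSketch {
  sealed trait Mode
  case object Append extends Mode
  case object Ignore extends Mode
  case object ErrorIfExists extends Mode

  // `checkExists` stands in for a potentially expensive file system call.
  def shouldInsert(mode: Mode, checkExists: () => Boolean): Boolean = {
    // Evaluated at most once, and only if some branch below reads it.
    lazy val pathExists = checkExists()
    mode match {
      case Append => true // never consults the file system
      case Ignore => !pathExists
      case ErrorIfExists =>
        if (pathExists) throw new IllegalStateException("path already exists")
        true
    }
  }
}
```

In the `Append` branch the probe function is never invoked, which is the saving the description above is after.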

…tters/getters/predict

### What changes were proposed in this pull request?

Add the following Params classes in Pyspark clustering:

- `GaussianMixtureParams`
- `KMeansParams`
- `BisectingKMeansParams`
- `LDAParams`
- `PowerIterationClusteringParams`

### Why are the changes needed?

To be consistent with the Scala side

### Does this PR introduce any user-facing change?

Yes. Add the following changes:

```
GaussianMixtureModel
- get/setMaxIter
- get/setFeaturesCol
- get/setSeed
- get/setPredictionCol
- get/setProbabilityCol
- get/setTol
- predict
```

```
KMeansModel
- get/setMaxIter
- get/setFeaturesCol
- get/setSeed
- get/setPredictionCol
- get/setDistanceMeasure
- get/setTol
- predict
```

```
BisectingKMeansModel
- get/setMaxIter
- get/setFeaturesCol
- get/setSeed
- get/setPredictionCol
- get/setDistanceMeasure
- predict
```

```
LDAModel(HasMaxIter, HasFeaturesCol, HasSeed, HasCheckpointInterval):
- get/setMaxIter
- get/setFeaturesCol
- get/setSeed
- get/setCheckpointInterval
```

### How was this patch tested?

Add doctests

Closes #25859 from huaxingao/spark-29142.

Authored-by: Huaxin Gao <[email protected]>

Signed-off-by: zhengruifeng <[email protected]>

### What changes were proposed in this pull request?

Remove unnecessary imports in `core` module.

### Why are the changes needed?

Clean code for Apache Spark 3.0.0.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Local test.

Closes #25927 from sev7e0/dev_0925.

Authored-by: sev7e0 <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…ct format

## What changes were proposed in this pull request?

before pr:

after pr:

## How was this patch tested?

manual test

Closes #24609 from uncleGen/SPARK-27715.

Authored-by: uncleGen <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

…dClientLoader configurable

### What changes were proposed in this pull request?

Added a new config "spark.sql.additionalRemoteRepositories", a comma-delimited string config listing optional additional remote Maven mirrors.

### Why are the changes needed?

IsolatedClientLoader needs to connect to Maven repositories to download Hive jars. End users can set this config if the default Maven Central repo is unreachable.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UT.

Closes #25849 from xuanyuanking/SPARK-29175.

Authored-by: Yuanjian Li <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request?

1. expose `BinaryClassificationMetrics.numBins` in `BinaryClassificationEvaluator`
2. expose `RegressionMetrics.throughOrigin` in `RegressionEvaluator`
3. add metric `explainedVariance` in `RegressionEvaluator`

### Why are the changes needed?

Existing functionality in mllib.metrics should also be exposed in ml.

### Does this PR introduce any user-facing change?

Yes, this PR adds two expert params and one metric option.

### How was this patch tested?

existing and added tests

Closes #25940 from zhengruifeng/evaluator_add_param.

Authored-by: zhengruifeng <[email protected]>

Signed-off-by: zhengruifeng <[email protected]>

### What changes were proposed in this pull request?

Currently, `FilterExec` decides nullability differently when computing its output than when generating null checks. As a result, a nullable attribute can be treated as non-nullable by mistake.

In `FilterExec.output`, an attribute is marked as nullable or not by looking up its `exprId` in `notNullAttributes`:

```
a.nullable && notNullAttributes.contains(a.exprId)
```

But in `FilterExec.doConsume`, whether a `nullCheck` is generated for a predicate is decided by whether there is a semantically equal not-null predicate:

```
val nullChecks = c.references.map { r =>
  val idx = notNullPreds.indexWhere { n => n.asInstanceOf[IsNotNull].child.semanticEquals(r)}
  if (idx != -1 && !generatedIsNotNullChecks(idx)) {
    generatedIsNotNullChecks(idx) = true
    // Use the child's output. The nullability is what the child produced.
    genPredicate(notNullPreds(idx), input, child.output)
  } else {
    ""
  }
}.mkString("\n").trim
```

An NPE happens when running the SQL below:

```
sql("create table table1(x string)")
sql("create table table2(x bigint)")
sql("create table table3(x string)")
sql("insert into table2 select null as x")
sql(
  """
    |select t1.x
    |from (
    | select x from table1) t1
    |left join (
    | select x from (
    | select x from table2
    | union all
    | select substr(x,5) x from table3
    | ) a
    | where length(x)>0
    |) t3
    |on t1.x=t3.x
  """.stripMargin).collect()
```

NPE Exception:

```
java.lang.NullPointerException
    at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage2.processNext(generated.java:40)
    at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)
    at org.apache.spark.sql.execution.WholeStageCodegenExec$$anon$1.hasNext(WholeStageCodegenExec.scala:726)
    at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)
    at org.apache.spark.shuffle.sort.BypassMergeSortShuffleWriter.write(BypassMergeSortShuffleWriter.java:135)
    at org.apache.spark.shuffle.ShuffleWriteProcessor.write(ShuffleWriteProcessor.scala:59)
    at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:94)
    at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:52)
    at org.apache.spark.scheduler.Task.run(Task.scala:127)
    at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:449)
    at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1377)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:452)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
```

The generated code:

```
== Subtree 4 / 5 ==
*(2) Project [cast(x#7L as string) AS x#9]
+- *(2) Filter ((length(cast(x#7L as string)) > 0) AND isnotnull(cast(x#7L as string)))
   +- Scan hive default.table2 [x#7L], HiveTableRelation `default`.`table2`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [x#7L]

Generated code:
/* 001 */ public Object generate(Object[] references) {
/* 002 */   return new GeneratedIteratorForCodegenStage2(references);
/* 003 */ }
/* 004 */
/* 005 */ // codegenStageId=2
/* 006 */ final class GeneratedIteratorForCodegenStage2 extends org.apache.spark.sql.execution.BufferedRowIterator {
/* 007 */   private Object[] references;
/* 008 */   private scala.collection.Iterator[] inputs;
/* 009 */   private scala.collection.Iterator inputadapter_input_0;
/* 010 */   private org.apache.spark.sql.catalyst.expressions.codegen.UnsafeRowWriter[] filter_mutableStateArray_0 = new org.apache.spark.sql.catalyst.expressions.codegen.UnsafeRowWriter[2];
/* 011 */
/* 012 */   public GeneratedIteratorForCodegenStage2(Object[] references) {
/* 013 */     this.references = references;
/* 014 */   }
/* 015 */
/* 016 */   public void init(int index, scala.collection.Iterator[] inputs) {
/* 017 */     partitionIndex = index;
/* 018 */     this.inputs = inputs;
/* 019 */     inputadapter_input_0 = inputs[0];
/* 020 */     filter_mutableStateArray_0[0] = new org.apache.spark.sql.catalyst.expressions.codegen.UnsafeRowWriter(1, 0);
/* 021 */     filter_mutableStateArray_0[1] = new org.apache.spark.sql.catalyst.expressions.codegen.UnsafeRowWriter(1, 32);
/* 022 */
/* 023 */   }
/* 024 */
/* 025 */   protected void processNext() throws java.io.IOException {
/* 026 */     while ( inputadapter_input_0.hasNext()) {
/* 027 */       InternalRow inputadapter_row_0 = (InternalRow) inputadapter_input_0.next();
/* 028 */
/* 029 */       do {
/* 030 */         boolean inputadapter_isNull_0 = inputadapter_row_0.isNullAt(0);
/* 031 */         long inputadapter_value_0 = inputadapter_isNull_0 ?
/* 032 */         -1L : (inputadapter_row_0.getLong(0));
/* 033 */
/* 034 */         boolean filter_isNull_2 = inputadapter_isNull_0;
/* 035 */         UTF8String filter_value_2 = null;
/* 036 */         if (!inputadapter_isNull_0) {
/* 037 */           filter_value_2 = UTF8String.fromString(String.valueOf(inputadapter_value_0));
/* 038 */         }
/* 039 */         int filter_value_1 = -1;
/* 040 */         filter_value_1 = (filter_value_2).numChars();
/* 041 */
/* 042 */         boolean filter_value_0 = false;
/* 043 */         filter_value_0 = filter_value_1 > 0;
/* 044 */         if (!filter_value_0) continue;
/* 045 */
/* 046 */         boolean filter_isNull_6 = inputadapter_isNull_0;
/* 047 */         UTF8String filter_value_6 = null;
/* 048 */         if (!inputadapter_isNull_0) {
/* 049 */           filter_value_6 = UTF8String.fromString(String.valueOf(inputadapter_value_0));
/* 050 */         }
/* 051 */         if (!(!filter_isNull_6)) continue;
/* 052 */
/* 053 */         ((org.apache.spark.sql.execution.metric.SQLMetric) references[0] /* numOutputRows */).add(1);
/* 054 */
/* 055 */         boolean project_isNull_0 = false;
/* 056 */         UTF8String project_value_0 = null;
/* 057 */         if (!false) {
/* 058 */           project_value_0 = UTF8String.fromString(String.valueOf(inputadapter_value_0));
/* 059 */         }
/* 060 */         filter_mutableStateArray_0[1].reset();
/* 061 */
/* 062 */         filter_mutableStateArray_0[1].zeroOutNullBytes();
/* 063 */
/* 064 */         if (project_isNull_0) {
/* 065 */           filter_mutableStateArray_0[1].setNullAt(0);
/* 066 */         } else {
/* 067 */           filter_mutableStateArray_0[1].write(0, project_value_0);
/* 068 */         }
/* 069 */         append((filter_mutableStateArray_0[1].getRow()));
/* 070 */
/* 071 */       } while(false);
/* 072 */       if (shouldStop()) return;
/* 073 */     }
/* 074 */   }
/* 075 */
/* 076 */ }
```

This PR proposes to use semantic comparison in both `FilterExec.output` and `FilterExec.doConsume` when deciding whether an attribute is nullable.
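The mismatch between the two rules can be shown with a toy expression model. This is a sketch under stated assumptions, not Spark's Catalyst classes: `NullabilitySketch`, `Attr`, `Cast`, and both helper functions are hypothetical, and case-class equality stands in for `semanticEquals`.

```scala
object NullabilitySketch {
  sealed trait Expr { def references: Set[Long] }

  final case class Attr(exprId: Long, nullable: Boolean) extends Expr {
    def references: Set[Long] = Set(exprId)
  }

  final case class Cast(child: Expr) extends Expr {
    def references: Set[Long] = child.references
  }

  // Id-based rule: an attribute counts as non-null if ANY not-null
  // predicate merely references it.
  def nonNullById(attr: Attr, notNullChildren: Seq[Expr]): Boolean =
    notNullChildren.flatMap(_.references).contains(attr.exprId)

  // Semantic rule: an attribute counts as non-null only if some not-null
  // predicate is about exactly that attribute.
  def nonNullBySemantics(attr: Attr, notNullChildren: Seq[Expr]): Boolean =
    notNullChildren.contains(attr)
}
```

For a predicate shaped like `isnotnull(cast(x))`, the id-based rule reports `x` as non-null while the semantic rule does not, which mirrors how an attribute could be declared non-nullable without a matching null check being emitted before its first use.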

With this PR, the generated code snippet is below:

```
== Subtree 2 / 5 ==
*(3) Project [substring(x#8, 5, 2147483647) AS x#5]
+- *(3) Filter ((length(substring(x#8, 5, 2147483647)) > 0) AND isnotnull(substring(x#8, 5, 2147483647)))
   +- Scan hive default.table3 [x#8], HiveTableRelation `default`.`table3`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [x#8]

Generated code:
/* 001 */ public Object generate(Object[] references) {
/* 002 */   return new GeneratedIteratorForCodegenStage3(references);
/* 003 */ }
/* 004 */
/* 005 */ // codegenStageId=3
/* 006 */ final class GeneratedIteratorForCodegenStage3 extends org.apache.spark.sql.execution.BufferedRowIterator {
/* 007 */   private Object[] references;
/* 008 */   private scala.collection.Iterator[] inputs;
/* 009 */   private scala.collection.Iterator inputadapter_input_0;
/* 010 */   private org.apache.spark.sql.catalyst.expressions.codegen.UnsafeRowWriter[] filter_mutableStateArray_0 = new org.apache.spark.sql.catalyst.expressions.codegen.UnsafeRowWriter[2];
/* 011 */
/* 012 */   public GeneratedIteratorForCodegenStage3(Object[] references) {
/* 013 */     this.references = references;
/* 014 */   }
/* 015 */
/* 016 */   public void init(int index, scala.collection.Iterator[] inputs) {
/* 017 */     partitionIndex = index;
/* 018 */     this.inputs = inputs;
/* 019 */     inputadapter_input_0 = inputs[0];
/* 020 */     filter_mutableStateArray_0[0] = new org.apache.spark.sql.catalyst.expressions.codegen.UnsafeRowWriter(1, 32);
/* 021 */     filter_mutableStateArray_0[1] = new org.apache.spark.sql.catalyst.expressions.codegen.UnsafeRowWriter(1, 32);
/* 022 */
/* 023 */   }
/* 024 */
/* 025 */   protected void processNext() throws java.io.IOException {
/* 026 */     while ( inputadapter_input_0.hasNext()) {
/* 027 */       InternalRow inputadapter_row_0 = (InternalRow) inputadapter_input_0.next();
/* 028 */
/* 029 */       do {
/* 030 */         boolean inputadapter_isNull_0 = inputadapter_row_0.isNullAt(0);
/* 031 */         UTF8String inputadapter_value_0 = inputadapter_isNull_0 ?
/* 032 */         null : (inputadapter_row_0.getUTF8String(0));
/* 033 */
/* 034 */         boolean filter_isNull_0 = true;
/* 035 */         boolean filter_value_0 = false;
/* 036 */         boolean filter_isNull_2 = true;
/* 037 */         UTF8String filter_value_2 = null;
/* 038 */
/* 039 */         if (!inputadapter_isNull_0) {
/* 040 */           filter_isNull_2 = false; // resultCode could change nullability.
/* 041 */           filter_value_2 = inputadapter_value_0.substringSQL(5, 2147483647);
/* 042 */
/* 043 */         }
/* 044 */         boolean filter_isNull_1 = filter_isNull_2;
/* 045 */         int filter_value_1 = -1;
/* 046 */
/* 047 */         if (!filter_isNull_2) {
/* 048 */           filter_value_1 = (filter_value_2).numChars();
/* 049 */         }
/* 050 */         if (!filter_isNull_1) {
/* 051 */           filter_isNull_0 = false; // resultCode could change nullability.
/* 052 */           filter_value_0 = filter_value_1 > 0;
/* 053 */
/* 054 */         }
/* 055 */         if (filter_isNull_0 || !filter_value_0) continue;
/* 056 */         boolean filter_isNull_8 = true;
/* 057 */         UTF8String filter_value_8 = null;
/* 058 */
/* 059 */         if (!inputadapter_isNull_0) {
/* 060 */           filter_isNull_8 = false; // resultCode could change nullability.
/* 061 */           filter_value_8 = inputadapter_value_0.substringSQL(5, 2147483647);
/* 062 */
/* 063 */         }
/* 064 */         if (!(!filter_isNull_8)) continue;
/* 065 */
/* 066 */         ((org.apache.spark.sql.execution.metric.SQLMetric) references[0] /* numOutputRows */).add(1);
/* 067 */
/* 068 */         boolean project_isNull_0 = true;
/* 069 */         UTF8String project_value_0 = null;
/* 070 */
/* 071 */         if (!inputadapter_isNull_0) {
/* 072 */           project_isNull_0 = false; // resultCode could change nullability.
/* 073 */           project_value_0 = inputadapter_value_0.substringSQL(5, 2147483647);
/* 074 */
/* 075 */         }
/* 076 */         filter_mutableStateArray_0[1].reset();
/* 077 */
/* 078 */         filter_mutableStateArray_0[1].zeroOutNullBytes();
/* 079 */
/* 080 */         if (project_isNull_0) {
/* 081 */           filter_mutableStateArray_0[1].setNullAt(0);
/* 082 */         } else {
/* 083 */           filter_mutableStateArray_0[1].write(0, project_value_0);
/* 084 */         }
/* 085 */         append((filter_mutableStateArray_0[1].getRow()));
/* 086 */
/* 087 */       } while(false);
/* 088 */       if (shouldStop()) return;
/* 089 */     }
/* 090 */   }
/* 091 */
/* 092 */ }
```

### Why are the changes needed?

Fix NPE bug in FilterExec.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

new UT

Closes #25902 from wangshuo128/filter-codegen-npe.

Authored-by: Wang Shuo <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request? New test compares outputs of expression examples in comments with results of `hiveResultString()`. Also I fixed existing examples where actual and expected outputs are different. ### Why are the changes needed? This prevents mistakes in expression examples, and fixes existing mistakes in comments. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Add new test to `SQLQuerySuite`. Closes #25942 from MaxGekk/run-expr-examples. Authored-by: Maxim Gekk <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

…ing SessionState for built-in Hive 2.3

### What changes were proposed in this pull request?

Hive 2.3 sets a new UDFClassLoader to hiveConf.classLoader when initializing SessionState since HIVE-11878, and

1. ADDJarCommand will add jars to clientLoader.classLoader.
2. Jars passed via --jar will be added to clientLoader.classLoader.
3. Jars passed by the hive conf `hive.aux.jars` [SPARK-28954](#25653) [SPARK-28840](#25542) will be added to clientLoader.classLoader too.

For these reasons, we cannot load the jars added by ADDJarCommand because the class loader has changed. We reset it to clientLoader.ClassLoader here.

### Why are the changes needed?

Support for JDK 11.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

UT

```
export JAVA_HOME=/usr/lib/jdk-11.0.3
export PATH=$JAVA_HOME/bin:$PATH

build/sbt -Phive-thriftserver -Phadoop-3.2

hive/test-only *HiveSparkSubmitSuite -- -z "SPARK-8368: includes jars passed in through --jars"
hive-thriftserver/test-only *HiveThriftBinaryServerSuite -- -z "test add jar"
```

Closes #25775 from AngersZhuuuu/SPARK-29015-STS-JDK11.

Authored-by: angerszhu <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

… SQL migration guide ### What changes were proposed in this pull request? Updated the SQL migration guide regarding the recently supported special date and timestamp values, see #25716 and #25708. Closes #25834 ### Why are the changes needed? To let users know about the new feature in Spark 3.0. ### Does this PR introduce any user-facing change? No Closes #25948 from MaxGekk/special-values-migration-guide. Authored-by: Maxim Gekk <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…lumn in element_at function

### What changes were proposed in this pull request?

This PR makes `element_at` in PySpark able to take PySpark `Column` instances.

### Why are the changes needed?

To match the Scala side. This appears to have been intended, but it did not work correctly because of a bug.

### Does this PR introduce any user-facing change?

Yes. See below:

```python

from pyspark.sql import functions as F

x = spark.createDataFrame([([1,2,3],1),([4,5,6],2),([7,8,9],3)],['list','num'])

x.withColumn('aa',F.element_at('list',x.num.cast('int'))).show()

```

Before:

```

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/.../spark/python/pyspark/sql/functions.py", line 2059, in element_at

return Column(sc._jvm.functions.element_at(_to_java_column(col), extraction))

File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1277, in __call__

File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1241, in _build_args

File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1228, in _get_args

File "/.../forked/spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_collections.py", line 500, in convert

File "/.../spark/python/pyspark/sql/column.py", line 344, in __iter__

raise TypeError("Column is not iterable")

TypeError: Column is not iterable

```

After:

```

+---------+---+---+

| list|num| aa|

+---------+---+---+

|[1, 2, 3]| 1| 1|

|[4, 5, 6]| 2| 5|

|[7, 8, 9]| 3| 9|

+---------+---+---+

```
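The per-row lookup in the example above can be modeled without a Spark session. The following is a minimal plain-Python sketch of `element_at` semantics on arrays, assuming a 1-based index, negative indexes counting from the end, and `None` for out-of-range lookups. The helper name `element_at_model` is hypothetical, and this is not how PySpark implements the function.

```python
def element_at_model(values, index):
    """Model of SQL element_at for arrays: 1-based, negative counts from the end."""
    if index == 0:
        # Assumption: index 0 is rejected because SQL array indices start at 1.
        raise ValueError("SQL array indices start at 1")
    if abs(index) > len(values):
        # Assumption: an out-of-range lookup yields NULL (None here).
        return None
    return values[index - 1] if index > 0 else values[index]


# Same data as the DataFrame above: each row carries its own index column.
rows = [([1, 2, 3], 1), ([4, 5, 6], 2), ([7, 8, 9], 3)]
aa = [element_at_model(lst, num) for lst, num in rows]
print(aa)
```

The point of the fix is that the index may differ per row, which is why a `Column` argument (rather than a single Python literal) is needed on the PySpark side.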

### How was this patch tested?

Manually tested against literal, Python native types, and PySpark column.

Closes #25950 from HyukjinKwon/SPARK-29240.

Authored-by: HyukjinKwon <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…` before checking available slots for barrier taskSet ### What changes were proposed in this pull request? availableSlots is computed before the for loop that iterates over all TaskSets in resourceOffers. But the number of slots changes in every iteration, because each iteration takes some of these slots. The number of available slots checked by a barrier task set therefore has to be recomputed in every iteration from availableCpus. ### Why are the changes needed? Bugfix. This could make resourceOffer attempt to start a barrier task set even though it does not have enough slots available. That would then be caught by the `require` in line 519, which throws an exception that is caught and ignored by Dispatcher's MessageLoop, so nothing terrible would happen, but the exception would prevent resourceOffers from considering further TaskSets. Note that launching the barrier TaskSet can still fail if other requirements are not satisfied, and can still be rolled back by throwing an exception in this `require`. Handling it more gracefully remains a TODO in SPARK-24818, but this fix should at least resolve the situation where it is unable to launch because of insufficient slots. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Added UT Closes #23375 Closes #25946 from juliuszsompolski/SPARK-29263. Authored-by: Juliusz Sompolski <[email protected]> Signed-off-by: Xingbo Jiang <[email protected]>
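To illustrate the slot recomputation described in the barrier task set fix above, here is a small plain-Python model. It is only a sketch: `schedule`, the tuple layout of `task_sets`, and `cpus_per_task` are hypothetical names, and the real scheduler logic in Spark is considerably more involved.

```python
def schedule(task_sets, available_cpus, cpus_per_task=1):
    """Return (name, tasks_started) for each task set that launched.

    task_sets: list of (name, num_tasks, is_barrier) tuples (hypothetical layout).
    available_cpus: free CPU count per executor offer.
    """
    cpus = list(available_cpus)
    launched = []
    for name, num_tasks, is_barrier in task_sets:
        # Recompute slots from the CPUs still free in *this* iteration,
        # instead of reusing a value computed once before the loop.
        available_slots = sum(c // cpus_per_task for c in cpus)
        if is_barrier and available_slots < num_tasks:
            continue  # a barrier task set needs all of its tasks to start together
        started = 0
        for i, free in enumerate(cpus):
            take = min(num_tasks - started, free // cpus_per_task)
            cpus[i] -= take * cpus_per_task
            started += take
        if started:
            launched.append((name, started))
    return launched


result = schedule(
    [("a", 3, False), ("b", 2, True), ("c", 1, False)],
    available_cpus=[2, 2],
)
print(result)
```

In this model task set `a` consumes three of the four slots, so the barrier task set `b` is skipped cleanly and `c` is still considered, which mirrors the behavior the fix aims for.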

…line Log the full spark-submit command in SparkSubmit#launchApplication Adding .python-version (pyenv file) to RAT exclusion list ### What changes were proposed in this pull request? Original motivation [here](http://apache-spark-user-list.1001560.n3.nabble.com/Is-it-possible-to-obtain-the-full-command-to-be-invoked-by-SparkLauncher-td35144.html), expanded in the [Jira](https://issues.apache.org/jira/browse/SPARK-29070).. In essence, we want to be able to log the full `spark-submit` command being constructed by `SparkLauncher` ### Why are the changes needed? Currently, it is not possible to directly obtain this information from the `SparkLauncher` instance, which makes debugging and customer support more difficult. ### Does this PR introduce any user-facing change? No ### How was this patch tested? `core` `sbt` tests were executed. The `SparkLauncherSuite` (where I added assertions to an existing test) was also checked. Within that, `testSparkLauncherGetError` is failing, but that appears not to have been caused by this change (failing for me even on the parent commit of c18f849). Closes #25777 from jeff303/SPARK-29070. Authored-by: Jeff Evans <[email protected]> Signed-off-by: Marcelo Vanzin <[email protected]>

### What changes were proposed in this pull request?

This adds the `typesafe` bintray repo for `sbt-mima-plugin`.

### Why are the changes needed?

Since Oct 21, the following plugin causes [Jenkins failures](https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-branch-2.4-test-sbt-hadoop-2.6/611/console ) due to the missing jar.

- `branch-2.4`: `sbt-mima-plugin:0.1.17` is missing.
- `master`: `sbt-mima-plugin:0.3.0` is missing.

These versions of `sbt-mima-plugin` seem to have been removed from the old repo.

```
$ rm -rf ~/.ivy2/
$ build/sbt scalastyle test:scalastyle
...
[warn] ::::::::::::::::::::::::::::::::::::::::::::::
[warn] :: UNRESOLVED DEPENDENCIES ::
[warn] ::::::::::::::::::::::::::::::::::::::::::::::
[warn] :: com.typesafe#sbt-mima-plugin;0.1.17: not found
[warn] ::::::::::::::::::::::::::::::::::::::::::::::
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Check the `GitHub Action` linter result. This PR should pass. Or, check manually. (Note that the Jenkins PR builder didn't fail until now due to the local cache.)

Closes #26217 from dongjoon-hyun/SPARK-29560.

Authored-by: Dongjoon Hyun <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request? This PR ports window.sql from PostgreSQL regression tests https://github.com/postgres/postgres/blob/REL_12_STABLE/src/test/regress/sql/window.sql from lines 1~319 The expected results can be found in the link: https://github.com/postgres/postgres/blob/REL_12_STABLE/src/test/regress/expected/window.out ### Why are the changes needed? To ensure compatibility with PostgreSQL. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Pass the Jenkins. And, Comparison with PgSQL results. Closes #26119 from DylanGuedes/spark-29107. Authored-by: DylanGuedes <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request?

This PR adds `CREATE NAMESPACE` support for V2 catalogs.

### Why are the changes needed?

Currently, you cannot explicitly create namespaces for v2 catalogs.

### Does this PR introduce any user-facing change?

The user can now perform the following:

```SQL
CREATE NAMESPACE mycatalog.ns
```

to create a namespace `ns` inside `mycatalog` V2 catalog.

### How was this patch tested?

Added unit tests.

Closes #26166 from imback82/create_namespace.

Authored-by: Terry Kim <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

…ntegration-tests

### What changes were proposed in this pull request?

Currently, `docker-integration-tests` is broken in both JDK8/11. This PR aims to recover JDBC integration test for JDK8/11.

### Why are the changes needed?

While SPARK-28737 upgraded `Jersey` to 2.29 for JDK11, `docker-integration-tests` is broken because `com.spotify.docker-client` still depends on `jersey-guava`. The latest `com.spotify.docker-client` also has this problem.

- https://mvnrepository.com/artifact/com.spotify/docker-client/5.0.2 -> https://mvnrepository.com/artifact/org.glassfish.jersey.core/jersey-client/2.19 -> https://mvnrepository.com/artifact/org.glassfish.jersey.core/jersey-common/2.19 -> https://mvnrepository.com/artifact/org.glassfish.jersey.bundles.repackaged/jersey-guava/2.19

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manual because this is an integration test suite.

```
$ java -version
openjdk version "1.8.0_222"
OpenJDK Runtime Environment (AdoptOpenJDK)(build 1.8.0_222-b10)
OpenJDK 64-Bit Server VM (AdoptOpenJDK)(build 25.222-b10, mixed mode)

$ build/mvn install -DskipTests
$ build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 test
```

```
$ java -version
openjdk version "11.0.5" 2019-10-15
OpenJDK Runtime Environment AdoptOpenJDK (build 11.0.5+10)
OpenJDK 64-Bit Server VM AdoptOpenJDK (build 11.0.5+10, mixed mode)

$ build/mvn install -DskipTests
$ build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 test
```

**BEFORE**

```
*** RUN ABORTED ***
com.spotify.docker.client.exceptions.DockerException: java.util.concurrent.ExecutionException: javax.ws.rs.ProcessingException: java.lang.NoClassDefFoundError: jersey/repackaged/com/google/common/util/concurrent/MoreExecutors
at com.spotify.docker.client.DefaultDockerClient.propagate(DefaultDockerClient.java:1607)
at com.spotify.docker.client.DefaultDockerClient.request(DefaultDockerClient.java:1538)
at com.spotify.docker.client.DefaultDockerClient.ping(DefaultDockerClient.java:387)
at org.apache.spark.sql.jdbc.DockerJDBCIntegrationSuite.beforeAll(DockerJDBCIntegrationSuite.scala:81)
```

**AFTER**

```
Run completed in 47 seconds, 999 milliseconds.
Total number of tests run: 30
Suites: completed 6, aborted 0
Tests: succeeded 30, failed 0, canceled 0, ignored 6, pending 0
All tests passed.
```

Closes #26203 from dongjoon-hyun/SPARK-29546.

Authored-by: Dongjoon Hyun <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…sion.sharedState ### What changes were proposed in this pull request? This moves the tracking of active queries from a per SparkSession state, to the shared SparkSession for better safety in isolated Spark Session environments. ### Why are the changes needed? We have checks to prevent the restarting of the same stream on the same spark session, but we can actually make that better in multi-tenant environments by actually putting that state in the SharedState instead of SessionState. This would allow a more comprehensive check for multi-tenant clusters. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Added tests to StreamingQueryManagerSuite Closes #26018 from brkyvz/sharedStreamingQueryManager. Lead-authored-by: Burak Yavuz <[email protected]> Co-authored-by: Burak Yavuz <[email protected]> Signed-off-by: Burak Yavuz <[email protected]>

… clearly ### What changes were proposed in this pull request? As described in [SPARK-29542](https://issues.apache.org/jira/browse/SPARK-29542), the descriptions of `spark.sql.files.*` are confusing. This PR makes their descriptions clearer. ### Why are the changes needed? It makes the descriptions of `spark.sql.files.*` clearer. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Existing UT. Closes #26200 from turboFei/SPARK-29542-partition-maxSize. Authored-by: turbofei <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

### What changes were proposed in this pull request? Add mapPartitionsWithIndex for RDDBarrier. ### Why are the changes needed? There is only one method in `RDDBarrier`. We often use the partition index as a label for the current partition. Currently we have to get the index from `TaskContext` inside `mapPartitions`, which is not convenient. ### Does this PR introduce any user-facing change? No ### How was this patch tested? New UT. Closes #26148 from ConeyLiu/barrier-index. Authored-by: Xianyang Liu <[email protected]> Signed-off-by: Xingbo Jiang <[email protected]>

…n post-processing badges in JavaDoc

### What changes were proposed in this pull request?

This PR fixes our documentation build to copy the minified jQuery file instead. The original file `jquery.js` seems to be missing as of the Scala 2.12 upgrade; Scala 2.12 seems to have started using the minified `jquery.min.js` instead. Since we dropped Scala 2.11, we no longer have to take care of the legacy `jquery.js`.

Note that there seem to be multiple oddities in the current ScalaDoc (e.g., some pages look wrong, the listing starts from `scala.collection.*`, some pages are missing, some docs are truncated, and some badges appear to be missing). This needs a separate double check and investigation.

This PR aims to make the documentation generation pass in order to unblock the Spark 3.0 preview.

### Why are the changes needed?

To fix our official documentation build so that it is able to run.

### Does this PR introduce any user-facing change?

It makes it possible to build the documentation in our official way.

**Before:**

```
Making directory api/scala
cp -r ../target/scala-2.12/unidoc/. api/scala
Making directory api/java
cp -r ../target/javaunidoc/. api/java
Updating JavaDoc files for badge post-processing
Copying jquery.js from Scala API to Java API for page post-processing of badges
jekyll 3.8.6 | Error: No such file or directory rb_sysopen - ./api/scala/lib/jquery.js
```

**After:**

```
Making directory api/scala
cp -r ../target/scala-2.12/unidoc/. api/scala
Making directory api/java
cp -r ../target/javaunidoc/. api/java
Updating JavaDoc files for badge post-processing
Copying jquery.min.js from Scala API to Java API for page post-processing of badges
Copying api_javadocs.js to Java API for page post-processing of badges
Appending content of api-javadocs.css to JavaDoc stylesheet.css for badge styles
...
```

### How was this patch tested?

Manually tested via:

```
SKIP_PYTHONDOC=1 SKIP_RDOC=1 SKIP_SQLDOC=1 jekyll build
```

Closes #26228 from HyukjinKwon/SPARK-29569.

Authored-by: HyukjinKwon <[email protected]>

Signed-off-by: Xingbo Jiang <[email protected]>

… commands

### What changes were proposed in this pull request?

Add RefreshTableStatement and make REFRESH TABLE go through the same catalog/table resolution framework of v2 commands.

### Why are the changes needed?

It's important to make all the commands have the same table resolution behavior, to avoid confusing end-users. e.g.

```
USE my_catalog
DESC t // success and describe the table t from my_catalog
REFRESH TABLE t // report table not found as there is no table t in the session catalog
```

### Does this PR introduce any user-facing change?

Yes. When running REFRESH TABLE, Spark fails the command if the current catalog is set to a v2 catalog, or the table name specifies a v2 catalog.

### How was this patch tested?

New unit tests

Closes #26183 from imback82/refresh_table.

Lead-authored-by: Terry Kim <[email protected]>

Co-authored-by: Terry Kim <[email protected]>

Signed-off-by: Liang-Chi Hsieh <[email protected]>

…StructUnsafe

### What changes were proposed in this pull request?

There's a case where MapObjects has a lambda function that creates a nested struct: unsafe data inside a safe data struct. In this case, MapObjects doesn't copy the row returned from the lambda function (as the outermost data type is a safe data struct), so the nested unsafe data is not copied.

The culprit is that `UnsafeProjection.toUnsafeExprs` converts `CreateNamedStruct` to `CreateNamedStructUnsafe` (this is the only place where `CreateNamedStructUnsafe` is used), which temporarily mixes safe and unsafe data. This may not be needed at all, at least logically, as these evaluations are eventually assembled into an `UnsafeRow`.
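The aliasing problem described above can be reproduced in miniature. The following plain-Python sketch is an analogy only: `ReusableRowWriter` and `convert` are hypothetical stand-ins for a writer that reuses one buffer per row and for the per-element loop, not Spark classes.

```python
class ReusableRowWriter:
    """Stand-in for a writer that reuses one mutable buffer for every row."""

    def __init__(self):
        self._row = [None]

    def write(self, value):
        self._row[0] = value

    def get_row(self):
        # Returns the same object on every call, like a reused unsafe buffer.
        return self._row


def convert(values, fresh_row):
    writer = ReusableRowWriter()
    out = []
    for v in values:
        if fresh_row:
            # Build a new, independent row per element.
            row = [v]
        else:
            # Reuse the shared buffer; the outer "safe" list does not copy it.
            writer.write(v)
            row = writer.get_row()
        out.append([row])
    return out


aliased = convert([1, 2, 3], fresh_row=False)
independent = convert([1, 2, 3], fresh_row=True)
print(aliased)
print(independent)
```

With the shared buffer, every element ends up pointing at the last value written, which is the kind of corruption that a missing copy of nested data produces. Building a fresh row per element, loosely comparable to the `GenericInternalRow` in the generated code after the patch, avoids the aliasing.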

> Before the patch

```

/* 105 */ private ArrayData MapObjects_0(InternalRow i) {

/* 106 */ boolean isNull_1 = i.isNullAt(0);

/* 107 */ ArrayData value_1 = isNull_1 ?

/* 108 */ null : (i.getArray(0));

/* 109 */ ArrayData value_0 = null;

/* 110 */

/* 111 */ if (!isNull_1) {

/* 112 */

/* 113 */ int dataLength_0 = value_1.numElements();

/* 114 */

/* 115 */ ArrayData[] convertedArray_0 = null;

/* 116 */ convertedArray_0 = new ArrayData[dataLength_0];

/* 117 */

/* 118 */

/* 119 */ int loopIndex_0 = 0;

/* 120 */

/* 121 */ while (loopIndex_0 < dataLength_0) {

/* 122 */ value_MapObject_lambda_variable_1 = (int) (value_1.getInt(loopIndex_0));

/* 123 */ isNull_MapObject_lambda_variable_1 = value_1.isNullAt(loopIndex_0);

/* 124 */

/* 125 */ ArrayData arrayData_0 = ArrayData.allocateArrayData(

/* 126 */ -1, 1L, " createArray failed.");

/* 127 */

/* 128 */ mutableStateArray_0[0].reset();

/* 129 */

/* 130 */

/* 131 */ mutableStateArray_0[0].zeroOutNullBytes();

/* 132 */

/* 133 */

/* 134 */ if (isNull_MapObject_lambda_variable_1) {

/* 135 */ mutableStateArray_0[0].setNullAt(0);

/* 136 */ } else {

/* 137 */ mutableStateArray_0[0].write(0, value_MapObject_lambda_variable_1);

/* 138 */ }

/* 139 */ arrayData_0.update(0, (mutableStateArray_0[0].getRow()));

/* 140 */ if (false) {

/* 141 */ convertedArray_0[loopIndex_0] = null;

/* 142 */ } else {

/* 143 */ convertedArray_0[loopIndex_0] = arrayData_0 instanceof UnsafeArrayData? arrayData_0.copy() : arrayData_0;

/* 144 */ }

/* 145 */

/* 146 */ loopIndex_0 += 1;

/* 147 */ }

/* 148 */

/* 149 */ value_0 = new org.apache.spark.sql.catalyst.util.GenericArrayData(convertedArray_0);

/* 150 */ }

/* 151 */ globalIsNull_0 = isNull_1;

/* 152 */ return value_0;

/* 153 */ }

```

> After the patch

```

/* 104 */ private ArrayData MapObjects_0(InternalRow i) {

/* 105 */ boolean isNull_1 = i.isNullAt(0);

/* 106 */ ArrayData value_1 = isNull_1 ?

/* 107 */ null : (i.getArray(0));

/* 108 */ ArrayData value_0 = null;

/* 109 */

/* 110 */ if (!isNull_1) {

/* 111 */

/* 112 */ int dataLength_0 = value_1.numElements();

/* 113 */

/* 114 */ ArrayData[] convertedArray_0 = null;

/* 115 */ convertedArray_0 = new ArrayData[dataLength_0];

/* 116 */

/* 117 */

/* 118 */ int loopIndex_0 = 0;

/* 119 */

/* 120 */ while (loopIndex_0 < dataLength_0) {

/* 121 */ value_MapObject_lambda_variable_1 = (int) (value_1.getInt(loopIndex_0));

/* 122 */ isNull_MapObject_lambda_variable_1 = value_1.isNullAt(loopIndex_0);

/* 123 */

/* 124 */ ArrayData arrayData_0 = ArrayData.allocateArrayData(

/* 125 */ -1, 1L, " createArray failed.");

/* 126 */

/* 127 */ Object[] values_0 = new Object[1];

/* 128 */

/* 129 */

/* 130 */ if (isNull_MapObject_lambda_variable_1) {

/* 131 */ values_0[0] = null;

/* 132 */ } else {

/* 133 */ values_0[0] = value_MapObject_lambda_variable_1;

/* 134 */ }

/* 135 */

/* 136 */ final InternalRow value_3 = new org.apache.spark.sql.catalyst.expressions.GenericInternalRow(values_0);

/* 137 */ values_0 = null;

/* 138 */ arrayData_0.update(0, value_3);

/* 139 */ if (false) {

/* 140 */ convertedArray_0[loopIndex_0] = null;

/* 141 */ } else {

/* 142 */ convertedArray_0[loopIndex_0] = arrayData_0 instanceof UnsafeArrayData? arrayData_0.copy() : arrayData_0;

/* 143 */ }

/* 144 */

/* 145 */ loopIndex_0 += 1;

/* 146 */ }

/* 147 */

/* 148 */ value_0 = new org.apache.spark.sql.catalyst.util.GenericArrayData(convertedArray_0);

/* 149 */ }

/* 150 */ globalIsNull_0 = isNull_1;

/* 151 */ return value_0;

/* 152 */ }

```

### Why are the changes needed?

This patch fixes the bug described above.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

UT added which fails on master branch and passes on PR.

Closes #26173 from HeartSaVioR/SPARK-29503.

Authored-by: Jungtaek Lim (HeartSaVioR) <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

… creating new query stage and then can optimize the shuffle reader to local shuffle reader as much as possible ### What changes were proposed in this pull request? `OptimizeLocalShuffleReader` rule is very conservative and gives up optimization as long as there are extra shuffles introduced. It's very likely that most of the added local shuffle readers are fine and only one introduces extra shuffle. However, it's very hard to make `OptimizeLocalShuffleReader` optimal, a simple workaround is to run this rule again right before executing a query stage. ### Why are the changes needed? Optimize more shuffle reader to local shuffle reader. ### Does this PR introduce any user-facing change? No ### How was this patch tested? existing ut Closes #26207 from JkSelf/resolve-multi-joins-issue. Authored-by: jiake <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request? This proposes to update the dropwizard/codahale metrics library version used by Spark to `3.2.6`, which is the last version supporting Ganglia. ### Why are the changes needed? Spark is currently using Dropwizard metrics version 3.1.5, a version that is no longer actively developed or maintained, according to the project's GitHub repo README. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Existing tests + manual tests on a YARN cluster. Closes #26212 from LucaCanali/updateDropwizardVersion. Authored-by: Luca Canali <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request? This is a follow-up of #26189 to regenerate the result on EC2. ### Why are the changes needed? This will be used for the other PR reviews. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? N/A. Closes #26233 from dongjoon-hyun/SPARK-29533. Authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: DB Tsai <[email protected]>

### What changes were proposed in this pull request?

This PR updates JDBC Integration Test DBMS Docker Images.

| DBMS | Docker Image Tag | Release |
| ------ | ------------------ | ------ |
| MySQL | mysql:5.7.28 | Oct 13, 2019 |
| PostgreSQL | postgres:12.0-alpine | Oct 3, 2019 |

* For `MySQL`, `SET GLOBAL sql_mode = ''` is added to disable all strict modes because `test("Basic write test")` creates a table like the following. The latest MySQL rejects `0000-00-00 00:00:00` as TIMESTAMP and causes the test case failure.

```

mysql> desc datescopy;

+-------+-----------+------+-----+---------------------+-----------------------------+

| Field | Type | Null | Key | Default | Extra |

+-------+-----------+------+-----+---------------------+-----------------------------+

| d | date | YES | | NULL | |

| t | timestamp | NO | | CURRENT_TIMESTAMP | on update CURRENT_TIMESTAMP |

| dt | timestamp | NO | | 0000-00-00 00:00:00 | |

| ts | timestamp | NO | | 0000-00-00 00:00:00 | |

| yr | date | YES | | NULL | |

+-------+-----------+------+-----+---------------------+-----------------------------+

```

* For `PostgreSQL`, I chose the smallest image among the `12` releases. It reduces the image size a lot, `312MB` -> `72.8MB`. This is good for the CI/CD testing environment.

```

$ docker images | grep postgres

postgres 12.0-alpine 5b681acb1cfc 2 days ago 72.8MB

postgres 11.4 53912975086f 3 months ago 312MB

```

Note that

- For `MsSqlServer`, we are using `2017-GA-ubuntu`, and the next version `2019-CTP3.2-ubuntu` is still in `Community Technology Preview` status.

- For `DB2` and `Oracle`, the official images are not available.

### Why are the changes needed?

This is to make sure we are testing with the latest DBMS images while preparing `3.0.0`.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Since this is the integration test, we need to run this manually.

```

build/mvn install -DskipTests

build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 test

```

Closes #26224 from dongjoon-hyun/SPARK-29567.

Authored-by: Dongjoon Hyun <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…OutputStatus ### What changes were proposed in this pull request? Instead of using the ZStd codec directly, we use Spark's CompressionCodec, which wraps the ZStd codec in a buffered stream to avoid the excessive overhead of JNI calls when compressing/decompressing small amounts of data. Also, by using Spark's CompressionCodec, we can easily make it configurable in the future if needed. ### Why are the changes needed? Faster performance. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Existing tests. Closes #26235 from dbtsai/optimizeDeser. Lead-authored-by: DB Tsai <[email protected]> Co-authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>
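The effect of wrapping a codec in a buffered stream, as described in the change above, can be shown with a generic analogy. This sketch uses Python's standard `io.BufferedWriter` around a hypothetical `CountingRaw` sink whose `write` stands in for an expensive call (such as a JNI crossing); it does not use ZStd or Spark's `CompressionCodec`.

```python
import io


class CountingRaw(io.RawIOBase):
    """Raw sink that counts how often the (notionally expensive) write is called."""

    def __init__(self):
        super().__init__()
        self.calls = 0
        self.data = bytearray()

    def writable(self):
        return True

    def write(self, b):
        self.calls += 1
        self.data += bytes(b)
        return len(b)


# Unbuffered: every small write reaches the expensive sink.
raw = CountingRaw()
for _ in range(1000):
    raw.write(b"x")
direct_calls = raw.calls

# Buffered: small writes are collected and handed over in larger chunks.
raw2 = CountingRaw()
buffered = io.BufferedWriter(raw2, buffer_size=4096)
for _ in range(1000):
    buffered.write(b"x")
buffered.flush()
buffered_calls = raw2.calls

print(direct_calls, buffered_calls)
```

The same bytes arrive in both cases; only the number of calls into the underlying sink differs, which is where the saving comes from when each call carries a fixed overhead.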

### What changes were proposed in this pull request? This is a follow-up of #24052 to correct the assert condition. ### Why are the changes needed? To test the IllegalArgumentException condition. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Manual test (this issue was found while fixing SPARK-29453). Closes #26234 from 07ARB/SPARK-29571. Authored-by: 07ARB <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>

…ommands

### What changes were proposed in this pull request?

Add CacheTableStatement and make CACHE TABLE go through the same catalog/table resolution framework of v2 commands.

### Why are the changes needed?

It's important to make all the commands have the same table resolution behavior, to avoid confusing end-users. e.g.

```
USE my_catalog
DESC t // success and describe the table t from my_catalog
CACHE TABLE t // report table not found as there is no table t in the session catalog
```

### Does this PR introduce any user-facing change?

Yes. When running CACHE TABLE, Spark fails the command if the current catalog is set to a v2 catalog, or the table name specifies a v2 catalog.

### How was this patch tested?

Unit tests.

Closes #26179 from viirya/SPARK-29522.

Lead-authored-by: Liang-Chi Hsieh <[email protected]>

Co-authored-by: Liang-Chi Hsieh <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

…database style iterator ### What changes were proposed in this pull request? Reimplement the iterator in UnsafeExternalRowSorter in database style. This can be done by reusing the `RowIterator` in our code base. ### Why are the changes needed? During the work in #26164, after introducing a var `isReleased` in `hasNext`, it is possible that `isReleased` is false when calling `hasNext` but becomes true before calling `next`. A safer way is to use a database-style iterator: `advanceNext` and `getRow`. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? Existing UT. Closes #26229 from xuanyuanking/SPARK-21492-follow-up. Authored-by: Yuanjian Li <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>
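The difference between `hasNext`/`next` and the database-style `advanceNext`/`getRow` pair mentioned above can be sketched in plain Python. The class below is a hypothetical model (the method names are adapted to Python style, and `release` is an assumed cleanup hook); it is not the `RowIterator` from the Spark code base.

```python
class RowIterator:
    """Database-style iterator: advance_next() moves and reports success,
    get_row() returns the row captured by the last successful advance."""

    def __init__(self, rows):
        self._rows = list(rows)
        self._pos = -1
        self._current = None
        self._released = False

    def advance_next(self):
        if self._released or self._pos + 1 >= len(self._rows):
            self.release()
            self._current = None
            return False
        self._pos += 1
        # The row is captured here, in the same step that checks availability.
        self._current = self._rows[self._pos]
        return True

    def get_row(self):
        return self._current

    def release(self):
        # Assumed cleanup hook: drops the underlying storage.
        self._released = True
        self._rows = []


it = RowIterator(["a", "b"])
seen = []
while it.advance_next():
    seen.append(it.get_row())
print(seen)
```

Because the availability check and the fetch happen in one call, a release that occurs between `advance_next()` and `get_row()` cannot invalidate the row that was already captured, which is the gap that the separate `hasNext` and `next` calls leave open.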

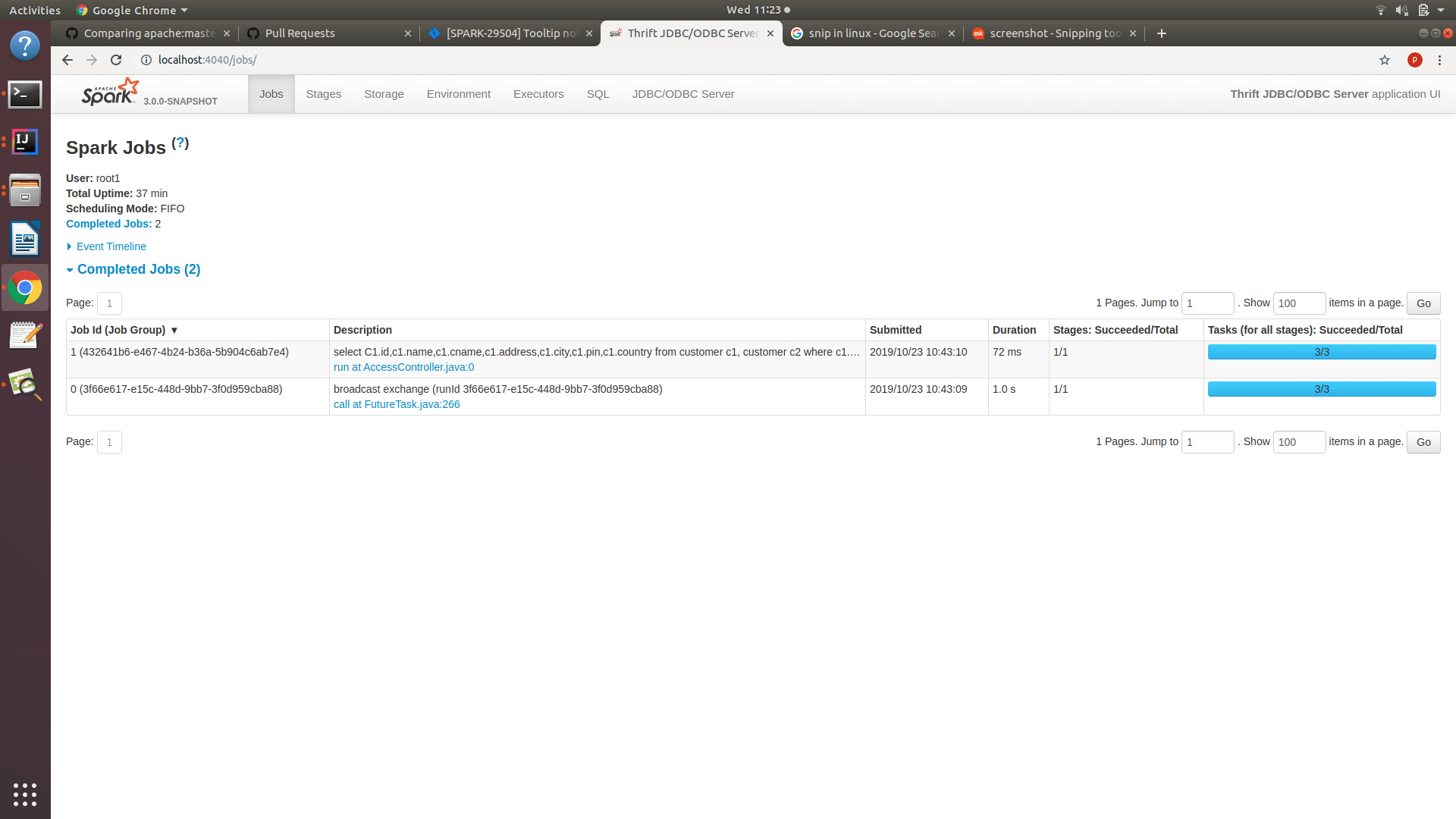

### What changes were proposed in this pull request? On clicking the job description in the jobs page, the description was not shown fully. Add the function for the click event on the description. ### Why are the changes needed? When there is a long description of a job, it cannot be seen fully in the UI. The feature was added in #24145 but was lost after #25374. Before change: After change: on double click over the description ### Does this PR introduce any user-facing change? No ### How was this patch tested? Manually tested. Closes #26222 from PavithraRamachandran/jobs_description_tooltip. Authored-by: Pavithra Ramachandran <[email protected]> Signed-off-by: Gengliang Wang <[email protected]>

### What changes were proposed in this pull request?

Support using IN/EXISTS with a subquery in a JOIN condition in Spark SQL.

### Why are the changes needed?

Support SQL that uses IN/EXISTS with a subquery in a JOIN condition.

### Does this PR introduce any user-facing change?

This PR enables users to use a subquery in a `JOIN`'s ON condition. For example, given three tables:

```
CREATE TABLE A(id String);
CREATE TABLE B(id String);
CREATE TABLE C(id String);
```

we can run a query like:

```
SELECT A.id from A JOIN B ON A.id = B.id and A.id IN (select C.id from C)
```

### How was this patch tested?

Added UT

Closes #25854 from AngersZhuuuu/SPARK-29145.

Lead-authored-by: angerszhu <[email protected]>

Co-authored-by: AngersZhuuuu <[email protected]>

Signed-off-by: Takeshi Yamamuro <[email protected]>
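To make the expected result of the IN-subquery join condition above concrete, the same query shape can be run against SQLite from Python's standard library. This is only a stand-in for illustration: it uses SQLite rather than Spark SQL, `TEXT` instead of `String`, and made-up sample rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE A(id TEXT);
    CREATE TABLE B(id TEXT);
    CREATE TABLE C(id TEXT);
    -- Sample rows are invented for this illustration.
    INSERT INTO A VALUES ('1'), ('2'), ('3');
    INSERT INTO B VALUES ('1'), ('2');
    INSERT INTO C VALUES ('2'), ('3');
    """
)

# Same shape as the query in the PR description: the IN subquery sits in ON.
rows = conn.execute(
    "SELECT A.id FROM A JOIN B ON A.id = B.id AND A.id IN (SELECT C.id FROM C)"
).fetchall()
print(rows)
conn.close()
```

Only ids that appear in all three tables survive: the equality keeps ids present in both `A` and `B`, and the subquery further restricts them to ids found in `C`.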

What changes were proposed in this pull request? Document ALTER TABLE statement in SQL Reference Guide. Why are the changes needed? Adding documentation for SQL reference. Does this PR introduce any user-facing change? Yes. Before: There was no documentation for this. After. How was this patch tested? Used jekyll build and serve to verify. Closes #25590 from PavithraRamachandran/alter_doc. Authored-by: Pavithra Ramachandran <[email protected]> Signed-off-by: Sean Owen <[email protected]>

### What changes were proposed in this pull request? Supports pagination for the SQL Statistics table in the JDBC/ODBC tab using the existing Spark pagination framework. ### Why are the changes needed? It will be easier for users to analyse the table, and it may fix potential issues like OOM while loading the page, which may occur similarly to the SQL page (refer to #22645). ### Does this PR introduce any user-facing change? There will be no change in the `SQLStatistics` table in the JDBC/ODBC server page except pagination support. ### How was this patch tested? Manually verified. Before PR: After PR: Closes #26215 from shahidki31/jdbcPagination. Authored-by: shahid <[email protected]> Signed-off-by: Sean Owen <[email protected]>

…timezone due to more recent timezone updates in later JDK 8 ### What changes were proposed in this pull request? Recent timezone definition changes in very new JDK 8 (and beyond) releases cause test failures. The below was observed on JDK 1.8.0_232. As before, the easy fix is to allow for these inconsequential variations in test results due to differing definitions of timezones. ### Why are the changes needed? Keeps tests passing on the latest JDK releases. ### Does this PR introduce any user-facing change? None ### How was this patch tested? Existing tests Closes #26236 from srowen/SPARK-29578. Authored-by: Sean Owen <[email protected]> Signed-off-by: Sean Owen <[email protected]>

### What changes were proposed in this pull request? Only use antlr4 to parse the interval string, and remove the duplicated parsing logic from `CalendarInterval`. ### Why are the changes needed? Simplify the code and fix inconsistent behaviors. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Pass the Jenkins with the updated test cases. Closes #26190 from cloud-fan/parser. Lead-authored-by: Wenchen Fan <[email protected]> Co-authored-by: Dongjoon Hyun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

…elds in json generating ### What changes were proposed in this pull request? Add a description for ignoreNullFields, which was committed in #26098, to DataFrameWriter and readwriter.py. Enable users to use ignoreNullFields in pyspark. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Ran unit tests. Closes #26227 from stczwd/json-generator-doc. Authored-by: stczwd <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>
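
As a rough illustration of what an option named ignoreNullFields implies for generated JSON, here is a minimal plain-Python sketch. It assumes the option simply omits fields whose value is null; it does not call Spark, it handles top-level fields only, and the helper name `to_json_line` is hypothetical.

```python
import json


def to_json_line(row, ignore_null_fields=True):
    """Serialize a dict to one JSON line, optionally dropping null fields.

    Hypothetical helper that mimics the assumed effect of the option;
    it is not Spark's JSON generator and only looks at top-level fields.
    """
    if ignore_null_fields:
        row = {key: value for key, value in row.items() if value is not None}
    return json.dumps(row)


if __name__ == "__main__":
    record = {"id": 1, "name": None}
    print(to_json_line(record, ignore_null_fields=True))
    print(to_json_line(record, ignore_null_fields=False))
```

With the flag enabled the `name` field is left out entirely; with it disabled the field is written with an explicit `null`.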

### What changes were proposed in this pull request? Remove the requirement of fetch_size>=0 from JDBCOptions to allow a negative fetch size. ### Why are the changes needed? Namely, to allow fetching data in a streaming manner (row-by-row fetch) from a MySQL database. ### Does this PR introduce any user-facing change? No ### How was this patch tested? Unit test (JDBCSuite) This closes #26230. Closes #26244 from fuwhu/SPARK-21287-FIX. Authored-by: fuwhu <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

… commands ### What changes were proposed in this pull request? Add UncacheTableStatement and make UNCACHE TABLE go through the same catalog/table resolution framework as v2 commands. ### Why are the changes needed? It's important to make all the commands have the same table resolution behavior, to avoid confusing end-users. e.g. ``` USE my_catalog DESC t // success and describe the table t from my_catalog UNCACHE TABLE t // report table not found as there is no table t in the session catalog ``` ### Does this PR introduce any user-facing change? Yes. When running UNCACHE TABLE, Spark fails the command if the current catalog is set to a v2 catalog, or if the table name specifies a v2 catalog. ### How was this patch tested? New unit tests Closes #26237 from imback82/uncache_table. Authored-by: Terry Kim <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

### What changes were proposed in this pull request? Track timing info for each rule in the optimization phase using `QueryPlanningTracker` in Structured Streaming. ### Why are the changes needed? In Structured Streaming we only track rule info in the analysis phase, not in the optimization phase. ### Does this PR introduce any user-facing change? No Closes #25914 from wenxuanguan/spark-29227. Authored-by: wenxuanguan <[email protected]> Signed-off-by: HyukjinKwon <[email protected]>
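
The idea of per-rule timing can be sketched in a few lines of Python. This is a hypothetical stand-in, not Spark's `QueryPlanningTracker` API: the class `RuleTimer`, its fields, and the toy "rule" are all assumptions made for illustration.

```python
import time
from collections import defaultdict


class RuleTimer:
    """Accumulate total run time and invocation count per rule name.

    Hypothetical sketch of per-rule tracking; not Spark's implementation.
    """

    def __init__(self):
        self.total_ns = defaultdict(int)
        self.invocations = defaultdict(int)

    def run(self, rule_name, rule, plan):
        # Time a single application of the rule to the plan.
        start = time.perf_counter_ns()
        try:
            return rule(plan)
        finally:
            self.total_ns[rule_name] += time.perf_counter_ns() - start
            self.invocations[rule_name] += 1


if __name__ == "__main__":
    timer = RuleTimer()
    # A string transformation stands in for an optimizer rule here.
    plan = timer.run("UpperCase", str.upper, "select 1")
    print(plan, timer.invocations["UpperCase"], timer.total_ns["UpperCase"])
```

Wrapping every rule application in one place is what makes it possible to report timings for a phase that was previously untracked.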

cozos pushed a commit that referenced this pull request Oct 26, 2019

### What changes were proposed in this pull request? `org.apache.spark.sql.kafka010.KafkaDelegationTokenSuite` has failed recently. The logs show only the following error, without any details: ``` Caused by: sbt.ForkMain$ForkError: sun.security.krb5.KrbException: Server not found in Kerberos database (7) - Server not found in Kerberos database ``` Since the issue is intermittent and cannot be reproduced, we should add more debug information and wait for it to recur with the extended logs. ### Why are the changes needed? The failing test doesn't give enough debug information. ### Does this PR introduce any user-facing change? No. ### How was this patch tested? I started the test manually and checked that additional debug messages like the following show up: ``` >>> KrbApReq: APOptions are 00000000 00000000 00000000 00000000 >>> EType: sun.security.krb5.internal.crypto.Aes128CtsHmacSha1EType Looking for keys for: kafka/localhostEXAMPLE.COM Added key: 17version: 0 Added key: 23version: 0 Added key: 16version: 0 Found unsupported keytype (3) for kafka/localhostEXAMPLE.COM >>> EType: sun.security.krb5.internal.crypto.Aes128CtsHmacSha1EType Using builtin default etypes for permitted_enctypes default etypes for permitted_enctypes: 17 16 23. >>> EType: sun.security.krb5.internal.crypto.Aes128CtsHmacSha1EType MemoryCache: add 1571936500/174770/16C565221B70AAB2BEFE31A83D13A2F4/client/localhostEXAMPLE.COM to client/localhostEXAMPLE.COM|kafka/localhostEXAMPLE.COM MemoryCache: Existing AuthList: #3: 1571936493/200803/8CD70D280B0862C5DA1FF901ECAD39FE/client/localhostEXAMPLE.COM #2: 1571936499/985009/BAD33290D079DD4E3579A8686EC326B7/client/localhostEXAMPLE.COM #1: 1571936499/995208/B76B9D78A9BE283AC78340157107FD40/client/localhostEXAMPLE.COM ``` Closes apache#26252 from gaborgsomogyi/SPARK-29580. Authored-by: Gabor Somogyi <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>
