
[SPARK-40283][INFRA] Make MiMa check default exclude private object and bump previousSparkVersion to 3.3.0

#37741




| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -34,8 +34,8 @@ import com.typesafe.tools.mima.core.ProblemFilters._ | |

| */ | ||

| object MimaExcludes { | ||

|

|

||

| // Exclude rules for 3.4.x | ||

| lazy val v34excludes = v33excludes ++ Seq( | ||

| // Exclude rules for 3.4.x from 3.3.0 | ||

| lazy val v34excludes = defaultExcludes ++ Seq( | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.ml.recommendation.ALS.checkedCast"), | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.ml.recommendation.ALSModel.checkedCast"), | ||

|

|

||

|

|

@@ -62,48 +62,34 @@ object MimaExcludes { | |

| ProblemFilters.exclude[IncompatibleMethTypeProblem]("org.apache.spark.deploy.DeployMessages#RequestExecutors.copy"), | ||

| ProblemFilters.exclude[IncompatibleResultTypeProblem]("org.apache.spark.deploy.DeployMessages#RequestExecutors.copy$default$2"), | ||

| ProblemFilters.exclude[IncompatibleMethTypeProblem]("org.apache.spark.deploy.DeployMessages#RequestExecutors.this"), | ||

| ProblemFilters.exclude[IncompatibleMethTypeProblem]("org.apache.spark.deploy.DeployMessages#RequestExecutors.apply") | ||

| ) | ||

|

|

||

| // Exclude rules for 3.3.x from 3.2.0 | ||

| lazy val v33excludes = v32excludes ++ Seq( | ||

| // [SPARK-35672][CORE][YARN] Pass user classpath entries to executors using config instead of command line | ||

| // The followings are necessary for Scala 2.13. | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.executor.CoarseGrainedExecutorBackend#Arguments.*"), | ||

| ProblemFilters.exclude[IncompatibleResultTypeProblem]("org.apache.spark.executor.CoarseGrainedExecutorBackend#Arguments.*"), | ||

| ProblemFilters.exclude[MissingTypesProblem]("org.apache.spark.executor.CoarseGrainedExecutorBackend$Arguments$"), | ||

| ProblemFilters.exclude[IncompatibleMethTypeProblem]("org.apache.spark.deploy.DeployMessages#RequestExecutors.apply"), | ||

LuciferYang marked this conversation as resolved.


|

||

|

|

||

| // [SPARK-37391][SQL] JdbcConnectionProvider tells if it modifies security context | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.sql.jdbc.JdbcConnectionProvider.modifiesSecurityContext"), | ||

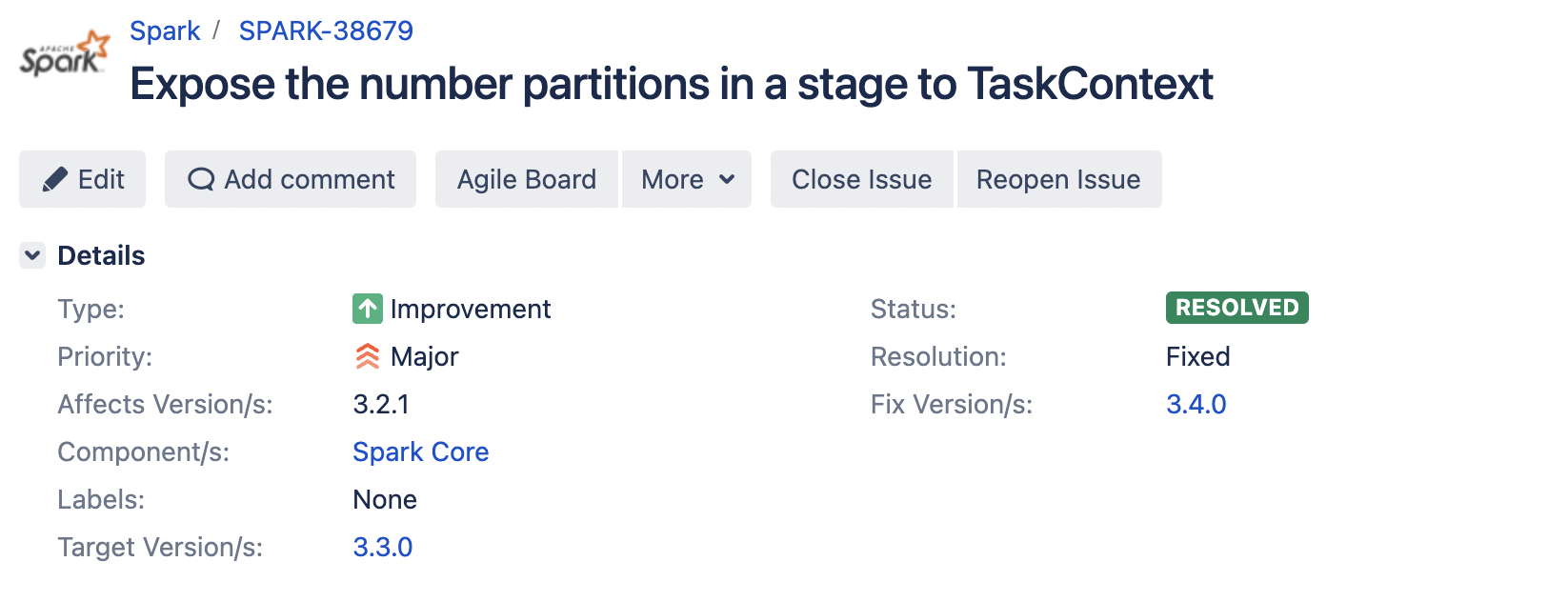

| // [SPARK-38679][CORE] Expose the number of partitions in a stage to TaskContext | ||

|

Contributor

Author

It was placed in cc @vkorukanti and @cloud-fan SPARK-38679:

||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.TaskContext.numPartitions"), | ||

|

|

||

| // [SPARK-37780][SQL] QueryExecutionListener support SQLConf as constructor parameter | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.sql.util.ExecutionListenerManager.this"), | ||

| // [SPARK-37786][SQL] StreamingQueryListener support use SQLConf.get to get corresponding SessionState's SQLConf | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.sql.streaming.StreamingQueryManager.this"), | ||

| // [SPARK-38432][SQL] Reactor framework so as JDBC dialect could compile filter by self way | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.sql.sources.Filter.toV2"), | ||

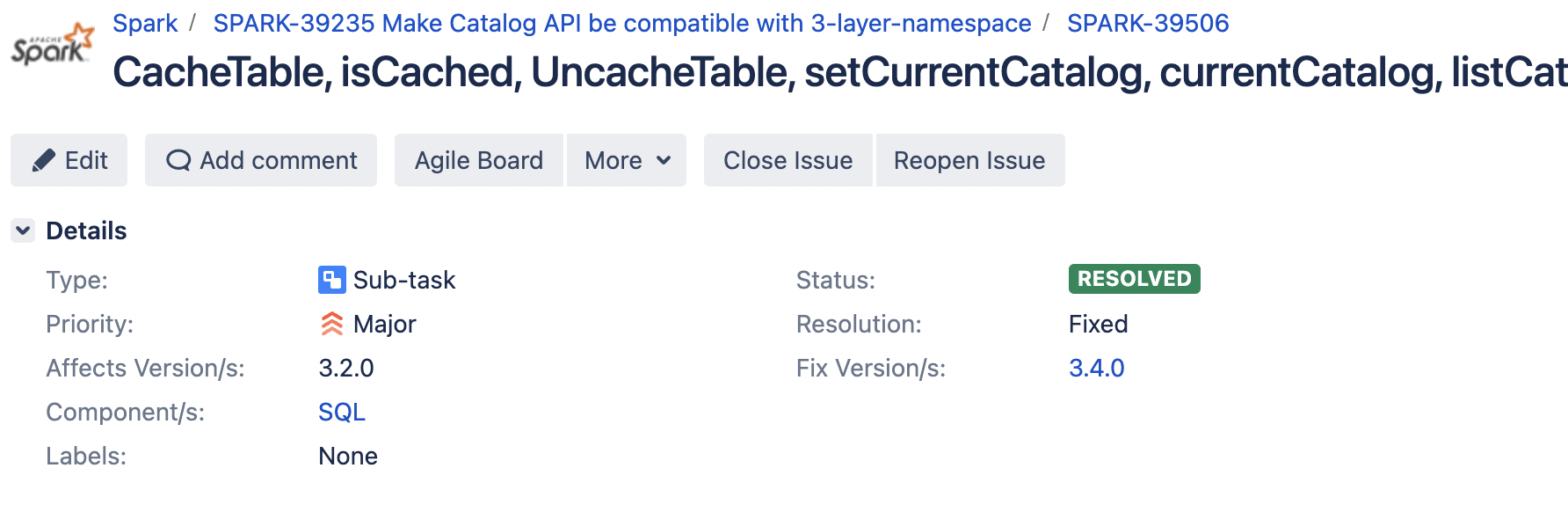

| // [SPARK-39506] In terms of 3 layer namespace effort, add currentCatalog, setCurrentCatalog and listCatalogs API to Catalog interface | ||

|

Contributor

Author

It was placed in cc @amaliujia and @cloud-fan for SPARK-39506

||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.sql.catalog.Catalog.currentCatalog"), | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.sql.catalog.Catalog.setCurrentCatalog"), | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.sql.catalog.Catalog.listCatalogs"), | ||

|

|

||

| // [SPARK-37831][CORE] Add task partition id in TaskInfo and Task Metrics | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.status.api.v1.TaskData.this"), | ||

| // [SPARK-39704][SQL] Implement createIndex & dropIndex & indexExists in JDBC (H2 dialect) | ||

|

Contributor

they should not be checked by mima... Does mima have a list to mark private APIs?

Contributor

Author

Sure? we can add

Contributor

Author

c2d9f37 add

||

| ProblemFilters.exclude[IncompatibleMethTypeProblem]("org.apache.spark.sql.jdbc.JdbcDialect.createIndex"), | ||

| ProblemFilters.exclude[IncompatibleMethTypeProblem]("org.apache.spark.sql.jdbc.JdbcDialect.dropIndex"), | ||

| ProblemFilters.exclude[IncompatibleMethTypeProblem]("org.apache.spark.sql.jdbc.JdbcDialect.indexExists"), | ||

|

|

||

| // [SPARK-37600][BUILD] Upgrade to Hadoop 3.3.2 | ||

| ProblemFilters.exclude[MissingClassProblem]("org.apache.hadoop.shaded.net.jpountz.lz4.LZ4Compressor"), | ||

| ProblemFilters.exclude[MissingClassProblem]("org.apache.hadoop.shaded.net.jpountz.lz4.LZ4Factory"), | ||

| ProblemFilters.exclude[MissingClassProblem]("org.apache.hadoop.shaded.net.jpountz.lz4.LZ4SafeDecompressor"), | ||

| // [SPARK-39759][SQL] Implement listIndexes in JDBC (H2 dialect) | ||

|


||

| ProblemFilters.exclude[IncompatibleMethTypeProblem]("org.apache.spark.sql.jdbc.JdbcDialect.listIndexes"), | ||

|

|

||

| // [SPARK-37377][SQL] Initial implementation of Storage-Partitioned Join | ||

| ProblemFilters.exclude[MissingClassProblem]("org.apache.spark.sql.connector.read.partitioning.ClusteredDistribution"), | ||

| ProblemFilters.exclude[MissingClassProblem]("org.apache.spark.sql.connector.read.partitioning.Distribution"), | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.sql.connector.read.partitioning.Partitioning.*"), | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.sql.connector.read.partitioning.Partitioning.*"), | ||

| // [SPARK-38929][SQL] Improve error messages for cast failures in ANSI | ||

| ProblemFilters.exclude[IncompatibleMethTypeProblem]("org.apache.spark.sql.types.Decimal.fromStringANSI"), | ||

| ProblemFilters.exclude[IncompatibleResultTypeProblem]("org.apache.spark.sql.types.Decimal.fromStringANSI$default$3"), | ||

|

|

||

| // [SPARK-38908][SQL] Provide query context in runtime error of Casting from String to | ||

| // Number/Date/Timestamp/Boolean | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.sql.types.Decimal.fromStringANSI") | ||

| // [SPARK-36511][MINOR][SQL] Remove ColumnIOUtil | ||

| ProblemFilters.exclude[MissingClassProblem]("org.apache.parquet.io.ColumnIOUtil") | ||

|


||

| ) | ||

|

|

||

| // Exclude rules for 3.2.x from 3.1.1 | ||

| lazy val v32excludes = Seq( | ||

| // Default exclude rules | ||

| lazy val defaultExcludes = Seq( | ||

|

Contributor

Author

I keep as

Member

Looks good to me.

||

| // Spark Internals | ||

| ProblemFilters.exclude[Problem]("org.apache.spark.rpc.*"), | ||

| ProblemFilters.exclude[Problem]("org.spark-project.jetty.*"), | ||

|

|

@@ -124,73 +110,15 @@ object MimaExcludes { | |

| // Avro source implementation is internal. | ||

| ProblemFilters.exclude[Problem]("org.apache.spark.sql.v2.avro.*"), | ||

|

|

||

| // [SPARK-34848][CORE] Add duration to TaskMetricDistributions | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.status.api.v1.TaskMetricDistributions.this"), | ||

|

|

||

| // [SPARK-34488][CORE] Support task Metrics Distributions and executor Metrics Distributions | ||

| // in the REST API call for a specified stage | ||

| ProblemFilters.exclude[MissingMethodProblem]("org.apache.spark.status.api.v1.StageData.this"), | ||

|

|

||

| // [SPARK-36173][CORE] Support getting CPU number in TaskContext | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.TaskContext.cpus"), | ||

|

|

||

| // [SPARK-38679][CORE] Expose the number of partitions in a stage to TaskContext | ||

|

Contributor

Author

wrong place, should in

||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.TaskContext.numPartitions"), | ||

|

|

||

| // [SPARK-35896] Include more granular metrics for stateful operators in StreamingQueryProgress | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.sql.streaming.StateOperatorProgress.this"), | ||

|

|

||

| (problem: Problem) => problem match { | ||

| case MissingClassProblem(cls) => !cls.fullName.startsWith("org.sparkproject.jpmml") && | ||

| !cls.fullName.startsWith("org.sparkproject.dmg.pmml") | ||

| case _ => true | ||

| }, | ||

|

|

||

| // [SPARK-33808][SQL] DataSource V2: Build logical writes in the optimizer | ||

| ProblemFilters.exclude[MissingClassProblem]("org.apache.spark.sql.connector.write.V1WriteBuilder"), | ||

|

|

||

| // [SPARK-33955] Add latest offsets to source progress | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.sql.streaming.SourceProgress.this"), | ||

|

|

||

| // [SPARK-34862][SQL] Support nested column in ORC vectorized reader | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getBoolean"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getByte"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getShort"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getInt"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getLong"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getFloat"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getDouble"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getDecimal"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getUTF8String"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getBinary"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getArray"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getMap"), | ||

| ProblemFilters.exclude[DirectAbstractMethodProblem]("org.apache.spark.sql.vectorized.ColumnVector.getChild"), | ||

|

|

||

| // [SPARK-35135][CORE] Turn WritablePartitionedIterator from trait into a default implementation class | ||

| ProblemFilters.exclude[IncompatibleTemplateDefProblem]("org.apache.spark.util.collection.WritablePartitionedIterator"), | ||

|

|

||

| // [SPARK-35757][CORE] Add bitwise AND operation and functionality for intersecting bloom filters | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.util.sketch.BloomFilter.intersectInPlace"), | ||

|

|

||

| // [SPARK-35276][CORE] Calculate checksum for shuffle data and write as checksum file | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.shuffle.sort.io.LocalDiskShuffleMapOutputWriter.commitAllPartitions"), | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.shuffle.sort.io.LocalDiskSingleSpillMapOutputWriter.transferMapSpillFile"), | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.shuffle.api.ShuffleMapOutputWriter.commitAllPartitions"), | ||

| ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.shuffle.api.SingleSpillShuffleMapOutputWriter.transferMapSpillFile"), | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.shuffle.api.SingleSpillShuffleMapOutputWriter.transferMapSpillFile"), | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.shuffle.api.ShuffleMapOutputWriter.commitAllPartitions"), | ||

|

|

||

| // [SPARK-39506] In terms of 3 layer namespace effort, add currentCatalog, setCurrentCatalog and listCatalogs API to Catalog interface | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.sql.catalog.Catalog.currentCatalog"), | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.sql.catalog.Catalog.setCurrentCatalog"), | ||

| ProblemFilters.exclude[ReversedMissingMethodProblem]("org.apache.spark.sql.catalog.Catalog.listCatalogs") | ||

| } | ||

| ) | ||

|

|

||

| def excludes(version: String) = version match { | ||

| case v if v.startsWith("3.4") => v34excludes | ||

| case v if v.startsWith("3.3") => v33excludes | ||

| case v if v.startsWith("3.2") => v32excludes | ||

| case _ => Seq() | ||

| } | ||

| } | ||
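For readers following the restructuring, here is a minimal, dependency-free sketch of how the per-version exclude lists compose after this change. It is not the code from this PR: `MimaExcludesSketch`, the `Filter` alias, the simplified `exclude` helper and `isReported` are hypothetical stand-ins for MiMa's `ProblemFilters`, and the trailing `.*` handling is a simplification of MiMa's real pattern matching. It assumes, as the custom filter in the diff suggests, that a filter returns `true` to keep reporting a problem and `false` to suppress it, and that versions other than 3.4.x fall through to an empty list.

```scala
// Hypothetical stand-in for MimaExcludes, runnable without the MiMa dependency.
object MimaExcludesSketch {
  // Assumed convention: true = keep reporting the problem, false = exclude it.
  type Filter = String => Boolean

  // Simplified exclude: a trailing ".*" is treated as a prefix wildcard,
  // anything else must match the fully qualified name exactly.
  def exclude(pattern: String): Filter = {
    if (pattern.endsWith(".*")) {
      val prefix = pattern.dropRight(1) // keeps the trailing dot, e.g. "org.apache.spark.rpc."
      (name: String) => !name.startsWith(prefix)
    } else {
      (name: String) => name != pattern
    }
  }

  // Rules that apply to every version (internal or unstable packages).
  lazy val defaultExcludes: Seq[Filter] = Seq(
    exclude("org.apache.spark.rpc.*"),
    exclude("org.apache.spark.sql.v2.avro.*")
  )

  // Rules for 3.4.x, checked against 3.3.0, layered on top of the defaults.
  lazy val v34excludes: Seq[Filter] = defaultExcludes ++ Seq(
    exclude("org.apache.spark.ml.recommendation.ALS.checkedCast")
  )

  def excludes(version: String): Seq[Filter] = version match {
    case v if v.startsWith("3.4") => v34excludes
    case _ => Seq()
  }

  // A problem is reported only if every filter for the version keeps it.
  def isReported(version: String, name: String): Boolean =
    excludes(version).forall(f => f(name))
}
```

With this shape, bumping the previous version only requires starting a new `vNNexcludes` list from `defaultExcludes`, instead of chaining through every older list.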
