-

Notifications

You must be signed in to change notification settings - Fork 28.9k

revert [SPARK-21743][SQL] top-most limit should not cause memory leak #21573

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Hmm, this LGTM, however it didn't catch up 2.3.1 release. |

|

I take a look at Note: I see. Though |

|

Test build #91877 has finished for PR 21573 at commit

|

|

LGTM - Merging to 2.3. Thanks! What is the process for master? |

## What changes were proposed in this pull request?

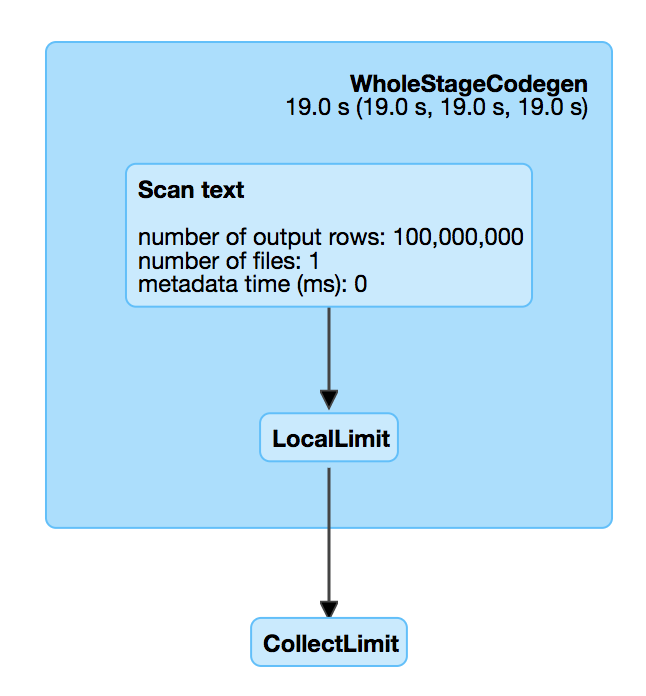

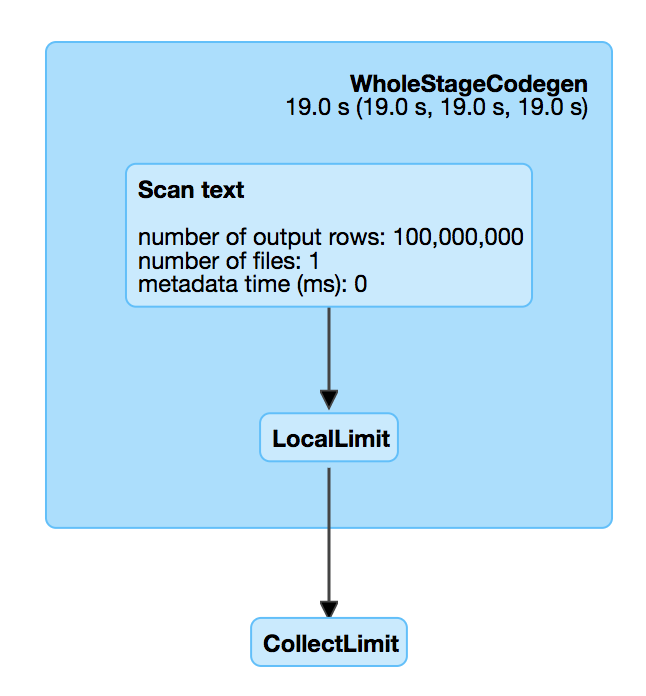

There is a performance regression in Spark 2.3. When we read a big compressed text file which is un-splittable(e.g. gz), and then take the first record, Spark will scan all the data in the text file which is very slow. For example, `spark.read.text("/tmp/test.csv.gz").head(1)`, we can check out the SQL UI and see that the file is fully scanned.

This is introduced by #18955 , which adds a LocalLimit to the query when executing `Dataset.head`. The foundamental problem is, `Limit` is not well whole-stage-codegened. It keeps consuming the input even if we have already hit the limitation.

However, if we just fix LIMIT whole-stage-codegen, the memory leak test will fail, as we don't fully consume the inputs to trigger the resource cleanup.

To fix it completely, we should do the following

1. fix LIMIT whole-stage-codegen, stop consuming inputs after hitting the limitation.

2. in whole-stage-codegen, provide a way to release resource of the parant operator, and apply it in LIMIT

3. automatically release resource when task ends.

Howere this is a non-trivial change, and is risky to backport to Spark 2.3.

This PR proposes to revert #18955 in Spark 2.3. The memory leak is not a big issue. When task ends, Spark will release all the pages allocated by this task, which is kind of releasing most of the resources.

I'll submit a exhaustive fix to master later.

## How was this patch tested?

N/A

Author: Wenchen Fan <[email protected]>

Closes #21573 from cloud-fan/limit.

|

Ya, that's too bad to miss this in 2.3.1. |

|

I'll send one or more PRs to master for the following things

|

## What changes were proposed in this pull request? This is the first follow-up of #21573 , which was only merged to 2.3. This PR fixes the memory leak in another way: free the `UnsafeExternalMap` when the task ends. All the data buffers in Spark SQL are using `UnsafeExternalMap` and `UnsafeExternalSorter` under the hood, e.g. sort, aggregate, window, SMJ, etc. `UnsafeExternalSorter` registers a task completion listener to free the resource, we should apply the same thing to `UnsafeExternalMap`. TODO in the next PR: do not consume all the inputs when having limit in whole stage codegen. ## How was this patch tested? existing tests Author: Wenchen Fan <[email protected]> Closes #21738 from cloud-fan/limit.

There is a performance regression in Spark 2.3. When we read a big compressed text file which is un-splittable(e.g. gz), and then take the first record, Spark will scan all the data in the text file which is very slow. For example, `spark.read.text("/tmp/test.csv.gz").head(1)`, we can check out the SQL UI and see that the file is fully scanned.

This is introduced by apache#18955 , which adds a LocalLimit to the query when executing `Dataset.head`. The foundamental problem is, `Limit` is not well whole-stage-codegened. It keeps consuming the input even if we have already hit the limitation.

However, if we just fix LIMIT whole-stage-codegen, the memory leak test will fail, as we don't fully consume the inputs to trigger the resource cleanup.

To fix it completely, we should do the following

1. fix LIMIT whole-stage-codegen, stop consuming inputs after hitting the limitation.

2. in whole-stage-codegen, provide a way to release resource of the parant operator, and apply it in LIMIT

3. automatically release resource when task ends.

Howere this is a non-trivial change, and is risky to backport to Spark 2.3.

This PR proposes to revert apache#18955 in Spark 2.3. The memory leak is not a big issue. When task ends, Spark will release all the pages allocated by this task, which is kind of releasing most of the resources.

I'll submit a exhaustive fix to master later.

N/A

Author: Wenchen Fan <[email protected]>

Closes apache#21573 from cloud-fan/limit.

(cherry picked from commit d3255a5)

RB=1435935

BUG=LIHADOOP-40677

G=superfriends-reviewers

R=fli,mshen,yezhou,edlu

A=yezhou

What changes were proposed in this pull request?

There is a performance regression in Spark 2.3. When we read a big compressed text file which is un-splittable(e.g. gz), and then take the first record, Spark will scan all the data in the text file which is very slow. For example,

spark.read.text("/tmp/test.csv.gz").head(1), we can check out the SQL UI and see that the file is fully scanned.This is introduced by #18955 , which adds a LocalLimit to the query when executing

Dataset.head. The foundamental problem is,Limitis not well whole-stage-codegened. It keeps consuming the input even if we have already hit the limitation.However, if we just fix LIMIT whole-stage-codegen, the memory leak test will fail, as we don't fully consume the inputs to trigger the resource cleanup.

To fix it completely, we should do the following

Howere this is a non-trivial change, and is risky to backport to Spark 2.3.

This PR proposes to revert #18955 in Spark 2.3. The memory leak is not a big issue. When task ends, Spark will release all the pages allocated by this task, which is kind of releasing most of the resources.

I'll submit a exhaustive fix to master later.

How was this patch tested?

N/A