feat(lib-storage): improve performance by reducing buffer copies #2612

Codecov Report

@@ Coverage Diff @@

## main #2612 +/- ##

===========================================

- Coverage 75.19% 60.67% -14.53%

===========================================

Files 474 516 +42

Lines 20721 27808 +7087

Branches 4755 6832 +2077

===========================================

+ Hits 15581 16872 +1291

- Misses 5140 10936 +5796

I've added two extra changes. They're technically not from lib-storage, but I felt that they're of the same spirit as my other changes so I thought that it made sense to put them here, instead of spamming PRs. Please tell me if you'd prefer a separate PR.

@AllanZhengYP Hi, I'm pinging you because you've also replied in my other PR. Is there anything I need to do to get this PR rolling? Am I missing something, or are there any changes I need to make?

I've added an additional tweak for |

@AllanZhengYP Hey, could you post an update on this? Why is it stuck? This performance impact is affecting our team as well.

Hey, any updates regarding this PR? Is there anything that's missing, or something else that's blocking this PR? I'd be happy to make any modifications that are needed.

Reduce memory usage by reducing buffer-copying and returning the original buffer instead.

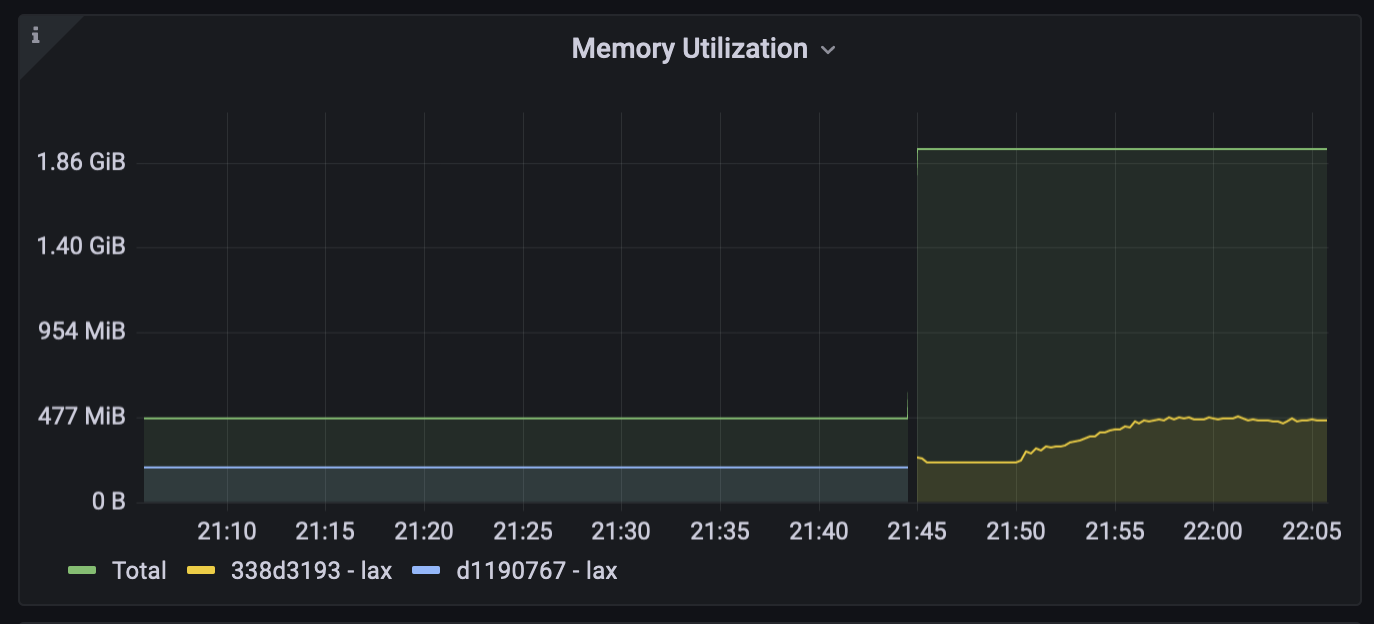

@Linkgoron hey! I've been dealing with OOM errors when bulk uploading using the I assume you were running into memory issues when using this library as well? Honestly shocked I don't see more issues pop up about the steady memory increase over time until bulk uploading is complete. I wrote an issue where I assume it has something to do with backpressure or the buffers not being released by GC quickly enough, but honestly I'm not experienced in this realm of Node at all. Here's my issue ticket: #4257

I didn't actually have any memory issues, but when I created a different PR I noticed how many copies there were. This PR is a bit old now, so I might not remember correctly, but I believe that every buffer is copied at least twice and in some cases three times. I'd be happy to put this PR back into a mergeable state if the team has interest in it.

Got it - yea, turns out my issue was not due to a leak but the fact that this package takes up around 500MB before stabilizing when bulk uploading. Because my staging sites were using 512MB, that was enough to make it look like memory was constantly increasing, which crashed my server. Increasing the memory to >512MB solved that. I'm trying to bring more attention to your PR as it would be great to update this and eventually have it merged!!

Due to package migration, moved the work into new PRs.

Description

This PR reduces the number of buffers copied and created from existing buffers. The most significant change was made to streams that return buffers and Uint8Arrays, but some minor improvements were made for `string` and `Uint8Array` bodies as well. The following changes were made:

- `getDataReadable.ts` and `getDataReadableStream.ts` (probably the most significant change).
- In `byteLength.ts`, use `Buffer.byteLength(string)` instead of `Buffer.from(string).byteLength` for computing the string byteLength, so that the string is not copied into a buffer just for its byteLength.
- Treat `Uint8Array` the same as a Buffer in `chunker.ts`.
- Use `subarray` instead of `slice` in `getChunkBuffer.ts` so that `Uint8Array` and `Buffer` inputs behave the same. In Node, `.slice` on a Uint8Array copies the data, while `.subarray` just takes the subarray without copying. Slice and subarray for Buffers in Node are the same (neither copies the data).
- `getChunkStream.ts` was changed in a similar way to `getChunkBuffer.ts`, and a change was also added so that the last buffer is not copied if there's only one buffer left (this last change is probably relatively minor).

Testing

I ran the following file for different values:

results

Original code:

times 1

rss 59.67 MB

heapTotal 15.68 MB

heapUsed 12.78 MB

external 30.91 MB

arrayBuffers 30.02 MB

timing: 17.289ms

times 2

rss 79.71 MB

heapTotal 15.68 MB

heapUsed 12.82 MB

external 50.91 MB

arrayBuffers 50.02 MB

timing: 26.743ms

times 10

rss 173.15 MB

heapTotal 7.18 MB

heapUsed 2.3 MB

external 90.91 MB

arrayBuffers 90.02 MB

timing: 90.863ms

New:

times 1

rss 49.52 MB

heapTotal 15.68 MB

heapUsed 12.78 MB

external 20.91 MB

arrayBuffers 20.02 MB

timing: 13.046ms

times 2

rss 59.64 MB

heapTotal 15.43 MB

heapUsed 12.8 MB

external 30.91 MB

arrayBuffers 30.02 MB

timing: 17.994ms

times 10

rss 129.96 MB

heapTotal 7.18 MB

heapUsed 2.32 MB

external 60.91 MB

arrayBuffers 60.02 MB

timing: 59.366ms

Note that I ran the above multiple times and the results were essentially the same, but I didn't do a z-test or anything. If there are any benchmarking tools I can use to show that the results are significant, I'd be happy to use them. From my testing, the new chunking was faster and used less memory when chunking a stream of a 10MB buffer with 1MB chunks. I also checked whether performance degraded for streams that return strings instead of buffers, but it was essentially the same.
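For readers who want to see why the chunking numbers can drop, here is a minimal sketch of the two copy-avoidance ideas described in this PR: taking views with `subarray` instead of copying with `slice`, and skipping concatenation when only one buffer is left. The helper names `chunkViews` and `joinBuffers` are hypothetical and are not taken from the lib-storage source; this only illustrates the Node.js behaviour the change relies on.

```typescript
import { Buffer } from "node:buffer";

// Hypothetical helper (not from the PR): split a byte array into fixed-size views.
function chunkViews(data: Uint8Array, chunkSize: number): Uint8Array[] {
  const chunks: Uint8Array[] = [];
  for (let start = 0; start < data.byteLength; start += chunkSize) {
    const end = Math.min(start + chunkSize, data.byteLength);
    // subarray() returns a view over the same ArrayBuffer, so no bytes are copied.
    chunks.push(data.subarray(start, end));
  }
  return chunks;
}

// Hypothetical helper (not from the PR): only concatenate when there is more than one buffer.
function joinBuffers(buffers: Uint8Array[]): Uint8Array {
  // Buffer.concat always allocates a new Buffer, even for a single-element list.
  return buffers.length === 1 ? buffers[0] : Buffer.concat(buffers);
}

const source = new Uint8Array([1, 2, 3, 4, 5]);
const views = chunkViews(source, 2);
const copy = source.slice(0, 2); // Uint8Array.prototype.slice copies the bytes

source[0] = 42;
console.log(views[0][0]); // 42: the view shares memory with the source
console.log(copy[0]); // 1: the copy is independent of the source
console.log(joinBuffers([views[2]]) === views[2]); // true: the single buffer is returned as-is
```

The trade-off of returning views is that callers share memory with the original buffer, so a later write to the source is visible through every chunk.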

For byteLength, I executed a similar script that called `byteLength` 10 times with 10MB and 1MB strings and also saw a significant memory reduction.

results

10MB strings

original code

times 10

rss 129.17 MB

heapTotal 14.93 MB

heapUsed 12.1 MB

external 40.93 MB

arrayBuffers 40.01 MB

timing: 90.462ms

new code

times 10

rss 29.49 MB

heapTotal 15.43 MB

heapUsed 12.66 MB

external 0.93 MB

arrayBuffers 0.01 MB

timing: 26.115ms

1MB strings

original code

times 10

rss 30.39 MB

heapTotal 6.68 MB

heapUsed 3.66 MB

external 0.93 MB

arrayBuffers 0.01 MB

timing: 13.741ms

new code

times 10

rss 20.36 MB

heapTotal 6.68 MB

heapUsed 3.67 MB

external 0.93 MB

arrayBuffers 0.01 MB

timing: 7.668ms
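The byteLength measurements above come down to one substitution. As a rough sketch (the wrapper names `byteLengthViaCopy` and `byteLengthDirect` are hypothetical, not the actual contents of `byteLength.ts`), both approaches return the same UTF-8 byte count, but only the first allocates a Buffer holding the whole string:

```typescript
import { Buffer } from "node:buffer";

// Hypothetical wrapper: encodes the whole string into a new Buffer just to read its length.
function byteLengthViaCopy(text: string): number {
  return Buffer.from(text).byteLength;
}

// Hypothetical wrapper: asks Node for the encoded length without creating a Buffer.
function byteLengthDirect(text: string): number {
  return Buffer.byteLength(text);
}

// Escapes are used so the sample is unambiguous: "héllo wörld €".
const sample = "h\u00e9llo w\u00f6rld \u20ac";

console.log(sample.length); // 13 UTF-16 code units
console.log(byteLengthViaCopy(sample)); // 17 bytes in UTF-8
console.log(byteLengthDirect(sample)); // 17 bytes in UTF-8
```

Both calls default to UTF-8, so the result is unchanged; the difference is only in the temporary allocation, which matches the drop in `external` and `arrayBuffers` reported for the 10MB strings.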

I did not check `Uint8Array` buffers or streams (i.e. streams that return such chunks), but I expect similar performance improvements to those above. If necessary, I'll check those as well.

Additional context

If the general direction of this PR is OK, I'd be happy to add more (regression) tests for Uint8Array buffers and streams and some other edge cases. Those tests currently don't exist, so if I did mess something up with such inputs, nothing would show it.

Also, if there's some benchmark tooling that I missed, I'd be happy to add benchmarks.

By submitting this pull request, I confirm that you can use, modify, copy, and redistribute this contribution, under the terms of your choice.