Description

Checkboxes for prior research

- I've gone through Developer Guide and API reference

- I've checked AWS Forums and StackOverflow.

- I've searched for previous similar issues and didn't find any solution.

Describe the bug

Hi! I am building a bulk upload queue microservice that uses the @aws-sdk/lib-storage library. When testing my service (uploading queued files back to back until the queue is empty), I discovered that memory usage increases over time and eventually leads to OOM exceptions if the upload queue is large enough. I believe this has to do with the package converting the stream into buffer chunks, possibly resulting in backpressure, or Node.js not releasing these buffers via garbage collection fast enough.

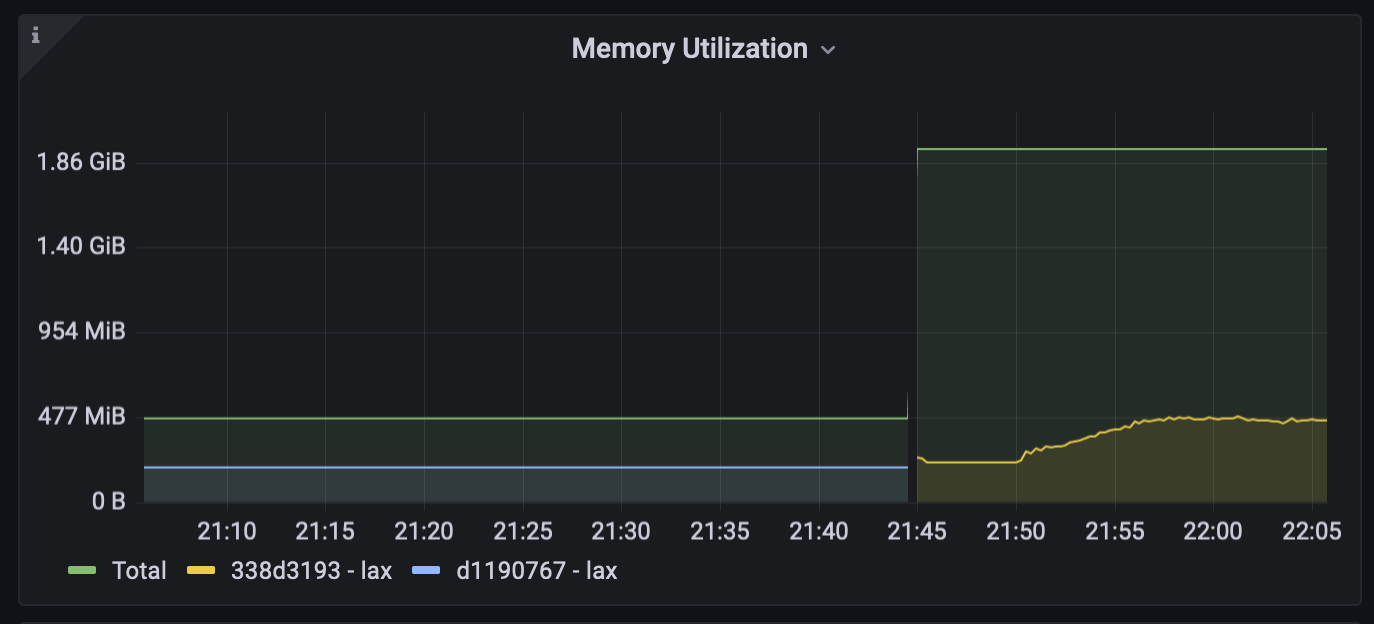

For example, here's a memory log that shows two scenarios:

1. I added enough files that memory usage grew over time and crashed my service.

2. I added only enough files to show memory increasing without crashing my service. As you can see, the memory usage decreases slowly after the uploads finish (it eventually returns to its initial state).

Bear with me here, as I'm taking a deep dive into memory and streams while debugging this problem. Since the memory in scenario 2 does not stay at its elevated level and decreases back to its normal(ish) state, I believe we can deduce that this is a backpressure issue: the chunks loaded into buffers cannot be uploaded as fast as they are generated, and thus cannot be deallocated fast enough?
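To make the backpressure hypothesis concrete, here is a minimal sketch of the signal Node.js streams use for it. It does not involve the SDK at all: `slowSink` is a hypothetical stand-in for an uploader that has not yet finished sending a part, and the 16-byte `highWaterMark` is chosen only to keep the numbers small.

```typescript
import { Writable } from "node:stream";

// Hypothetical slow consumer: it receives a chunk but never acknowledges it,
// mimicking an upload that is still in flight.
const slowSink = new Writable({
  highWaterMark: 16, // bytes the sink is willing to buffer before pushing back
  write(_chunk, _encoding, _callback) {
    // Intentionally not calling _callback in this sketch.
  },
});

const chunk = Buffer.alloc(8);

// write() returns false once the buffered byte count reaches highWaterMark.
// A well-behaved producer stops writing at that point and waits for "drain".
const accepted = [
  slowSink.write(chunk),
  slowSink.write(chunk),
  slowSink.write(chunk),
];

console.log("write() results:", accepted);
console.log("bytes held by the sink:", slowSink.writableLength);
```

If a producer ignores the `false` return value and keeps writing, the bytes held by the sink keep growing, which is the kind of growth described above. Whether that is what happens inside the library here is not established by this sketch.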

Honestly I'm a bit surprised I couldn't find many issues stemming from this problem so I feel like maybe I'm doing something wrong?

SDK version number

@aws-sdk/[email protected]

Which JavaScript Runtime is this issue in?

Node.js

Details of the browser/Node.js/ReactNative version

v17.9.1

Reproduction Steps

I simplified my microservice code to read a file from S3 and then immediately use the Upload class from @aws-sdk/lib-storage to upload the file back into storage. Example code below:

    // handler.ts
    const { Body } = await s3API.getObject("xxx.mp3");
    try {
      const { size: uploadSize } = await s3API.uploadTo(job.data.destinationAudioEnding, Body as any, "audio/mpeg");
      size = uploadSize;
      resolve(true);
    } catch (error) {
      if (error instanceof Error) {
        console.log(error.message);
      }
      resolve(true);
    }

Here, uploadTo is a wrapper around Upload:

    // s3.ts
    import { Upload } from "@aws-sdk/lib-storage";
    import { PassThrough, Readable } from "stream";

    // Method of the S3 wrapper class (this.s3Client is its S3 client instance)
    public async uploadTo(key: string, stream: Readable, contentType: string) {
      let size = 0;
      const upload = new Upload({
        client: this.s3Client,
        params: {
          Bucket: `${process.env.R2_BUCKET}`,
          Key: key,
          // we pipe to a passthrough to handle the case that the stream isn't initialized yet
          Body: stream.pipe(new PassThrough()),
          ContentType: contentType,
        },
      });
      // Track how many bytes have been uploaded so far
      upload.on("httpUploadProgress", (progress) => {
        size = progress.loaded ?? 0;
      });
      await upload.done();
      return {
        size,
      };
    }

However, I'm a bit stumped: I loaded up the SDK locally, logged output from the getChunkStream method, logged when the done method was called, and confirmed that all chunks had finished iterating before done had been called. Could this mean that even though the chunks have all been uploaded, there is a delay in deallocating them from memory afterwards? Is there a way to force deallocation once they are uploaded?
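One way to probe the deallocation question is to trigger a collection manually and see whether the memory comes back. The sketch below assumes the process is started with Node's `--expose-gc` flag, which makes a global `gc()` function available; `forceGcIfExposed` is a hypothetical helper name, and this is a diagnostic aid only, not something to ship in production code.

```typescript
// Returns true if a manual collection was triggered, false if gc() is not exposed.
function forceGcIfExposed(): boolean {
  const maybeGc = (globalThis as unknown as { gc?: () => void }).gc;
  if (typeof maybeGc !== "function") {
    return false; // process was not started with --expose-gc
  }
  maybeGc();
  return true;
}

// Compare Buffer-backed memory before and after the (optional) collection.
const before = process.memoryUsage().arrayBuffers;
const collected = forceGcIfExposed();
const after = process.memoryUsage().arrayBuffers;

console.log({ collected, beforeBytes: before, afterBytes: after });
```

If memory drops sharply right after a forced collection, the buffers were already unreachable and simply had not been collected yet; if it does not, something is presumably still holding references to them.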

Observed Behavior

I observe memory increasing steadily over time until uploads stop, and then this memory allocation decreases slowly back to its "initial" size. My understanding leads me to believe that this could be a result of backpressure or of buffers not being released via GC.
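For anyone trying to reproduce the observation, a minimal sketch of a memory logger follows. It uses `process.memoryUsage()`, whose `external` and `arrayBuffers` fields cover memory held by `Buffer` instances outside the V8 heap, so it can help separate buffer growth from ordinary heap growth. The helper name `snapshotMemoryMb` and the one-decimal rounding are illustrative choices.

```typescript
type MemorySnapshotMb = {
  rss: number;
  heapUsed: number;
  external: number;
  arrayBuffers: number;
};

// Reads current process memory and converts each field to megabytes.
function snapshotMemoryMb(): MemorySnapshotMb {
  const toMb = (bytes: number): number =>
    Math.round((bytes / 1024 / 1024) * 10) / 10;
  const { rss, heapUsed, external, arrayBuffers } = process.memoryUsage();
  return {
    rss: toMb(rss),
    heapUsed: toMb(heapUsed),
    external: toMb(external),
    arrayBuffers: toMb(arrayBuffers),
  };
}

const snapshot = snapshotMemoryMb();
console.log(snapshot);
```

Calling this before each upload, after each `upload.done()`, and at intervals after the queue drains should show whether the growth sits in `arrayBuffers` (chunk buffers) or in `heapUsed`.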

Expected Behavior

I expect to not receive OOM errors when bulk uploading files.

Possible Solution

No response

Additional Information/Context

Please let me know if there's any more info I can provide. This has been a tough one for me to track down!

Related issues / PRs:

#2612 <-- this PR has discovered improvements to the library memory footprint but was never implemented

then a follow up in the v2 repo mentioning that memory is still an issue in v3 (for PutObjectCommands) aws/aws-sdk-js#3128 (comment)

This stackoverflow answer has a comment mentioning memory issues within V3 https://stackoverflow.com/a/69988031/6480913

EDIT:

Actually, you know what: I upgraded my staging service resources from 512MB to 2GB of RAM, and after bulk uploading more files it almost seems like the library needs around 500MB of memory before it stabilizes. So while it looked like there was a memory leak in my 512MB config, the real cause was that the library couldn't reach its stable state and crashed because there wasn't enough memory allocated for it in the first place.

However, before closing, I would like to draw more attention to PR #2612, which could help reduce the memory footprint a bit, because 500MB was a bit shocking to me.
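As a rough aid for reasoning about the footprint, the sketch below estimates how many bytes of part buffers an upload may hold at once. It relies on the `queueSize` and `partSize` options that `Upload` accepts (to my knowledge the defaults are 4 concurrent parts of 5 MiB each) and on the assumption that each concurrent part holds one buffer of `partSize` bytes. `UploadTuning` and `estimateBufferedBytes` are hypothetical names, and the result is only a floor: it does not account for the roughly 500MB observed above.

```typescript
const MIB = 1024 * 1024;

// Mirrors the two tuning options that Upload accepts alongside client and params.
interface UploadTuning {
  queueSize: number; // number of parts uploaded concurrently
  partSize: number; // bytes per part (S3 requires at least 5 MiB)
}

// Assumed library defaults; check the lib-storage README for your version.
const defaultTuning: UploadTuning = { queueSize: 4, partSize: 5 * MIB };

// Assumption: each in-flight part holds one buffer of partSize bytes.
function estimateBufferedBytes(
  tuning: UploadTuning,
  concurrentUploads = 1,
): number {
  return tuning.queueSize * tuning.partSize * concurrentUploads;
}

const lowMemoryTuning: UploadTuning = { queueSize: 1, partSize: 5 * MIB };

console.log("default, MiB:", estimateBufferedBytes(defaultTuning) / MIB);
console.log("queueSize 1, MiB:", estimateBufferedBytes(lowMemoryTuning) / MIB);
```

With the real class, the same values would be passed as `new Upload({ client, params, queueSize: 1, partSize: 5 * MIB })`; lowering `queueSize` trades upload throughput for a smaller number of part buffers in flight.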