
posix: clock: fix seconds calculation #52977


lib/posix/clock.c


This doesn't look right. The conversion function should be precise, and it already reduces to a plain division on all systems where that's possible. I think the bug is in the second line, where it feeds the result back into the reverse conversion; that's going to lose bits in configurations where the tick rate doesn't map evenly onto milliseconds. Can you check whether changing just the second line fixes the issue?
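To illustrate that kind of precision loss, here is a minimal, self-contained sketch that converts ticks to milliseconds and back using floor division. The 32768 Hz rate and the helper names are hypothetical stand-ins, not Zephyr's `z_tmcvt()` or time_units.h API; the point is only that a rate that doesn't map evenly onto milliseconds drops ticks on the round trip.

```c
#include <stdint.h>

/* Hypothetical tick rate that is not a multiple of 1000 Hz. */
#define HYPOTHETICAL_TICKS_PER_SEC 32768ULL

/* Hypothetical floor conversion ticks -> ms (not Zephyr's API). */
static uint64_t ticks_to_ms_floor(uint64_t ticks)
{
	return ticks * 1000ULL / HYPOTHETICAL_TICKS_PER_SEC;
}

/* Hypothetical floor conversion ms -> ticks. */
static uint64_t ms_to_ticks_floor(uint64_t ms)
{
	return ms * HYPOTHETICAL_TICKS_PER_SEC / 1000ULL;
}

/* Convert ticks to ms and back, returning how many ticks were lost. */
static uint64_t round_trip_loss(uint64_t ticks)
{
	return ticks - ms_to_ticks_floor(ticks_to_ms_floor(ticks));
}
```

For example, 32 ticks floor to 0 ms and come back as 0 ticks, losing all 32, while exactly one second (32768 ticks) survives the round trip intact.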

Also, I haven't pulled up the whole file to check, but I have a hard time believing that the `nremainder` value is anything but a footgun in an implementation of `clock_gettime()`. There shouldn't be any state held, and if we want higher-precision values we should start there instead of back-converting from coarser units like seconds.

My notion of an idealized implementation here would be as simple as `uint64_t ns = k_ticks_to_us_floor64(k_uptime_ticks())`, followed by division/modulus operations to extract `tv_nsec` and `tv_sec`.
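A minimal sketch of that idea, assuming a hypothetical fixed rate of 100 ticks/s and a stand-in `ticks_to_ns_floor64()` helper (not the Zephyr API), might look like the following. As noted later in this thread, the nanosecond value itself can overflow for very large tick counts, so this is only valid for realistic uptimes.

```c
#include <stdint.h>
#include <time.h>

/* Hypothetical fixed tick rate; assumed to divide 1e9 evenly. */
#define HYPOTHETICAL_TICKS_PER_SEC 100ULL
#ifndef NSEC_PER_SEC
#define NSEC_PER_SEC 1000000000ULL
#endif

/* Stand-in floor conversion ticks -> ns; wraps for huge tick counts. */
static uint64_t ticks_to_ns_floor64(uint64_t ticks)
{
	return ticks * (NSEC_PER_SEC / HYPOTHETICAL_TICKS_PER_SEC);
}

/* Derive tv_sec and tv_nsec from one nanosecond count, with no held state. */
static void ticks_to_timespec(uint64_t ticks, struct timespec *ts)
{
	uint64_t ns = ticks_to_ns_floor64(ticks);

	ts->tv_sec = (time_t)(ns / NSEC_PER_SEC);
	ts->tv_nsec = (long)(ns % NSEC_PER_SEC);
}
```

With this rate, 250 ticks become 2,500,000,000 ns, i.e. `{2, 500000000}`.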


@andyross - with just the second line changed, we get the failure below, which is off by an order of magnitude (on qemu_x86_64). Perhaps that's an indicator that `z_tmcvt()` needs a test suite (Edit: I added a minimal one under tests/unit/time_units).

START - test_clock_gettime_rollover

CONFIG_SYS_CLOCK_TICKS_PER_SEC: 100

rollover_s: 184467440737095516

t-1: {18446744073709551, 596000000}

To me, straight division to get elapsed_secs makes sense.

The ticks value must always be >= elapsed_secs * CONFIG_SYS_CLOCK_TICKS_PER_SEC, so it looks like the nremainder calculation is a (potentially) cheaper way to get ticks % CONFIG_SYS_CLOCK_TICKS_PER_SEC.
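A quick sketch of that equivalence, assuming a hypothetical rate of 100 ticks/s: because `elapsed_secs * CONFIG_SYS_CLOCK_TICKS_PER_SEC` never exceeds `ticks`, the multiply-subtract cannot overflow and yields the same value as the modulus.

```c
#include <stdint.h>

/* Hypothetical stand-in for CONFIG_SYS_CLOCK_TICKS_PER_SEC. */
#define HYPOTHETICAL_TICKS_PER_SEC 100ULL

/*
 * Split a tick count into whole seconds and leftover ticks using one
 * division plus a multiply-subtract, mirroring the nremainder idea.
 * The leftover equals ticks % HYPOTHETICAL_TICKS_PER_SEC.
 */
static uint64_t split_ticks(uint64_t ticks, uint64_t *nremainder)
{
	uint64_t elapsed_secs = ticks / HYPOTHETICAL_TICKS_PER_SEC;

	*nremainder = ticks - elapsed_secs * HYPOTHETICAL_TICKS_PER_SEC;
	return elapsed_secs;
}
```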

Directly below, we use k_ticks_to_ns_floor32() to convert nremainder to nanoseconds, which seems ok to me.

Can you explain your issue in a bit more detail? And actually, this is the sort of thing that we should probably have a test for, so I'll implement a test for the scenario you outline if you can provide some additional detail.


IMHO, time_units.h should include a set of `k_ticks_to_secs_*()` functions. There are milli-, micro-, and nanosecond variants, but none for whole seconds. I guess the thought was that conversion to seconds is simple enough that one should just write out the division, as is done here. But as soon as you toss in rates determined at run time or non-integral clock speeds, it would be nice not to make everyone write their own code for it.
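For illustration only, seconds variants with a run-time rate might look like the hypothetical helpers below. The names and signatures are assumptions, not part of time_units.h, and they handle only integral rates in Hz (non-integral clock speeds would need more work).

```c
#include <stdint.h>

/* Hypothetical: whole seconds, rounded down. hz must be nonzero. */
static inline uint64_t ticks_to_secs_floor64(uint64_t ticks, uint32_t hz)
{
	return ticks / hz;
}

/*
 * Hypothetical: whole seconds, rounded up. Uses the remainder instead of
 * (ticks + hz - 1) / hz, which could wrap for ticks near UINT64_MAX.
 */
static inline uint64_t ticks_to_secs_ceil64(uint64_t ticks, uint32_t hz)
{
	return ticks / hz + (ticks % hz != 0);
}
```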


> My notion of an idealized implementation here should be as simple as
>
> `uint64_t ns = k_ticks_to_us_floor64(k_uptime_ticks()),`

You meant `_to_ns`, of course. For clock rates below 1 GHz, this expression overflows before a 64-bit cycle counter does. It does take some 584 years to get there, so realistically it ought to be good enough for any reasonable timeout, time delta, or timestamp.
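The 584-year figure can be checked with integer arithmetic. The sketch below assumes a 365.25-day year and, for the overflow threshold, a tick rate that divides 10^9 evenly; both helper names are hypothetical.

```c
#include <stdint.h>

#define NS_PER_SEC 1000000000ULL
/* Julian year: 365.25 days * 86400 s. */
#define SECS_PER_YEAR 31557600ULL

/* Whole years of uptime representable as a uint64_t nanosecond count. */
static uint64_t ns_counter_years(void)
{
	return UINT64_MAX / NS_PER_SEC / SECS_PER_YEAR;
}

/*
 * Largest tick count whose ns value still fits in uint64_t, assuming
 * ns = ticks * (1e9 / hz). For hz < 1 GHz this is below UINT64_MAX,
 * so the ns value overflows before the tick counter does.
 */
static uint64_t max_ticks_before_ns_overflow(uint64_t hz)
{
	return UINT64_MAX / (NS_PER_SEC / hz);
}
```

At 100 ticks/s the threshold is about 1.8e12 ticks, far below the ~1.8e19 ticks near the 64-bit rollover that the test exercises.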

However, @cfriedt's test looks at values around where the cycle counter overflows, which is large enough to trigger the overflow.


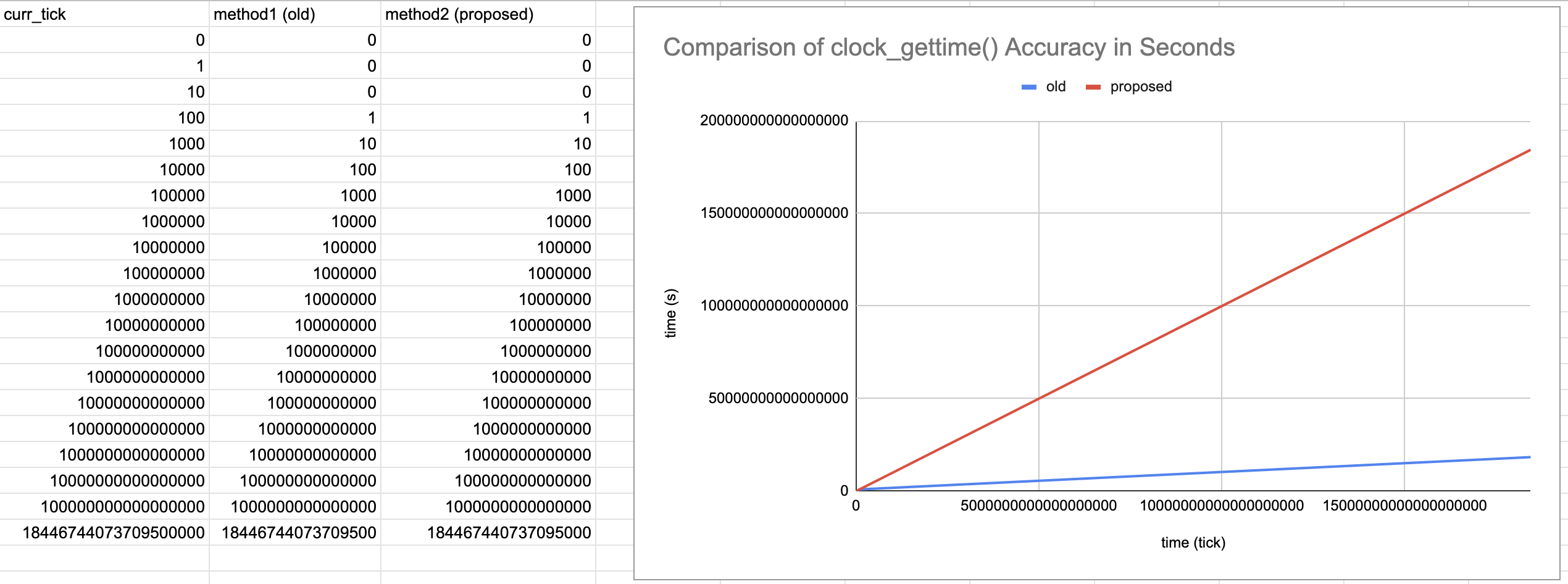

The previous method used to calculate seconds in `clock_gettime()` seemed to have an inaccuracy that grew with time, causing the seconds to be off by an order of magnitude when ticks would roll over. This change fixes the method used to calculate seconds.

Signed-off-by: Chris Friedt <[email protected]>


@andyross - if there is any doubt in your mind, this should help. It almost looks like a Christmas tree, so Merry Christmas (if you celebrate)! 🎅 ❄️ ☃️ 🎄 That's with … So, with ticks/s = 100, the error factor is 10, but with ticks/s = 10, the error factor is 100. There is no error when using the method in this PR. I'm somewhat concerned about where the order-of-magnitude difference comes from in the old method.
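One mechanism that would produce an error factor of 1000 / (ticks/s) is a 64-bit intermediate that is scaled up to milliseconds before dividing. The sketch below is a hypothetical illustration, not the code that was in lib/posix/clock.c: for tick counts just below the rollover point, `ticks * 10` wraps to a value close to 2^64, so the result is roughly UINT64_MAX / 1000 seconds instead of UINT64_MAX / 100.

```c
#include <stdint.h>

/* Hypothetical stand-in for CONFIG_SYS_CLOCK_TICKS_PER_SEC. */
#define HYPOTHETICAL_TICKS_PER_SEC 100ULL

/*
 * Hypothetical "scale up first" path: ticks -> ms -> s.
 * ticks * 10 wraps modulo 2^64 (well defined for unsigned types)
 * once ticks exceeds UINT64_MAX / 10.
 */
static uint64_t secs_via_ms(uint64_t ticks)
{
	uint64_t ms = ticks * (1000ULL / HYPOTHETICAL_TICKS_PER_SEC);

	return ms / 1000ULL;
}

/* Divide-first path: cannot overflow. */
static uint64_t secs_direct(uint64_t ticks)
{
	return ticks / HYPOTHETICAL_TICKS_PER_SEC;
}
```

For small tick counts both paths agree; one second before the rollover they differ by roughly the observed factor of 10.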

Accurate timekeeping is often taken for granted. However, reliable timekeeping code is critical for most core and subsystem code. Furthermore, many higher-level timekeeping utilities in Zephyr work off of ticks, but there is no way to modify ticks directly, so testing them requires either unnecessary delays in test code or non-ideal compromises in test coverage. Since timekeeping is so critical, there should be as few barriers to testing timekeeping code as possible, while preserving the integrity of the kernel's public interface. With this change, we expose `sys_clock_tick_set()` as a system call only when `CONFIG_ZTEST` is set, declared within the ztest framework.

Signed-off-by: Chris Friedt <[email protected]>
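A minimal sketch of why a settable tick count helps testing, using a hypothetical fake tick source in place of the kernel's counter (`fake_tick_set()` and `fake_uptime_ticks()` are illustrative names, not the `sys_clock_tick_set()` API): the test can jump straight to the rollover region instead of waiting for it.

```c
#include <stdint.h>

/* Hypothetical stand-in for CONFIG_SYS_CLOCK_TICKS_PER_SEC. */
#define HYPOTHETICAL_TICKS_PER_SEC 100ULL

/* Hypothetical test-only tick source; the real counter lives in the kernel. */
static uint64_t fake_ticks;

static void fake_tick_set(uint64_t ticks)
{
	fake_ticks = ticks;
}

static uint64_t fake_uptime_ticks(void)
{
	return fake_ticks;
}

/* Code under test reads the tick source instead of sleeping. */
static uint64_t uptime_secs(void)
{
	return fake_uptime_ticks() / HYPOTHETICAL_TICKS_PER_SEC;
}
```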

Add a test to verify previous fixes when calculating time in seconds for `clock_gettime()`.

Signed-off-by: Chris Friedt <[email protected]>


I don't get how those numbers came from

`const uint64_t rollover_s = UINT64_MAX / CONFIG_SYS_CLOCK_TICKS_PER_SEC;`

…

`curr_tick = rollover_s * CONFIG_SYS_CLOCK_TICKS_PER_SEC;`

`cout << curr_tick << "\t" << method1() << "\t" << method2() << endl;`

Shouldn't the final value of `curr_tick` be 18446744073709551600 and not 18446744073709500000? Anyway, the existing code has an obvious bug if … Another bug is in the test code. It finds the second in which the 64-bit tick counter overflows, but the result will be stored in a `timespec`, which is allowed to have a 32-bit signed seconds field. This will overflow before the tick counter unless …

That's only to get an arbitrary … However, I realized that my for-loop boundary conditions were not in the correct units. It still illustrates the issue in any case.

👍

Given that Zephyr will own the POSIX headers, that's a good point. We should enforce that the seconds field is 64-bit (a recommendation for the Year 2038 problem), but that's not something that will be fixed here: https://pubs.opengroup.org/onlinepubs/9699919799/functions/time.html Thanks for the review.
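One way to enforce this at build time is a C11 static assertion, sketched below. Where such a check would live in Zephyr's POSIX headers is an assumption, not something this PR does; on a host with a 32-bit `time_t` the sketch intentionally fails to compile.

```c
#include <stdint.h>
#include <time.h>

/* Fail the build where time_t is narrower than 64 bits (2038-prone). */
_Static_assert(sizeof(time_t) >= sizeof(int64_t),
	       "time_t must be at least 64 bits");

/* Check the timespec seconds field directly (sizeof does not evaluate). */
_Static_assert(sizeof(((struct timespec *)0)->tv_sec) >= sizeof(int64_t),
	       "timespec.tv_sec must be at least 64 bits");
```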

The overflow test doesn't respect …

The previous method used to calculate seconds in `clock_gettime()` seemed to have an inaccuracy that grew with time, causing the seconds to be off by an order of magnitude when ticks would roll over.

Edit: technically, this is likely a bug in `z_tmcvt()`.

Fixes #52975