Add kappa #267

Hi @AakashKumarNain, thanks for the contribution! I wonder if I misunderstand something here:

Shouldn't update_state aggregate the previous statistics, as the other metrics in tf.keras.metrics do? For example:

    import numpy as np
    import tensorflow as tf

    y_true = np.array([0, 1, 0, 1, 0])
    y_pred = np.array([0, 1, 0, 1, 1])
    m = tf.keras.metrics.BinaryAccuracy()
    m.update_state(y_true, y_pred)  # 1st acc: 0.8
    print(m.result().numpy())  # 0.8

    y_true = np.array([0, 1, 0, 1, 0])
    y_pred = np.array([0, 1, 0, 1, 0])
    m.update_state(y_true, y_pred)  # 2nd acc: 1.0
    print(m.result().numpy())  # 0.9 = (0.8 + 1) / 2
    m.reset_states()  # reset if needed

It seems that the implementation now only takes the current input into account. Is there any reason that we have to compute only the current input's Cohen's Kappa instead of accumulating states and computing the overall Cohen's Kappa? Please correct me if I misunderstand. cc @facaiy.

EDIT: #265 (comment)
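
To make the accumulation semantics of the example above concrete: as far as I understand the mean-based Keras metrics, they keep running counts, so the aggregate is weighted by batch size rather than being a mean of per-batch accuracies. The two coincide in the example only because both batches have five samples. A minimal pure-Python sketch, where the `StreamingAccuracy` class is a hypothetical stand-in and not the Keras implementation:

```python
class StreamingAccuracy:
    """Hypothetical stand-in for a stateful accuracy metric."""

    def __init__(self):
        self.correct = 0
        self.total = 0

    def update_state(self, y_true, y_pred):
        # Accumulate counts, not the per-batch accuracy.
        self.correct += sum(int(t == p) for t, p in zip(y_true, y_pred))
        self.total += len(y_true)

    def result(self):
        return self.correct / self.total if self.total else 0.0

    def reset_states(self):
        self.correct = 0
        self.total = 0


m = StreamingAccuracy()
m.update_state([0, 1, 0, 1, 0], [0, 1, 0, 1, 1])  # 4 of 5 correct
m.update_state([0, 1], [0, 1])                    # 2 of 2 correct

overall = m.result()                    # 6 / 7, weighted by batch size
mean_of_batches = (4 / 5 + 2 / 2) / 2   # 0.9, ignores batch size
```

With unequal batch sizes the two numbers differ, which is why the state has to hold the counts rather than the previous result.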

@AakashKumarNain I think tf.keras.metrics.AUC is a good example. We should save/update intermediate variables (say, the confusion matrix), rather than the result (the kappa score).
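
A minimal pure-Python sketch of that idea, assuming integer class labels in `range(num_classes)`: the state is the confusion matrix, and kappa is derived from it only in `result()`. The class name `StreamingKappa` and its layout are illustrative assumptions, not the code in this PR.

```python
class StreamingKappa:
    """Hypothetical stateful Cohen's kappa backed by a confusion matrix."""

    def __init__(self, num_classes):
        self.num_classes = num_classes
        self.reset_states()

    def reset_states(self):
        k = self.num_classes
        self.conf_mtx = [[0] * k for _ in range(k)]

    def update_state(self, y_true, y_pred):
        # Rows index the true label, columns the predicted label.
        for t, p in zip(y_true, y_pred):
            self.conf_mtx[int(t)][int(p)] += 1

    def result(self):
        k = self.num_classes
        n = sum(sum(row) for row in self.conf_mtx)
        if n == 0:
            return 0.0
        observed = sum(self.conf_mtx[i][i] for i in range(k)) / n
        row_sums = [sum(row) for row in self.conf_mtx]
        col_sums = [sum(self.conf_mtx[i][j] for i in range(k)) for j in range(k)]
        expected = sum(r * c for r, c in zip(row_sums, col_sums)) / (n * n)
        if expected == 1.0:
            return float("nan")  # kappa is undefined when chance agreement is 1
        return (observed - expected) / (1.0 - expected)


kp = StreamingKappa(num_classes=2)
kp.update_state([0, 1, 0, 1, 0], [0, 1, 0, 1, 1])
first = kp.result()      # kappa of the first batch alone
kp.update_state([0, 1, 0, 1, 0], [0, 1, 0, 1, 0])
overall = kp.result()    # kappa over all ten samples, about 0.8
```

Averaging the two per-batch kappa scores would give a different number than `overall`, which is the reason for storing the matrix instead of the score.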

Thank you @facaiy for the information. @WindQAQ @facaiy I think I have found a very elegant solution to the problem. I cannot think of anything better than this. Please take a look at this notebook. I will make the changes in the PR once you are okay with the solution, though the errors from graph mode are up again in the test cases. https://colab.research.google.com/drive/10CNyrnq10RUTHssUcfSdtIyYdGnC5bGD

@AakashKumarNain Aakash, the new solution looks good :-)

…d_kappa Mergre changes

@facaiy Thanks Yan. Can you please help me with the errors in the test case? I have shown it in the same notebook

Could you remove

It didn't help. Now the error is raised due to the

I'm not so sure. Seems that we have to initialize variables by ourselves or use metric in a keras model, please refer to metric's test cases :-) https://github.com/tensorflow/tensorflow/blob/r2.0/tensorflow/python/keras/metrics_test.py

I checked that and I am doing the same thing. This line

Hi, @AakashKumarNain, you have to initialize variables within the metric object:

    self.evaluate(tf.compat.v1.variables_initializer(kp_obj1.variables))

Here is the revised notebook.

Thank you @WindQAQ for looking into it. Can you provide me the access to the notebook?

Sorry about that... Already shared it. Please click the link above again.

Got it. Thanks.

…d_kappa add changes from tf_addons-master

@AakashKumarNain I'm trying to figure that out. Could you tell your TF 2.x version using:

@facaiy @WindQAQ @Squadrick I am on Another funny thing is that everything works with Either the version in

Sorry I haven't been tracking this too closely. If you're referring to why py3 tests are passing in our CI, it's probably because there is no py34 tf2-nightly available, so it's running on an old version. See #279

But @seanpmorgan no matter what version,

I checked on py36 and there is no alias for

Can confirm,

@Squadrick I fixed it. Can you trigger the test again, please?

Thanks @Squadrick Finally! This has taken an enormous amount of time. Thanks @facaiy @WindQAQ @seanpmorgan for all the guidance and efforts. PS: Is there a list where we are keeping track of all the removed

@AakashKumarNain Thanks again for the contribution.

Not that I know of, but it may be worth asking. The best place I would look is https://github.com/tensorflow/tensorflow/blob/master/tensorflow/tools/compatibility/all_renames_v2.py, but that mostly uses compat.v1 as the replacement. And thanks very much to you for putting in the time to get it all working! It sets a great example for stateful metrics in Addons!

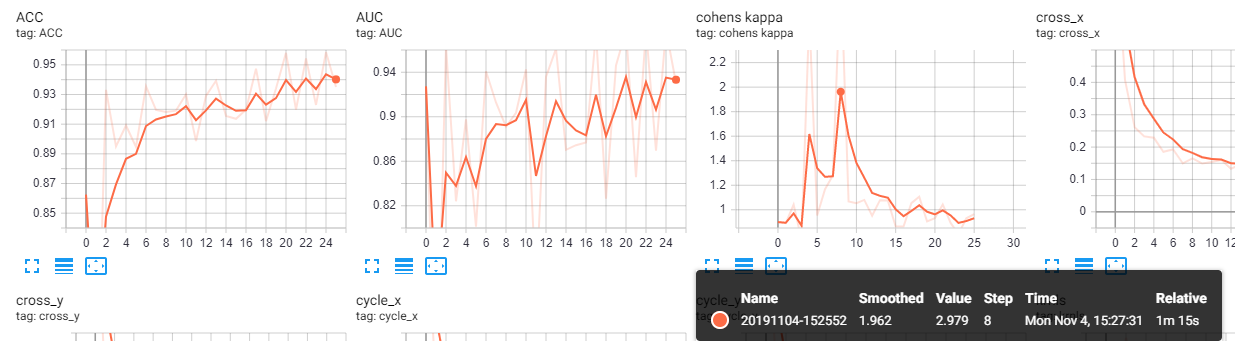

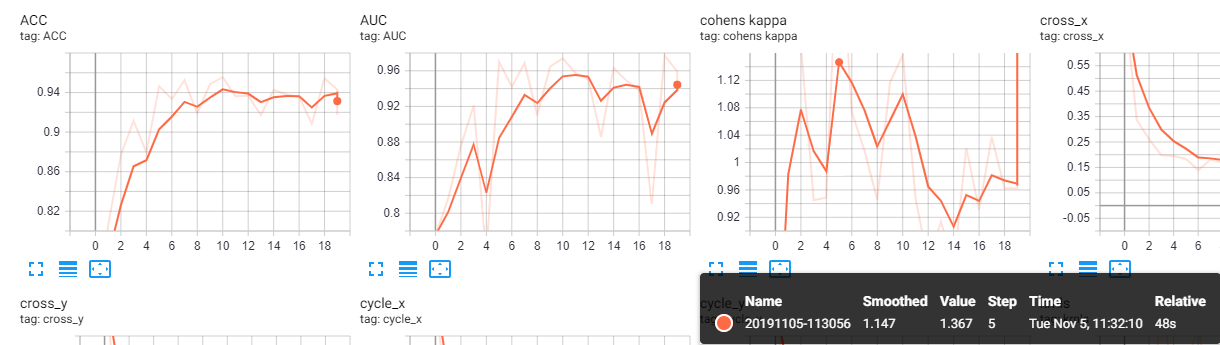

Hello, I am using tfa.metrics.CohenKappa and something seems to be really wrong. While tf.keras.metrics.Accuracy is working fine, the CohenKappa gets values above 1 (sometimes above 2..). I have a dictionary of metrics: and then after each training epoch I call this: As you can see on Tensorboard, it does not really make any sense. I am using TF 2.0 and the docker image tensorflow/tensorflow:2.0.0-gpu.

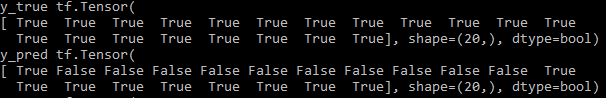

@llu025 Hi, is it possible to dump

I am not really sure what you mean by "dump". If you are interested in what they contain, they are boolean, so either 0s or 1s.

I mean the exact values of them, which could make kappa score > 1.

Yeah, it would be really helpful to debug if you provide the values that produced this score

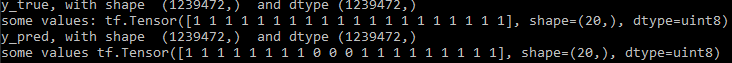

I printed 20 consecutive values from a random index for both I thought the problem might have been the use of boolean labels instead of integers, so I added this in my so I dump them again but I still get kappa above 1

Thank you!

@WindQAQ Thanks for the test cases. Yeah, it seems that we need to change the dtype to

This should be fixed in #675 :D

Thank you @WindQAQ . I checked the same on my end. Seems that dtype was the issue. |
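
Because Cohen's kappa is (p_o - p_e) / (1 - p_e) and therefore can never exceed 1, dumped labels can be cross-checked against an independent reference computation when a score looks wrong. A minimal pure-Python sketch, assuming the inputs are boolean or 0/1 labels that are cast to plain integers first; the helper name `reference_kappa` is hypothetical, and this is not the fix applied in #675.

```python
from collections import Counter


def reference_kappa(y_true, y_pred):
    """Hypothetical reference check: unweighted Cohen's kappa from two label lists."""
    # Cast booleans (or 0/1 floats) to ints so both lists share one label type.
    y_true = [int(v) for v in y_true]
    y_pred = [int(v) for v in y_pred]
    n = len(y_true)

    # Observed agreement: fraction of positions where the labels match.
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n

    # Chance agreement: product of the marginal label frequencies.
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    expected = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)

    if expected == 1.0:
        return float("nan")  # undefined when chance agreement is 1
    return (observed - expected) / (1.0 - expected)


labels = [True, False, True, True, False, False]
preds = [True, False, False, True, False, True]
kappa = reference_kappa(labels, preds)
perfect = reference_kappa(labels, labels)
```

If a metric reports a value above 1 for inputs where a check like this stays within [-1, 1], the discrepancy points at the metric's internal state rather than at the data.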

Added Cohen's Kappa as a new metric.