-

Notifications

You must be signed in to change notification settings - Fork 814

migrate IWSLT2016 to datapipes. #1545

migrate IWSLT2016 to datapipes. #1545

Conversation

|

I plan to simplify some of the logic here tomorrow. |

715fcde to

ab7b7e9

Compare

|

OK, this is significantly cleaner now. I think this is ready for review! |

parmeet

left a comment

parmeet

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Overall it LGTM! It's just not so easy to ensure the correctness with failing CI unit-tests on datasets due to cache failure (I am looking into it why the cache is failing). Could you please ensure it's working locally as well?

|

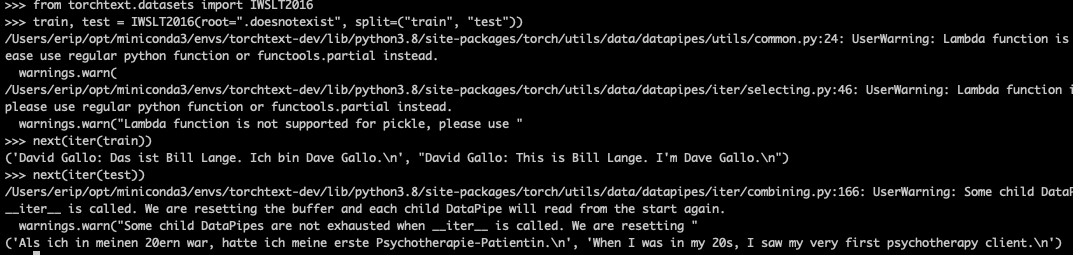

Hmm, tests are passing but playing in the REPL is breaking. I'll fix this and push... |

|

I'm getting a bit mixed up with the logic so I'm just going to brain dump here...

|

Nayef211

left a comment

Nayef211

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for taking on this migration @erip. This dataset is definitely more complex than the other's that we've worked on so far. Just left a couple of comments from my side but lmk if you agree with the suggestions 😄

Thanks @erip for this exercise. This is certainly not as straightforward as other datasets. My understanding about file_path fn in the context of caching is that it would look for all the files returned by that function and return True/False depending on whether they exist on filesystem or not (here is the code: https://github.com/pytorch/data/blob/160ce809f8c3ee686af0e3e701d7341827841eeb/torchdata/datapipes/iter/util/cacheholder.py#L182) Based on this, I would like to think about the steps as follows:

So in a way, there are 4 levels of caching, 1) Download, 2) Outer extraction, 3) inner extraction, 4) clean-up. I think caching is certainly quite involved after 1 , so i would suggest to break this PR into two. In this PR we only introduce caching for download as usual. Then we introduce the caching mechanism for other parts as follow-up PR. This would also make it bit easier to review. Let me know if this helps? cc: @ejguan, to make sure I didn't misguided on the caching story :) |

|

@parmeet that's helpful. I have things nearly good and am on the cleaning step in the last caching block. The issue currently is that when |

f689558 to

9381a88

Compare

a88a99e to

60f0b80

Compare

|

OK, I'm happier with this. 😸 |

woww, this looks great. Thanks so much @erip for reading well from my half-baked comments and working so hard on this PR :). This certainly is the most challenging so far. I think we should be good to go with what we have now! Edit: The speed-ups looks great and we are extracting what's necessary hence saving the disk space!! |

parmeet

left a comment

parmeet

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM! Let's merge it once we address the minor comment here #1545 (comment)

|

Done, @parmeet! |

Reference #1494

This is a bit messy, but seems kosher. cc @ejguan for review