-

Notifications

You must be signed in to change notification settings - Fork 735

Overdrive cpp extension #1299

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Overdrive cpp extension #1299

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the contribution, and sorry for taking so long to get back to you.

Overall it looks good to me.

@cpuhrsch Can you take a second look?

torchaudio/functional/filtering.py

Outdated

| # for i in range(waveform.shape[-1]): | ||

| # last_out = temp[:, i] - last_in + 0.995 * last_out | ||

| # last_in = temp[:, i] | ||

| # output_waveform[:, i] = waveform[:, i] * 0.5 + last_out * 0.75 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think you can get rid of these comments now.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@bhargavkathivarapu sounds good. Could you try measure the performance improvement?

Something like the following and change the shape of tensor to variety of shapes.

If numactl is missing, you can install it with sudo apt-get install -y numactl on Ubuntu.

OMP_NUM_THREADS=1 numactl --membind 0 --cpubind 0 python -m timeit -n 100 -r 5 -s """

import torch;

import torchaudio

x = torch.zeros(32, 100, dtype=torch.float)

""" """

torchaudio.functional.overdrive(x)

"""

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM depending some basic benchmarks that show clear improvement.

|

Changes made

Performance details Numctl details ( VM with 2 vCPUs) OMP_NUM_THREADS=1 numactl --membind 0 --cpubind 0 python -m timeit -n 100 -r 5 -s """

import torch

import torchaudio

x = torch.zeros(2, 100, dtype=torch.float)

torchaudio.functional.overdrive(x)

"""(2 , 100 ) = 100 loops, best of 5: 14.1 nsec per loop |

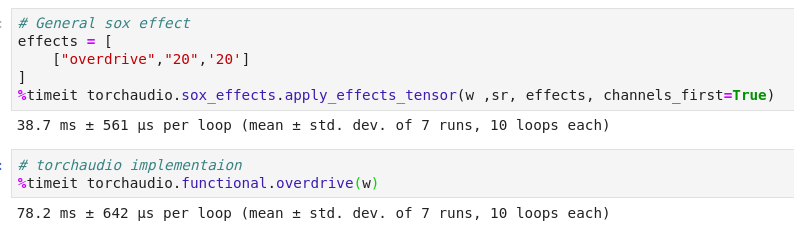

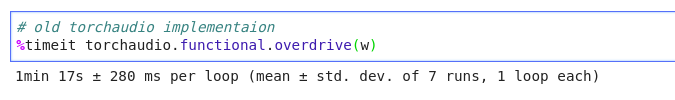

Thanks for the update. So my understanding is that the speed is comparable to sox implementation. Can you compare the same |

|

Can you merge the latest master commit to include #1297? I believe I killed the flaky bug. 🐛 |

Merge master branch into overdrive-new

|

@mthrok . Now all checks passed after merging master.

( Reference 60 second clip ) Tensor shape of w = [1, 1323000] Comparison for that tensor w

|

Wonderful! Thanks! |

New PR for overdrive C++ extension similar to lfilter . Relates to #580 ( old PR )