

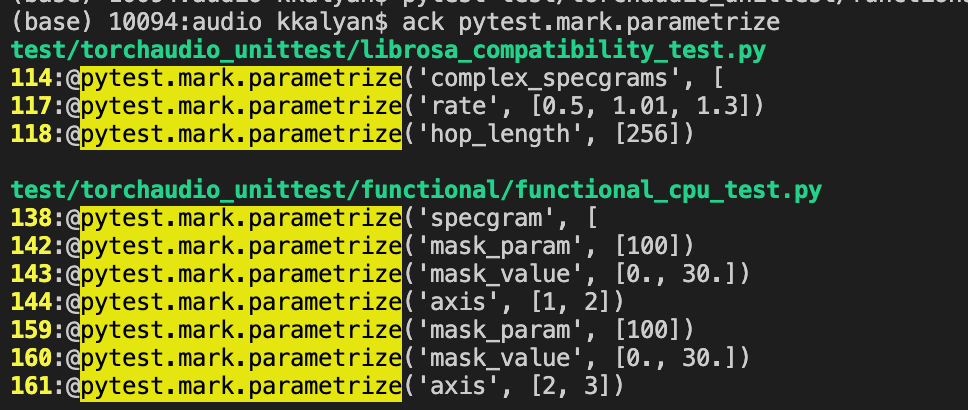

Replace pytest's parameterization with parameterized #1157


            torch.testing.assert_allclose(norm_tensor, expected_norm_tensor, atol=1e-5, rtol=1e-5)


    class TestComplexNorm(common_utils.TorchaudioTestCase):
        @parameterized.expand([


I suppose this is not quite right. list(itertools.product(...)) might be the right way to go about this, but that would require adding import itertools.

@mthrok, which would be the best way to go about this?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah, that's right. That's the pattern I use everywhere. itertools is part of the standard library, so there is no problem.

Maybe it's a good time to add a helper decorator for the @parameterized.expand(list(itertools.product(...))) pattern, but let's not bother with that at the moment.
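The pattern discussed above builds every combination of parameter values and passes the resulting list of tuples to @parameterized.expand. The sketch below covers only the standard-library part; the helper name product_params is hypothetical (not an existing torchaudio utility), and the shape/power values are borrowed from the diff purely for illustration.

```python
import itertools


def product_params(*axes):
    """Hypothetical helper: every combination of the given parameter axes.

    Returns a list of tuples, one per generated test case, which is the
    form of argument that @parameterized.expand(...) accepts.
    """
    return list(itertools.product(*axes))


# Illustrative axes, taken from the shapes and powers that appear in the diff.
shapes = [(1, 2, 1025, 400, 2), (1025, 400, 2)]
powers = [1, 2]

for shape, power in product_params(shapes, powers):
    print(shape, power)
```

With the parameterized package installed, this would be applied as @parameterized.expand(product_params(shapes, powers)) on a test method with the signature (self, shape, power), yielding four test cases instead of the two hand-written pairs.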

            (torch.randn(1, 2, 1025, 400, 2), 1),
            (torch.randn(1025, 400, 2), 2)
        ])
        def test_complex_norm(self, complex_tensor, power):


Calling torch.randn inside the decorator makes it difficult to control the randomness, so could you parameterize the shape instead?

    def test_complex_norm(self, shape, power):
        torch.manual_seed(0)
        complex_tensor = torch.randn(*shape)
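The reasoning behind this suggestion: decorator arguments are evaluated once, when the class body runs at import time, so tensors created there depend on whatever the random state happens to be at that moment. Seeding and generating inside the test makes each case reproducible on its own. The stdlib sketch below illustrates the idea with random.Random standing in for torch.manual_seed and torch.randn; make_data is a hypothetical name, and the analogy is approximate, since torch.manual_seed reseeds torch's global generator rather than creating a fresh one.

```python
import math
import random


def make_data(shape, seed=0):
    """Hypothetical stand-in for `torch.manual_seed(seed); torch.randn(*shape)`.

    A fresh generator is seeded inside the call, so the values depend only
    on `shape` and `seed`, not on anything that ran earlier.
    """
    rng = random.Random(seed)
    n = math.prod(shape)  # number of elements in a tensor of this shape
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


# Parameterize the shape, not the data: each case regenerates its own input.
first = make_data((2, 3))
random.random()  # unrelated use of the global RNG does not change the result
second = make_data((2, 3))
print(first == second)  # True
```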

mthrok left a comment



Looks good. Made some suggestions.

            complex_tensor = torch.randn(*shape)
            expected_norm_tensor = complex_tensor.pow(2).sum(-1).pow(power / 2)
            norm_tensor = F.complex_norm(complex_tensor, power)
            torch.testing.assert_allclose(norm_tensor, expected_norm_tensor, atol=1e-5, rtol=1e-5)


Can you replace torch.testing.assert_allclose with self.assertEqual?

The use of torch.testing.assert_allclose is discouraged now.
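For reference, the comparison being replaced here, assert_allclose with atol=1e-5 and rtol=1e-5, is an elementwise check of the form |actual - expected| <= atol + rtol * |expected| (the same rule torch.isclose uses). The pure-Python sketch below spells out that criterion on flat lists; it illustrates the tolerance rule only, is not PyTorch's implementation, and does not model the dtype-dependent defaults self.assertEqual is assumed to choose when no tolerances are passed.

```python
def allclose(actual, expected, atol=1e-5, rtol=1e-5):
    """Return True if every pair satisfies |a - e| <= atol + rtol * |e|.

    Note the asymmetry: the relative term scales with `expected` only.
    """
    if len(actual) != len(expected):
        return False
    return all(abs(a - e) <= atol + rtol * abs(e) for a, e in zip(actual, expected))


print(allclose([1.0, 2.0], [1.0, 2.000001]))  # True: a 1e-6 gap is within tolerance
print(allclose([1.0], [1.001]))               # False: a 1e-3 gap is too large
```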


    class TestDB_to_amplitude(common_utils.TorchaudioTestCase):
        def test_DB_to_amplitude(self):
            torch.random.manual_seed(42)


Let's leave this test untouched. #1113 is modifying this test suite.

            complex_stretch = complex_specgrams_stretch[index].numpy()
            complex_stretch = complex_stretch[..., 0] + 1j * complex_stretch[..., 1]

            assert np.allclose(complex_stretch, expected_complex_stretch, atol=1e-5)


Can you use self.assertEqual here? You might need to wrap the expected value with torch.from_numpy.
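Both tests touched in this review store complex values with a trailing dimension of size 2 holding (real, imag): the stretch test rebuilds complex numbers as x[..., 0] + 1j * x[..., 1], and the complex-norm test computes its expected value as x.pow(2).sum(-1).pow(power / 2). The pure-Python sketch below applies both formulas to a flat list of pairs to show they describe the same quantity, |z| ** power; the function names are hypothetical and operate on lists, not tensors.

```python
import math


def to_complex(pairs):
    """Mirror x[..., 0] + 1j * x[..., 1] for a flat list of (real, imag) pairs."""
    return [re + 1j * im for re, im in pairs]


def complex_norm(pairs, power):
    """Mirror x.pow(2).sum(-1).pow(power / 2) for a flat list of pairs."""
    return [(re ** 2 + im ** 2) ** (power / 2) for re, im in pairs]


pairs = [(3.0, 4.0), (1.0, -1.0)]
print(complex_norm(pairs, 1))  # [5.0, 1.4142135623730951]

# Cross-check against Python's built-in magnitude of a complex number.
for z, n in zip(to_complex(pairs), complex_norm(pairs, 2)):
    assert math.isclose(abs(z) ** 2, n)
```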


Thanks!

Made Changes to

fixes: #1156