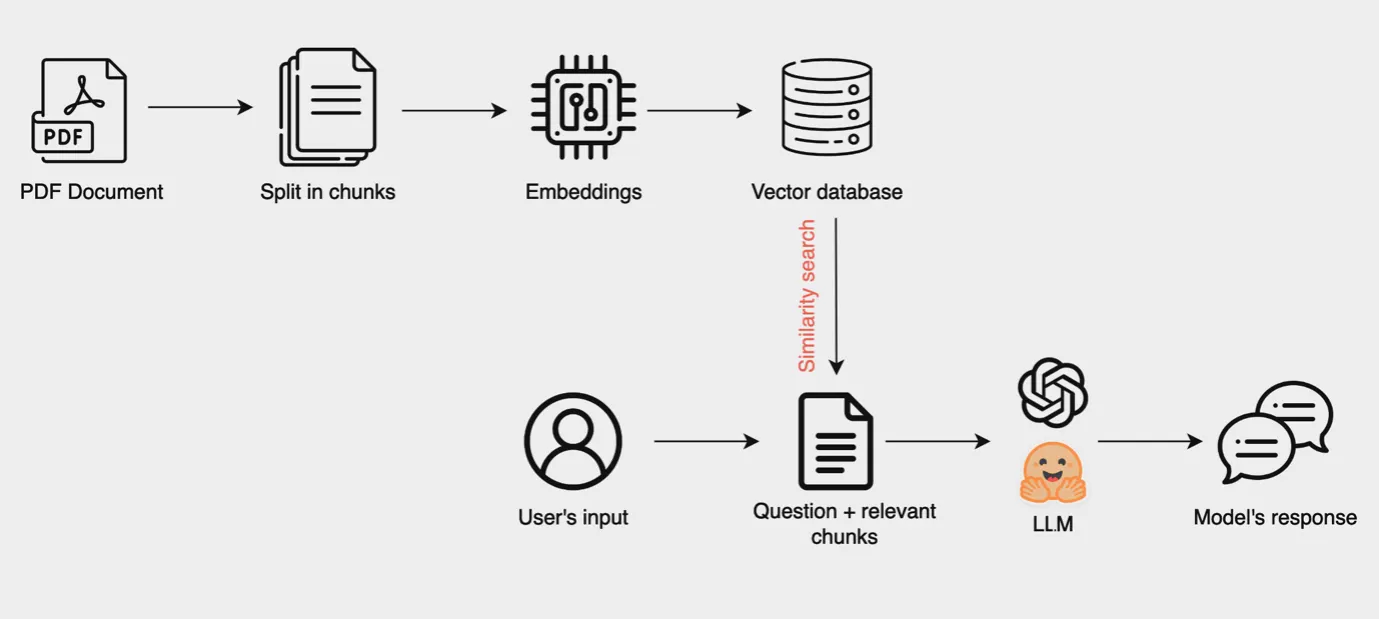

This project is a Retrieval-Augmented Generation (RAG) based chatbot that lets users ask questions about their PDF documents. It leverages:

- 🧠 Ollama LLMs (e.g., Mistral)

- 📚 Sentence Transformers for embeddings

- 🔍 FAISS for vector similarity search

- 🖥️ Gradio for a web-based chatbot interface

The pipeline parses PDFs, splits them into chunks, embeds each chunk, and stores the embeddings in a FAISS vector database. At query time, the chatbot retrieves the most relevant chunks using these embeddings and passes them to a language model served locally through Ollama (e.g., Mistral) to generate an answer. A Gradio-based web interface lets users interact with the chatbot, which shows the answer, its source information, and, when expected answers are supplied, a validation result.
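The retrieval step can be illustrated with a toy, dependency-free sketch. It swaps in a bag-of-words vector and a brute-force cosine search as stand-ins for Sentence Transformers embeddings and the FAISS index, so it shows the shape of the step rather than the project's actual code. The names `embed`, `cosine`, and `retrieve`, and the sample chunks, are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a sentence-transformer vector)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question (brute-force stand-in for a FAISS search)."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


# Made-up chunks standing in for text extracted from PDFs.
chunks = [
    "Invoices must be paid within 30 days of receipt.",
    "The warranty covers manufacturing defects for two years.",
    "Support is available by email on weekdays.",
]
top = retrieve("How long does the warranty last?", chunks, k=1)
print(top[0])
```

In the real pipeline, the retrieved chunks would then be placed into the prompt sent to the LLM.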

```
├── chatbot.py           # RAG chatbot logic using Ollama + FAISS
├── vectordb_setup.py    # Creates vector DB from PDF files
├── ChatBotGUI.py        # Gradio web interface
├── create_relevance.py  # Creates relevance list for context and predicted/expected answers
├── files/               # Place your PDF files here
├── query.json           # (Optional) Ground-truth Q&A for validation
├── vectordb/            # Saved FAISS vector DB (auto-generated)
├── requirements.txt     # Python dependencies
└── setup.sh             # Virtual environment + dependency setup
```

Clone the repository:

```bash
git clone https://github.com/your-username/PDFCrawler.git
cd PDFCrawler
```

Run the setup script:

```bash
bash setup.sh
```

This will:

- Create a Python virtual environment

- Install all dependencies listed in requirements.txt

- Install and configure Ollama (if not already installed)

Place all your .pdf files inside the files/ folder.

```bash
python3 vectordb_setup.py
```

This will:

- Load and split PDFs

- Generate embeddings using Sentence Transformers

- Save vectors into a local FAISS vector store under vectordb/
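The splitting step above can be sketched as a fixed-size character window with overlap. This is only an illustration: the actual script may rely on a LangChain text splitter instead, and the function name `split_text` and the `chunk_size` and `overlap` values are assumptions, not taken from the project.

```python
def split_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into windows of chunk_size characters, each sharing `overlap` characters with the previous one."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window already reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


pieces = split_text("abcdefghij", chunk_size=4, overlap=1)
print(pieces)
```

The overlap keeps a sentence that straddles a boundary at least partly visible in both neighbouring chunks, which tends to help retrieval.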

```bash
python3 live-chat.py
```

This launches a Gradio web app for you to interact with your PDF-aware chatbot.

If you'd like to validate chatbot responses, you can include a query.json file in this format:

```json
{
  "queries": [
    {
      "question": "What is the capital of France?",
      "answer": "Paris"
    },
    {
      "question": "Who wrote The Odyssey?",
      "answer": "Homer"
    }
  ]
}
```

The chatbot will compare its response to the expected answer and show ✅/❌ match results.
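As a rough sketch of how such a comparison could work, the snippet below reads entries in the `query.json` shape and marks a prediction as a match when the expected answer appears in it. The matching rule (case-insensitive containment) and the names `normalize` and `is_match` are assumptions; the project's own check may be stricter or looser.

```python
import json


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(text.lower().split())


def is_match(predicted: str, expected: str) -> bool:
    """Assumed rule: the normalized expected answer appears somewhere in the normalized prediction."""
    return normalize(expected) in normalize(predicted)


# Same shape as query.json; inlined here so the sketch runs without a file on disk.
raw = '{"queries": [{"question": "What is the capital of France?", "answer": "Paris"}]}'
queries = json.loads(raw)["queries"]

# Hypothetical model output standing in for the chatbot's real response.
predicted = "The capital of France is Paris."
for q in queries:
    mark = "✅" if is_match(predicted, q["answer"]) else "❌"
    print(f'{mark} {q["question"]}')
```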

| Component | Model Name |
|---|---|
| Embeddings | sentence-transformers/all-MiniLM-L6-v2 |
| LLM (Ollama) | mistral |

You can change these in `live-chat.py` or `chatbot.py`.
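For orientation, here is one way the two model names could be kept as constants and the LLM name passed to a local Ollama server over its REST endpoint. This is a sketch under assumptions: the constant and function names are hypothetical, and the project itself may go through LangChain wrappers rather than calling the HTTP API directly. The `generate` function needs a running Ollama server, so the example only prints the request payload.

```python
import json
import urllib.request

# Model names from the table; the constant names are hypothetical.
EMBEDDING_MODEL = "sentence-transformers/all-MiniLM-L6-v2"
LLM_MODEL = "mistral"  # any model already pulled into Ollama

# Ollama's default local generate endpoint.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = LLM_MODEL) -> dict:
    """Build a non-streaming generate request body for the given model."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt: str, model: str = LLM_MODEL) -> str:
    """Send the prompt to a locally running Ollama server and return its text reply."""
    data = json.dumps(build_payload(prompt, model)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]


# Only the payload is built here; no network call is made.
print(build_payload("Summarize the document."))
```

Switching to another model then amounts to changing `LLM_MODEL` to a name that has been pulled with `ollama pull`.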

Built with:

- LangChain

- FAISS

- HuggingFace Sentence Transformers

- Ollama

- Gradio

Planned improvements:

- Support for multi-modal PDFs (images + text)

- Session memory for follow-up questions

- Dockerized deployment and Hugging Face Spaces version