

Controlnet training #2545


Merged



66 commits


- `aac7961` Controlnet training code initial commit (Ttl)
- `6985732` Script for adding a controlnet to existing model (Ttl)
- `da73a3e` Fix control image transform (Ttl)
- `6501ca2` Add license header and remove more unused configs (Ttl)
- `62fdaf8` controlnet training readme (Ttl)
- `7a92d6f` Allow nonlocal model in add_controlnet.py (Ttl)
- `fcbe52e` Formatting (Ttl)
- `87e67bc` Remove unused code (Ttl)
- `391112e` Code quality (Ttl)
- `6f34077` Initialize controlnet in training script (Ttl)
- `c375d7b` Formatting (Ttl)
- `c3a9abb` Address review comments (Ttl)
- `388a28d` doc style (Ttl)
- `089d3a8` Merge branch 'main' into controlnet_train (williamberman)
- `1c45006` explicit constructor args and submodule names (williamberman)
- `9e5760b` hub dataset (williamberman)
- `d932d61` empty prompts (williamberman)
- `0e30636` add conditioning image (williamberman)
- `173b0a4` rename (williamberman)
- `3ac81ea` remove instance data dir (williamberman)
- `43b56bb` Merge branch 'main' into controlnet_train (williamberman)
- `3a672ea` image_transforms -> -1,1 . conditioning_image_transformers -> 0, 1 (williamberman)
- `e989e9b` nits (williamberman)
- `5452d79` remove local rank config (williamberman)
- `0d0c3a5` validation images (williamberman)
- `40dad23` Merge branch 'main' into controlnet_train (williamberman)
- `2ac68da` proportion_empty_prompts typo (williamberman)
- `eaf8c3a` weight copying to controlnet bug (williamberman)
- `b7d1b15` call log validation fix (williamberman)
- `7b4baea` fix (williamberman)
- `035f664` gitignore wandb (williamberman)
- `b0049a3` fix progress bar and resume from checkpoint iteration (williamberman)
- `f3caf3e` initial step fix (williamberman)
- `e90b04e` log multiple images (williamberman)
- `26e12e2` fix (williamberman)
- `cec5749` fixes (williamberman)
- `124dac4` tracker project name configurable (williamberman)
- `0ce5b4c` misc (williamberman)
- `545cd38` Merge branch 'main' into controlnet_train (williamberman)
- `4c51da9` add controlnet requirements.txt (williamberman)
- `c540085` update docs (williamberman)
- `768df18` image labels (williamberman)
- `3590e4b` small fixes (williamberman)
- `3f405ac` log validation using existing models for pipeline (williamberman)
- `c7c4857` fix for deepspeed saving (williamberman)
- `fc4f089` memory usage docs (williamberman)
- `0f7b132` Update examples/controlnet/train_controlnet.py (williamberman)
- `c8514ba` Update examples/controlnet/train_controlnet.py (williamberman)
- `3b06caf` Update examples/controlnet/README.md (williamberman)
- `7e72a13` Update examples/controlnet/README.md (williamberman)
- `a4c78ff` Update examples/controlnet/README.md (williamberman)
- `49988a2` Update examples/controlnet/README.md (williamberman)
- `e15d274` Update examples/controlnet/README.md (williamberman)
- `7bd26d7` Update examples/controlnet/README.md (williamberman)
- `8f90e36` Update examples/controlnet/README.md (williamberman)
- `ed140aa` Update examples/controlnet/README.md (williamberman)
- `e10289f` remove extra is main process check (williamberman)
- `602cc02` link to dataset in intro paragraph (williamberman)
- `71a7936` remove unnecessary paragraph (williamberman)
- `820aa23` note on deepspeed (williamberman)
- `011ca31` Update examples/controlnet/README.md (williamberman)
- `2dce652` assert -> value error (williamberman)
- `9e87526` weights and biases note (williamberman)
- `6fd13ea` move images out of git (williamberman)
- `cb49716` remove .gitignore (williamberman)
- `a729cb8` Merge branch 'main' into controlnet_train (williamberman)


```diff
@@ -172,3 +172,5 @@ tags

 # ruff
 .ruff_cache
+
+wandb
```


@@ -0,0 +1,269 @@

# ControlNet training example

[Adding Conditional Control to Text-to-Image Diffusion Models](https://arxiv.org/abs/2302.05543) by Lvmin Zhang and Maneesh Agrawala.

This example is based on the [training example in the original ControlNet repository](https://github.com/lllyasviel/ControlNet/blob/main/docs/train.md). It trains a ControlNet to fill circles using a [small synthetic dataset](https://huggingface.co/datasets/fusing/fill50k).
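To make the task concrete, the toy sketch below builds the kind of input/target pair this example learns from: a circle outline as the conditioning input and the same circle filled in as the target. It is an illustration under simplifying assumptions (a small binary grid instead of RGB images, and a hypothetical `circle_pair` helper), not the code that generated fill50k.

```python
def circle_pair(size: int, cx: int, cy: int, radius: float, thickness: float = 0.5):
    """Return (outline, filled) binary grids for one circle on a size x size canvas.

    Toy assumption: `outline` plays the role of the conditioning image and
    `filled` plays the role of the target image.
    """
    outline = [[0] * size for _ in range(size)]
    filled = [[0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            dist = ((x - cx) ** 2 + (y - cy) ** 2) ** 0.5
            # Pixels close to the circle's edge form the outline.
            if abs(dist - radius) <= thickness:
                outline[y][x] = 1
            # Pixels inside (or on) the circle form the filled target.
            if dist <= radius + thickness:
                filled[y][x] = 1
    return outline, filled


def show(grid) -> str:
    """Render a binary grid as text, '#' for 1 and '.' for 0."""
    return "\n".join("".join("#" if v else "." for v in row) for row in grid)


outline, filled = circle_pair(size=11, cx=5, cy=5, radius=4)
print(show(outline))
print()
print(show(filled))
```

Every outline pixel is also part of the filled circle, so the model only has to learn to fill the interior implied by the outline.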

## Installing the dependencies

Before running the scripts, make sure to install the library's training dependencies:

**Important**

To make sure you can successfully run the latest versions of the example scripts, we highly recommend **installing from source** and keeping the install up to date, as we update the example scripts frequently and install some example-specific requirements. To do this, execute the following steps in a new virtual environment:

```bash
git clone https://github.com/huggingface/diffusers
cd diffusers
pip install -e .
```

Then `cd` into the example folder (`examples/controlnet`) and run:

```bash
pip install -r requirements.txt
```

And initialize an [🤗Accelerate](https://github.com/huggingface/accelerate/) environment with:

```bash
accelerate config
```

Or, for a default Accelerate configuration without answering questions about your environment:

```bash
accelerate config default
```

Or, if your environment doesn't support an interactive shell (e.g., a notebook):

```python
from accelerate.utils import write_basic_config
write_basic_config()
```

## Circle filling dataset

The original dataset is hosted in the [ControlNet repo](https://huggingface.co/lllyasviel/ControlNet/blob/main/training/fill50k.zip). We re-uploaded it to be compatible with `datasets` [here](https://huggingface.co/datasets/fusing/fill50k). Note that `datasets` handles dataloading within the training script.

Our training examples use [Stable Diffusion 1.5](https://huggingface.co/runwayml/stable-diffusion-v1-5) because the original set of ControlNet models was trained from it. However, ControlNet can be trained to augment any Stable Diffusion compatible model (such as [CompVis/stable-diffusion-v1-4](https://huggingface.co/CompVis/stable-diffusion-v1-4) or [stabilityai/stable-diffusion-2-1](https://huggingface.co/stabilityai/stable-diffusion-2-1)).

## Training

Our training examples use two test conditioning images. They can be downloaded by running:

```sh
wget https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/controlnet_training/conditioning_image_1.png

wget https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/controlnet_training/conditioning_image_2.png
```

```bash
export MODEL_DIR="runwayml/stable-diffusion-v1-5"
export OUTPUT_DIR="path to save model"

accelerate launch train_controlnet.py \
  --pretrained_model_name_or_path=$MODEL_DIR \
  --output_dir=$OUTPUT_DIR \
  --dataset_name=fusing/fill50k \
  --resolution=512 \
  --learning_rate=1e-5 \
  --validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \
  --validation_prompt "red circle with blue background" "cyan circle with brown floral background" \
  --train_batch_size=4
```
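`--validation_image` and `--validation_prompt` each accept several values. The sketch below assumes they are matched positionally (first image with first prompt, and so on), which is how the command above lines them up. It uses a stand-alone `argparse` parser that models only these two flags; it is not the training script's own argument parser.

```python
import argparse

# Hypothetical stand-in parser: only the two multi-value validation flags are modeled.
parser = argparse.ArgumentParser()
parser.add_argument("--validation_image", nargs="+", default=[])
parser.add_argument("--validation_prompt", nargs="+", default=[])

# The same values as in the training command above.
args = parser.parse_args([
    "--validation_image", "./conditioning_image_1.png", "./conditioning_image_2.png",
    "--validation_prompt", "red circle with blue background",
    "cyan circle with brown floral background",
])

# Assumed positional pairing: the i-th conditioning image goes with the i-th prompt.
if len(args.validation_image) != len(args.validation_prompt):
    raise ValueError("expected one validation prompt per validation image")
pairs = list(zip(args.validation_image, args.validation_prompt))

for image_path, prompt in pairs:
    print(f"{image_path} -> {prompt!r}")
```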

This default configuration requires ~38 GB of VRAM.

By default, the training script logs outputs to TensorBoard. Pass `--report_to wandb` to use Weights & Biases.

Gradient accumulation with a smaller batch size can be used to reduce training requirements to ~20 GB of VRAM.

```bash
export MODEL_DIR="runwayml/stable-diffusion-v1-5"
export OUTPUT_DIR="path to save model"

accelerate launch train_controlnet.py \
  --pretrained_model_name_or_path=$MODEL_DIR \
  --output_dir=$OUTPUT_DIR \
  --dataset_name=fusing/fill50k \
  --resolution=512 \
  --learning_rate=1e-5 \
  --validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \
  --validation_prompt "red circle with blue background" "cyan circle with brown floral background" \
  --train_batch_size=1 \
  --gradient_accumulation_steps=4
```
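With `--train_batch_size=1 --gradient_accumulation_steps=4`, each optimizer update is meant to see the same four examples as one batch of four, while only one example has to be held in memory at a time. The toy check below illustrates that equivalence under a simplifying assumption: a one-parameter least-squares model stands in for the ControlNet loss. Scaling each micro-batch gradient by 1/4 and summing reproduces the full-batch gradient.

```python
def grad_mse(w: float, batch: list[tuple[float, float]]) -> float:
    """Gradient of mean((w * x - y) ** 2) over the batch with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


data = [(1.0, 2.0), (2.0, 3.5), (3.0, 7.0), (4.0, 7.5)]  # toy (x, y) pairs
w = 0.5
accumulation_steps = 4

# One batch of four examples (like --train_batch_size=4).
full_batch_grad = grad_mse(w, data)

# Four micro-batches of one example (like --train_batch_size=1 with
# --gradient_accumulation_steps=4): each gradient is scaled by
# 1/accumulation_steps and summed before a single optimizer step would be taken.
accumulated_grad = 0.0
for i in range(accumulation_steps):
    micro_batch = data[i : i + 1]
    accumulated_grad += grad_mse(w, micro_batch) / accumulation_steps

print(full_batch_grad, accumulated_grad)
```

The same reasoning gives the effective batch size: per-device batch size times accumulation steps, multiplied by the number of processes when training on several GPUs.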

## Example results

#### After 300 steps with batch size 8

_[Figure: conditioning images and generated samples for the prompts "red circle with blue background" and "cyan circle with brown floral background".]_

|

|

||

|

|

||

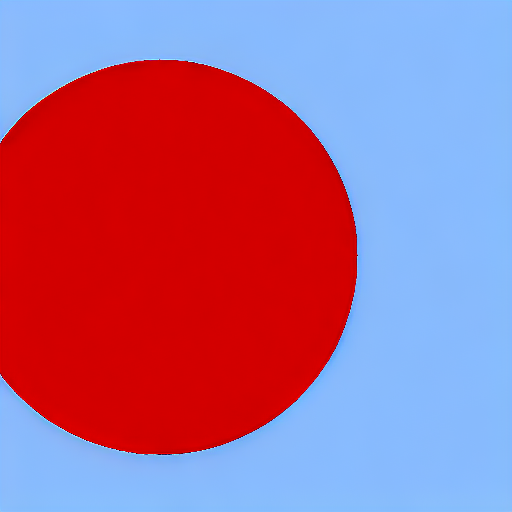

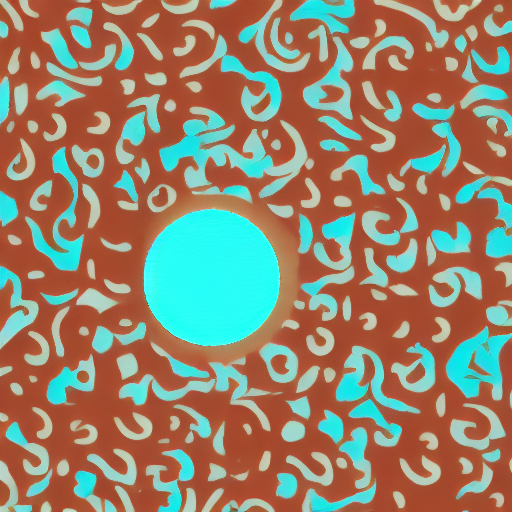

#### After 6000 steps with batch size 8

_[Figure: conditioning images and generated samples for the prompts "red circle with blue background" and "cyan circle with brown floral background".]_
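For scale, the number of training examples processed is roughly steps × batch size. A small sketch of that arithmetic, assuming fill50k contains 50,000 examples (suggested by its name; check the dataset card for the exact count):

```python
def samples_seen(steps: int, batch_size: int) -> int:
    """Total training examples processed after `steps` optimizer steps."""
    return steps * batch_size


DATASET_SIZE = 50_000  # assumption based on the dataset name "fill50k"

for steps in (300, 6000):
    seen = samples_seen(steps, batch_size=8)
    print(f"{steps} steps: {seen} examples, {seen / DATASET_SIZE:.3f} epochs")
```

Under that assumption, the 6000-step result corresponds to just under one pass over the dataset.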

## Training on a 16 GB GPU

Optimizations:
- Gradient checkpointing
- bitsandbytes' 8-bit optimizer

See the [bitsandbytes installation instructions](https://github.com/TimDettmers/bitsandbytes#requirements--installation).

```bash
export MODEL_DIR="runwayml/stable-diffusion-v1-5"
export OUTPUT_DIR="path to save model"

accelerate launch train_controlnet.py \
  --pretrained_model_name_or_path=$MODEL_DIR \
  --output_dir=$OUTPUT_DIR \
  --dataset_name=fusing/fill50k \
  --resolution=512 \
  --learning_rate=1e-5 \
  --validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \
  --validation_prompt "red circle with blue background" "cyan circle with brown floral background" \
  --train_batch_size=1 \
  --gradient_accumulation_steps=4 \
  --gradient_checkpointing \
  --use_8bit_adam
```
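A rough estimate of what `--use_8bit_adam` saves: Adam keeps two state values (first and second moment estimates) per trainable parameter, which is 8 bytes per parameter in fp32, while bitsandbytes' 8-bit Adam stores each state in one byte (ignoring its small quantization overhead). The parameter count below is a hypothetical placeholder, not a measured size of the ControlNet.

```python
def adam_state_bytes(num_params: int, bytes_per_state: int, states_per_param: int = 2) -> int:
    """Memory for Adam's per-parameter state (first and second moments)."""
    return num_params * states_per_param * bytes_per_state


GIB = 1024 ** 3
num_params = 360_000_000  # hypothetical trainable-parameter count, for illustration only

fp32_states = adam_state_bytes(num_params, bytes_per_state=4)  # 32-bit states
int8_states = adam_state_bytes(num_params, bytes_per_state=1)  # 8-bit states

print(f"fp32 Adam states:  {fp32_states / GIB:.2f} GiB")
print(f"8-bit Adam states: {int8_states / GIB:.2f} GiB")
```

Whatever the exact parameter count, the optimizer state shrinks by about 4x; weights, gradients, and activations are unaffected by this flag, which is why it is combined with gradient checkpointing.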

## Training on a 12 GB GPU

Optimizations:
- Gradient checkpointing
- bitsandbytes' 8-bit optimizer
- xformers
- Set gradients to `None`

```bash
export MODEL_DIR="runwayml/stable-diffusion-v1-5"
export OUTPUT_DIR="path to save model"

accelerate launch train_controlnet.py \
  --pretrained_model_name_or_path=$MODEL_DIR \
  --output_dir=$OUTPUT_DIR \
  --dataset_name=fusing/fill50k \
  --resolution=512 \
  --learning_rate=1e-5 \
  --validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \
  --validation_prompt "red circle with blue background" "cyan circle with brown floral background" \
  --train_batch_size=1 \
  --gradient_accumulation_steps=4 \
  --gradient_checkpointing \
  --use_8bit_adam \
  --enable_xformers_memory_efficient_attention \
  --set_grads_to_none
```

When using `--enable_xformers_memory_efficient_attention`, make sure `xformers` is installed (`pip install xformers`).
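The memory tiers above differ only in which flags are appended to a shared base command. The sketch below assembles those argument lists in Python, for example to hand to `subprocess.run`. The `build_command` helper, the tier names, and the `controlnet-out` output directory are hypothetical; the flags are the ones used in this README's commands. The DeepSpeed tier is left out because it also depends on the `accelerate config` setup.

```python
import shlex


def base_args(model_dir: str, output_dir: str) -> list[str]:
    """Flags shared by every training command in this README."""
    return [
        "accelerate", "launch", "train_controlnet.py",
        f"--pretrained_model_name_or_path={model_dir}",
        f"--output_dir={output_dir}",
        "--dataset_name=fusing/fill50k",
        "--resolution=512",
        "--learning_rate=1e-5",
        "--validation_image", "./conditioning_image_1.png", "./conditioning_image_2.png",
        "--validation_prompt", "red circle with blue background",
        "cyan circle with brown floral background",
    ]


# Each tier adds flags on top of the previous one, mirroring the README sections.
ACCUMULATION = ["--train_batch_size=1", "--gradient_accumulation_steps=4"]
GPU_16GB = ACCUMULATION + ["--gradient_checkpointing", "--use_8bit_adam"]
GPU_12GB = GPU_16GB + ["--enable_xformers_memory_efficient_attention", "--set_grads_to_none"]

TIER_FLAGS = {
    "default": ["--train_batch_size=4"],
    "accumulation": ACCUMULATION,
    "16gb": GPU_16GB,
    "12gb": GPU_12GB,
}


def build_command(tier: str, model_dir: str, output_dir: str) -> list[str]:
    """Return the argument list for one tier, e.g. for subprocess.run(cmd, check=True)."""
    return base_args(model_dir, output_dir) + TIER_FLAGS[tier]


cmd = build_command("12gb", "runwayml/stable-diffusion-v1-5", "controlnet-out")
print(shlex.join(cmd))  # shell-quoted form, for copying into a terminal
```

Passing a list rather than a single shell string avoids quoting problems with the multi-word validation prompts.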

## Training on an 8 GB GPU

We have not exhaustively tested DeepSpeed support for ControlNet. While the configuration does save memory, we have not confirmed that it trains successfully. You will very likely have to change the config to get a successful training run.

Optimizations:
- Gradient checkpointing
- xformers
- Set gradients to `None`
- DeepSpeed stage 2 with parameter and optimizer offloading
- fp16 mixed precision

[DeepSpeed](https://www.deepspeed.ai/) can offload tensors from VRAM to either CPU or NVMe. This requires significantly more RAM (about 25 GB).

Use `accelerate config` to enable DeepSpeed stage 2.

The relevant parts of the resulting accelerate config file are:

```yaml
compute_environment: LOCAL_MACHINE
deepspeed_config:
  gradient_accumulation_steps: 4
  offload_optimizer_device: cpu
  offload_param_device: cpu
  zero3_init_flag: false
  zero_stage: 2
distributed_type: DEEPSPEED
```

See the [Accelerate DeepSpeed documentation](https://huggingface.co/docs/accelerate/usage_guides/deepspeed) for more configuration options.

Changing the default Adam optimizer to DeepSpeed's Adam (`deepspeed.ops.adam.DeepSpeedCPUAdam`) gives a substantial speedup, but it requires a CUDA toolchain with the same version as PyTorch. The 8-bit optimizer does not seem to be compatible with DeepSpeed at the moment.

```bash
export MODEL_DIR="runwayml/stable-diffusion-v1-5"
export OUTPUT_DIR="path to save model"

accelerate launch train_controlnet.py \
  --pretrained_model_name_or_path=$MODEL_DIR \
  --output_dir=$OUTPUT_DIR \
  --dataset_name=fusing/fill50k \
  --resolution=512 \
  --validation_image "./conditioning_image_1.png" "./conditioning_image_2.png" \
  --validation_prompt "red circle with blue background" "cyan circle with brown floral background" \
  --train_batch_size=1 \
  --gradient_accumulation_steps=4 \
  --gradient_checkpointing \
  --enable_xformers_memory_efficient_attention \
  --set_grads_to_none \
  --mixed_precision fp16
```

## Performing inference with the trained ControlNet

The trained model can be run with the same pipeline as the original ControlNet models, passing in the newly trained ControlNet. Set `base_model_path` and `controlnet_path` to the values you passed as `--pretrained_model_name_or_path` and `--output_dir`, respectively, in the training script.

```py
from diffusers import StableDiffusionControlNetPipeline, ControlNetModel, UniPCMultistepScheduler
from diffusers.utils import load_image
import torch

base_model_path = "path to model"
controlnet_path = "path to controlnet"

controlnet = ControlNetModel.from_pretrained(controlnet_path, torch_dtype=torch.float16)
pipe = StableDiffusionControlNetPipeline.from_pretrained(
    base_model_path, controlnet=controlnet, torch_dtype=torch.float16
)

# speed up diffusion process with faster scheduler and memory optimization
pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)
# remove following line if xformers is not installed
pipe.enable_xformers_memory_efficient_attention()

pipe.enable_model_cpu_offload()

control_image = load_image("./conditioning_image_1.png")
prompt = "pale golden rod circle with old lace background"

# generate image
generator = torch.manual_seed(0)
image = pipe(
    prompt, num_inference_steps=20, generator=generator, image=control_image
).images[0]

image.save("./output.png")
```


```diff
@@ -0,0 +1,6 @@
+accelerate
+torchvision
+transformers>=4.25.1
+ftfy
+tensorboard
+datasets
```
