
fix(derived_code_mappings): Do not abort if a repo fails to process #44126


```diff
@@ -97,38 +97,22 @@ def get_rate_limit(self, specific_resource: str = "core") -> GithubRateLimitInfo
 # https://docs.github.com/en/rest/git/trees#get-a-tree
 def get_tree(self, repo_full_name: str, tree_sha: str) -> JSONData:
-    tree: JSONData = {}
-    try:
-        # We do not cache this call since it is a rather large object
-        contents: Dict[str, Any] = self.get(
-            f"/repos/{repo_full_name}/git/trees/{tree_sha}",
-            # Will cause all objects or subtrees referenced by the tree specified in :tree_sha
-            params={"recursive": 1},
-        )
+    # We do not cache this call since it is a rather large object
+    contents: Dict[str, Any] = self.get(
+        f"/repos/{repo_full_name}/git/trees/{tree_sha}",
+        # Will cause all objects or subtrees referenced by the tree specified in :tree_sha
+        params={"recursive": 1},
+    )
-        # If truncated is true in the response then the number of items in the tree array exceeded our maximum limit.
-        # If you need to fetch more items, use the non-recursive method of fetching trees, and fetch one sub-tree at a time.
-        # Note: The limit for the tree array is 100,000 entries with a maximum size of 7 MB when using the recursive parameter.
-        # XXX: We will need to improve this by iterating through trees without using the recursive parameter
-        if contents.get("truncated"):
-            # e.g. getsentry/DataForThePeople
-            logger.warning(
-                f"The tree for {repo_full_name} has been truncated. Use a different approach for retrieving contents of tree."
-            )
+    # If truncated is true in the response then the number of items in the tree array exceeded our maximum limit.
+    # If you need to fetch more items, use the non-recursive method of fetching trees, and fetch one sub-tree at a time.
+    # Note: The limit for the tree array is 100,000 entries with a maximum size of 7 MB when using the recursive parameter.
+    # XXX: We will need to improve this by iterating through trees without using the recursive parameter
+    if contents.get("truncated"):
+        # e.g. getsentry/DataForThePeople
+        logger.warning(
+            f"The tree for {repo_full_name} has been truncated. Use a different approach for retrieving contents of tree."
+        )
-        tree = contents["tree"]
-    except ApiError as error:
```
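The comments in `get_tree` suggest that, when GitHub reports `truncated`, the tree should be walked without `recursive=1`, one sub-tree at a time. A minimal sketch of that traversal, assuming a hypothetical `fetch_tree(sha)` callable that returns the decoded JSON of a non-recursive "Get a tree" request (a dict whose `tree` list holds entries with `path`, `type` and `sha`). It is not code from this PR, and the in-memory `FAKE_TREES` data is invented for illustration:

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical callable: given a tree SHA, returns the decoded JSON of a
# non-recursive GET /repos/{owner}/{repo}/git/trees/{tree_sha} request.
FetchTree = Callable[[str], Dict[str, Any]]


def list_files(fetch_tree: FetchTree, root_sha: str) -> List[str]:
    """Collect every file path by fetching one sub-tree at a time."""
    files: List[str] = []
    # Work list of (tree SHA, path prefix of that tree inside the repo).
    pending: List[Tuple[str, str]] = [(root_sha, "")]
    while pending:
        sha, prefix = pending.pop()
        # Without recursive=1, entry paths are relative to the tree being listed.
        for entry in fetch_tree(sha)["tree"]:
            path = prefix + entry["path"]
            if entry["type"] == "tree":
                pending.append((entry["sha"], path + "/"))
            elif entry["type"] == "blob":
                files.append(path)
            # Other entry types (e.g. "commit" for submodules) are skipped.
    return sorted(files)


# Fake, in-memory responses standing in for the GitHub API.
FAKE_TREES: Dict[str, Dict[str, Any]] = {
    "root": {"tree": [
        {"path": "README.md", "type": "blob", "sha": "b1"},
        {"path": "src", "type": "tree", "sha": "t1"},
    ]},
    "t1": {"tree": [
        {"path": "foo.py", "type": "blob", "sha": "b2"},
        {"path": "sentry", "type": "tree", "sha": "t2"},
    ]},
    "t2": {"tree": [{"path": "app.py", "type": "blob", "sha": "b3"}]},
}

print(list_files(FAKE_TREES.__getitem__, "root"))
# ['README.md', 'src/foo.py', 'src/sentry/app.py']
```

The trade-off is request count: every sub-tree costs one API call, so on a large repository this approach consumes far more of the rate limit than a single recursive call.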

```python
try:
    # The Github API rate limit is reset every hour
    # Spread the expiration of the cache of each repo across the day
    trees[repo_full_name] = self._populate_tree(
        repo_info, only_use_cache, (3600 * 24) + (3600 * (index % 24))
    )
except ApiError as error:
    process_error(error, extra)
except Exception:
    # Report for investigation but do not stop processing
    logger.exception(
```
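The cache timeout passed to `_populate_tree` is `(3600 * 24) + (3600 * (index % 24))`: one day plus 0 to 23 extra hours depending on the repo's position, so the cached trees do not all expire at the same moment. A small sketch of that arithmetic; the `cache_ttl` helper name is hypothetical:

```python
SECONDS_PER_HOUR = 3600


def cache_ttl(index: int) -> int:
    """Cache timeout (seconds) for the repo at position ``index``.

    Mirrors (3600 * 24) + (3600 * (index % 24)): one day plus 0 to 23 extra
    hours, so cached trees expire at staggered times instead of all at once.
    """
    return (SECONDS_PER_HOUR * 24) + (SECONDS_PER_HOUR * (index % 24))


for i in (0, 1, 23, 24, 25):
    print(i, cache_ttl(i) // SECONDS_PER_HOUR, "hours")
# 0 24 hours
# 1 25 hours
# 23 47 hours
# 24 24 hours
# 25 25 hours
```

Because of the modulo, the pattern repeats every 24 repos, so an organization with many repos still has at most 1/24 of its cached trees expiring in any given hour (assuming an even spread of indices).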


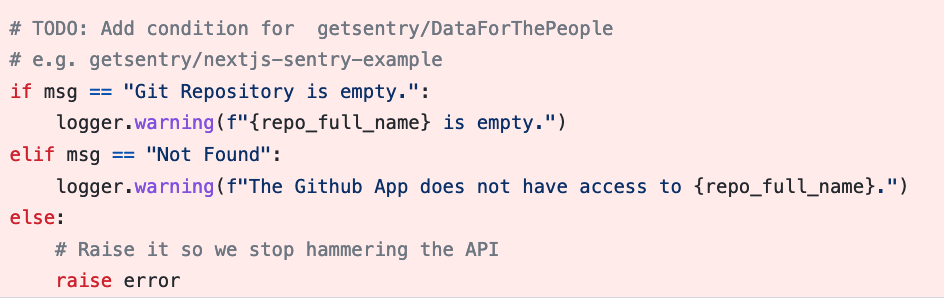

Here we raise any unidentified error, which would cause the processing of all repos to abort.


Removing this except block and letting the task in `derive_code_mappings.py` handle the error instead:

https://github.com/getsentry/sentry/blob/67327c3e2b5b59e7afc61bc26330ce987202b945/src/sentry/tasks/derive_code_mappings.py#L76-L90


This block basically comes from `get_tree`. We now handle errors here so that we keep processing the remaining repositories rather than aborting.


If a call to `_populate_tree` raises an error, we now report it to Sentry and continue processing instead of aborting.
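The pattern discussed in these comments (catch a failure for one repo, report it, move on to the next) can be sketched in isolation. `fetch` stands in for `_populate_tree` and `KnownApiError` for `ApiError`; both are hypothetical stand-ins, and `fake_fetch` is invented test data:

```python
import logging
from typing import Callable, Dict, Iterable, List

logger = logging.getLogger(__name__)


class KnownApiError(Exception):
    """Hypothetical stand-in for ApiError, which has dedicated handling."""


def process_repos(
    repos: Iterable[str], fetch: Callable[[str], List[str]]
) -> Dict[str, List[str]]:
    """Fetch every repo, skipping (but reporting) the ones that fail."""
    results: Dict[str, List[str]] = {}
    for repo in repos:
        try:
            results[repo] = fetch(repo)
        except KnownApiError:
            # Known API errors get targeted handling (process_error in the PR).
            logger.warning("API error while processing %s", repo)
        except Exception:
            # Anything else is logged with its traceback, then we move on
            # to the next repo instead of aborting the whole run.
            logger.exception("Unexpected error while processing %s", repo)
    return results


def fake_fetch(repo: str) -> List[str]:
    if repo == "org/broken":
        raise ValueError("boom")
    return [f"{repo}/README.md"]


result = process_repos(["org/a", "org/broken", "org/b"], fake_fetch)
print(result)
# {'org/a': ['org/a/README.md'], 'org/b': ['org/b/README.md']}
```

Ordering matters: the specific `except` clause must come before the broad `except Exception`, otherwise known errors would fall into the generic branch.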

```diff
@@ -609,13 +609,11 @@ def get_installation_helper(self):
 def test_get_trees_for_org_works(self):
     """Fetch the tree representation of a repo"""
     installation = self.get_installation_helper()
     cache.clear()
```

Member

Author

I noticed that the next test would sometimes fail because the cache side effects of this test would affect the next. I tried to add this as part of

```diff
     self.set_rate_limit(MINIMUM_REQUESTS + 50)
     expected_trees = {
         "Test-Organization/bar": RepoTree(Repo("Test-Organization/bar", "main"), []),
         "Test-Organization/baz": RepoTree(Repo("Test-Organization/baz", "master"), []),
         "Test-Organization/foo": RepoTree(
-            Repo("Test-Organization/foo", "master"),
-            ["src/sentry/api/endpoints/auth_login.py"],
+            Repo("Test-Organization/foo", "master"), ["src/sentry/api/endpoints/auth_login.py"]
         ),
         "Test-Organization/xyz": RepoTree(
             Repo("Test-Organization/xyz", "master"), ["src/foo.py"]
```
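The author's comment about one test's cache side effects leaking into the next is the usual shared-state problem; these tests address it with `cache.clear()`. A generic sketch of the same idea with `unittest`, where a module-level dict stands in for the shared cache (`CACHE`, `get_files` and `TreesTest` are all hypothetical names):

```python
import unittest
from typing import Dict, List

# Hypothetical stand-in for a cache shared by every test in the process.
CACHE: Dict[str, List[str]] = {}


def get_files(repo: str) -> List[str]:
    """Return cached files, or 'fetch' and cache them on a miss."""
    if repo not in CACHE:
        CACHE[repo] = [f"{repo}/src/foo.py"]
    return CACHE[repo]


class TreesTest(unittest.TestCase):
    def setUp(self) -> None:
        # Start every test from an empty cache so earlier tests cannot leak state.
        CACHE.clear()

    def test_populates_cache(self) -> None:
        self.assertEqual(get_files("org/xyz"), ["org/xyz/src/foo.py"])
        self.assertIn("org/xyz", CACHE)

    def test_starts_empty(self) -> None:
        # Without setUp this would fail, since test_populates_cache runs first.
        self.assertEqual(CACHE, {})


# Run the suite in-process (methods run in alphabetical order).
result = unittest.TextTestRunner(verbosity=0).run(
    unittest.defaultTestLoader.loadTestsFromTestCase(TreesTest)
)
```

Clearing in `setUp` protects every test in the class, whereas a `cache.clear()` inside a single test only protects that test.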

```diff
@@ -646,6 +644,8 @@ def test_get_trees_for_org_works(self):
 def test_get_trees_for_org_prevent_exhaustion_some_repos(self):
     """Some repos will hit the network but the rest will grab from the cache."""
     gh_org = "Test-Organization"
+    repos_key = "githubtrees:repositories:Test-Organization"
+    cache.clear()
     installation = self.get_installation_helper()
     expected_trees = {
         f"{gh_org}/xyz": RepoTree(Repo(f"{gh_org}/xyz", "master"), ["src/foo.py"]),
```

```diff
@@ -657,8 +657,15 @@ def test_get_trees_for_org_prevent_exhaustion_some_repos(self):
     with patch("sentry.integrations.github.client.MINIMUM_REQUESTS", new=5, autospec=False):
         # We start with one request left before reaching the minimum remaining requests floor
         self.set_rate_limit(remaining=6)
+        assert cache.get(repos_key) is None
```

Member

Author

This is a good enough check to confirm that the cache was cleared.

```diff
         trees = installation.get_trees_for_org()
         assert trees == expected_trees  # xyz will have files but not foo
+        assert cache.get(repos_key) == [
+            {"full_name": "Test-Organization/xyz", "default_branch": "master"},
+            {"full_name": "Test-Organization/foo", "default_branch": "master"},
+            {"full_name": "Test-Organization/bar", "default_branch": "main"},
+            {"full_name": "Test-Organization/baz", "default_branch": "master"},
+        ]

         # Another call should not make us lose the files for xyz
         self.set_rate_limit(remaining=5)
```
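The test comments describe a request budget: with `MINIMUM_REQUESTS` patched to 5 and 6 requests remaining, only one repo (xyz) may hit the network before the floor is reached, and the rest are served from the cache. The following is a simplified, hypothetical model of that behavior, not Sentry's `get_trees_for_org`; `plan_fetches` and its parameters are invented for illustration:

```python
from typing import Dict, List


def plan_fetches(repos: List[str], remaining: int, minimum_requests: int) -> Dict[str, bool]:
    """Map each repo to True (fetch from the network) or False (cache only).

    Network requests are allowed while the remaining quota stays above the
    floor; each fetch uses up one request.
    """
    plan: Dict[str, bool] = {}
    for repo in repos:
        if remaining > minimum_requests:
            plan[repo] = True
            remaining -= 1
        else:
            plan[repo] = False
    return plan


repos = ["xyz", "foo", "bar", "baz"]
print(plan_fetches(repos, remaining=6, minimum_requests=5))
# {'xyz': True, 'foo': False, 'bar': False, 'baz': False}
print(plan_fetches(repos, remaining=20, minimum_requests=5))
# {'xyz': True, 'foo': True, 'bar': True, 'baz': True}
```

Under this model, `remaining=5` sends every repo to the cache, which matches the test's expectation that a second call does not lose the files already cached for xyz.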

```diff
@@ -668,8 +675,10 @@ def test_get_trees_for_org_prevent_exhaustion_some_repos(self):
         # We reset the remaining values
         self.set_rate_limit(remaining=20)
         trees = installation.get_trees_for_org()
-        # Now that the rate limit is reset we should get files for foo
-        expected_trees[f"{gh_org}/foo"] = RepoTree(
-            Repo(f"{gh_org}/foo", "master"), ["src/sentry/api/endpoints/auth_login.py"]
-        )
-        assert trees == expected_trees
+        assert trees == {
+            f"{gh_org}/xyz": RepoTree(Repo(f"{gh_org}/xyz", "master"), ["src/foo.py"]),
+            # Now that the rate limit is reset we should get files for foo
+            f"{gh_org}/foo": RepoTree(
+                Repo(f"{gh_org}/foo", "master"), ["src/sentry/api/endpoints/auth_login.py"]
+            ),
+        }
```


If you use "Hide whitespace" this PR is easier to review.