Description

Fable port

Issue #125 | Created by @pkese | 2020-06-02 20:00:16 UTC | post-1.0

This is somewhat of an elevator pitch referring to the profit meme in DiffSharp/DiffSharp#69 (comment)

My proposal for the ???? part is to consider Fable integration as a possible driver for that.

Why?

When I was learning neural networks a few years ago, I remember playing a lot with Andrej Karpathy's https://cs.stanford.edu/people/karpathy/convnetjs/ deep-learning library in JavaScript.

The main reasons I spent time playing with slow JavaScript backpropagation rather than training neural networks in Python on a real Nvidia GPU are:

a) it's a great learning and teaching tool,

b) it's extremely accessible (all you need is a browser, no install necessary),

c) and most importantly, it's interactive and extremely involving.

Another, more recent argument is that GPUs are now also accessible in browsers through WebGL.

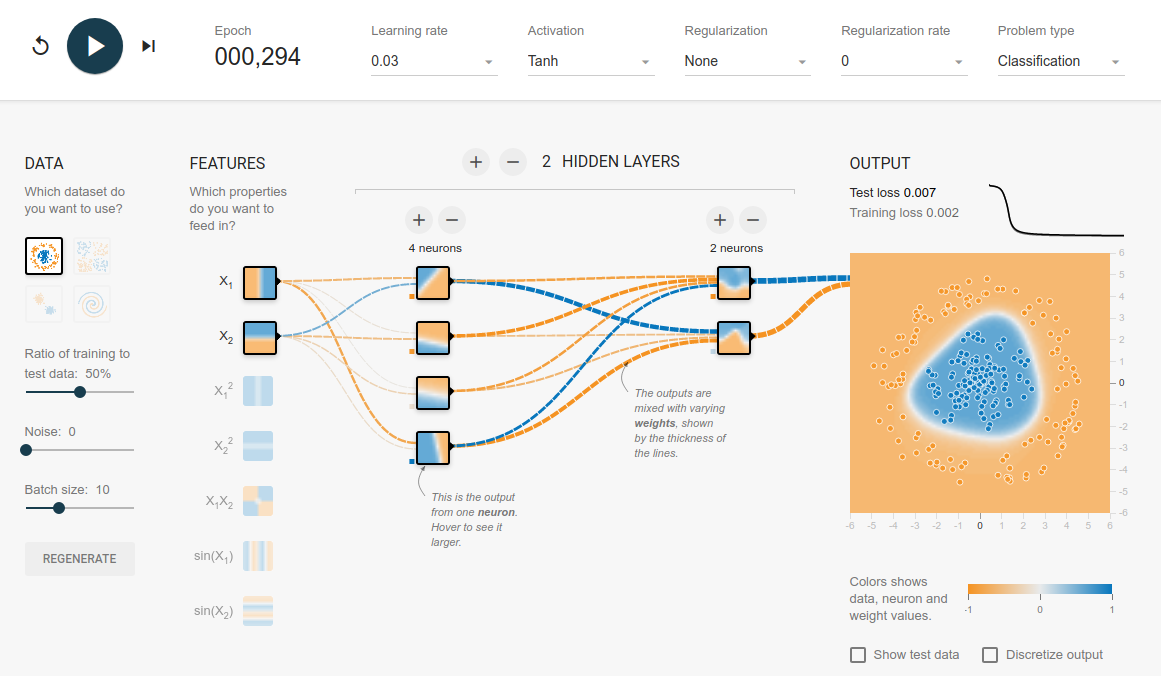

By far the coolest thing is that in the browser the state of a neural network can easily be visualized in real time, which makes it very addictive: you change some parameters, choose what to visualize, press Run and see the effects of your changes as things progress... Then rinse and repeat. It's easy to spend heaps of time having fun with that.

Scroll down for some images...

Rendering such images takes quite some effort in Python and you still don't see them as things progress, whereas in the browser all of this is accessible through a few lines of code. You can easily pick any layer of the neural network to visualize. In real time.

While JS frameworks like TensorFlow.js do exist, F# is in a unique position to target both browser and backend with the same language (TensorFlow has different implementations for Python and JS). Anything you learn or write in the browser is transferable to the backend.

And vice versa. Models trained on the backend are easily transferable to the frontend, which also makes it a good business proposition.

And if you squint, it looks like Python.

So the idea is to use one of the JS WebGL tensor libraries as an additional DiffSharp backend. I don't think that would be hard to do (I'd be willing to invest time into it).

The bigger obstacle is that Fable lacks support for multidimensional arrays. I've tried to look into that already, but it will require someone with more knowledge of the Fable compiler internals to work on that.

So what's really missing is to find a way to somehow invite Fable people into this story and ask them to help us out with multidimensional arrays.

.

These are sample images. This is what you don't get to see in real time if you train your networks in Python.

https://blog.fastforwardlabs.com/2016/08/12/introducing-variational-autoencoders-in-prose-and.html

http://kennycason.com/posts/2018-05-04-deep-autoencoder-kotlin.html

Comment by @gbaydin | 2020-06-02 23:39:01 UTC

@pkese thank you for this suggestion. It would be good to clarify a few things.

I'm not familiar with Fable, but as I understand, it's an F#-to-JavaScript compiler that allows one to write web applications with F#. Please correct me if I'm wrong.

I think it would be really cool to have F# web demos and projects using DiffSharp that visualize how some standard machine learning techniques (like training a neural network with backpropagation) work. If my understanding of what Fable is is correct, the reference backend in DiffSharp (a raw tensor library implemented entirely in F# without any external dependencies) would already allow this type of application when used with Fable. For small-scale examples like training a VAE on MNIST digits (the first animation you shared), the reference backend does a surprisingly good job performance-wise. One could start with that as a proof of concept, then add a WebGL backend to make it more performant.

Note that the existing reference backend in DiffSharp is already the F# equivalent to https://cs.stanford.edu/people/karpathy/convnetjs/. convnetjs is a bare-bones JavaScript library implementing 3d tensors and basic things like convolutions and matrix-multiplication in JavaScript without any BLAS or GPU dependencies.

These types of applications are awesome for introducing machine learning to people by showing how the internals work. I can imagine things like https://playground.tensorflow.org/ being implemented with Fable (that type of thing would definitely work very well with the reference backend due to being small-scale). I think this would be a very good starting point to establish the setup:

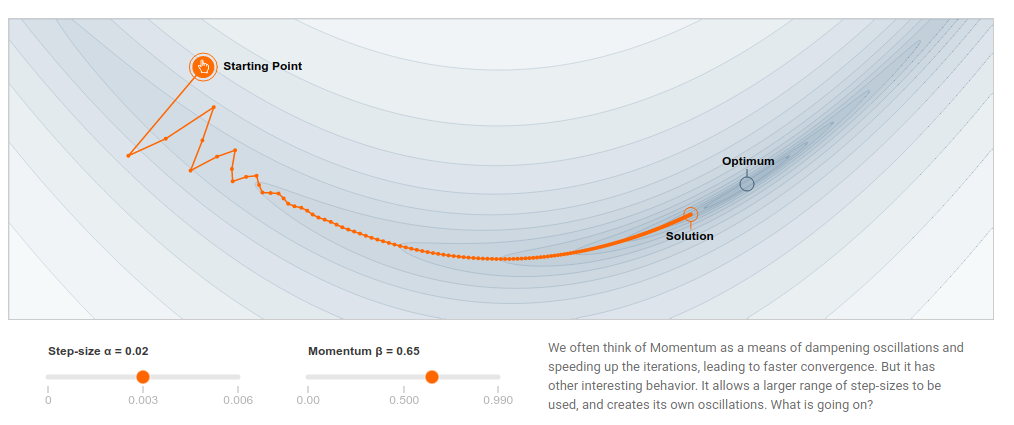

One could also do things like the interactive work people share in distill.pub, such as this 2d optimization demo: https://distill.pub/2017/momentum/ This is definitely something that would work very well with the existing reference backend.

With a WebGL backend, more advanced and heavier things can be done.

Comment by @gbaydin | 2020-06-02 23:46:23 UTC

> Rendering such images takes quite some effort in Python and you still don't see them as things progress

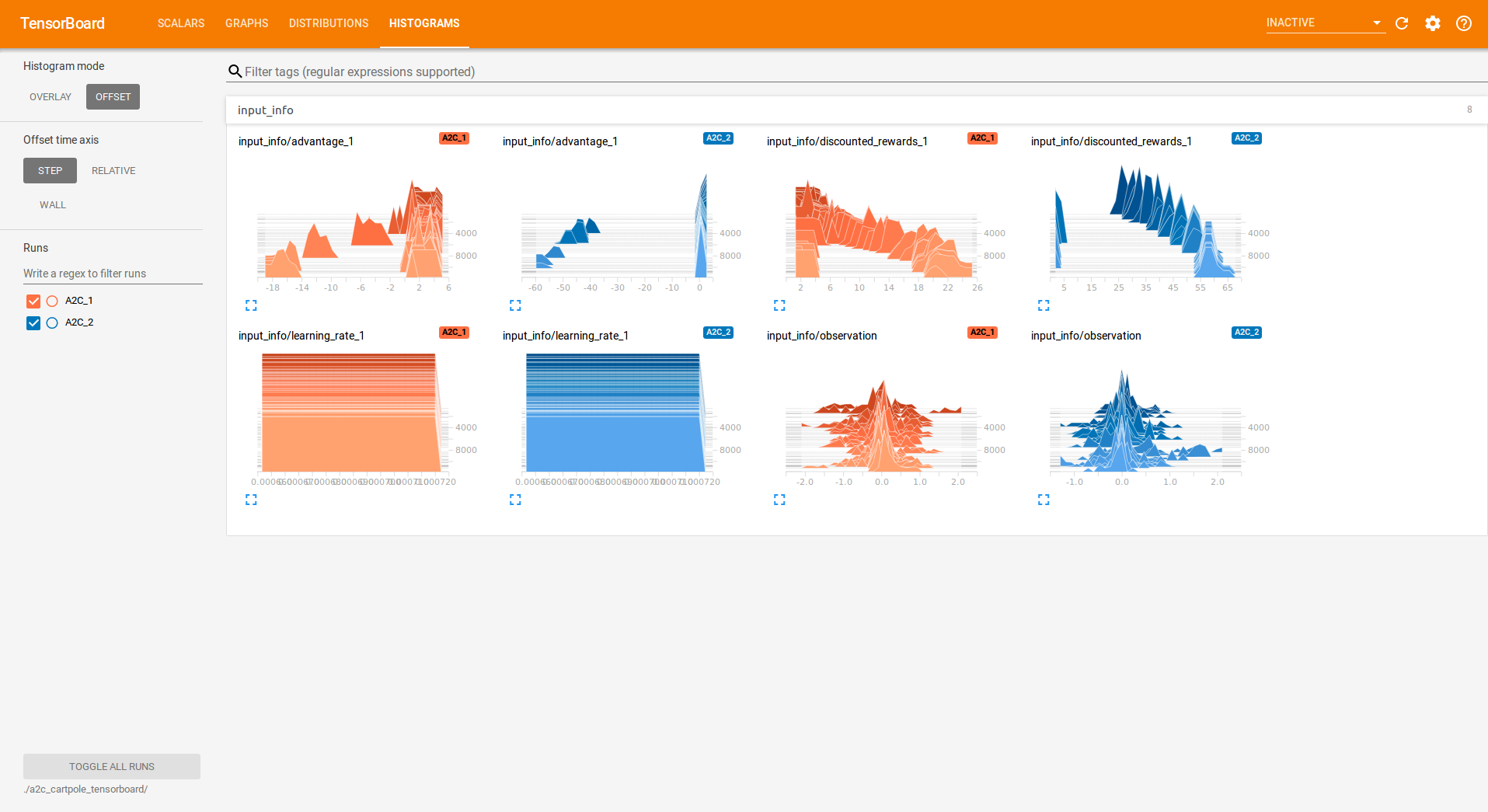

I don't agree with this comment. People regularly use visualizations in Python ML work: custom plotting code (e.g., with matplotlib primitives), developing things in Jupyter (for this part the latest developments with dotnet interactive and F# are really promising), or connecting their code to toolkits like https://www.tensorflow.org/tensorboard/ and https://github.com/facebookresearch/visdom.

By the way, another very cool project would be to write an interface from DiffSharp to TensorBoard or Visdom. (For example, PyTorch recently added TensorBoard integration: https://pytorch.org/docs/stable/tensorboard.html)

Adding support for this would involve writing some tensor serialization/networking code to connect to the API provided by these systems. Then DiffSharp users could do live visualizations of their ML pipelines during training. I think it would be very straightforward to implement the necessary parts in F#.

Comment by @dsyme | 2020-06-03 11:54:33 UTC

Some technical notes regarding Fable.

- Fable or WebSharper cross-compile would be somewhat intrusive, both in the code and in the testing.

- Because of this, the best approach technically would probably be for someone to maintain a downstream fork or branch (it doesn't much matter which) which cross-compiles the Reference implementation and testing, with the Torch backend removed (a "haircut"), under the full knowledge that there will be considerable churn in the core implementation.

- That's the way that the Fable port of FSharp.Compiler.Service was created and is maintained at the moment, and indeed FCS was itself created as a fork+haircut of the core F# tooling.

- This can be an effective maintenance strategy but requires regular integration, e.g. this. It's sometimes possible to automate this integration.

- Normally it's not realistic to integrate the necessary changes back even under `#if` because of the engineering complexity induced at this stage.

- @gbaydin and myself aren't at the point where we could do this, but if @pkese or someone else would like to work on it (as a downstream fork+haircut) I'm sure we can cooperate in a similar way to what happened for FCS.

Comment by @pkese | 2020-06-03 13:41:35 UTC

I agree that a downstream fork would be the right approach.

And it was not my intention to put any additional burden on anyone's shoulders. As I said, I'd be willing to work on that myself (especially now that I had the unexpected opportunity to get familiar with the DiffSharp codebase yesterday under Don's mentorship 😉).

The really difficult part is the missing multidimensional array implementation in the Fable compiler, which I'm not sure how to approach. I'll try to ask the Fable people if they could offer some help.

Comment by @gbaydin | 2020-06-03 15:04:51 UTC

> The really difficult part is the missing multidimensional array implementation in the Fable compiler, which I'm not sure how to approach. I'll try to ask the Fable people if they could offer some help.

Perhaps you wouldn't need a multidimensional array implementation in Fable, if they already support 1d arrays. The DiffSharp reference backend implements multidimensional arrays (tensors) using simple arrays (like a 1d data array and a 1d shape array). The code does use some 2d arrays in parts like slicing, however.
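To make the 1d-data-plus-1d-shape idea concrete, here is a minimal sketch in Python. It is not DiffSharp's actual code: the `FlatTensor` class, its method names, and the row-major layout are all assumptions chosen for illustration. It only shows that indexing an N-d tensor needs nothing beyond two flat arrays.

```python
from math import prod


class FlatTensor:
    """Hypothetical N-d tensor stored as a 1d data list plus a 1d shape list."""

    def __init__(self, data, shape):
        if len(data) != prod(shape):
            raise ValueError("data length does not match shape")
        self.data = list(data)
        self.shape = list(shape)
        # Assumed row-major layout: the last dimension varies fastest.
        self.strides = [1] * len(self.shape)
        for i in range(len(self.shape) - 2, -1, -1):
            self.strides[i] = self.strides[i + 1] * self.shape[i + 1]

    def flat_index(self, index):
        """Map a multidimensional index to an offset into the 1d data list."""
        if len(index) != len(self.shape):
            raise IndexError("wrong number of indices")
        offset = 0
        for i, (idx, dim) in enumerate(zip(index, self.shape)):
            if not 0 <= idx < dim:
                raise IndexError("index out of range")
            offset += idx * self.strides[i]
        return offset

    def get(self, *index):
        return self.data[self.flat_index(index)]

    def set(self, value, *index):
        self.data[self.flat_index(index)] = value


# The 2x3 matrix [[0, 1, 2], [3, 4, 5]], stored without any 2d array.
t = FlatTensor(range(6), [2, 3])
```

With this layout, `t.get(1, 2)` reads offset `1 * 3 + 2` of the flat list. Since only 1d arrays are involved, the same approach should carry over to any target that lacks native multidimensional arrays.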