-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-40834][SQL] Use SparkListenerSQLExecutionEnd to track final SQL status in UI #38302

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

not sure if we need to expose the full error stack

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

how do we report error message before this PR?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

two use cases:

- live event listener

User can get the original exception fromQueryExecutionListener - history event

User can not get exception fromSparkListenerSQLExecutionEnd. In general, the error info can be catched from stage events

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

why do we need to add error to SparkListenerSQLExecutionEnd now?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

To clarify, I think adding a isFailed flag should be sufficient, but since we need to change the event, it's better to add error message which is better. Do I understand it correctly?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yes, right. As we are here, I think error msg has more meaningful than a flag, both of them can work though.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

shall we call SparkThrowableHelper.getMessage so that users can get the JSON format error message via config? cc @MaxGekk

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

or always use JSON format as this will be stored in the event log.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

here we catch all Throwable, how about this ?

ex.map {

case e: SparkThrowable =>

SparkThrowableHelper.getMessage(e, ErrorMessageFormat.STANDARD)

case e =>

// unexpected behavior

s"${e.getClass.getCanonicalName}: ${e.getMessage}"

})There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can we make s"${e.getClass.getCanonicalName}: ${e.getMessage}" JSON format as well? e.g. the java class name can be the error class.

|

cc @gengliangwang @cloud-fan @HyukjinKwon thank you |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@cloud-fan this follows the standard error msg json format

|

+CC @shardulm94, @thejdeep |

cloud-fan

left a comment

cloud-fan

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM. cc @gengliangwang @MaxGekk

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I find this \"messageParameters\":{\"message\":\"test\"} odd...

Shouldn't it just be an error message?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I just follow the style of error json format, so we can keep consistent with SparkThrowable. We'd expect all java throwables are wrapped by SparkThrowable so we will never go into here.

|

@gengliangwang @cloud-fan do you have any concerns about this pr ? |

|

thanks, merging to master! |

| case class SparkListenerSQLExecutionEnd( | ||

| executionId: Long, | ||

| time: Long, | ||

| errorMessage: Option[String] = None) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thinking about this more, I think we should use Some("") to indicate no error, as None may mean event logs produced by old version of Spark that don't have this error message field. @ulysses-you

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I made a followup: #38747

| case class SparkListenerSQLExecutionEnd( | ||

| executionId: Long, | ||

| time: Long, | ||

| errorMessage: Option[String] = None) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Doesn't this break binary compatibility? I think it should have overridden constructor.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is not a public class. It generates JSON string where backward compatibility matters, and this PR added tests for it.

### What changes were proposed in this pull request? This is a followup of #38302 . For events generated by old versions of Spark, which do not have the new `errorMessage` field, we should use the old way to detect query execution status (failed or not). This PR also adds a UI test for the expected behavior. ### Why are the changes needed? backward compatibility ### Does this PR introduce _any_ user-facing change? no ### How was this patch tested? new tests Closes #38747 from cloud-fan/ui. Authored-by: Wenchen Fan <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

…L status in UI

### What changes were proposed in this pull request?

Use event `SparkListenerSQLExecutionEnd` to track if the SQL/DataFrame is completion instead of using job status.

### Why are the changes needed?

The SQL may succeed with some failed jobs. For example, a inner join with one empty side and one large side, the plan would finish and the large side is still running.

### Does this PR introduce _any_ user-facing change?

yes, correct the sql status in UI

### How was this patch tested?

add test for backward compatibility and manually test

```sql

CREATE TABLE t1 (c1 int) USING PARQUET;

CREATE TABLE t2 USING PARQUET AS SELECT 1 AS c2;

```

```bash

./bin/spark-sql -e "SELECT /*+ merge(tmp) */ * FROM t1 JOIN (SELECT c2, java_method('java.lang.Thread', 'sleep', 10000L) FROM t2) tmp ON c1 = c2;"

```

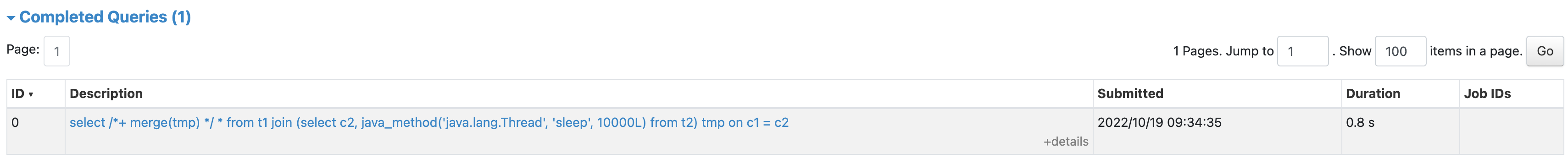

before:

<img width="1712" alt="image" src="https://user-images.githubusercontent.com/12025282/196576790-7e4eeb29-024f-4ac3-bdec-f4e894448b57.png">

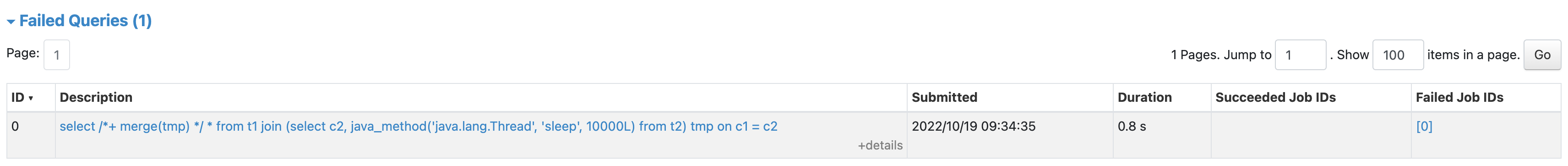

after:

<img width="1709" alt="image" src="https://user-images.githubusercontent.com/12025282/196576674-15d80366-bd42-417b-80bf-eeec0b1ef046.png">

Closes apache#38302 from ulysses-you/sql-end.

Authored-by: ulysses-you <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request? This is a followup of apache#38302 . For events generated by old versions of Spark, which do not have the new `errorMessage` field, we should use the old way to detect query execution status (failed or not). This PR also adds a UI test for the expected behavior. ### Why are the changes needed? backward compatibility ### Does this PR introduce _any_ user-facing change? no ### How was this patch tested? new tests Closes apache#38747 from cloud-fan/ui. Authored-by: Wenchen Fan <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

### What changes were proposed in this pull request? This is a followup of apache#38302 . For events generated by old versions of Spark, which do not have the new `errorMessage` field, we should use the old way to detect query execution status (failed or not). This PR also adds a UI test for the expected behavior. ### Why are the changes needed? backward compatibility ### Does this PR introduce _any_ user-facing change? no ### How was this patch tested? new tests Closes apache#38747 from cloud-fan/ui. Authored-by: Wenchen Fan <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

|

hi @ulysses-you any chance you can take a look at https://issues.apache.org/jira/browse/SPARK-41735? |

What changes were proposed in this pull request?

Use event

SparkListenerSQLExecutionEndto track if the SQL/DataFrame is completion instead of using job status.Why are the changes needed?

The SQL may succeed with some failed jobs. For example, a inner join with one empty side and one large side, the plan would finish and the large side is still running.

Does this PR introduce any user-facing change?

yes, correct the sql status in UI

How was this patch tested?

add test for backward compatibility and manually test

./bin/spark-sql -e "SELECT /*+ merge(tmp) */ * FROM t1 JOIN (SELECT c2, java_method('java.lang.Thread', 'sleep', 10000L) FROM t2) tmp ON c1 = c2;"before:

after: