
[SPARK-38309][CORE] Fix SHS shuffleTotalReads and shuffleTotalBlocks percentile metrics

#35637

Can one of the admins verify this patch?

Just out of curiosity, can you explain again the idea that negative = negative + negative? Why add the same thing to itself? It looks funny, but I'm sure there's a reason it works. It may be worth a comment.

This doesn't add the same number together twice. This refers to the sign (positive or negative) for the composite indices modified in this PR,

Oh disregard, I read the new code as adding the same variable to itself -- they're local vs remote values. I got it

@srowen if @shahidki31 doesn't answer is there anyone else we can ping to verify the changes? Let me know if I can help.

mridulm

left a comment

Can we add a specific unit test to validate that the sign is not flipping ?

```scala
private def getTaskMetrics(seed: Int): TaskMetrics = {
  val random = new Random(seed)
  val randomMax = 1000
  def nextInt(): Int = random.nextInt(randomMax)
```

Note: This can end up becoming zero.

This should have no impact on the validity of the tests here.

I think you might have mentioned this due to the metric value being made negative for tasks that aren't successful. Zero is accounted for here.

After researching whether we could write a lower-level test directly for the index value, I am leaning towards the above test being the appropriate level to test at, but I am open to changing this if you disagree.

Since the existing test was not catching this issue, I want to make sure that we are testing for this behavior.

mridulm

left a comment

Looks good to me.

Any other thoughts @srowen ?

+CC @shahidki31

dongjoon-hyun

left a comment

+1, LGTM, too.

Backport to 3.2/3.1 I presume?

@robreeves The PR #26508 was originally intended to compute summary metrics for only successful tasks. That is why we made all the non-successful tasks' metrics negative initially. So do you see ShuffleTotalReads and ShuffleTotalBlocks negative even for successful tasks?

@shahidki31 The problem for ShuffleTotalReads and ShuffleTotalBlocks is that the index values are never negative because

Ok. We will be converting to the actual shuffle metrics value here: `spark/core/src/main/scala/org/apache/spark/status/storeTypes.scala`, lines 270 to 276 in 7715538.

But this method is used only for computing the summary metrics: `spark/core/src/main/scala/org/apache/spark/status/storeTypes.scala`, lines 344 to 351 in 7715538.

LGTM.

…ks` percentile metrics

Closes #35637 from robreeves/SPARK-38309.

Authored-by: Rob Reeves <[email protected]>
Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com>
(cherry picked from commit 0ad7677)
Signed-off-by: Mridul Muralidharan <mridulatgmail.com>


Merged to master/branch-3.2/branch-3.1. Thanks for working on this, @robreeves!

…ks` percentile metrics

Closes apache#35637 from robreeves/SPARK-38309.

Authored-by: Rob Reeves <[email protected]>
Signed-off-by: Mridul Muralidharan <mridul<at>gmail.com>
(cherry picked from commit 0ad7677)
Signed-off-by: Mridul Muralidharan <mridulatgmail.com>
(cherry picked from commit e067b12)
Signed-off-by: Dongjoon Hyun <[email protected]>

What changes were proposed in this pull request?

Background

In PR #26508 (SPARK-26260) the SHS stage metric percentiles were updated to only include successful tasks when using disk storage. It did this by making the values for each metric negative when the task is not in a successful state. This approach was chosen to avoid breaking changes to disk storage. See this comment for context.

To get the percentiles, it reads the metric values, starting at 0, in ascending order. This filters out all tasks that are not successful because the values are less than 0. To get the percentile values it scales the percentiles to the list index of successful tasks. For example if there are 200 tasks and you want percentiles [0, 25, 50, 75, 100] the lookup indexes in the task collection are [0, 50, 100, 150, 199].
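The index scaling described above can be sketched in Scala as follows. This is a minimal illustration, not the SHS implementation: `PercentileLookup`, `lookupIndices`, and `successfulValues` are hypothetical names, and the rounding rule (truncate, then clamp to the last index) is an assumption chosen because it reproduces the example numbers.

```scala
object PercentileLookup {
  /** Map each quantile in [0, 1] to an index into a sorted collection of `count` values.
    * Assumed rule: truncate q * count, then clamp to the last valid index. */
  def lookupIndices(quantiles: Seq[Double], count: Long): Seq[Long] = {
    require(count > 0, "need at least one successful task")
    quantiles.map(q => math.min((q * count).toLong, count - 1))
  }

  /** Keep only values >= 0, in ascending order.
    * Negative values mark tasks that are not successful and are skipped. */
  def successfulValues(indexValues: Seq[Long]): Seq[Long] =
    indexValues.filter(_ >= 0).sorted
}
```

With 200 successful tasks and quantiles 0, 0.25, 0.5, 0.75, 1.0, `lookupIndices` returns 0, 50, 100, 150, 199, matching the example above.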

Issue

For metrics 1) shuffle total reads and 2) shuffle total blocks, PR #26508 incorrectly makes the metric indices positive. This means tasks that are not successful are included in the percentile calculations. The percentile lookup index calculation is still based on the number of successful tasks, so the wrong task metric is returned for a given percentile. This was not caught because the unit test only verified values for one metric, `executorRunTime`.

Fix

The index values for `SHUFFLE_TOTAL_READS` and `SHUFFLE_TOTAL_BLOCKS` should not convert back to positive metric values for tasks that are not successful. I believe this was done because the metric values are summed from two other metrics. Using the raw values still creates the desired outcome: `negative + negative = negative` and `positive + positive = positive`. There is no case where one metric will be negative and one will be positive. I also verified that these two metrics are only used in the percentile calculations where only successful tasks are used.

Why are the changes needed?

This change is required so that the SHS stage percentile metrics for shuffle read bytes and shuffle total blocks are correct.
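The sign argument from the Fix section can be illustrated with a small Scala sketch. Everything here is hypothetical and for illustration only: `encode`, `decode`, `fixedIndex`, and `buggyIndex` are invented names, and the offset-by-one encoding (so that zero also becomes negative) is an assumption, not a statement of how the SHS stores values.

```scala
object ShuffleIndexSign {
  /** Assumed encoding: tasks that are not successful store -(value) - 1,
    * so that a metric value of zero also becomes negative. */
  def encode(value: Long, successful: Boolean): Long =
    if (successful) value else -value - 1L

  /** Recover the original value from the assumed encoding. */
  def decode(stored: Long): Long =
    if (stored < 0) -(stored + 1L) else stored

  /** Sum the raw stored values: two negatives stay negative,
    * two non-negatives stay non-negative. */
  def fixedIndex(storedLocal: Long, storedRemote: Long): Long =
    storedLocal + storedRemote

  /** Decode before summing: the result is never negative,
    * so tasks that are not successful are no longer filtered out. */
  def buggyIndex(storedLocal: Long, storedRemote: Long): Long =
    decode(storedLocal) + decode(storedRemote)
}
```

For a failed task with local and remote values 0 and 10, the stored values under this assumed encoding are -1 and -11. `fixedIndex` gives -12, which a scan starting at 0 skips, while `buggyIndex` gives 10, which the scan would include.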

Does this PR introduce any user-facing change?

Yes. The user will see the correct percentile values for the stage summary shuffle read bytes.

How was this patch tested?

I updated the unit test to verify the percentile values for every task metric. I also modified the unit test to have unique values for every metric. Previously the test had the same metrics for every field. This would not catch bugs like the wrong field being read by accident.
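To show why unique values per metric matter, here is a small Scala sketch. It is not the actual test code: `Metrics`, `sameValues`, `uniqueValues`, and `wrongFieldRead` are hypothetical names, and the fixed offset used to force distinct values is an assumption made only to keep the example deterministic.

```scala
import scala.util.Random

object UniqueMetricValues {
  final case class Metrics(executorRunTime: Long, shuffleReadBytes: Long)

  /** Old style: every field carries the same value, so fields are indistinguishable. */
  def sameValues(seed: Int): Metrics = {
    val v = new Random(seed).nextInt(1000).toLong
    Metrics(v, v)
  }

  /** New style: each field gets its own value; the 1000 offset guarantees they differ. */
  def uniqueValues(seed: Int): Metrics = {
    val random = new Random(seed)
    Metrics(random.nextInt(1000).toLong, 1000L + random.nextInt(1000).toLong)
  }

  /** A deliberately wrong accessor that reads executorRunTime instead of shuffleReadBytes. */
  def wrongFieldRead(m: Metrics): Long = m.executorRunTime
}
```

With `sameValues`, a check of `wrongFieldRead` against `shuffleReadBytes` passes even though the wrong field is read. With `uniqueValues`, the same check fails, which is the kind of mistake the updated test is meant to surface.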

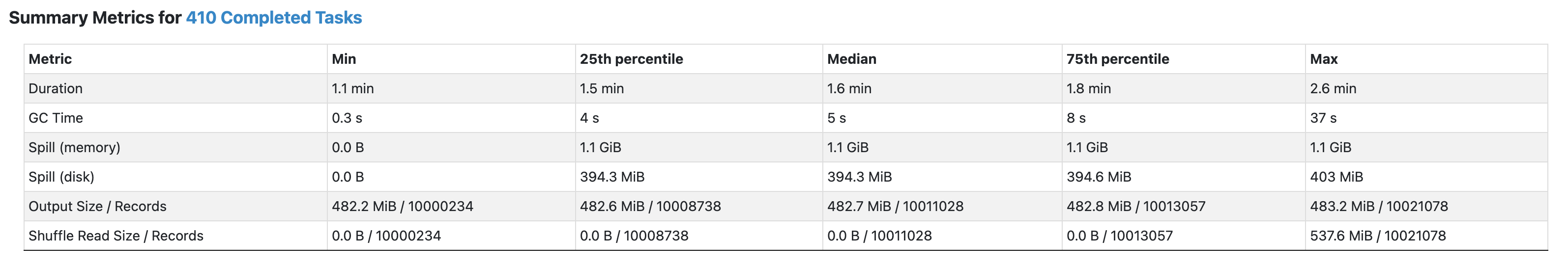

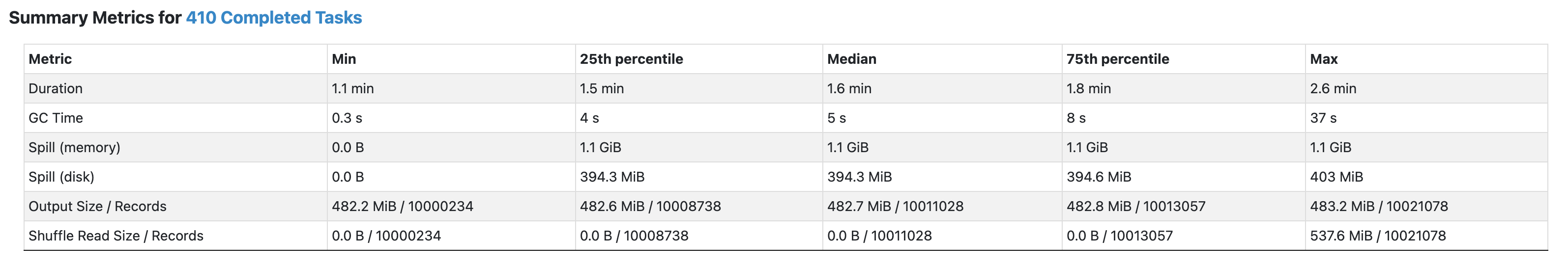

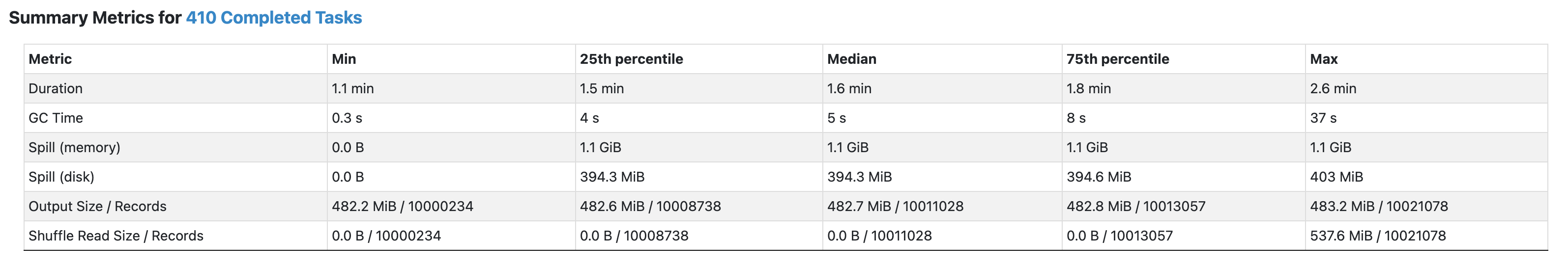

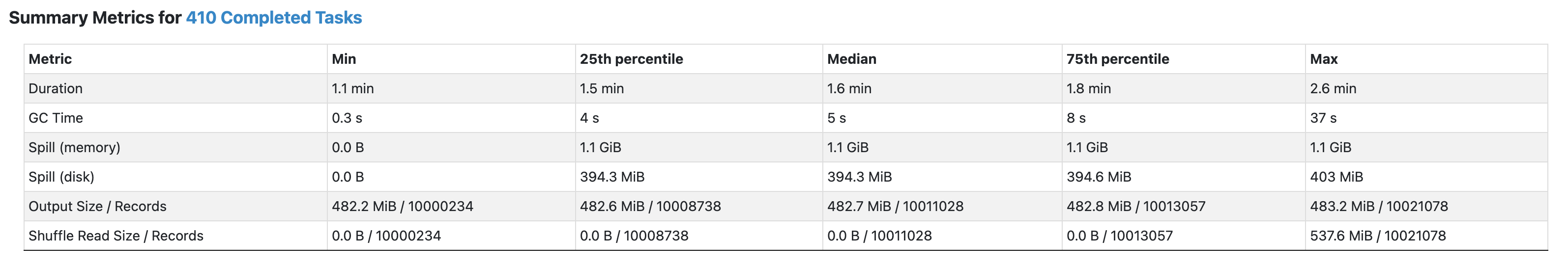

I manually validated the fix in the UI.

BEFORE

AFTER

I manually validated the fix in the task summary API (`/api/v1/applications/application_123/1/stages/14/0/taskSummary\?quantiles\=0,0.25,0.5,0.75,1.0`). See `shuffleReadMetrics.readBytes` and `shuffleReadMetrics.totalBlocksFetched`.

Before:

```json
{
  "quantiles":[ 0.0, 0.25, 0.5, 0.75, 1.0 ],
  "shuffleReadMetrics":{
    "readBytes":[ -2.0, -2.0, -2.0, -2.0, 5.63718681E8 ],
    "totalBlocksFetched":[ -2.0, -2.0, -2.0, -2.0, 2.0 ],
    ...
  },
  ...
}
```

After:

```json
{
  "quantiles":[ 0.0, 0.25, 0.5, 0.75, 1.0 ],
  "shuffleReadMetrics":{
    "readBytes":[ 5.62865286E8, 5.63779421E8, 5.63941681E8, 5.64327925E8, 5.7674183E8 ],
    "totalBlocksFetched":[ 2.0, 2.0, 2.0, 2.0, 2.0 ],
    ...
  }
  ...
}
```