-

Notifications

You must be signed in to change notification settings - Fork 28.9k

[SPARK-29292][SQL][ML] Update rest of default modules (Hive, ML, etc) for Scala 2.13 compilation #29111

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

srowen

left a comment

srowen

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

| import java.util.List; | ||

|

|

||

| import scala.collection.mutable.WrappedArray; | ||

| import scala.collection.mutable.Seq; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

WrappedArray is gone in 2.13; this should be an equivalent superclass

| val closest = data.map (p => (closestPoint(p, kPoints), (p, 1))) | ||

|

|

||

| val pointStats = closest.reduceByKey{case ((p1, c1), (p2, c2)) => (p1 + p2, c1 + c2)} | ||

| val pointStats = closest.reduceByKey(mergeResults) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Not quite sure why, but a few calls to reduceByKey didn't like the existing syntax in 2.13. I had to break out a typed method. missing parameter type for expanded function

| * Abstract class for estimators that fit models to data. | ||

| */ | ||

| abstract class Estimator[M <: Model[M]] extends PipelineStage { | ||

| abstract class Estimator[M <: Model[M] : ClassTag] extends PipelineStage { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't quite get why 2.13 thinks this needs a ClassTag (and thus some subclasses), but I'm just going with it. Will see if MiMa is OK with it

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Unfortunately, MiMi seems to complain on this and a few others like this.

[error] * method this()Unit in class org.apache.spark.ml.Estimator does not have a correspondent in current version

4232

[error] filter with: ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.ml.Estimator.this")

4233

[error] * method this()Unit in class org.apache.spark.ml.Predictor does not have a correspondent in current version

4234

[error] filter with: ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.ml.Predictor.this")

4235

[error] * method this()Unit in class org.apache.spark.ml.classification.ProbabilisticClassifier does not have a correspondent in current version

4236

[error] filter with: ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.ml.classification.ProbabilisticClassifier.this")

4237

[error] * method this()Unit in class org.apache.spark.ml.classification.Classifier does not have a correspondent in current version

4238

[error] filter with: ProblemFilters.exclude[DirectMissingMethodProblem]("org.apache.spark.ml.classification.Classifier.this")

|

Test build #125859 has finished for PR 29111 at commit

|

|

Yep, as I feared: Adding a ClassTag means it implicitly has a new parameter in the bytecode and that breaks MiMa. Hm. I'll have to think about this more |

|

Test build #125864 has finished for PR 29111 at commit

|

| } | ||

|

|

||

| private def mergeResults(a: (Vector[Double], Int), | ||

| b: (Vector[Double], Int)): (Vector[Double], Int) = { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

nit. Indentation?

| */ | ||

| @Since("2.0.0") | ||

| def fit(dataset: Dataset[_], paramMaps: Array[ParamMap]): Seq[M] = { | ||

| def fit(dataset: Dataset[_], paramMaps: Seq[ParamMap]): Seq[M] = { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

cc @mengxr and @gatorsmile

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah, this fixes the weird compile error (Arrays + generic types are stricter in Scala 2.13) though I don't directly see what it has to do with type M. Still, this is an API change I think MiMa will fail and I think I need another workaround for that. This is an obscure method that isn't even called by tests, AFAICT, so not sure it even has coverage.

|

I think I understand the last test failures, will fix too. |

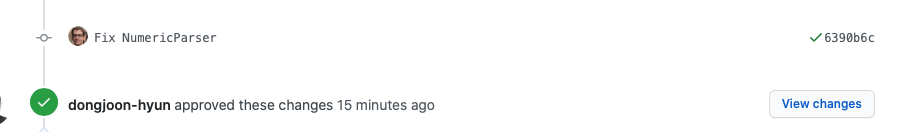

dongjoon-hyun

left a comment

dongjoon-hyun

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

+1, LGTM. I also verified this manually with Scala 2.13. This works as expected.

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for Spark Project Parent POM 3.1.0-SNAPSHOT:

[INFO]

[INFO] Spark Project Parent POM ........................... SUCCESS [ 2.139 s]

[INFO] Spark Project Tags ................................. SUCCESS [ 4.475 s]

[INFO] Spark Project Sketch ............................... SUCCESS [ 3.514 s]

[INFO] Spark Project Local DB ............................. SUCCESS [ 0.901 s]

[INFO] Spark Project Networking ........................... SUCCESS [ 2.199 s]

[INFO] Spark Project Shuffle Streaming Service ............ SUCCESS [ 0.820 s]

[INFO] Spark Project Unsafe ............................... SUCCESS [ 4.629 s]

[INFO] Spark Project Launcher ............................. SUCCESS [ 1.263 s]

[INFO] Spark Project Core ................................. SUCCESS [01:16 min]

[INFO] Spark Project ML Local Library ..................... SUCCESS [ 28.387 s]

[INFO] Spark Project GraphX ............................... SUCCESS [ 19.233 s]

[INFO] Spark Project Streaming ............................ SUCCESS [ 32.652 s]

[INFO] Spark Project Catalyst ............................. SUCCESS [01:24 min]

[INFO] Spark Project SQL .................................. SUCCESS [02:08 min]

[INFO] Spark Project ML Library ........................... SUCCESS [01:41 min]

[INFO] Spark Project Tools ................................ SUCCESS [ 6.843 s]

[INFO] Spark Project Hive ................................. SUCCESS [01:03 min]

[INFO] Spark Project REPL ................................. FAILURE [ 4.346 s]

[INFO] Spark Project Assembly ............................. SKIPPED

[INFO] Kafka 0.10+ Token Provider for Streaming ........... SKIPPED

[INFO] Spark Integration for Kafka 0.10 ................... SKIPPED

[INFO] Kafka 0.10+ Source for Structured Streaming ........ SKIPPED

[INFO] Spark Project Examples ............................. SKIPPED

[INFO] Spark Integration for Kafka 0.10 Assembly .......... SKIPPED

[INFO] Spark Avro ......................................... SKIPPED

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 09:26 min

[INFO] Finished at: 2020-07-15T13:22:34-07:00

[INFO] ------------------------------------------------------------------------

As we discussed already, we can revisit the APIs later as a separate step.

Thank you, @srowen . Merged to master. This also passed GitHub Action.

|

I can confirm that modules after REPL also compile (I commented out REPL locally). That's a good step, and I can look at getting the secondary modules compiling next. For anyone watching, I am entirely open to questions about the approach here. The changes are actually quite superficial but broad. I do want to keep an eye on issues like perf regressions - I do not expect them in 2.12 but may be an issue in 2.13 builds. |

|

Oh erm, we didn't get a passing test after my last commit - I did double-check it passes 2.12 tests after the last change, but, should we be watching out for that or is this all down to github actions now? |

|

For a record, this was merged because all tests passed in GitHub Action and I verified the Scala 2.13 compilation (#29111 (review)). |

|

For now, GitHub Action finished in two hours if there is no congestion. |

|

Test FAILed. |

…entImpl.listTablesByType` ### What changes were proposed in this pull request? Explicitly convert `tableNames` to `Seq` in `HiveClientImpl.listTablesByType` as it was done by c28a6fa#diff-6fd847124f8eae45ba2de1cf7d6296feR769 ### Why are the changes needed? See this PR #29111, to compile by Scala 2.13. The changes were discarded by #29363 accidentally. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Compiling by Scala 2.13 Closes #29379 from MaxGekk/fix-listTablesByType-for-views-followup. Authored-by: Max Gekk <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

…entImpl.listTablesByType` ### What changes were proposed in this pull request? Explicitly convert `tableNames` to `Seq` in `HiveClientImpl.listTablesByType` as it was done by apache/spark@c28a6fa#diff-6fd847124f8eae45ba2de1cf7d6296feR769 ### Why are the changes needed? See this PR apache/spark#29111, to compile by Scala 2.13. The changes were discarded by apache/spark#29363 accidentally. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Compiling by Scala 2.13 Closes #29379 from MaxGekk/fix-listTablesByType-for-views-followup. Authored-by: Max Gekk <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

What changes were proposed in this pull request?

Same as #29078 and #28971 . This makes the rest of the default modules (i.e. those you get without specifying

-Pyarnetc) compile under Scala 2.13. It does not close the JIRA, as a result. this also of course does not demonstrate that tests pass yet in 2.13.Note, this does not fix the

replmodule; that's separate.Why are the changes needed?

Eventually, we need to support a Scala 2.13 build, perhaps in Spark 3.1.

Does this PR introduce any user-facing change?

No

How was this patch tested?

Existing tests. (2.13 was not tested; this is about getting it to compile without breaking 2.12)