[SPARK-26909][FOLLOWUP][SQL] use unsafeRow.hashCode() as hash value in HashAggregate #23821


|

Generated codes: |

|

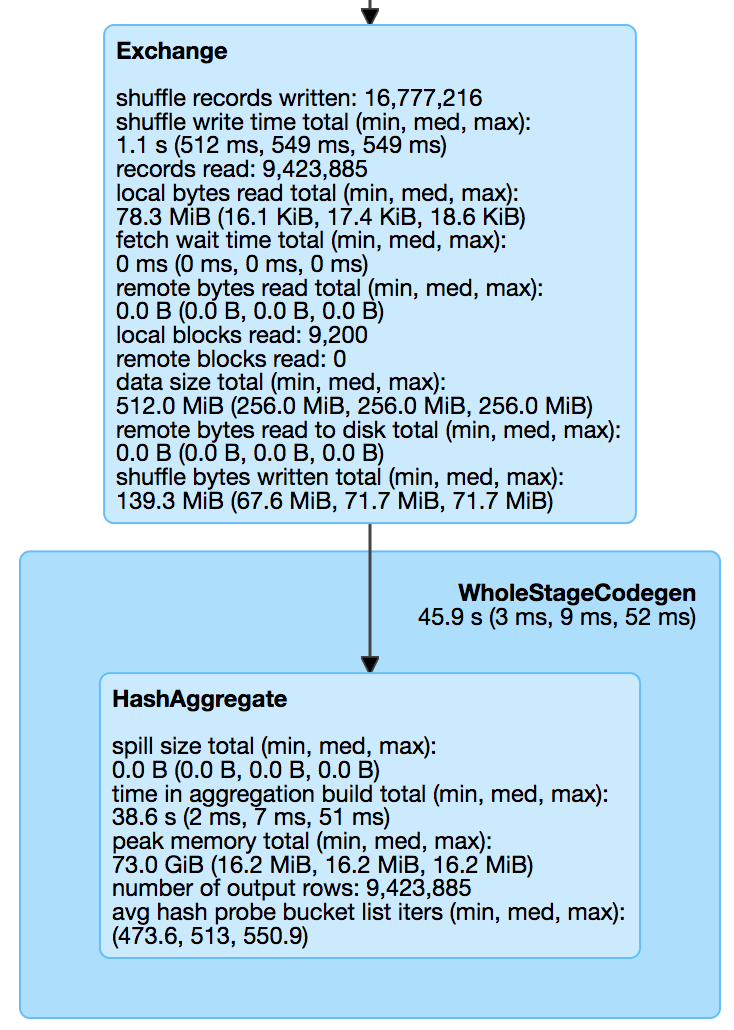

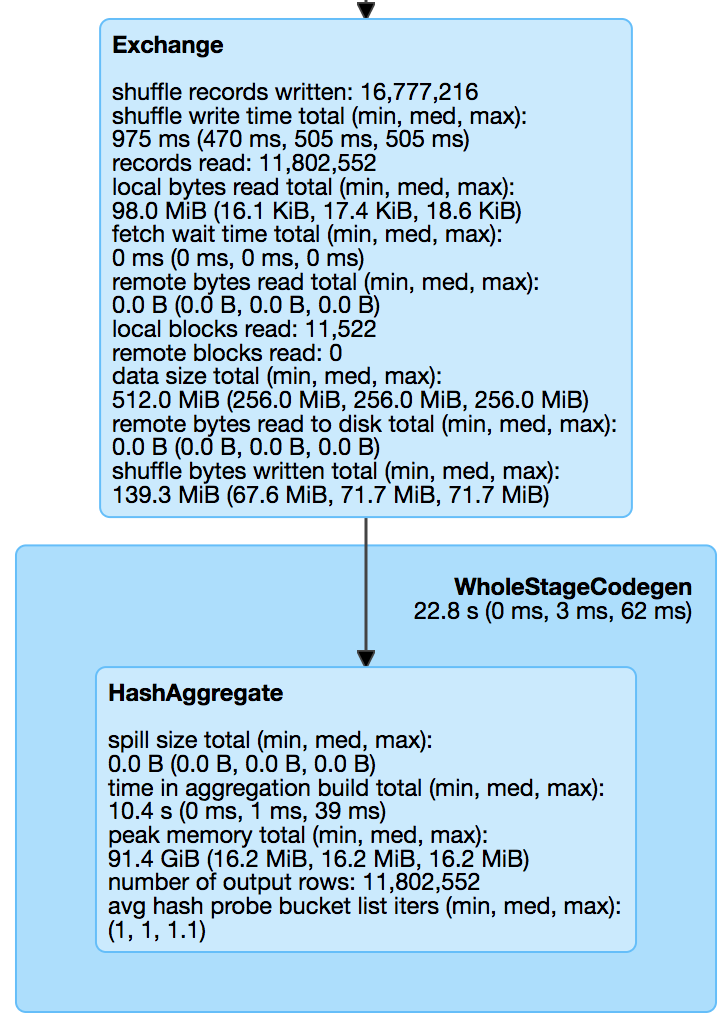

Tests: Before: |

    |// generate grouping key
    |${unsafeRowKeyCode.code}
    |${hashEval.code}
    |int $unsafeRowHash = ${unsafeRowKeyCode.value}.hashCode();


nit: unsafeKeyHash?

maropu left a comment


Aha, this is a smarter way...

Test build #102455 has finished for PR 23821 at commit

retest this please

Test build #102464 has finished for PR 23821 at commit

retest this please

@cloud-fan, kindly help review.

Did you verify that it avoids the hash conflict with the problematic query?

I used another query to verify this issue. See: #23821 (comment)

Thanks, merging to master!

What changes were proposed in this pull request?

This is a followup PR for #21149.

The new approach uses unsafeRow.hashCode() as the hash value in HashAggregate.

The unsafe row includes extra layout data such as the null bit set, so its hash should differ from the shuffle hash, and a special seed is no longer needed.
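To illustrate why the two hashes should differ, here is a minimal, self-contained Java sketch. It is not Spark code: `HashReuseDemo`, `hashWords`, and `distinctBuckets` are hypothetical names, the Murmur3-style mixer is written out only for illustration, and the "row" layout (a leading zero word standing in for the null bit set) is a simplifying assumption. It counts how many hash-table buckets are used by the keys that a shuffle sent to one partition, first when the table reuses the shuffle hash and then when it hashes the row layout instead.

```java
import java.util.HashSet;
import java.util.Set;

class HashReuseDemo {
    // Murmur3-style mixing steps, written out here for illustration only.
    static int mixK1(int k1) {
        k1 *= 0xcc9e2d51;
        k1 = Integer.rotateLeft(k1, 15);
        k1 *= 0x1b873593;
        return k1;
    }

    static int mixH1(int h1, int k1) {
        h1 ^= k1;
        h1 = Integer.rotateLeft(h1, 13);
        return h1 * 5 + 0xe6546b64;
    }

    static int fmix(int h1, int lengthInBytes) {
        h1 ^= lengthInBytes;
        h1 ^= h1 >>> 16;
        h1 *= 0x85ebca6b;
        h1 ^= h1 >>> 13;
        h1 *= 0xc2b2ae35;
        h1 ^= h1 >>> 16;
        return h1;
    }

    // Hash a sequence of 32-bit words with the given seed.
    static int hashWords(int[] words, int seed) {
        int h = seed;
        for (int w : words) {
            h = mixH1(h, mixK1(w));
        }
        return fmix(h, words.length * 4);
    }

    // Hypothetical shuffle hash: hashes only the key value.
    static int shuffleHash(int key) {
        return hashWords(new int[] {key}, 42);
    }

    // Hypothetical row hash: same function and seed, but the input also
    // contains a leading word standing in for the null bit set (assumption).
    static int rowHash(int key) {
        return hashWords(new int[] {0, key}, 42);
    }

    // Count the distinct buckets (out of 16) used by the keys that the
    // shuffle routed to partition 0 of 8.
    static int distinctBuckets(boolean reuseShuffleHash) {
        Set<Integer> buckets = new HashSet<>();
        for (int key = 0; key < 10_000; key++) {
            int sh = shuffleHash(key);
            if ((sh & 7) != 0) {
                continue; // key belongs to another partition
            }
            int tableHash = reuseShuffleHash ? sh : rowHash(key);
            buckets.add(tableHash & 15);
        }
        return buckets.size();
    }

    public static void main(String[] args) {
        System.out.println("reusing shuffle hash: " + distinctBuckets(true) + " buckets used");
        System.out.println("hashing the row:      " + distinctBuckets(false) + " buckets used");
    }
}
```

When the table reuses the shuffle hash, every key in the partition already shares its low three hash bits, so at most two of the sixteen buckets can ever be occupied. Hashing a differently laid-out input breaks that correlation without needing a separate seed.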

How was this patch tested?

Unit tests.