[SPARK-26650][CORE]Yarn Client throws 'ClassNotFoundException: org.apache.hadoop.hbase.HBaseConfiguration' #23605


Unlike Hadoop and Hive, Spark is not built with the HBase libs. HBase integration only works when end users link the HBase libraries or include them in their app jar.

So the message doesn't look correct, though I think "Fail to invoke HBaseConfiguration" is more misleading.

Actually, the root issue is that HBaseDelegationTokenProvider is activated even when end users don't use HBase in their apps, which #23499 is trying to avoid with a service loader.


Thanks, I will check the PR.


Test build #101491 has finished for PR 23605 at commit


Also, please describe in the JIRA and/or the PR how you hit this issue, the steps to reproduce it, your analysis of the problem, and how the PR fixes it.


@HyukjinKwon The issue is easy to reproduce on the latest code when no HBase-related configs are given; this did not happen previously. Thanks.


It's easy to reproduce, but I would show what you tried rather than making people try it themselves, to avoid duplicating effort and time. Please fill in the details.

…adoop.hbase.HBaseConfiguration'


Thanks @HyukjinKwon. Updated.

core/src/main/scala/org/apache/spark/deploy/security/HBaseDelegationTokenProvider.scala


Test build #101508 has finished for PR 23605 at commit


What changes were proposed in this pull request?

When an application is launched in YARN client mode without any HBase-related libraries on the classpath, Spark throws a misleading exception.

Added a separate warning message for "ClassNotFoundException".
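For illustration only, the idea can be sketched roughly as follows. This is not the code from this patch: it is written in plain Java rather than Spark's Scala, and the class name `HBaseClassProbe`, the method `probe`, and the message wording are all hypothetical. It only shows how a missing class can be reported separately from other failures when a class is loaded reflectively.

```java
// Hypothetical sketch, NOT the actual Spark change: names and messages are assumptions.
final class HBaseClassProbe {
    // The class named in the PR title; it is only present if the user supplies HBase jars.
    static final String HBASE_CONF_CLASS = "org.apache.hadoop.hbase.HBaseConfiguration";

    /** Tries to load className reflectively and returns a log-style message. */
    static String probe(String className) {
        try {
            // initialize=false: only check that the class can be found and linked.
            Class.forName(className, false, HBaseClassProbe.class.getClassLoader());
            return "Loaded " + className;
        } catch (ClassNotFoundException e) {
            // Missing library: report it plainly instead of a generic failure.
            return "WARN: " + className
                + " not found on the classpath; skipping HBase delegation tokens";
        } catch (LinkageError e) {
            // The class was found but could not be linked (e.g. a missing dependency).
            return "WARN: failed to load " + className + ": " + e;
        }
    }

    public static void main(String[] args) {
        System.out.println(probe(HBASE_CONF_CLASS));
        System.out.println(probe("java.lang.String"));
    }
}
```

Whether a missing class deserves a warning at all is the point raised in the review above, since Spark does not ship the HBase libraries.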

How was this patch tested?

Test steps:

bin/spark-shell --master yarn

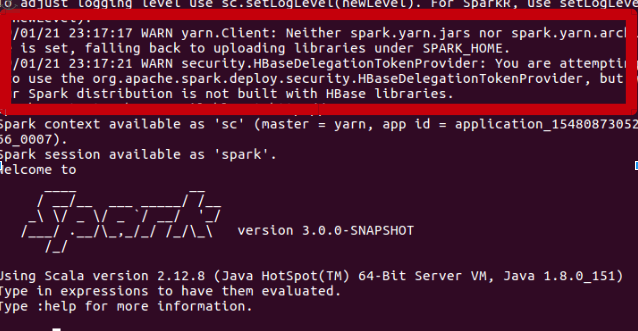

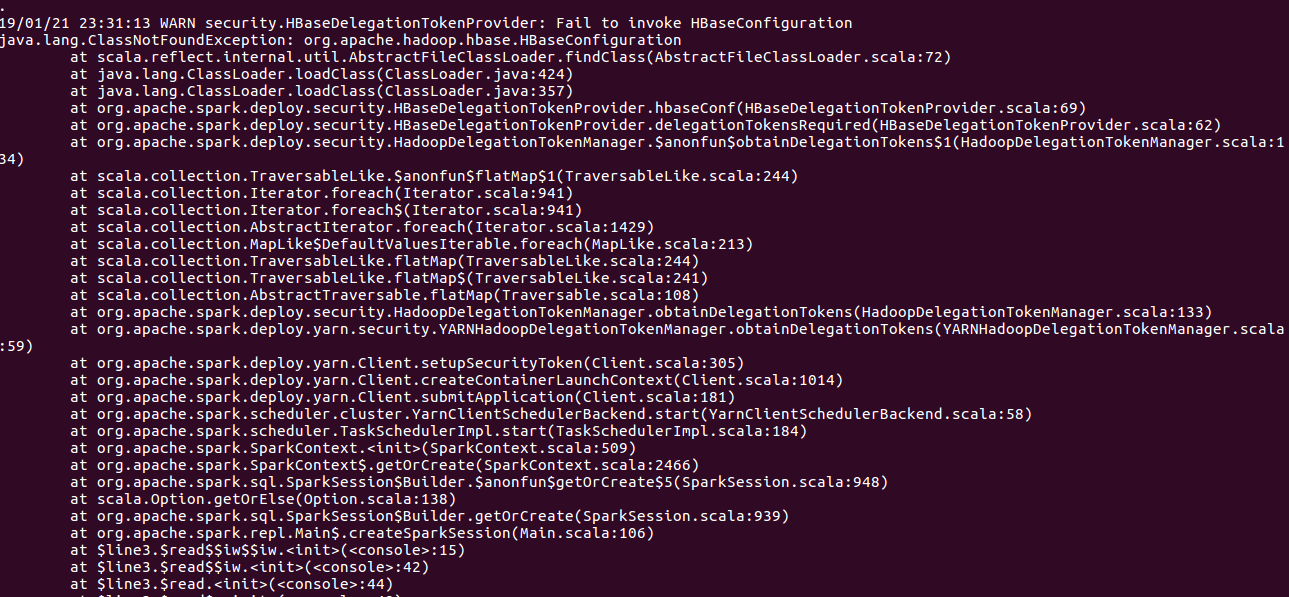

Before fix:

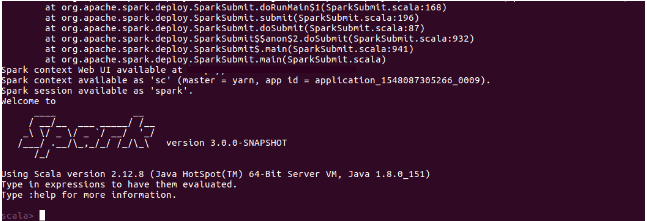

After fix: