
[SPARK-25115] [Core] Eliminate extra memory copy done when a ByteBuf is used that is backed by > 1 ByteBuffer. #22105


| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -140,8 +140,24 @@ private int copyByteBuf(ByteBuf buf, WritableByteChannel target) throws IOExcept | |

| // SPARK-24578: cap the sub-region's size of returned nio buffer to improve the performance | ||

| // for the case that the passed-in buffer has too many components. | ||

| int length = Math.min(buf.readableBytes(), NIO_BUFFER_LIMIT); | ||

| ByteBuffer buffer = buf.nioBuffer(buf.readerIndex(), length); | ||

| int written = target.write(buffer); | ||

| // If the ByteBuf holds more then one ByteBuffer we should better call nioBuffers(...) | ||

| // to eliminate extra memory copies. | ||

| int written = 0; | ||

| if (buf.nioBufferCount() == 1) { | ||

| ByteBuffer buffer = buf.nioBuffer(buf.readerIndex(), length); | ||

| written = target.write(buffer); | ||

| } else { | ||

| ByteBuffer[] buffers = buf.nioBuffers(buf.readerIndex(), length); | ||

| for (ByteBuffer buffer: buffers) { | ||

| int remaining = buffer.remaining(); | ||

| int w = target.write(buffer); | ||

| written += w; | ||

|

Can we guarantee

We are using

||

| if (w < remaining) { | ||

| // Could not write all, we need to break now. | ||

| break; | ||

| } | ||

| } | ||

| } | ||

| buf.skipBytes(written); | ||

| return written; | ||

| } | ||

|

|

||
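The loop in the diff above can be tried without Netty. The following is a minimal sketch, assuming plain `java.nio` buffers stand in for the array returned by `buf.nioBuffers(...)`; the class name `MultiBufferWriteSketch` and the method `writeAll` are hypothetical and not part of the patch. It writes each buffer in turn and stops at the first partial write, so the returned count always reflects a contiguous prefix of the data.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Channels;
import java.nio.channels.WritableByteChannel;

public class MultiBufferWriteSketch {

  /**
   * Writes the buffers in order and returns the total number of bytes written.
   * Stops as soon as one buffer is only partially written, mirroring the
   * break in the patch.
   */
  public static int writeAll(ByteBuffer[] buffers, WritableByteChannel target)
      throws IOException {
    int written = 0;
    for (ByteBuffer buffer : buffers) {
      int remaining = buffer.remaining();
      int w = target.write(buffer);
      written += w;
      if (w < remaining) {
        // The channel could not take the whole buffer; later buffers must wait.
        break;
      }
    }
    return written;
  }

  public static void main(String[] args) throws IOException {
    // An in-memory sink stands in for a socket channel (assumption for the demo).
    ByteArrayOutputStream sink = new ByteArrayOutputStream();
    WritableByteChannel channel = Channels.newChannel(sink);
    ByteBuffer[] parts = {
      ByteBuffer.wrap(new byte[] {1, 2, 3}),
      ByteBuffer.wrap(new byte[] {4, 5})
    };
    System.out.println(writeAll(parts, channel)); // prints 5
  }
}
```

With a non-blocking socket channel the early `break` matters: a short write on one buffer means the socket cannot accept more right now, so attempting the remaining buffers would only add wasted calls.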


Is this limit needed now at all? I think your change was suggested as a future enhancement when reviewing SPARK-24578 but I never noticed a PR to implement it until yours...

@attilapiros


@vanzin This is to avoid memory copy when writing a large ByteBuffer. You merged this actually: #12083


My understanding is that the code here is avoiding the copy by using `nioBuffers()` already, so that limit shouldn't be needed, right? Or maybe I'm missing something.

@vanzin The ByteBuffer here may be just a large ByteBuffer. See my comment here: #12083 (comment)


I re-read that discussion and you're right. I know this has been checked in, but the comment is now stale; the limit is there because of the behavior of the JRE code, not because of the composite buffer. It would be good to update it.
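To illustrate the point that the cap applies even to a single large buffer, here is a minimal sketch of capping each write to a fixed slice size. It assumes the 256KB value mentioned in this thread for `NIO_BUFFER_LIMIT`; the class `CappedWriteSketch` and the method `writeCapped` are hypothetical names for illustration, not Spark code. The idea is that each call hands the channel at most `NIO_BUFFER_LIMIT` bytes, so whatever the JRE does internally per call is bounded by that size rather than by the size of the whole buffer.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Channels;
import java.nio.channels.WritableByteChannel;

public class CappedWriteSketch {
  // Value taken from the discussion (256KB); treat it as an assumption here.
  static final int NIO_BUFFER_LIMIT = 256 * 1024;

  /** Writes at most NIO_BUFFER_LIMIT bytes from src and advances src by the amount written. */
  public static int writeCapped(ByteBuffer src, WritableByteChannel target)
      throws IOException {
    int length = Math.min(src.remaining(), NIO_BUFFER_LIMIT);
    // duplicate() shares the content but has an independent position and limit.
    ByteBuffer slice = src.duplicate();
    slice.limit(slice.position() + length);
    int written = target.write(slice);
    src.position(src.position() + written);
    return written;
  }

  public static void main(String[] args) throws IOException {
    // An in-memory sink stands in for a socket channel (assumption for the demo).
    ByteArrayOutputStream sink = new ByteArrayOutputStream();
    WritableByteChannel channel = Channels.newChannel(sink);
    ByteBuffer large = ByteBuffer.allocate(1024 * 1024); // one large heap buffer
    int calls = 0;
    while (large.hasRemaining()) {
      writeCapped(large, channel);
      calls++;
    }
    System.out.println(calls + " calls, " + sink.size() + " bytes"); // prints "4 calls, 1048576 bytes"
  }
}
```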


Out of curiosity, how did we come up with 256KB for NIO_BUFFER_LIMIT?

In Hadoop, they are using 8KB.

For most OSes, in the `write(ByteBuffer[])` API in `sun.nio.ch.IOUtil`, it goes one buffer at a time, and gets a temporary direct buffer from the `BufferCache`, up to a limit of `IOUtil#IOV_MAX` which is 1KB.

That was only briefly discussed in the PR that Ryan linked above... the original code actually used 512k.

I think Hadoop's limit is a little low, but maybe 256k is a bit high. IIRC socket buffers are 32k by default on Linux, so it seems unlikely you'd be able to write 256k in one call (ignoring what IOUtil does internally). But maybe in practice it works ok.

If anyone has the time to test this out that would be great.


Maybe @normanmaurer has some insight?


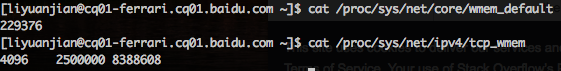

After reading the context in #12083 and this discussion, I want to suggest one way that writing 256k in one call can work in practice. In our scenario, users change `/proc/sys/net/core/wmem_default` based on their online behavior; generally we'll set this value larger than `wmem_default`.

So maybe 256k for NIO_BUFFER_LIMIT is OK here? We just need to add more comments noting which parameters are related to this value.