-

Notifications

You must be signed in to change notification settings - Fork 28.9k

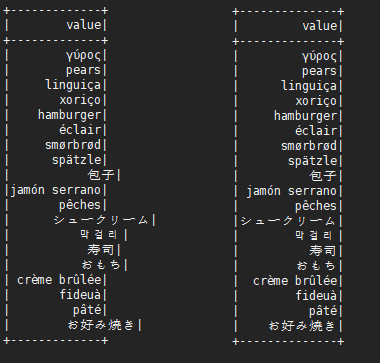

[SPARK-25108][SQL] Fix the show method to display the wide character alignment problem #22048

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

before: +---+---------------------------+-------------+ |id |中国 |s2 | +---+---------------------------+-------------+ |1 |ab |[a] | |2 |null |[中国, abc] | |3 |ab1 |[hello world]| |4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] | |5 |中国(你好)a |[“中(国), 312] | |6 |中国山(东)服务区 |[“中(国)] | |7 |中国山东服务区 |[中(国)] | |8 | |[中国] | +---+---------------------------+-------------+ after: +---+--------------------------+----------------+ | id| 中国| s2| +---+--------------------------+----------------+ | 1| ab| [a]| | 2| null| [中国, abc]| | 3| ab1| [hello world]| | 4|か行 きゃ(kya) きゅ(kyu...| [“中国]| | 5| 中国(你好)a|[“中(国), 312]| | 6| 中国山(东)服务区| [“中(国)]| | 7| 中国山东服务区| [中(国)]| | 8| | [中国]| +---+--------------------------+----------------+

|

Since this change does not look minor, could you please create a JIRA entry? |

|

I created a PR to solve the same problem. |

|

After testing, it is found that regular expressions are changed to the following. @kiszk |

|

@xuejianbest Could you please create a JIRA entry and add test cases to this PR? |

|

Below is the display effect I tested on Xshell5. |

|

Thank you for creating a JIRA entry and for putting the result. The test case is not available yet. |

| // We set a minimum column width at '3' | ||

| val minimumColWidth = 3 | ||

|

|

||

| val regex = """[^\x00-\u2e39]""".r |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This could use a comment and slightly better name for the variable? I also wonder if a regex is a little bit slow for scanning every character.

However it's not clear this definition is accurate enough. According to things like https://stackoverflow.com/questions/13505075/analyzing-full-width-or-half-width-character-in-java we're really looking for the concept of "fullwidth" East Asian characters. The answer there provides a somewhat more precise definition, though others say you need something like icu4j for a proper definition.

Maybe at least adopt the alternative proposed in the SO answer? It's not necessary to be perfect here, as it's a cosmetic issue.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I agree with @srowen's comment. To make it clear, could you please add tests for more characters?

|

Ping @xuejianbest |

Modify regular expressions to make them more precise. Modify variable names and add comment.

|

I looked at all the 0x0000-0xFFFF characters (unicode) and showed them under Xshell, then found all the full width characters. Get the latest regular expression. A new version is submitted, and the variable name is changed and an annotation is added. See below. |

|

@xuejianbest thank you for your update. Would it be possible to commit test cases, too? |

| // We set a minimum column width at '3' | ||

| val minimumColWidth = 3 | ||

|

|

||

| //Regular expression matching full width characters |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Very small nit that might fail scalastyle -- space after comment slashes

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

OK, thanks.

| val minimumColWidth = 3 | ||

|

|

||

| //Regular expression matching full width characters | ||

| val fullWidthRegex = """[\u1100-\u115F\u2E80-\uA4CF\uAC00-\uD7A3\uF900-\uFAFF\uFE10-\uFE19\uFE30-\uFE6F\uFF00-\uFF60\uFFE0-\uFFE6]""".r |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I wonder if detecting this using a regex for each character is slow? probably not meaningfully enough to care about, but I wonder.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I generated 1000 strings, each consisting of 1000 characters with a random unicode of 0x0000-0xFFFF. (a total of 1 million characters.)

Then use this regular expression to find the full width character of these strings.

I tested 100 rounds and then averaged.

It takes 49 milliseconds to complete matching all 1000 strings.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

OK, not worth optimizing now.

| for (row <- rows) { | ||

| for ((cell, i) <- row.zipWithIndex) { | ||

| colWidths(i) = math.max(colWidths(i), cell.length) | ||

| colWidths(i) = math.max(colWidths(i), cell.length + fullWidthRegex.findAllIn(cell).size) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What about a utility method for the x.length + fullWidthRegex.findAllIn(x).size expression that recurs here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I committed a new version. See if this is appropriate please ?

@srowen

Putting `fullWidthRegex` outside `stringHalfWidth` is to reduce the cost of compiling regular expressions. Since compilation is expensive, frequently used Regexes should be constructed once.

| // The number of half width of a string | ||

| def stringHalfWidth = (str: String) => { | ||

| str.length + fullWidthRegex.findAllIn(str).size | ||

| } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

better to move this method into util.Utils or something as a helper function?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

better to add tests for the helper function, too.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Moved it into util.Utils.

| val minimumColWidth = 3 | ||

|

|

||

| // Regular expression matching full width characters | ||

| val fullWidthRegex = """[\u1100-\u115F\u2E80-\uA4CF\uAC00-\uD7A3\uF900-\uFAFF\uFE10-\uFE19\uFE30-\uFE6F\uFF00-\uFF60\uFFE0-\uFFE6]""".r |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This line goes over the limit, 100.

Move the stringHalfWidth method into util.Utils and add tests for it.

| * A full width character occupies two half widths | ||

| */ | ||

| def stringHalfWidth(str: String): Int = { | ||

| if(str == null) 0 else str.length + fullWidthRegex.findAllIn(str).size |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nit: space before if

| /** | ||

| * Regular expression matching full width characters | ||

| */ | ||

| private lazy val fullWidthRegex = ("""[""" + |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Doesn't need to be lazy

|

Also, can you add tests in |

|

Test build #4305 has finished for PR 22048 at commit

|

|

Why did it fail? |

|

Please help me @srowen |

|

Have a look at the test results, which show the style problem it flags: So it doesn't like non-ASCII chars after all! I wonder how they exist in the other parts of the source. I checked and other parts just disable the check for sections of code where it's appropriate to use unicode chars. Just wrap these parts of the code in: Sorry, didn't know this was needed. |

|

style errors, we cannot include non-ascii characters in files; |

|

+1 for adding |

To solve style errors because of include non-ascii characters in files. Disable the check for sections of code where it's appropriate to use unicode chars.

|

I see. A new commit has been done. |

|

retest this please |

|

Hm! so the warning triggers on unicode escape sequences? That seems like a bug in the style checker. Oh well, yes keep them. I'll retest ... |

|

Test build #4329 has finished for PR 22048 at commit

|

|

LGTM, then let me ask @gatorsmile |

| """\uFE10-\uFE19""" + | ||

| """\uFE30-\uFE6F""" + | ||

| """\uFF00-\uFF60""" + | ||

| """\uFFE0-\uFFE6""" + |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

A general question.

- How to get this Regex list? Any reference? It sounds like this should be a general problem

- What is the performance impact?

Can you answer them and post them in the PR description?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

- How to get this Regex list? Any reference? It sounds like this should be a general problem

I looked at all the 0x0000-0xFFFF characters (unicode) and showed them under Xshell, then found all the full width characters. Get the regular expression.

- What is the performance impact?

I generated 1000 strings, each consisting of 1000 characters with a random unicode of 0x0000-0xFFFF. (a total of 1 million characters.)

Then use this regular expression to find the full width character of these strings.

I tested 100 rounds and then averaged.

It takes 49 milliseconds to complete matching all 1000 strings.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I looked at all the 0x0000-0xFFFF characters (unicode) and showed them under Xshell, then found all the full width characters. Get the regular expression.

Can you describe them there and put a references to a public unicode document?

See the comment in UTF8String;

https://github.com/apache/spark/blob/master/common/unsafe/src/main/java/org/apache/spark/unsafe/types/UTF8String.java#L65

It takes 49 milliseconds to complete matching all 1000 strings.

How about some additional overheads when calling showString as compared to showString w/o this patch?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can you describe them there and put a references to a public unicode document?

This is a regular expression match using unicode, regardless of the specific encoding.

For example, the following string is encoded using gbk instead of utf8, and the match still works:

val bytes = Array[Byte](0xd6.toByte, 0xd0.toByte, 0xB9.toByte, 0xFA.toByte)

val s1 = new String(bytes, "gbk")

println(s1) //中国

val fullWidthRegex = ("""[""" +

// scalastyle:off nonascii

"""\u1100-\u115F""" +

"""\u2E80-\uA4CF""" +

"""\uAC00-\uD7A3""" +

"""\uF900-\uFAFF""" +

"""\uFE10-\uFE19""" +

"""\uFE30-\uFE6F""" +

"""\uFF00-\uFF60""" +

"""\uFFE0-\uFFE6""" +

// scalastyle:on nonascii

"""]""").r

println(fullWidthRegex.findAllIn(s1).size) //2

This regular expression is obtained experimentally under a specific font.

I don't understand what you are going to do.

How about some additional overheads when calling showString as compared to showString w/o this patch?

I tested a Dataset consisting of 100 rows, each row has two columns, one column is the index (0-99), and the other column is a random string of length 100 characters, and then the showString display is called separately.

The original showString method (w/o this patch) took about 42ms, and the improved time took about 46ms, and the performance was about 10% worse.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think this is fine. Just copy a summary of your comments here into the comments in the code. Yes this has nothing to do with UTF8 encoding directly. You are matching UCS2 really, 16bit char values.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do I need to merge the above commited into one commit,

Or add another new commit?

Or change the last commit comments ?

@srowen

|

Just add a new commit with a little info about what this does and how you generated it. The commits are squashed anyway. |

…blem Some characters (called full-width characters) occupy two ordinary characters (called half-width characters) width when displayed in a terminal such as Xshell. Therefore, when the Dataset.show() method is called, if there are full-width characters and half-width characters at the same time, alignment problems will occur. This commit adds a method to calculate the number of half widths in a given string, so that it can get the correct fill when calling show method, which gives the display a more perfect alignment. Performance impact: Tested a Dataset consisting of 100 rows, each row has two columns, one column is the index (0-99), and the other column is a random string of length 100 characters, and then the showString display is called separately. The original show method (w/o this patch) took about 42ms, and the improved time took about 46ms, the performance was about 10% worse.

|

I see, Thinks. |

|

Merging to master and 2.4, though for some reason I can't back-port with the merge script right now. Will try later. |

|

@dongjoon-hyun could I ask a favor; can you try merging this? the merge script should say it's merged and offer to back-port to 2.4. However I keep getting errors about it not being mergeable, although all upstream branches and my clone all are up to date. |

|

@srowen . Sure. I'll try to backport this. |

|

Ur, it seems that I'm facing the same situation. In this case, should we just cherry-pick to |

…alignment problem This is not a perfect solution. It is designed to minimize complexity on the basis of solving problems. It is effective for English, Chinese characters, Japanese, Korean and so on. ```scala before: +---+---------------------------+-------------+ |id |中国 |s2 | +---+---------------------------+-------------+ |1 |ab |[a] | |2 |null |[中国, abc] | |3 |ab1 |[hello world]| |4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] | |5 |中国(你好)a |[“中(国), 312] | |6 |中国山(东)服务区 |[“中(国)] | |7 |中国山东服务区 |[中(国)] | |8 | |[中国] | +---+---------------------------+-------------+ after: +---+-----------------------------------+----------------+ |id |中国 |s2 | +---+-----------------------------------+----------------+ |1 |ab |[a] | |2 |null |[中国, abc] | |3 |ab1 |[hello world] | |4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] | |5 |中国(你好)a |[“中(国), 312]| |6 |中国山(东)服务区 |[“中(国)] | |7 |中国山东服务区 |[中(国)] | |8 | |[中国] | +---+-----------------------------------+----------------+ ``` ## What changes were proposed in this pull request? When there are wide characters such as Chinese characters or Japanese characters in the data, the show method has a alignment problem. Try to fix this problem. ## How was this patch tested? (Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)  Please review http://spark.apache.org/contributing.html before opening a pull request. Closes #22048 from xuejianbest/master. Authored-by: xuejianbest <[email protected]> Signed-off-by: Sean Owen <[email protected]>

|

OK, it isn't just me. We'll see if it's a broader problem. I manually cherry-picked to branch-2.4 |

This is not a perfect solution. It is designed to minimize complexity on the basis of solving problems.

It is effective for English, Chinese characters, Japanese, Korean and so on.

What changes were proposed in this pull request?

When there are wide characters such as Chinese characters or Japanese characters in the data, the show method has a alignment problem.

Try to fix this problem.

How was this patch tested?

(Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)

Please review http://spark.apache.org/contributing.html before opening a pull request.