[SPARK-23426][SQL] Use hive ORC impl and disable PPD for Spark 2.3.0

#20610

hive ORC implementation for Spark 2.3.0

     .stringConf
     .checkValues(Set("hive", "native"))
    -.createWithDefault("native")
    +.createWithDefault("hive")

We also need to disable the ORC pushdown, because the ORC reader of Hive 1.2.1 has a few bugs.

BTW, we don't have a test case for that, do we? Actually, I want to have a test case for that.
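
For readers unfamiliar with the config builder in the diff at the top of this thread, `checkValues` plus `createWithDefault` amounts to an allow-list with a fallback value. The sketch below is a simplified stand-in and not Spark's actual `ConfigBuilder`; `StringConf` and `resolve` are hypothetical names introduced only for illustration.

```scala
// Hypothetical, simplified stand-in for a validated string config entry.
// It is NOT Spark's ConfigBuilder; it only mirrors the idea in the diff.
final case class StringConf(key: String, allowed: Set[String], default: String) {
  require(allowed.contains(default), s"default '$default' must be an allowed value")

  // Returns the default when unset, the user value when it is allowed,
  // and fails fast on anything outside the allow-list.
  def resolve(userValue: Option[String]): String = userValue match {
    case None => default
    case Some(v) if allowed.contains(v) => v
    case Some(v) =>
      throw new IllegalArgumentException(
        s"The value of $key should be one of ${allowed.mkString(", ")}, but was $v")
  }
}

// After this PR the default is "hive"; "native" remains an opt-in value.
val orcImpl = StringConf("spark.sql.orc.impl", Set("hive", "native"), default = "hive")
```

Under this model, changing the default only affects users who never set the key; anyone who explicitly chose `native` keeps it.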

docs/sql-programming-guide.md

Outdated

     <tr>
       <td><code>spark.sql.orc.impl</code></td>
    -  <td><code>native</code></td>
    +  <td><code>hive</code></td>

We do not need this in the migration guide. Please create a new section for ORC.

Yep.

Is there a reason the impl was changed back to the old implementation? This breaks `spark.read.orc`.

hive ORC implementation for Spark 2.3.0 → hive ORC impl and disable PPD for Spark 2.3.0

      <td><code>true</code></td>
      <td>Enables vectorized orc decoding in <code>native</code> implementation. If <code>false</code>, a new non-vectorized ORC reader is used in <code>native</code> implementation. For <code>hive</code> implementation, this is ignored.</td>
    </tr>
    </table>

@gatorsmile, this is now a separate section.

Thanks!

Test build #87450 has finished for PR 20610 at commit

Ur, can I make another PR to fix the test failures?

Test build #87451 has finished for PR 20610 at commit

I think it makes sense to fix the test cases in the same PR, as long as they are not bug fixes.

No problem.

    override def afterAll(): Unit = {
      spark.sessionState.conf.unsetConf(SQLConf.ORC_IMPLEMENTATION)
      super.afterAll()
    }

The test coverage is the same.

    override def afterAll(): Unit = {
      spark.sessionState.conf.unsetConf(SQLConf.ORC_IMPLEMENTATION)
      super.afterAll()

    try {
      spark.sessionState.conf.unsetConf(SQLConf.ORC_IMPLEMENTATION)
    } finally {
      super.afterAll()
    }

Thanks. Yep. It's done.
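
The point of the `try`/`finally` suggestion in this thread can be shown without Spark. The sketch below uses hypothetical stand-in classes (`BaseSuite`, `UnsafeSuite`, `SafeSuite`) rather than Spark's test base classes, and a `cleanup()` that always throws, to show that only the `try`/`finally` form guarantees the parent teardown still runs.

```scala
// Hypothetical stand-ins for a test suite hierarchy; not Spark's actual classes.
trait BaseSuite {
  var stopped = false
  // In a real suite this would release shared resources, e.g. stop the session.
  def afterAll(): Unit = { stopped = true }
}

class UnsafeSuite extends BaseSuite {
  def cleanup(): Unit = throw new IllegalStateException("cleanup failed")
  override def afterAll(): Unit = {
    cleanup()          // if this throws, super.afterAll() is never reached
    super.afterAll()
  }
}

class SafeSuite extends BaseSuite {
  def cleanup(): Unit = throw new IllegalStateException("cleanup failed")
  override def afterAll(): Unit = {
    try cleanup()
    finally super.afterAll() // runs even when cleanup() throws
  }
}
```

In both classes the exception still propagates to the caller; the difference is only whether the parent teardown has run by then.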

docs/sql-programming-guide.md

Outdated

|

|

||

| ## ORC Files | ||

|

|

||

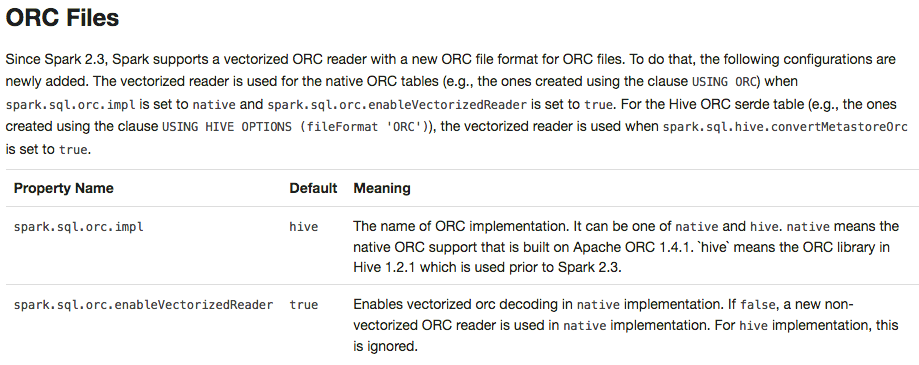

| Since Spark 2.3, Spark supports a vectorized ORC reader with a new ORC file format for ORC files. To do that, the following configurations are newly added. The vectorized reader is used for the native ORC tables (e.g., the ones created using the clause `USING ORC`) when `spark.sql.orc.impl` is set to `native` and `spark.sql.orc.enableVectorizedReader` is set to `true`. For the Hive ORC serde table (e.g., the ones created using the clause `USING HIVE OPTIONS (fileFormat 'ORC')`), the vectorized reader is used when `spark.sql.hive.convertMetastoreOrc` is set to `true`. |

table -> tables

is set to true -> is also set to true?

Ur, there are multiple occurrences of `is set to true`. Which one do you mean?

      <td>Enables vectorized orc decoding in <code>native</code> implementation. If <code>false</code>, a new non-vectorized ORC reader is used in <code>native</code> implementation. For <code>hive</code> implementation, this is ignored.</td>
    </tr>
    </table>

The description of `spark.sql.orc.filterPushdown` has disappeared?

Yes. It has been disabled again, @viirya.

Test build #87453 has finished for PR 20610 at commit

docs/sql-programming-guide.md

Outdated

    native ORC tables (e.g., the ones created using the clause `USING ORC`) when `spark.sql.orc.impl`
    is set to `native` and `spark.sql.orc.enableVectorizedReader` is set to `true`. For the Hive ORC
    serde tables (e.g., the ones created using the clause `USING HIVE OPTIONS (fileFormat 'ORC')`),
    the vectorized reader is used when `spark.sql.hive.convertMetastoreOrc` is set to `true`.

@viirya, I split it into multiple lines. Could you point it out once more?

when spark.sql.hive.convertMetastoreOrc is (also) set to true?

Thank you. I see.
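
Read with the suggested "also", the paragraph under review describes a small decision rule, which can be sketched as a pure function. This is an illustrative reading of the documentation text and not Spark's internal logic; `OrcTableKind`, `OrcSettings`, and `usesVectorizedReader` are hypothetical names.

```scala
// Hypothetical helper encoding the documented conditions; all names are illustrative.
sealed trait OrcTableKind
case object NativeOrcTable extends OrcTableKind    // e.g. created with USING ORC
case object HiveSerdeOrcTable extends OrcTableKind // e.g. USING HIVE OPTIONS (fileFormat 'ORC')

final case class OrcSettings(
    impl: String,                    // spark.sql.orc.impl
    enableVectorizedReader: Boolean, // spark.sql.orc.enableVectorizedReader
    convertMetastoreOrc: Boolean)    // spark.sql.hive.convertMetastoreOrc

def usesVectorizedReader(kind: OrcTableKind, s: OrcSettings): Boolean = {
  // Both table kinds need the native implementation with vectorization enabled.
  val nativeVectorized = s.impl == "native" && s.enableVectorizedReader
  kind match {
    case NativeOrcTable    => nativeVectorized
    // Hive serde tables additionally ("also") need metastore conversion.
    case HiveSerdeOrcTable => nativeVectorized && s.convertMetastoreOrc
  }
}
```

With the `hive` default introduced by this PR, the function returns `false` for both table kinds unless the user opts in to `native`.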

Test build #87460 has finished for PR 20610 at commit

Test build #87468 has finished for PR 20610 at commit

Retest this please.

docs/sql-programming-guide.md

Outdated

    <tr>
      <td><code>spark.sql.orc.impl</code></td>
      <td><code>hive</code></td>
      <td>The name of ORC implementation. It can be one of <code>native</code> and <code>hive</code>. <code>native</code> means the native ORC support that is built on Apache ORC 1.4.1. `hive` means the ORC library in Hive 1.2.1 which is used prior to Spark 2.3.</td>

Remove "which is used prior to Spark 2.3"?

Thanks!

Test build #87471 has finished for PR 20610 at commit

    class FileStreamSinkSuite extends StreamTest {
      import testImplicits._

      override def beforeAll(): Unit = {

nit: a simpler way to fix this:

    override val conf = super.conf.copy(SQLConf.ORC_IMPLEMENTATION -> "native")

Hi, @cloud-fan. I tested it, but that doesn't work in this `FileStreamSinkSuite`.

Test build #87475 has finished for PR 20610 at commit

LGTM Thanks! Merged to master/2.3

## What changes were proposed in this pull request?

To prevent any regressions, this PR changes ORC implementation to `hive` by default like Spark 2.2.X. Users can enable `native` ORC. Also, ORC PPD is also restored to `false` like Spark 2.2.X.

## How was this patch tested?

Pass all test cases.

Author: Dongjoon Hyun <[email protected]>

Closes #20610 from dongjoon-hyun/SPARK-ORC-DISABLE.

(cherry picked from commit 2f0498d)
Signed-off-by: gatorsmile <[email protected]>

Thank you, @gatorsmile, @cloud-fan, and @viirya.

## What changes were proposed in this pull request?

To prevent any regressions, this PR changes ORC implementation to `hive` by default like Spark 2.2.X. Users can enable `native` ORC. Also, ORC PPD is also restored to `false` like Spark 2.2.X.

## How was this patch tested?

Pass all test cases.
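
For users who want the pre-PR behavior back, the opt-in mentioned in the description could look like the fragment below. It is a sketch that assumes an existing `SparkSession` named `spark` (as in `spark-shell`) on Spark 2.3.0; the three keys are the ones discussed in this conversation.

```scala
// Assumes an existing SparkSession named `spark`, e.g. inside spark-shell.
// Opt in to the native ORC implementation instead of the new `hive` default.
spark.conf.set("spark.sql.orc.impl", "native")
// Re-enable ORC predicate pushdown (PPD), which this PR restores to false by default.
spark.conf.set("spark.sql.orc.filterPushdown", "true")
// Vectorized decoding applies only to the native implementation.
spark.conf.set("spark.sql.orc.enableVectorizedReader", "true")
```

These are session-level settings, so they affect only the session in which they are set.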