
[SPARK-22793][SQL][BACKPORT-2.0] Memory leak in Spark Thrift Server #19989


Can one of the admins verify this patch?

I am not sure about this change actually. In this way all the users would use the same

cc @liufengdb

I think this method can take care of resource cleanup automatically: https://github.com/apache/spark/blob/master/sql/hive-thriftserver/src/main/java/org/apache/hive/service/cli/session/SessionManager.java#L151 Could you make a heap dump and find out why the sessions are not cleaned up?

As I debugged, every time I connect to the Thrift server through beeline, the

BTW, the Session Timeout Checker does not work in SessionManager, and I created another PR to follow up on it: #20025. Thanks @liufengdb

Could anybody please check this PR or help find out how to correct it? It seems to be a critical bug. Thanks very much! @cloud-fan @rxin @gatorsmile @vanzin @jerryshao @zsxwing

This is not a backport, as this patch has not been merged to master yet. Let's move the discussion to the primary PR, which is against the master branch.

OK, please move to #20029. Thanks all.

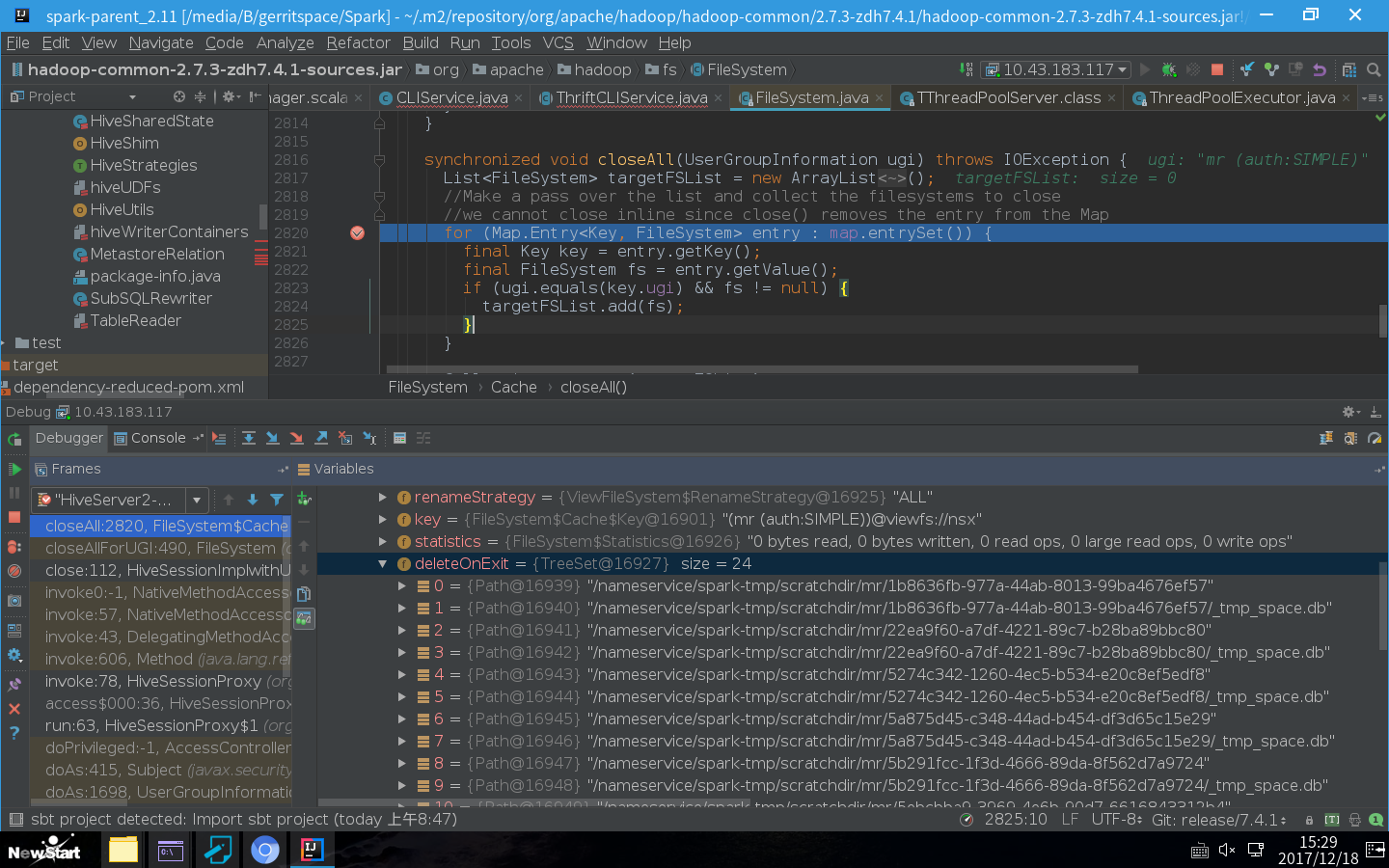

# What changes were proposed in this pull request?

1. Start HiveThriftServer2.
2. Connect to thriftserver through beeline.
3. Close the beeline.
4. Repeat steps 2 and 3 many times.

We found that many directories under the paths `hive.exec.local.scratchdir` and `hive.exec.scratchdir` are never dropped. As we know, the scratchdir is added to `deleteOnExit` when it is created. This means that the cache size of FileSystem `deleteOnExit` keeps increasing until the JVM terminates.

In addition, we used `jmap -histo:live [PID]` to print the size of objects in the HiveThriftServer2 process. The number of `org.apache.spark.sql.hive.client.HiveClientImpl` and `org.apache.hadoop.hive.ql.session.SessionState` objects keeps increasing even after all the beeline connections are closed, which may cause a memory leak.

# How was this patch tested?

Manual tests.

This PR follows up #19989.

Author: zuotingbing <[email protected]>

Closes #20029 from zuotingbing/SPARK-22793.

(cherry picked from commit be9a804)
Signed-off-by: gatorsmile <[email protected]>


@gatorsmile @liufengdb Could you please also check this PR? It is a [BACKPORT-2.0] of #20029 from master/2.3.

@zuotingbing No new 2.0 release is planned. Thus, we do not backport it to 2.0.

OK, got it. Thanks!


What changes were proposed in this pull request?

1. Start HiveThriftServer2.
2. Connect to thriftserver through beeline.
3. Close the beeline.
4. Repeat steps 2 and 3 many times.

We found that many directories under the paths `hive.exec.local.scratchdir` and `hive.exec.scratchdir` are never dropped. As we know, the scratchdir is added to `deleteOnExit` when it is created. This means that the cache size of FileSystem `deleteOnExit` keeps increasing until the JVM terminates.

In addition, we used `jmap -histo:live [PID]` to print the size of objects in the HiveThriftServer2 process. The number of `org.apache.spark.sql.hive.client.HiveClientImpl` and `org.apache.hadoop.hive.ql.session.SessionState` objects keeps increasing even after all the beeline connections are closed, which caused the memory leak.

How was this patch tested?

Manual tests.
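To illustrate why a `deleteOnExit` registry can grow without bound, here is a minimal sketch in plain Java. It is a simplified model, not Hadoop's or Spark's actual code: the class `DeleteOnExitModel`, its methods, and the `/tmp/hive/session-N` paths are hypothetical names chosen for illustration. It assumes each session registers one scratch directory when it opens, and compares closing sessions with and without removing that entry.

```java
import java.util.Set;
import java.util.TreeSet;

/** Simplified stand-in for a file system that remembers paths to delete at JVM exit. */
public class DeleteOnExitModel {
    // Paths registered for deletion at exit; entries stay until cancelled or the JVM stops.
    private final Set<String> deleteOnExit = new TreeSet<>();

    void register(String path) { deleteOnExit.add(path); }

    void cancel(String path) { deleteOnExit.remove(path); }

    int pending() { return deleteOnExit.size(); }

    /** Opens and closes the given number of sessions; returns how many paths remain registered. */
    static int simulate(int sessions, boolean cleanUpOnClose) {
        DeleteOnExitModel fs = new DeleteOnExitModel();
        for (int i = 0; i < sessions; i++) {
            String scratchDir = "/tmp/hive/session-" + i; // hypothetical scratch directory
            fs.register(scratchDir);                      // session opens
            if (cleanUpOnClose) {
                fs.cancel(scratchDir);                    // session closes and removes its entry
            }
        }
        return fs.pending();
    }

    public static void main(String[] args) {
        System.out.println("without cleanup: " + simulate(1000, false)); // prints 1000
        System.out.println("with cleanup:    " + simulate(1000, true));  // prints 0
    }
}
```

In this model the registry holds one entry per session that was ever opened unless the entry is removed on close, which matches the growth pattern described above for a long-running server process.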