-

Notifications

You must be signed in to change notification settings - Fork 3.4k

HBASE-25984: Avoid premature reuse of sync futures in FSHLog #3371

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

| */ | ||

| private void markFutureDoneAndOffer(SyncFuture future, long txid, Throwable t) { | ||

| future.done(txid, t); | ||

| syncFutureCache.offer(future); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This patch in the current form doesn't get rid of future overwrites as it does not seem to cause any issues in AsyncWAL case (based on code reading), but if the reviewers think we should do that, I can refactor accordingly.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Where is the future overwrite here? The call to 'done'?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@saintstack I documented the race here..

Once done() is called, the future can be reused immediately from another handler (without this patch). That was causing deadlocks in FSHLog. Based on my analysis of AsyncFSWAL, I think the overwrites are possible but it should not affect the correctness as the safe point is attained in a different manner. So wanted to check with Duo who is the expert on that.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sorry. Should have done more background reading before commenting.

|

💔 -1 overall

This message was automatically generated. |

|

💔 -1 overall

This message was automatically generated. |

|

💔 -1 overall

This message was automatically generated. |

|

💔 -1 overall

This message was automatically generated. |

|

💔 -1 overall

This message was automatically generated. |

|

💔 -1 overall

This message was automatically generated. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looks nice. Cache makes sense.

Should there be bounds on the cache size? There doesn't seem to be any.

I do wonder about all threads contending on the cache object (where, IIRC, there was on coordination before).

| */ | ||

| private void markFutureDoneAndOffer(SyncFuture future, long txid, Throwable t) { | ||

| future.done(txid, t); | ||

| syncFutureCache.offer(future); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Where is the future overwrite here? The call to 'done'?

| public SyncFutureCache(final Configuration conf) { | ||

| final int handlerCount = conf.getInt(HConstants.REGION_SERVER_HANDLER_COUNT, | ||

| HConstants.DEFAULT_REGION_SERVER_HANDLER_COUNT); | ||

| syncFutureCache = CacheBuilder.newBuilder().initialCapacity(handlerCount) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I thought this guava cache 'slow'. That Ben Manes tried to get a patch to guava that improved it but couldn't get interest so his caffeine cache has means of implementing gauava cache api.... so you can drop in his thing instead.

Maybe it doesn't matter here because scale of objects is small? It is critical section though.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah sweet, I heard about this Guava vs Caffeine thing, let me replace that. (didn't notice any performance issues with the patch but if we get some extra performance why not)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not sure if there is a benefit over a ThreadLocal in your case. The expiration time here seems to be only to evict when the thread dies, which a TL does automatically. A weakKey cache might be the closest equivalent to that.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

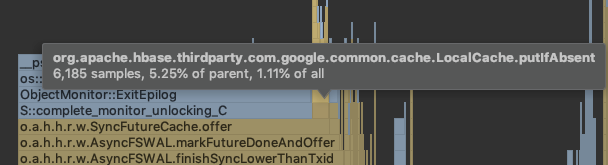

It also looks like your on 2.8.1, whereas a putIfAbsent optimization was added in 2.8.2 to avoid locking if the entry is present. That might help.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We don't need a putIfAbsent() either, switched to put() as we are ok with the overwrites here, it doesn't show up in the profiler now. Ya we can't use the

I'm not sure if there is a benefit over a ThreadLocal in your case.

Right, we can't use TL here because of the bug, but WeakKey cache seems like a good alternative, thanks for the pointer.

Good point, I can add a limit but practically it doesn't matter, I think. We only grow as many as handler threads (and some extra for temporary operations) which should be low thousands and we already have "expireAfterAccess" set to 2mins, so the cache should be GC-ed automatically. I'll still add a max limit, just in case.

Cache is keyed by the Thread object using the future. So the contention scope is the cache key but there is only a single thread using a sync future at any point in time, so in effect I think there is no contention. |

You might check if guava cache is backed by a ConcurrentHashMap (going by API, it looks like it). CHM #get and #put are lockless but the likes of #putIfAbsent are not; they lock the 'bucket' the key is in. We have a PerformanceEvaluation for WAL that might help here... Could try a run and see if you see the cache mentioned in a profile, etc. |

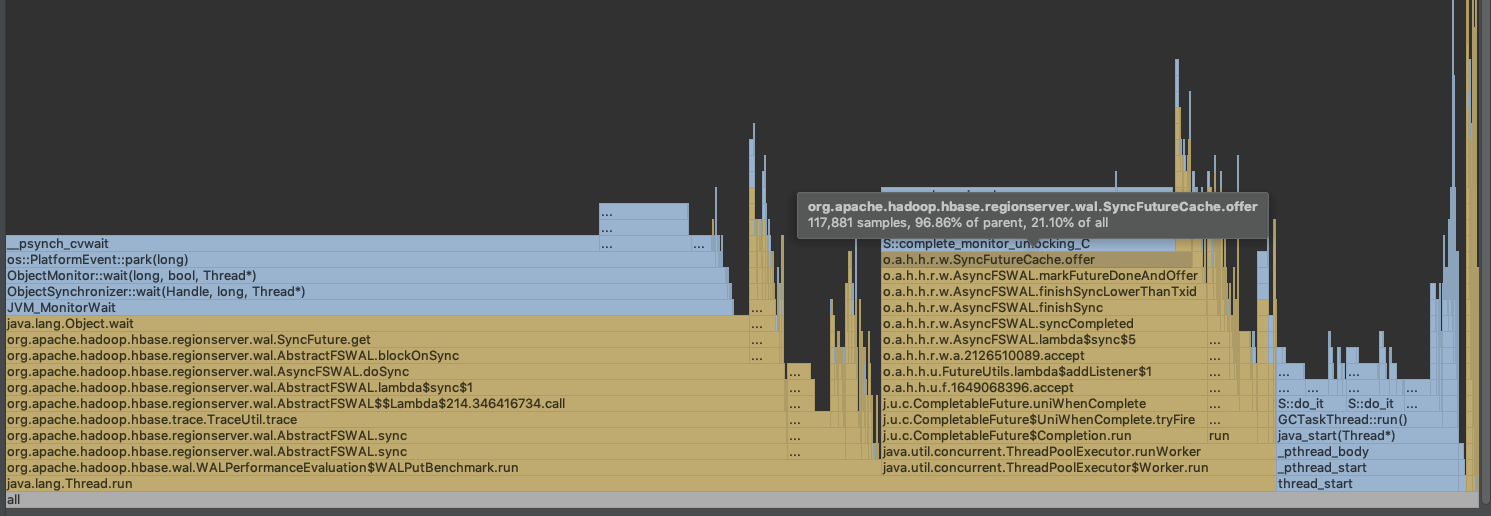

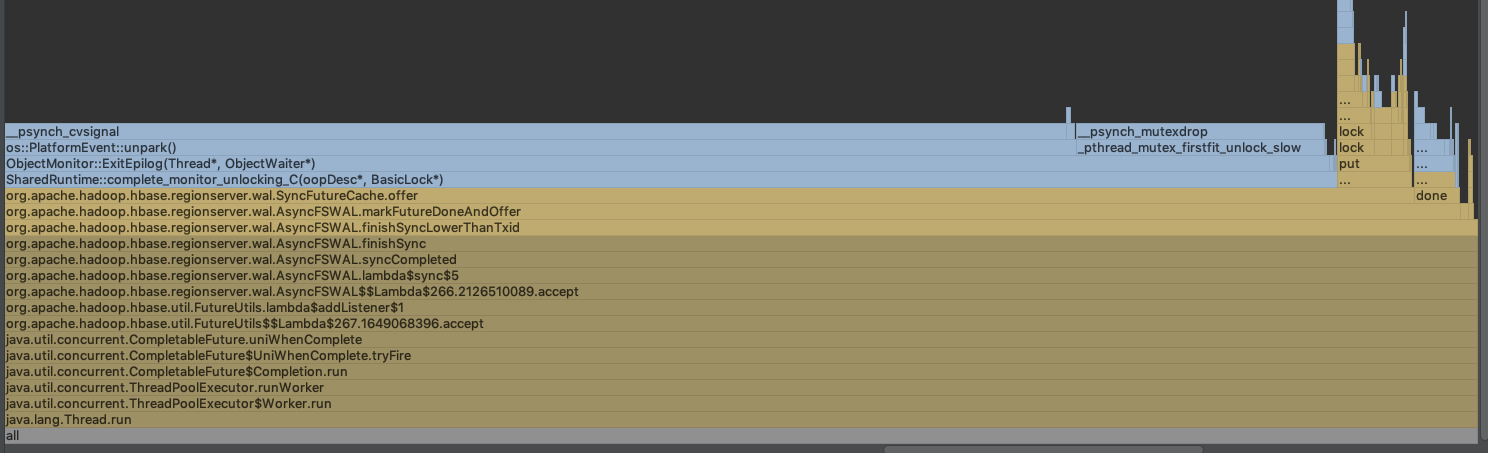

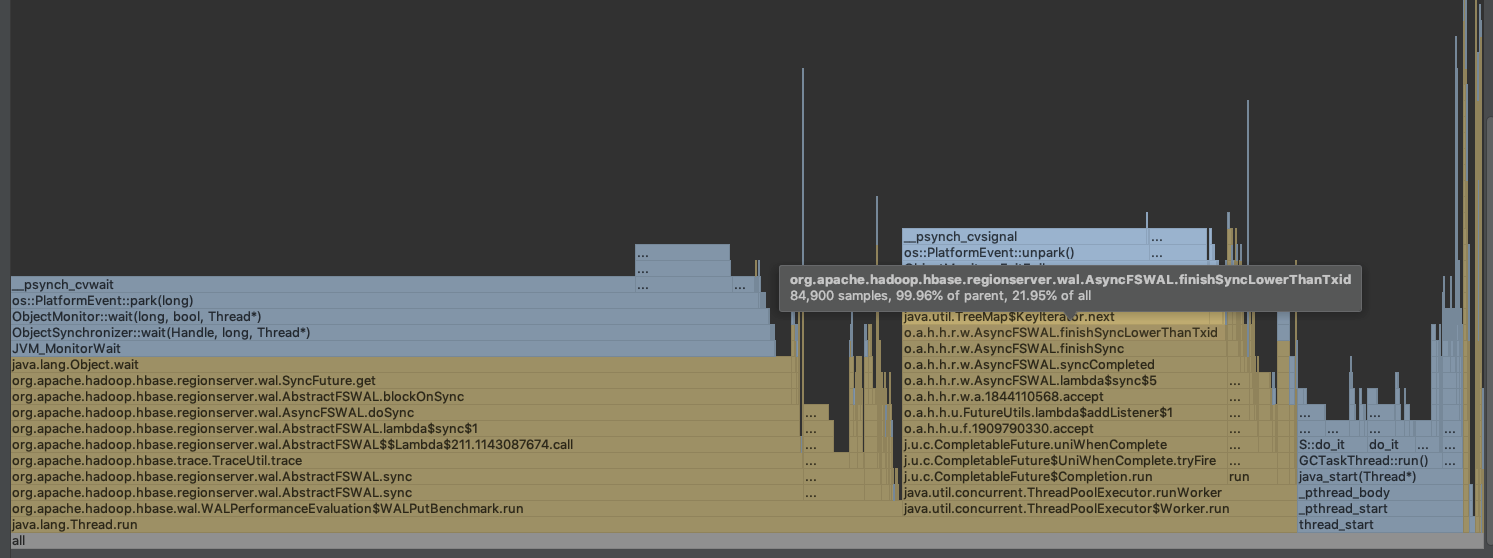

Nice catch, this coarse grained segment locking thing didn't cross my mind, thanks for the correction. I did run the WAL PE, here are the results, there is like ~1% drop for async WAL but performance was better in FSHLog case. Following are the results for the default AsyncFSWAL implementation. cc: @apurtell bin/hbase org.apache.hadoop.hbase.wal.WALPerformanceEvaluation -threads 256 -roll 10000 -verify With Patch: Without patch: It does show up on the profiler though.. doesn't seem like a great idea then? |

|

Let me try Caffeine and see what it looks like. |

|

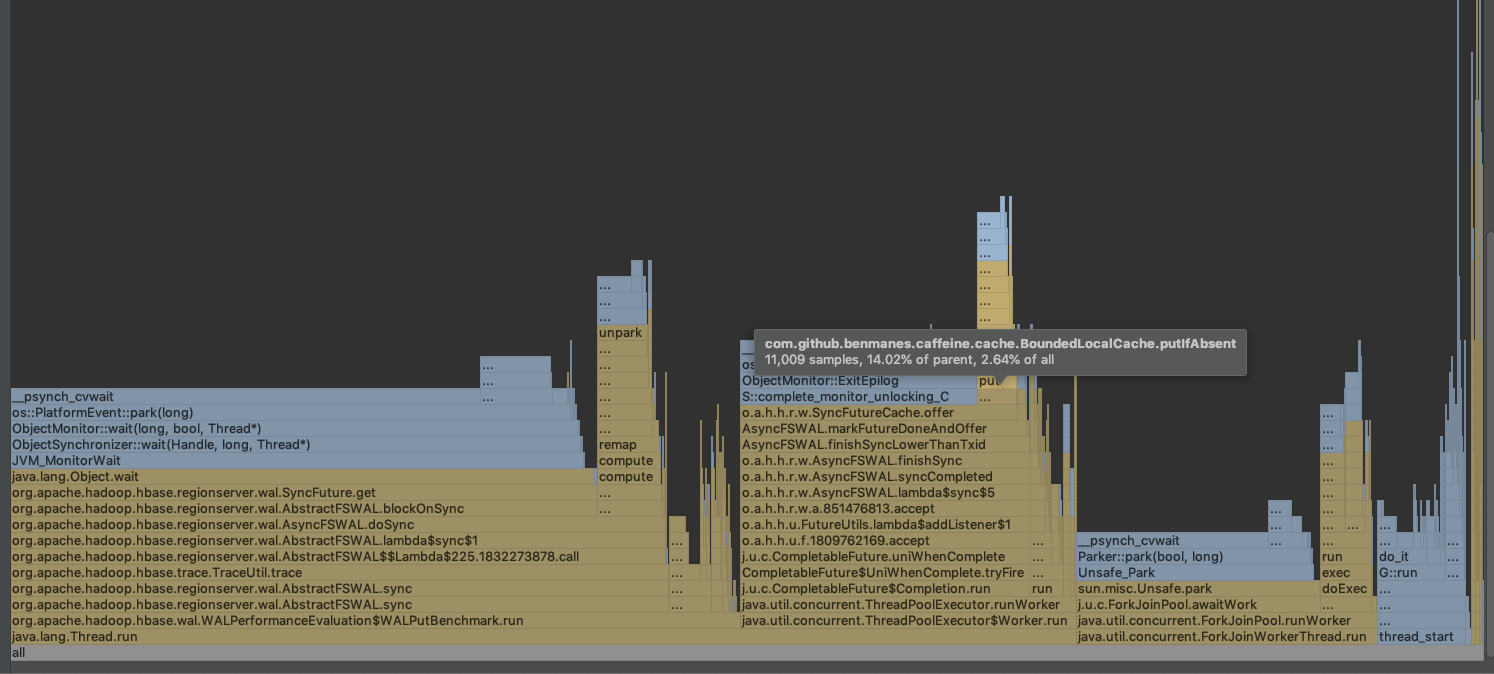

Caffeine seems to perform even worse overall (even though flame graph % is slightly lower), may be its some noise on my machine too. With patch using Caffeine |

|

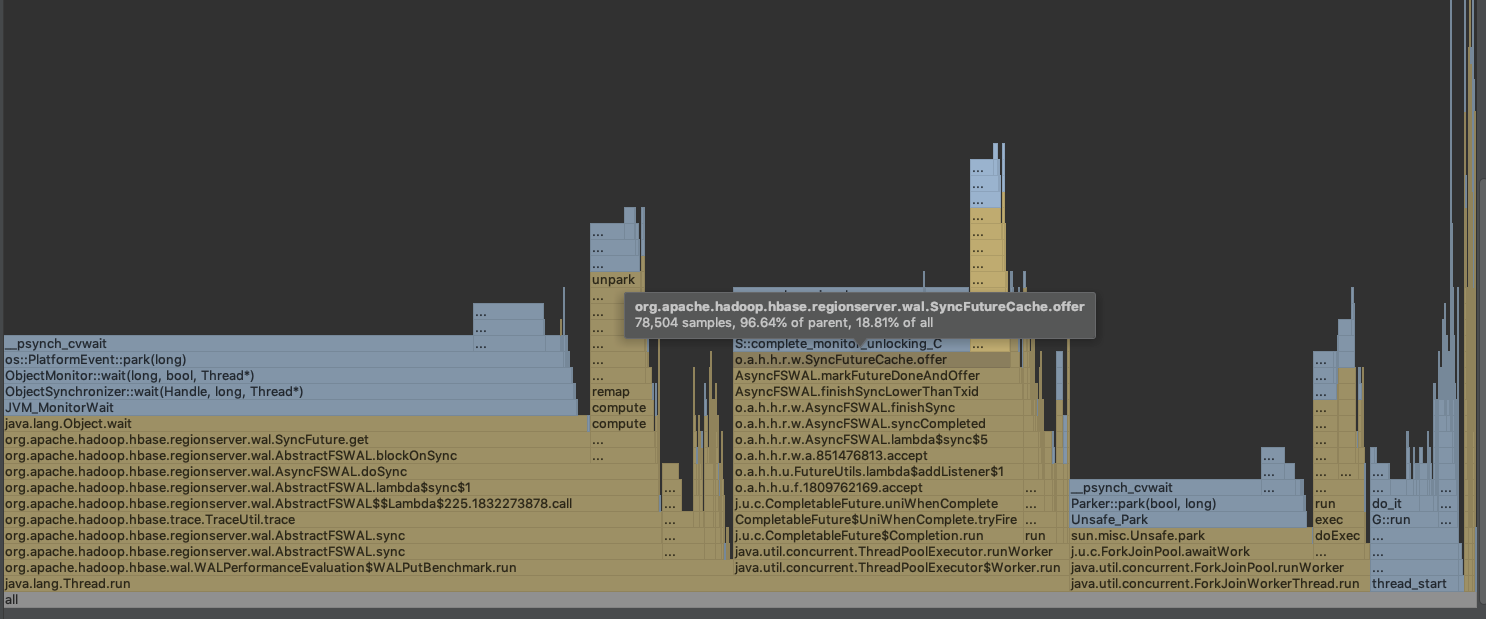

Flame graph without any changes.. overall it seems like we have 1.xx% overhead due to the cache and guava seems slightly better than Caffeine. cc: @saintstack / @apurtell Without Changes |

|

Thread local was used to avoid threads having to coordinate around a resource; this plus ringbuffer was meant to get us a write that was w/o locking (till we hit dfsclient at least). This impl is old though now given redo in asyncwal. Thanks for doing the nice graphs and perf runs above. Interesting on caffeine vs guava; probably this workload (but in compare of caffeine against our block cache CHM, CHM seemed better though caffeine looked to have much nicer 'locking' profile). It is hard to argue w/ a perf improvement (and improved safety). I'd be in favor of commit. Funny though how its 103 appends per sync w/ patch and w/o (buffer limit?). You might try using get/put instead of getIfPresent or whatever it is you are using just to see what is possible perf-wise. Have you looked at flamegraphs for asyncwal? Perhaps you'll see where the 1% is going? Yeah, would be good to get @Apache9 input. Nice find @bharathv (after reading the JIRA) |

Ya that makes sense. I also have a version that fixes that problem by retaining Thread locals (see this). It works by clarifying two states DONE and RELEASED, if this contention is a concern for any reason, just FYI.

I don't know what % of it is noise in the environment, it is on my local machine may have some interference (tried to minimize as much as I can).

It is going into putIfAbsent(), see below. |

|

I think the assumption here is that, the thread which holds the SyncFuture will block on it. If timed out, we will kill the regionserver so it is not a big deal to make a per thread cache. If this is not the case then, I agree to use a general cache, but it will require us to manually return the SyncFuture though. Will take a look at the performance result later. Thanks. |

Thanks for taking a look. Can you please point me to the code (I may have missed it). If we timed out (as seen by client), the timeout (in some form) is propagated back to the caller but in the background sync call could still be successful (if there are issues like slow syncs/HDFS etc) ? or did I misinterpret what you are saying? |

For example, on region flush And a DroppedSnapshotException will trigger a region server abort. Anyway, there is no strong guarantee on killing the regionserver when timeout, especially it is not easy to add this logic in SyncFuture, I'm OK with the approach here. Thanks. |

| syncFutureCache.asMap().compute(Thread.currentThread(), (thread, syncFuture) -> { | ||

| result[0] = syncFuture; | ||

| return null; | ||

| }); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

you could simplify this by using remove(key) instead of a computation, e.g.

SyncFuture future = syncFutureCache.asMap().remove(Thread.currentThread());

return (future == null) ? new SyncFuture() : future;There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Neat, done!

| public void cleanUp() { | ||

| if (syncFutureCache != null) { | ||

| syncFutureCache.invalidateAll(); | ||

| } | ||

| } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This might be a confusing method name, as cleanUp for the cache lib means that pending maintenance work is performed immediately (e.g. discarding any expired entries). It doesn't clear the cache like this does, so a user might be surprised by whichever mental model they have. Instead, please call this clear, invalidateAll, etc to avoid conflicting names.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Makes sense, done.

|

💔 -1 overall

This message was automatically generated. |

|

💔 -1 overall

This message was automatically generated. |

|

💔 -1 overall

This message was automatically generated. |

|

🎊 +1 overall

This message was automatically generated. |

|

🎊 +1 overall

This message was automatically generated. |

|

🎊 +1 overall

This message was automatically generated. |

|

Can someone sign off on this change please? Need a +1 to merge. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Left minor questions, overall looks good to ship, +1

| SyncFuture future = syncFutureCache.asMap().remove(Thread.currentThread()); | ||

| return (future == null) ? new SyncFuture() : future; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This suggestion was great. I think we can make this change in branch-1 as well (sorry I lost the track, not sure if branch-1 PR is still pending for merge or already merged)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There is no branch-1 patch (yet).

| @InterfaceAudience.Private | ||

| public final class SyncFutureCache { | ||

|

|

||

| private static final long SYNC_FUTURE_INVALIDATION_TIMEOUT_MINS = 2; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

2 min is good estimate? Trying to understand if we might run into overhead (cache entry getting expired followed by same entry getting created for same thread from pool)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If a handler remained idle for 2 mins, that indicates there isn't enough load on the server in which case there is no advantage in keeping this around. Usually only helpful if there is high load and potential for reuse so that we don't need to GC these small objects often.

…3371) Signed-off-by: Viraj Jasani <[email protected]> (cherry picked from commit 5a19bcf)

…3371) Signed-off-by: Viraj Jasani <[email protected]> (cherry picked from commit 5a19bcf)

…3371) Signed-off-by: Viraj Jasani <[email protected]> (cherry picked from commit 5a19bcf)

…3371) Signed-off-by: Viraj Jasani [email protected] (cherry picked from commit 5a19bcf)

…3371) Signed-off-by: Viraj Jasani [email protected] (cherry picked from commit 5a19bcf)

…3371) Signed-off-by: Viraj Jasani [email protected] (cherry picked from commit 5a19bcf)

…3371) Signed-off-by: Viraj Jasani <[email protected]> (cherry picked from commit 5a19bcf)

…3371) Signed-off-by: Viraj Jasani <[email protected]> (cherry picked from commit 5a19bcf)

…3371) Signed-off-by: Viraj Jasani <[email protected]> (cherry picked from commit 5a19bcf)

…3392) Signed-off-by: Viraj Jasani <[email protected]> (cherry picked from commit 5a19bcf)

…3393) Signed-off-by: Viraj Jasani <[email protected]> Signed-off-by: Wei-Chiu Chuang <[email protected]> (cherry picked from commit 5a19bcf)

…3394) Signed-off-by: Viraj Jasani <[email protected]> (cherry picked from commit 5a19bcf)

…3371) Signed-off-by: Viraj Jasani [email protected] (cherry picked from commit 5a19bcf)

…3398) Signed-off-by: Viraj Jasani [email protected] (cherry picked from commit 5a19bcf)

No description provided.