
Allow 10Gb for test runs in Cirrus. #4800



@pp-mo LGTM 👍

Let's see what cirrus-ci says...

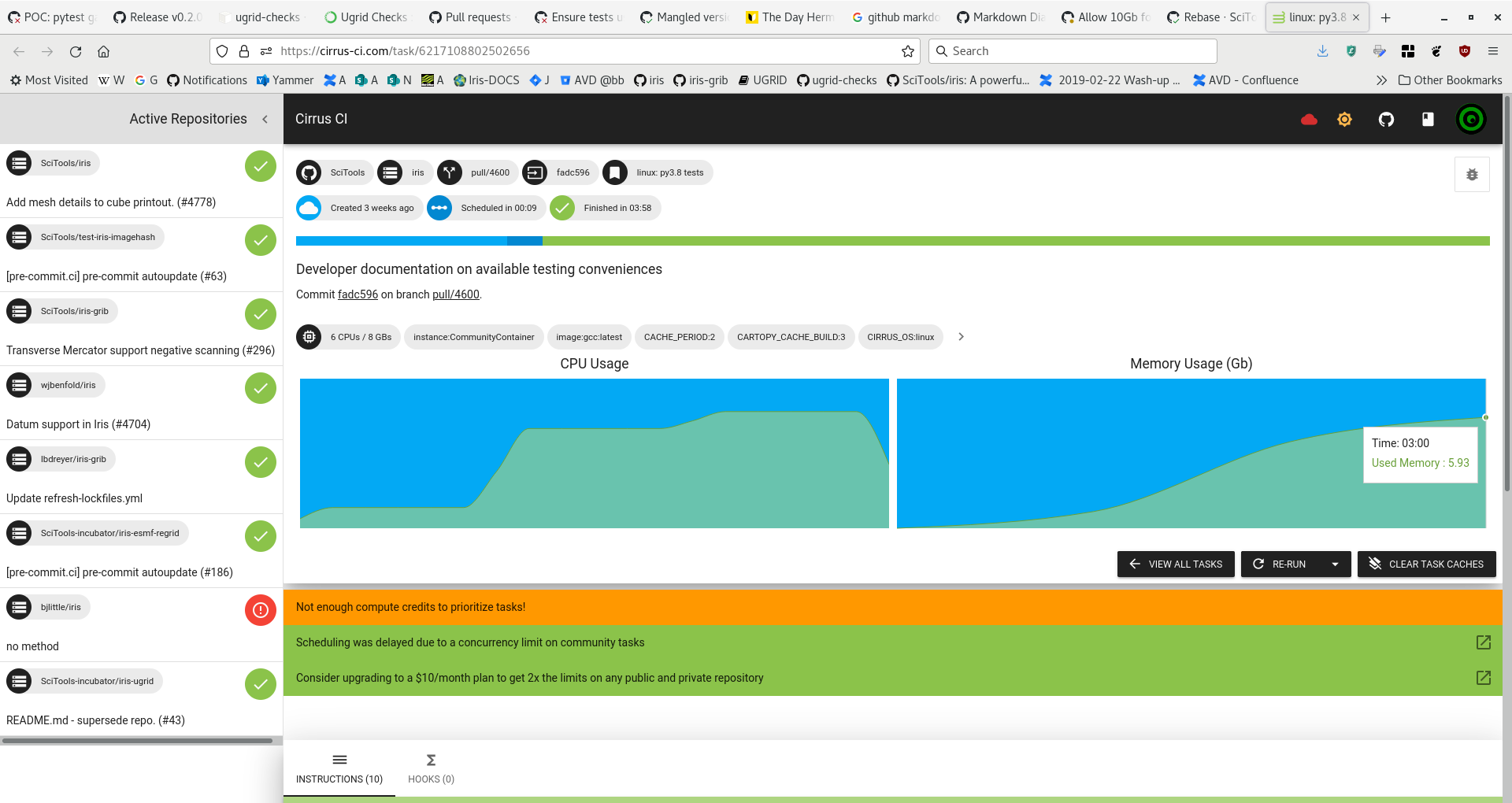

Following previous musings and information in #4794 (comment), @lbdreyer suggested that you can see, on the Cirrus memory graphs, that memory usage has increased. Numbers look roughly like this ...

Exactly why the memory usage should have notably increased is unclear.

@pp-mo Could be something... could be nothing, but it would be good to get a handle on this. That said, it may be that the run order of the tests has changed, for whatever reason, resulting in a spike? Just a suggestion for the observable change in behaviour, based on nothing more than speculation 🤔

Well now I added a few older ones to the table. I will try re-spinning the oldest one from the above list with the lowest memory burden (#4600, previously had 5.81 Mb) ...

According to https://github.com/SciTools/iris-test-data/releases, this footprint continues to bloat. Just a fact, not necessarily a smoking gun.

As an aside, I see that the benchmarking use of our test data is behind the curve... still on [...]. Not that it should matter here.

But I thought that, since we now only download versioned builds of iris-test-data, that should not affect the re-spins of "older PRs" that I have been doing?

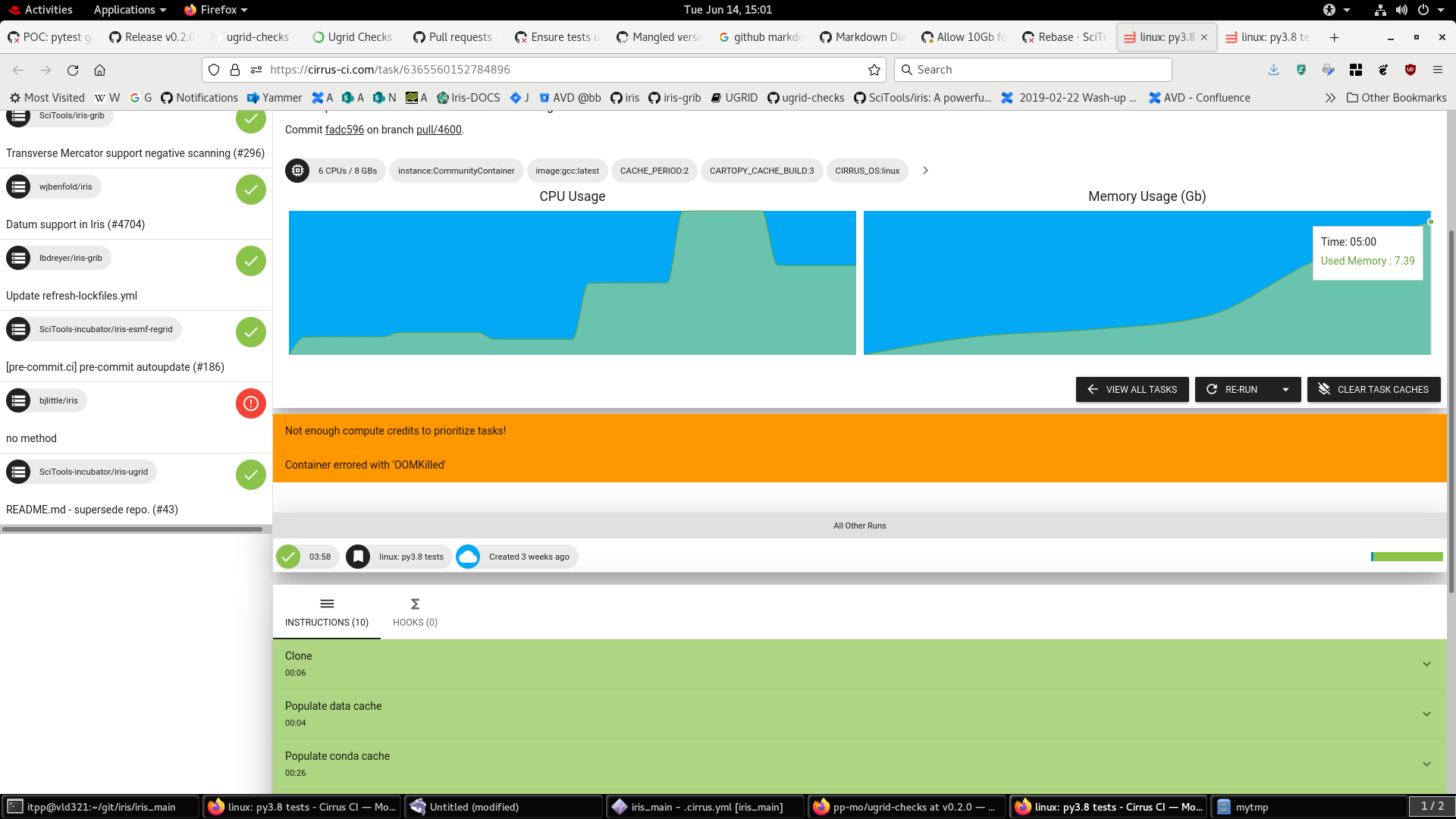

Here is the re-spin of the above #4600: I'm not clear why we "only" have a peak of 7.39Mb, when we've previously seen 8.00. So, it does look like 5.93 --> 7.39 Mb

(UPDATE: I was confused, it was the doctests that had problems).

I'm going to cancel + respin it ...

OK, the tests did now run. There is definitely something going on here. Possibly it's their platform?

I know this is still a kluge, 3941fe3, but a temporary quick win might still be worth it, right now.

An observation: tests in this PR seem to be running with 32 workers, which seems rather a lot. In the #4503 GHA version, it's only 2 workers.

FYI, I think we are not proceeding with this. |

Yep, it's just nice to have an explanation. #4767 only used 15 workers, so maybe they have been creeping up for some reason.
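For what it's worth, here is a rough sketch of how a worker count could be capped against a memory budget, since peak memory tends to scale with the number of parallel test workers. Everything in it is an assumption for illustration: the function name `capped_worker_count`, the per-worker memory figure, and the example numbers are hypothetical, not measurements from these runs or anything in the Iris CI config.

```python
import os


def capped_worker_count(memory_budget_gb, per_worker_gb, cpu_count=None):
    """Return a worker count limited by both CPU count and a memory budget.

    All arguments are illustrative: per_worker_gb is a guessed average
    peak memory per test worker, not a measured value.
    """
    if per_worker_gb <= 0:
        raise ValueError("per_worker_gb must be positive")
    if cpu_count is None:
        # os.cpu_count() can return None, so fall back to a single worker.
        cpu_count = os.cpu_count() or 1
    # How many workers fit inside the memory budget.
    by_memory = int(memory_budget_gb // per_worker_gb)
    # Never go below one worker, never above the CPU count.
    return max(1, min(cpu_count, by_memory))


if __name__ == "__main__":
    # Hypothetical numbers: a 10 GB budget, 0.5 GB per worker, 32 CPUs.
    print(capped_worker_count(memory_budget_gb=10, per_worker_gb=0.5, cpu_count=32))
```

If the workers here come from pytest-xdist (an assumption), the result could be passed as the `-n` value instead of letting it default to the CPU count.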

Closed by #4503

This promises to be an ultra-simple fix for our latest CI problems, as noted here

Wellll, a sufficient stop-gap anyway.

It could well still be the right time to adopt #4503