Closed

Description

Describe the bug

When reading a Parquet file that contains a MapType column, the following error is observed:

Job aborted due to stage failure: Task 1335 in stage 7.0 failed 4 times, most recent failure: Lost task 1335.3 in stage 7.0 (TID 2515, 10.148.44.12, executor 23): ai.rapids.cudf.CudfException: cuDF failure at: /ansible-managed/jenkins-slave/slave4/workspace/spark/cudf18_nightly/cpp/src/structs/structs_column_factories.cu:94: Child columns must have the same number of rows as the Struct column.
    at ai.rapids.cudf.Table.readParquet(Native Method)
    at ai.rapids.cudf.Table.readParquet(Table.java:683)
    at com.nvidia.spark.rapids.MultiFileCloudParquetPartitionReader.$anonfun$readBufferToTable$2(GpuParquetScan.scala:1533)
    at com.nvidia.spark.rapids.Arm.withResource(Arm.scala:28)
    at com.nvidia.spark.rapids.Arm.withResource$(Arm.scala:26)
    at com.nvidia.spark.rapids.FileParquetPartitionReaderBase.withResource(GpuParquetScan.scala:477)
    at com.nvidia.spark.rapids.MultiFileCloudParquetPartitionReader.$anonfun$readBufferToTable$1(GpuParquetScan.scala:1532)
    at com.nvidia.spark.rapids.Arm.withResource(Arm.scala:28)
    at com.nvidia.spark.rapids.Arm.withResource$(Arm.scala:26)
    at com.nvidia.spark.rapids.FileParquetPartitionReaderBase.withResource(GpuParquetScan.scala:477)
    at com.nvidia.spark.rapids.MultiFileCloudParquetPartitionReader.readBufferToTable(GpuParquetScan.scala:1520)
    at com.nvidia.spark.rapids.MultiFileCloudParquetPartitionReader.readBatch(GpuParquetScan.scala:1399)
    at com.nvidia.spark.rapids.MultiFileCloudParquetPartitionReader.$anonfun$next$2(GpuParquetScan.scala:1449)
    at com.nvidia.spark.rapids.Arm.withResource(Arm.scala:28)
    at com.nvidia.spark.rapids.Arm.withResource$(Arm.scala:26)
    at com.nvidia.spark.rapids.FileParquetPartitionReaderBase.withResource(GpuParquetScan.scala:477)
    at com.nvidia.spark.rapids.MultiFileCloudParquetPartitionReader.next(GpuParquetScan.scala:1422)
    at com.nvidia.spark.rapids.PartitionIterator.hasNext(GpuDataSourceRDD.scala:59)
    at com.nvidia.spark.rapids.MetricsBatchIterator.hasNext(GpuDataSourceRDD.scala:76)
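To make the exception message concrete, here is a minimal Python sketch of the invariant it names: every child of a struct column must have the same row count as the struct itself. This is a toy model, not cuDF code. The `Column` class and the functions `validate_struct` and `make_map_entries` are hypothetical, and modelling the map entries as a struct with `key` and `value` children is an assumption about how the map column is represented during the read.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Column:
    """Toy column: a name, a row count, and optional child columns."""
    name: str
    rows: int
    children: List["Column"] = field(default_factory=list)


def validate_struct(struct: Column) -> None:
    """Raise if any child row count differs from the struct's row count."""
    for child in struct.children:
        if child.rows != struct.rows:
            raise ValueError(
                f"child '{child.name}' has {child.rows} rows, "
                f"struct '{struct.name}' has {struct.rows}"
            )


def make_map_entries(keys: int, values: int) -> Column:
    """Model the entries of a map column as STRUCT<key, value> (assumption)."""
    return Column("entries", keys, [Column("key", keys), Column("value", values)])


# Consistent children: validation passes silently.
validate_struct(make_map_entries(keys=5, values=5))

# Mismatched children: the value column is one row short, so it is rejected.
try:
    validate_struct(make_map_entries(keys=5, values=4))
except ValueError as err:
    print("rejected:", err)
```

If the key and value columns decoded from a row group ever disagree in length, a check of this shape would fail in the same way as the reported error. Whether that is the actual cause here is not established by this issue.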

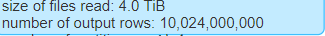

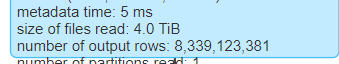

2 SQL charts from 1 successful run and a failed run:

Steps/Code to reproduce bug

The failure occurs in one of our LHA queries that runs on NGC.

It does not reproduce on every run; only some of several runs hit it.

The error also does not always fail the job: sometimes a retried task succeeds and the whole application finishes successfully.

Environment details (please complete the following information)

- Environment location: NVIDIA NGC

- Spark configuration settings related to the issue:

--executor-memory 32G \
--executor-cores 8 \
--driver-memory 16G \
--conf spark.cores.max=224 \
--conf spark.local.dir=${SPARK_LOCAL_DIR} \
--conf spark.eventLog.enabled=true \
--conf spark.driver.host=${SPARK_MASTER_HOST} \
--conf spark.driver.extraClassPath=${SPARK_CUDF_JAR}:${SPARK_RAPIDS_PLUGIN_JAR} \
--conf spark.executor.extraClassPath=/usr/lib:/usr/lib/ucx:${SPARK_CUDF_JAR}:${SPARK_RAPIDS_PLUGIN_JAR} \
--conf spark.executor.extraJavaOptions=-Djava.io.tmpdir=${SPARK_LOCAL_DIR}\ -Dai.rapids.cudf.prefer-pinned=true \
--conf spark.sql.broadcastTimeout=7200 \
--conf spark.sql.adaptive.enabled=false \
--conf spark.rapids.sql.explain=true \
--conf spark.sql.files.maxPartitionBytes=2G \
--conf spark.network.timeout=3600s \
--conf spark.executor.resource.gpu.vendor=nvidia.com \
--conf spark.executor.resource.gpu.amount=1 \
--conf spark.task.resource.gpu.amount=0.1 \
--conf spark.rapids.sql.concurrentGpuTasks=2 \
--conf spark.task.cpus=1 \
--conf spark.locality.wait=0 \
--conf spark.shuffle.manager=com.nvidia.spark.rapids.spark301.RapidsShuffleManager \
--conf spark.shuffle.service.enabled=false \
--conf spark.rapids.shuffle.maxMetadataSize=1MB \
--conf spark.rapids.shuffle.transport.enabled=true \
--conf spark.rapids.shuffle.compression.codec=none \
--conf spark.executorEnv.UCX_TLS=cuda_copy,cuda_ipc,rc \
--conf spark.executorEnv.UCX_ERROR_SIGNALS= \
--conf spark.executorEnv.UCX_MAX_RNDV_RAILS=1 \
--conf spark.executorEnv.UCX_CUDA_IPC_CACHE=y \
--conf spark.executorEnv.UCX_MEMTYPE_CACHE=n \
--conf spark.executorEnv.LD_LIBRARY_PATH=$LD_LIBRARY_PATH \
--conf spark.rapids.shuffle.ucx.bounceBuffers.size=4MB \
--conf spark.rapids.shuffle.ucx.bounceBuffers.device.count=32 \
--conf spark.rapids.shuffle.ucx.bounceBuffers.host.count=32 \
--conf spark.sql.shuffle.partitions=200 \
--conf spark.plugins=com.nvidia.spark.SQLPlugin \
--conf spark.rapids.memory.pinnedPool.size=4G \
--conf spark.rapids.memory.host.spillStorageSize=12G \
--conf spark.rapids.memory.gpu.pool=ARENA \
--conf spark.rapids.memory.gpu.allocFraction=0.1 \
--conf spark.rapids.memory.gpu.maxAllocFraction=1 \
--conf spark.rapids.sql.castFloatToString.enabled=true \
--conf spark.rapids.sql.castStringToFloat.enabled=true \
--conf spark.rapids.sql.castStringToInteger.enabled=true \
--conf spark.rapids.sql.variableFloatAgg.enabled=true \
--conf spark.rapids.sql.incompatibleOps.enabled=true \
--conf spark.hadoop.fs.s3a.access.key=$AWS_ACCESS_KEY_ID \
--conf spark.hadoop.fs.s3a.secret.key=$AWS_SECRET_ACCESS_KEY \
--conf spark.hadoop.fs.s3a.endpoint=swiftstack-maglev.ngc.nvidia.com \
--conf spark.hadoop.fs.s3a.path.style.access=true \
--conf spark.hadoop.fs.s3a.connection.maximum=256 \
...
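For anyone trying to match this setup, the following Python sketch works out the task concurrency implied by the flags above. It is only arithmetic on the listed values. The function name `concurrency_summary` is hypothetical, and the executor count assumes a scheduler that hands out `spark.cores.max` cores in units of `--executor-cores` and has enough cores available in the cluster.

```python
from decimal import Decimal

# Values copied from the spark-submit flags listed above.
conf = {
    "spark.executor.cores": "8",
    "spark.cores.max": "224",
    "spark.task.cpus": "1",
    "spark.executor.resource.gpu.amount": "1",
    "spark.task.resource.gpu.amount": "0.1",
    "spark.rapids.sql.concurrentGpuTasks": "2",
}


def concurrency_summary(conf):
    """Derive per-executor task slots from the CPU and GPU settings."""
    # Slots allowed by CPU cores: executor cores divided by cores per task.
    cpu_slots = int(conf["spark.executor.cores"]) // int(conf["spark.task.cpus"])
    # Slots allowed by the GPU resource: GPUs per executor over GPU share per task.
    # Decimal avoids binary floating-point surprises with 0.1.
    gpu_slots = int(
        Decimal(conf["spark.executor.resource.gpu.amount"])
        // Decimal(conf["spark.task.resource.gpu.amount"])
    )
    return {
        "cpu_slots": cpu_slots,
        "gpu_slots": gpu_slots,
        # The tighter of the two limits decides how many tasks run at once.
        "tasks_per_executor": min(cpu_slots, gpu_slots),
        # Of those tasks, only this many may use the GPU at the same time.
        "tasks_on_gpu": int(conf["spark.rapids.sql.concurrentGpuTasks"]),
        # Assumption: executors are carved out of spark.cores.max.
        "executors": int(conf["spark.cores.max"]) // int(conf["spark.executor.cores"]),
    }


summary = concurrency_summary(conf)
for key, value in summary.items():
    print(f"{key}: {value}")
```

Under these assumptions each executor runs up to 8 tasks concurrently, limited by CPU cores rather than by the 0.1 GPU share, and at most 2 of them use the GPU at once.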

Additional context

This issue only tracks the error. I will send the internal links and further details by email.