Closed

Labels

bug, help wanted

Description

🐛 Bug

When returning dict of values from test_step() in LightningModule:

def test_step(self, batch, batch_idx):

output = self.layer(batch)

loss = self.loss(batch, output)

self.log('fake_test_acc', loss)

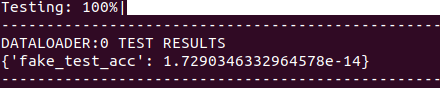

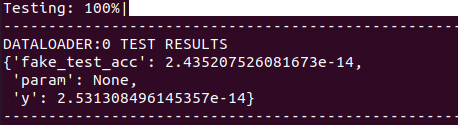

return {"y": loss, "param": None}The logged values and returned values are both displayed in terminal when testing:

If we declare a test_epoch_end() method in the LightningModule, the extra values are ignored:

    def test_epoch_end(self, outputs) -> None:
        pass

Is this intentional behaviour?

This gets especially confusing when returning a non-scalar tensor from test_step():

    def test_step(self, batch, batch_idx):
        output = self.layer(batch)
        loss = self.loss(batch, output)
        self.log('fake_test_acc', loss)
        return {"output": output}

Without declaring a test_epoch_end() method, I get the following error:

ValueError: only one element tensors can be converted to Python scalars

But again, this exception is not raised if an empty test_epoch_end() method is declared in my LightningModule.

To Reproduce

Repo with boring model:

https://github.com/hobogalaxy/lit-bug2

Expected behavior

I believe the returned values should simply be ignored in the terminal output and shouldn't have the potential to crash the run, right?
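To make the expected behaviour concrete, here is a rough sketch of the kind of filtering I have in mind: skip anything in the returned dict that cannot be turned into a single Python number instead of raising. `scalar_items` and `FakeTensor` are hypothetical names made up for this illustration and are not part of pytorch-lightning; `FakeTensor` only mimics the `numel()` and `item()` methods of `torch.Tensor` so the snippet runs without torch installed.

```python
from numbers import Number


class FakeTensor:
    """Stand-in exposing the two torch.Tensor methods the helper relies on."""

    def __init__(self, values):
        self.values = list(values)

    def numel(self):
        return len(self.values)

    def item(self):
        # Mirrors the error message reported in this issue.
        if len(self.values) != 1:
            raise ValueError(
                "only one element tensors can be converted to Python scalars"
            )
        return self.values[0]


def scalar_items(step_output):
    """Keep only the dict entries that can be converted to a Python float.

    Hypothetical helper for illustration; not an actual Lightning function.
    """
    kept = {}
    for key, value in step_output.items():
        if isinstance(value, Number):
            kept[key] = float(value)
        elif hasattr(value, "numel") and hasattr(value, "item"):
            # Tensor-like object: only single-element tensors are scalars.
            if value.numel() == 1:
                kept[key] = float(value.item())
        # Everything else (None, multi-element tensors, ...) is skipped.
    return kept


step_output = {
    "y": FakeTensor([0.25]),
    "param": None,
    "output": FakeTensor([0.1, 0.2]),
}
print(scalar_items(step_output))
```

With something like this, the `None` entry and the non-scalar `output` would be dropped quietly rather than printed or causing a crash.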

Environment

- CUDA:
    - GPU:
        - GeForce RTX 2060
    - available: True
    - version: 11.1
- Packages:
    - numpy: 1.19.2
    - pyTorch_debug: False
    - pyTorch_version: 1.8.0
    - pytorch-lightning: 1.2.4
    - tqdm: 4.59.0
- System:
    - OS: Linux
    - architecture:
        - 64bit
        - ELF
    - processor: x86_64
    - python: 3.8.8
    - version: #75-Ubuntu SMP Fri Feb 19 18:03:38 UTC 2021
