Description

Background

Currently we write our checkpoint and profiler output to the Logger, in the case that the user does not specify a path.

(Note: this covers the case of a single logger; the case of multiple loggers will be discussed in a separate issue.)

The exact priority followed is:

Profiler:

- if a `dirpath` is provided, write to it
- if not, write to the Logger
- if there is no Logger, write to `default_root_dir`
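The profiler priority above can be sketched as a small function. This is only an illustration of the fallback order, not Lightning's internal code: the function name and the `logger_dir` parameter are hypothetical, with `logger_dir` standing for whatever directory the single logger exposes (or `None` when there is no logger).

```python
from typing import Optional


def resolve_profiler_dir(
    dirpath: Optional[str],
    logger_dir: Optional[str],
    default_root_dir: str,
) -> str:
    """Pick the profiler output location following the priority listed above.

    All names are illustrative assumptions, not Lightning's actual API.
    """
    if dirpath is not None:
        # 1. An explicit dirpath always wins.
        return dirpath
    if logger_dir is not None:
        # 2. Otherwise fall back to the logger's directory.
        return logger_dir
    # 3. With no logger, use the Trainer's default_root_dir.
    return default_root_dir
```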

Checkpointing:

- if a `dirpath` is provided, write to it
- if not, and a `weights_save_path` is provided in the Trainer, write to it (deprecated in "[RFC] Deprecate `weights_save_path` from the Trainer constructor", #11768)
- if not, write to the Logger
- if there is no Logger, write to `default_root_dir`
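The checkpointing priority has one extra step for `weights_save_path`. A minimal sketch, assuming the same hypothetical `logger_dir` stand-in for the logger's directory; it only selects the base location and does not model the "checkpoints" subdirectory or anything else the real callback does.

```python
from typing import Optional


def resolve_checkpoint_base_dir(
    dirpath: Optional[str],
    weights_save_path: Optional[str],
    logger_dir: Optional[str],
    default_root_dir: str,
) -> str:
    """Return the first configured location, in the priority order listed above.

    Function and parameter names are illustrative assumptions only.
    """
    # Ordered from highest to lowest priority.
    for candidate in (dirpath, weights_save_path, logger_dir):
        if candidate is not None:
            return candidate
    # Nothing was configured and there is no logger.
    return default_root_dir
```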

Motivation

There are several issues and inconsistencies with how we currently write to the Logger.

- The checkpoint output goes into a dedicated directory called "checkpoints", but the profiler output has no corresponding "profiler" directory; the individual output files sit directly in one of the logger's directories.

- There are inconsistencies in terms of where the loggers store the profiler output (in the case where no dirpath is provided). Most of the loggers store the output in their

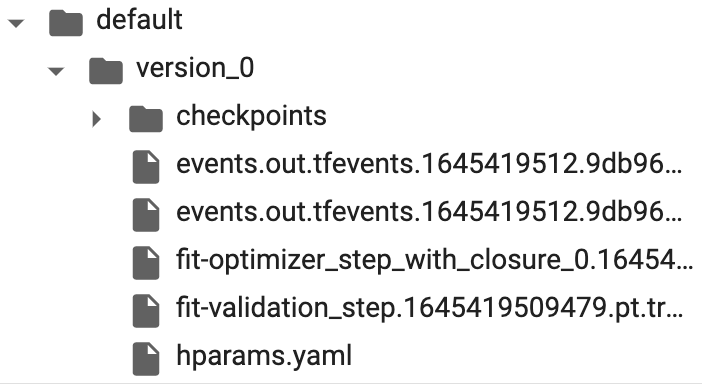

save_dir, but the TensorBoardLogger stores the profiler output in the same place where it stores the checkpoints -save_dir/name/version.

https://github.com/PyTorchLightning/pytorch-lightning/blob/e15a66412cc220fa241ec7cbb64b339a2f124761/pytorch_lightning/trainer/trainer.py#L2095-L2107

Why do we have different behavior for the TensorBoardLogger? Also, why is the profiler output not in the same place as the checkpointing output?

Pitch

- When logging to the logger, put the profiler output in a directory called "profiler".
- All loggers should store their output in `save_dir/name/version`. Introduce a new property on the Logger base API called `log_dir` (TBLogger and CSVLogger already have it), which returns this path and is the place to store profiler and checkpointing output.

This will allow us to clean up this code here:

https://github.com/PyTorchLightning/pytorch-lightning/blob/e15a66412cc220fa241ec7cbb64b339a2f124761/pytorch_lightning/trainer/trainer.py#L2095-L2107

And also massively simplify this code:

https://github.com/PyTorchLightning/pytorch-lightning/blob/e15a66412cc220fa241ec7cbb64b339a2f124761/pytorch_lightning/callbacks/model_checkpoint.py#L582-L596

replacing it with simply `ckpt_path = os.path.join(trainer.log_dir, "checkpoints")` after #11768 is also complete.
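To make the pitch concrete, here is a rough sketch of what a shared `log_dir` property could look like and how the two output directories would follow from it. `SketchLogger` and `output_dirs` are hypothetical names invented for this illustration, and using the version verbatim as a path component is an assumption; existing loggers may format it differently.

```python
import os
from typing import Dict, Union


class SketchLogger:
    """Hypothetical stand-in for a logger base class; not Lightning's actual API."""

    def __init__(self, save_dir: str, name: str = "default", version: Union[int, str] = 0):
        self.save_dir = save_dir
        self.name = name
        self.version = version

    @property
    def log_dir(self) -> str:
        # Assumption: the version is used as-is as the last path component.
        return os.path.join(self.save_dir, self.name, str(self.version))


def output_dirs(logger: SketchLogger) -> Dict[str, str]:
    """Derive both output locations from the single log_dir property."""
    return {
        "checkpoints": os.path.join(logger.log_dir, "checkpoints"),
        "profiler": os.path.join(logger.log_dir, "profiler"),
    }
```

With every logger exposing the same property, the checkpoint and profiler code paths would no longer need logger-specific branches to decide where to write.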

cc @awaelchli @edward-io @Borda @ananthsub @rohitgr7 @kamil-kaczmarek @Raalsky @Blaizzy @ninginthecloud @carmocca @kaushikb11