+  +

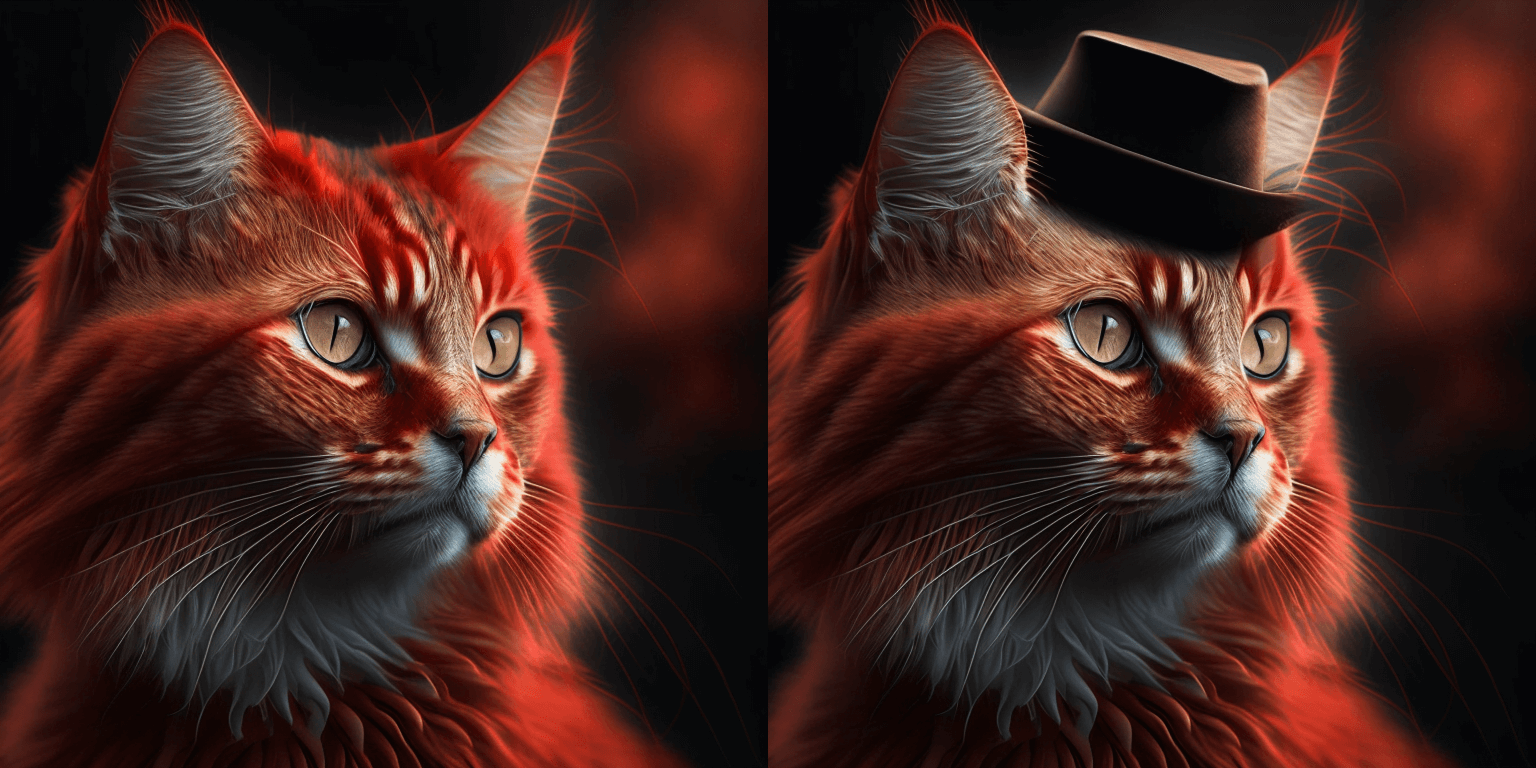

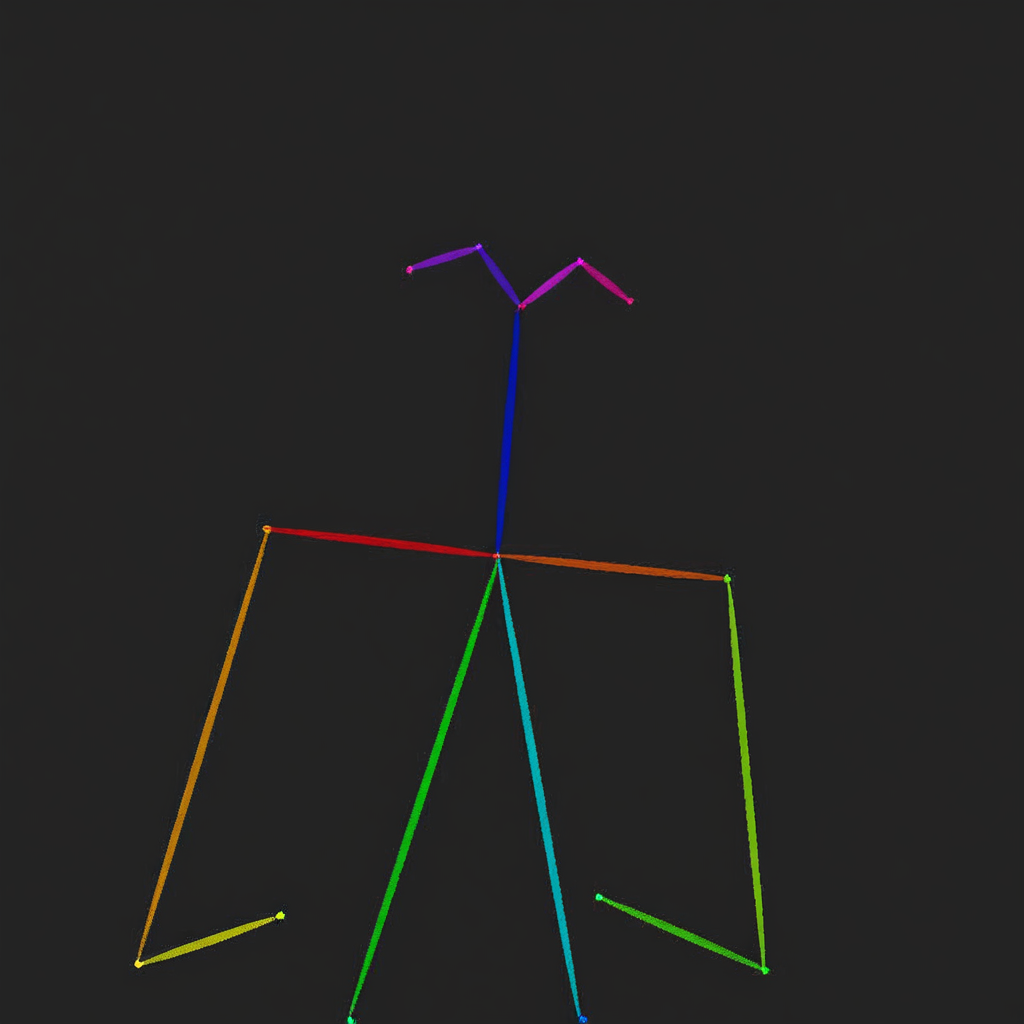

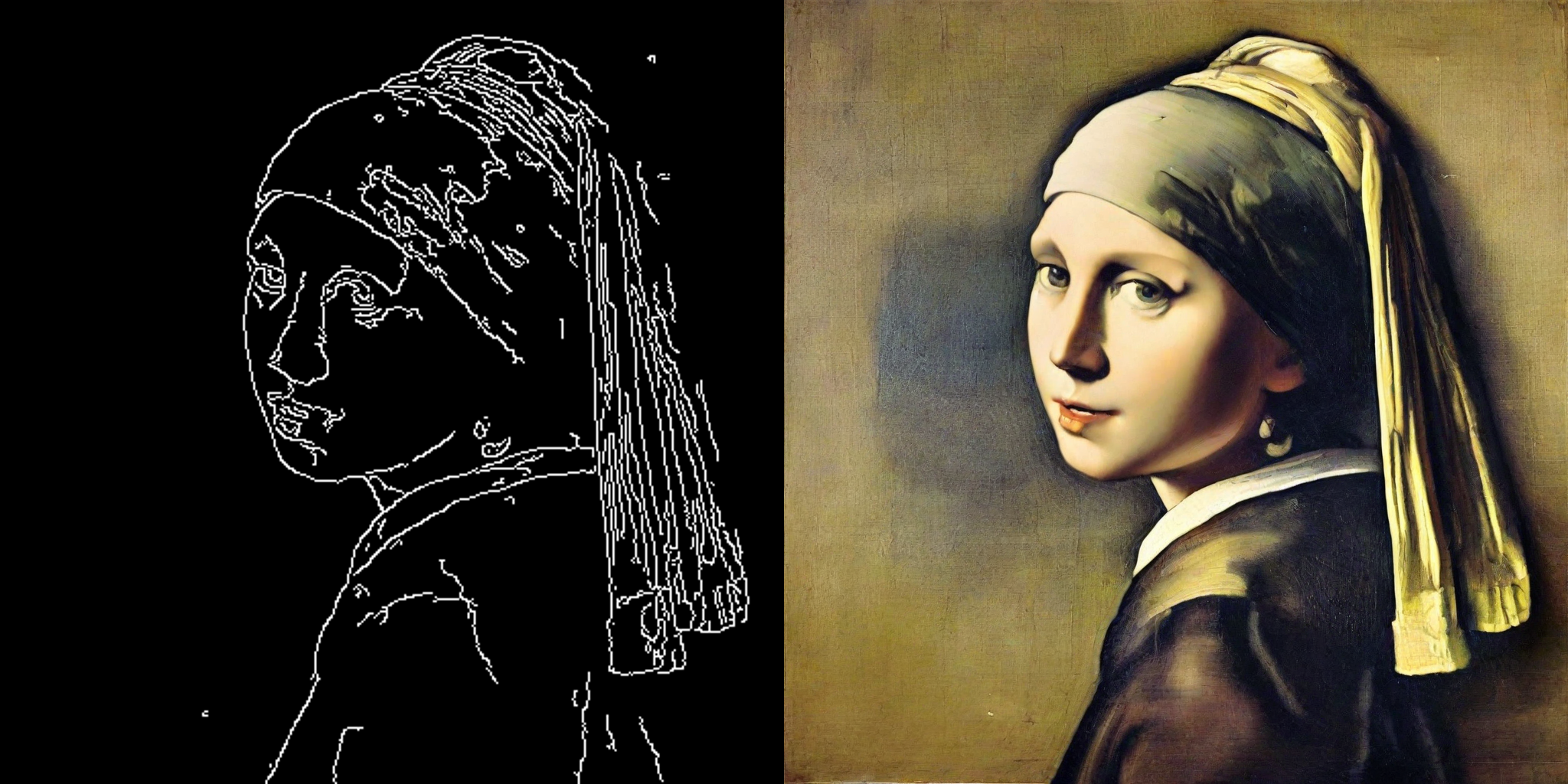

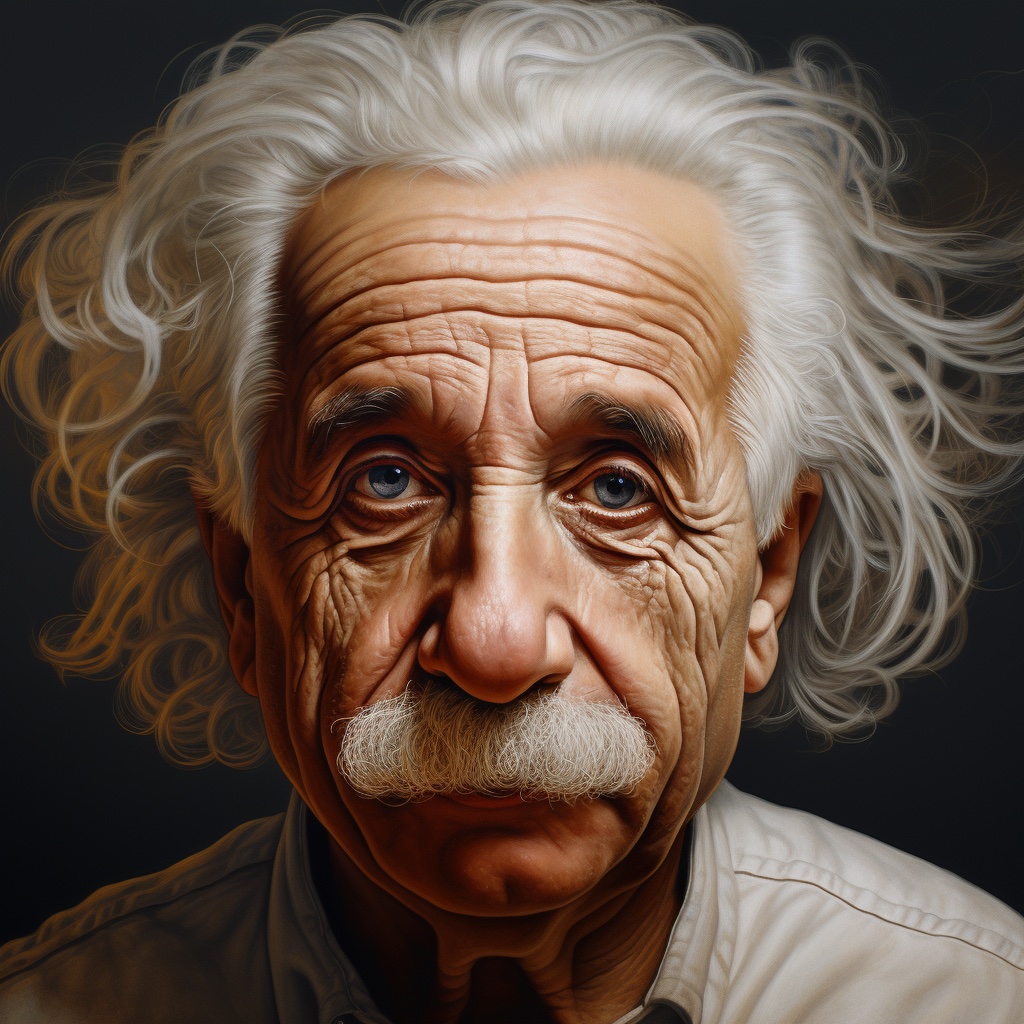

+ original image

+

+  +

+

+  +

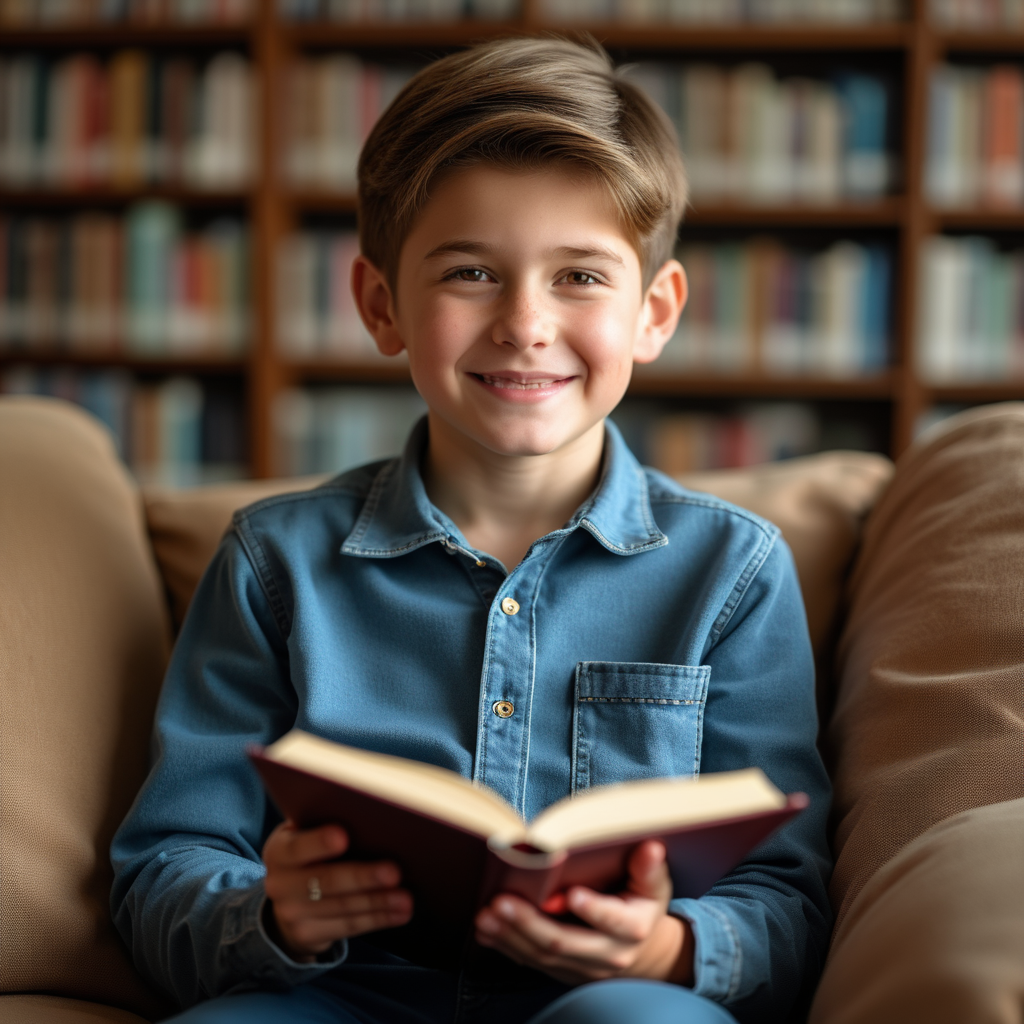

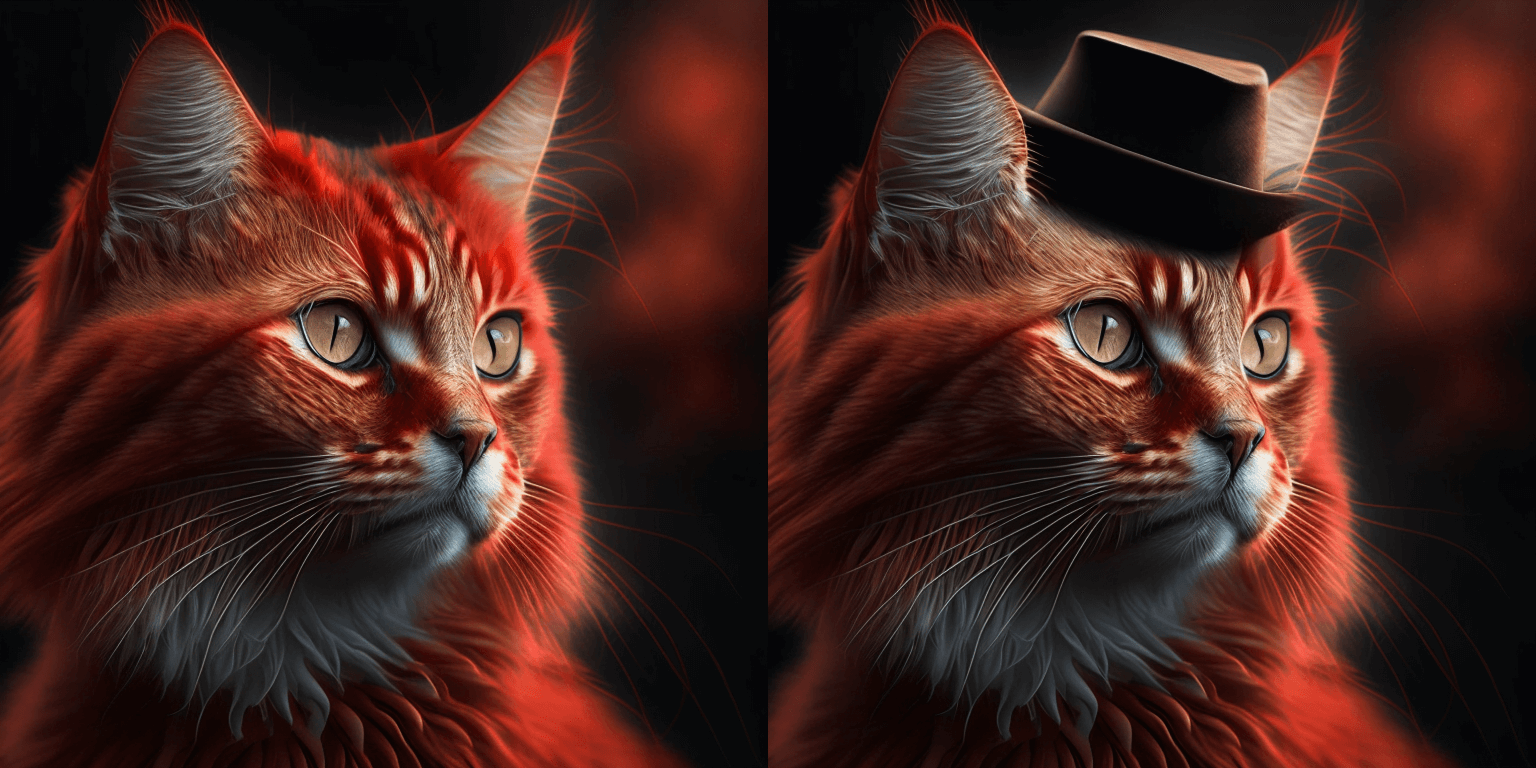

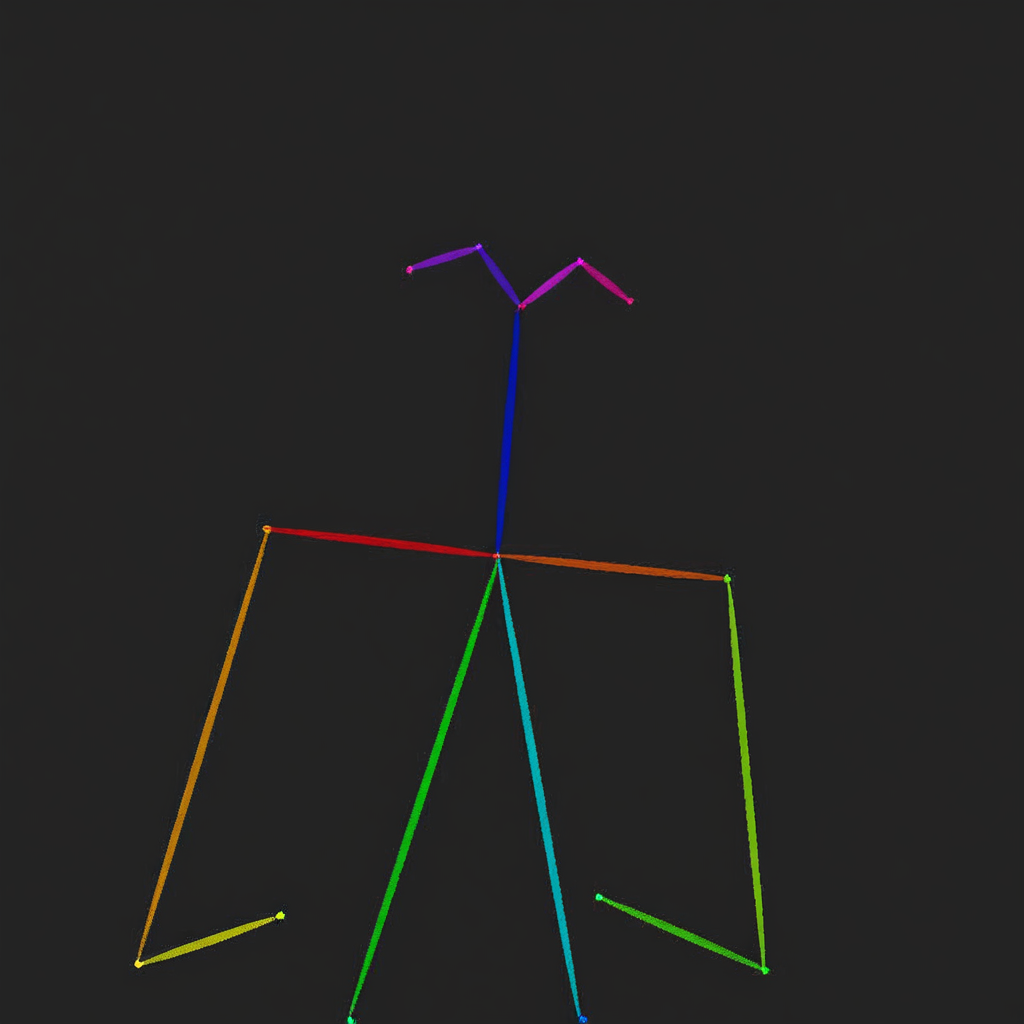

+ edited image

+

+ +

+  +

+  +

+  +

+  +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+  +

+  +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+  +

+  +

+  +

+  +

+  +

+  +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+ +

+  +

+  +

+ +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+  +

+ +

+ +

+ +

+ +

+ +

+ +

+  +

+  +

+  +

+  +

+  +

+ +

+ +

+  +

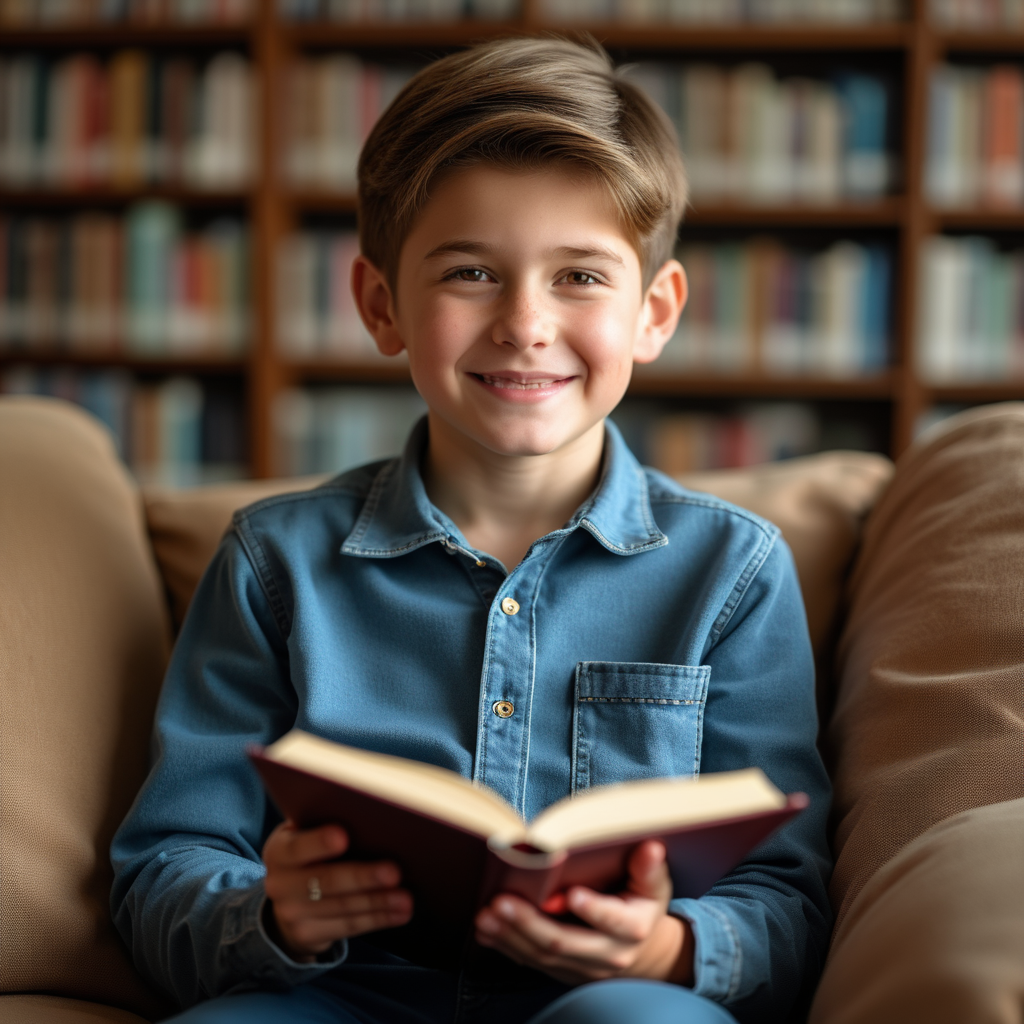

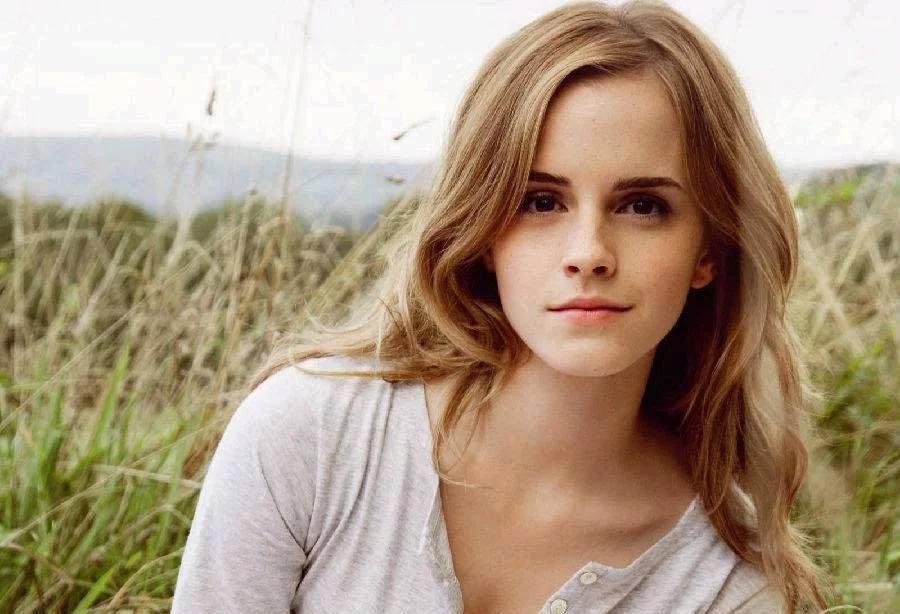

+ | Face Image | -Video | -Description | -

|---|---|---|

|

-  |

- The video, in a beautifully crafted animated style, features a confident woman riding a horse through a lush forest clearing. Her expression is focused yet serene as she adjusts her wide-brimmed hat with a practiced hand. She wears a flowy bohemian dress, which moves gracefully with the rhythm of the horse, the fabric flowing fluidly in the animated motion. The dappled sunlight filters through the trees, casting soft, painterly patterns on the forest floor. Her posture is poised, showing both control and elegance as she guides the horse with ease. The animation's gentle, fluid style adds a dreamlike quality to the scene, with the woman’s calm demeanor and the peaceful surroundings evoking a sense of freedom and harmony. | -

|

-  |

- The video, in a captivating animated style, shows a woman standing in the center of a snowy forest, her eyes narrowed in concentration as she extends her hand forward. She is dressed in a deep blue cloak, her breath visible in the cold air, which is rendered with soft, ethereal strokes. A faint smile plays on her lips as she summons a wisp of ice magic, watching with focus as the surrounding trees and ground begin to shimmer and freeze, covered in delicate ice crystals. The animation’s fluid motion brings the magic to life, with the frost spreading outward in intricate, sparkling patterns. The environment is painted with soft, watercolor-like hues, enhancing the magical, dreamlike atmosphere. The overall mood is serene yet powerful, with the quiet winter air amplifying the delicate beauty of the frozen scene. | -

|

-  |

- The animation features a whimsical portrait of a balloon seller standing in a gentle breeze, captured with soft, hazy brushstrokes that evoke the feel of a serene spring day. His face is framed by a gentle smile, his eyes squinting slightly against the sun, while a few wisps of hair flutter in the wind. He is dressed in a light, pastel-colored shirt, and the balloons around him sway with the wind, adding a sense of playfulness to the scene. The background blurs softly, with hints of a vibrant market or park, enhancing the light-hearted, yet tender mood of the moment. | -

|

-  |

- The video captures a boy walking along a city street, filmed in black and white on a classic 35mm camera. His expression is thoughtful, his brow slightly furrowed as if he's lost in contemplation. The film grain adds a textured, timeless quality to the image, evoking a sense of nostalgia. Around him, the cityscape is filled with vintage buildings, cobblestone sidewalks, and softly blurred figures passing by, their outlines faint and indistinct. Streetlights cast a gentle glow, while shadows play across the boy's path, adding depth to the scene. The lighting highlights the boy's subtle smile, hinting at a fleeting moment of curiosity. The overall cinematic atmosphere, complete with classic film still aesthetics and dramatic contrasts, gives the scene an evocative and introspective feel. | -

|

-  |

- The video features a baby wearing a bright superhero cape, standing confidently with arms raised in a powerful pose. The baby has a determined look on their face, with eyes wide and lips pursed in concentration, as if ready to take on a challenge. The setting appears playful, with colorful toys scattered around and a soft rug underfoot, while sunlight streams through a nearby window, highlighting the fluttering cape and adding to the impression of heroism. The overall atmosphere is lighthearted and fun, with the baby's expressions capturing a mix of innocence and an adorable attempt at bravery, as if truly ready to save the day. | -

-

-  -

-  -

-  -

- -

- -

-  -

-  -

-  -

-  -

-  -

-  -

- -

- -

- -

- -

- -

- -

- -

- -

- -

- -

- -

- -

- -

- -

-  -

-  -

- -

- -

- -

- -

- -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

- -

-  -

-  -

- -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

-  -

- -

- -

- -

-  -

-  -

-  -

-  -

-  -

- -

- -

- -

- -

-  -

-  -

-  -

-  -

- -

-  -

-