-

Notifications

You must be signed in to change notification settings - Fork 6k

[web] Support external textures for CanvasKit renderer #37890

Conversation

4338dd2 to

7cbaccb

Compare

|

Gold has detected about 72 new digest(s) on patchset 6. |

|

I've built this on my local machine and it seems to work flawlessly so far. Great work, and something the engine has been sorely lacking for our purposes. |

|

This pull request executed golden file tests, but it has not been updated in a while (20+ days). Test results from Gold expire after as many days, so this pull request will need to be updated with a fresh commit in order to get results from Gold. |

|

@xtyxtyx – would you rebase and push a new commit? Just to get new goldens would be great! |

1030927 to

dba4dfb

Compare

yjbanov

left a comment

yjbanov

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sorry for the delayed review. Thank you for the contribution! This is not far from being submittable.

| final PlatformViewRegistry platformViewRegistry = PlatformViewRegistry(); | ||

|

|

||

| /// A registry for textures. | ||

| class TextureRegistry { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We are working on removing web-specific API from dart:ui because it confuses compilers and code analyzers. Instead, we expose API through JS-interop. Let's consult with @ditman on how best to do this for textures. We might want to do it in a way that's easy to expose through flutter.js API.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Realistically we don't have a good place to put this in a way that is ergonomic for users to use (and a workaround for the analyzer to freak out less when using these web-only APIs).

In practice, the API surface of dart:ui has barely changed in the last couple of years, and adding one more object to the mix is not too bad.

@yjbanov I think this can land in dart:ui, and whenever we come up with a better location for all these extra methods (dart:web_ui?), move them there. WDYT?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@ditman Is there any way we could have two separate, clearly named libraries in the future, something like dart:native_ui and dart:web_ui? I feel like dart:ui in and of itself has been a point of confusion for too long for those working with the web platform as a target. I would assume that for backwards compatibility (if necessary) dart:ui could just re-export (certain members of) one or both - much like Rust's std re-exports (almost?) all of the core package members.

Personally, I would love for these to be named in a way that clearly shows they're building blocks for Flutter, and not really useful on their own when using pure Dart, but that might be too big a deviation from the norm at this point. I don't know, for example, if it would be possible technically to implement packages prefixed with flutter, like flutter:native and flutter:web.

Long story short, I would appreciate a clear separation between Dart and Flutter, where one ends and the other begins, and the same for native and web targets. But I'm sure this isn't the place to discuss this in detail, so I'll be looking out for issues related to this in the future, if any.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@skreborn you're not alone, and this is such a big change that you'll know when it's coming for sure! The decision of making the flutter engine (dart:ui) a dart library predates me, by years! I guess it's because the engine needs some extra help from the Dart compilers, but I haven't dug too deep.

(I'd be happy if we could get to a point where dart:ui does not have anything web-specific, and dart:web_ui (or whatever its final name is) has only web-specific things, so it's clear from your imports if you're calling web-only APIs. Not sure what needs to be done for that to happen, haven't looked into the structural bits of the code yet!)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

PS: We now have a dart:ui_web package for things like this 😅

Thank @eyebrowsoffire and @mdebbar!

| class CkTexture { | ||

| CkTexture(this.source); | ||

|

|

||

| final Object source; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm wondering if accepting all possible texture sources is future-proof enough. In particular, Skwasm will move to a multi-threaded rendering model where GPU work will be done through an OffscreenCanvas in a web worker. I'm not sure if in that model we can use <img> elements as texture sources since there's no DOM in web workers. Maybe there's a way to first convert <img> to something that's transferable, such as VideoFrame, and then send it to the worker? cc @eyebrowsoffire for guidance.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The issue you bring up is something that I'm going to have to work out regardless of this change, and I haven't gotten to the point with the multi-threaded rendering where I have to decide how I'm going to solve it, but I think this should be fine. A VideoFrame can be constructed from an HTMLImageElement if we end up going that route: https://developer.mozilla.org/en-US/docs/Web/API/VideoFrame/VideoFrame

|

This is cool, but I can't help but think that this isn't really what the Texture element should be mapping to. A media element can be used to create a texture, but really is not the same thing as a texture. Shouldn't this be using Accepting a MediaElement in registerTexture certainly doesn't prevent this method from also accepting WebGL textures being passed to registerTexture, but it's a bit higher level than a texture and doesn't really solve the max web GL context problem, since anyone attempting to build widgets that use actual WebGL textures directly would still be forced to burn additional contexts and make more canvas elements to do so. |

Is it possible for Skia and ThreeJS to share the same WebGL context? Won't they compete to alter the WebGL state and cause rendering problems? Also is there a way to pass a WebGL texture to |

|

It looks like makeImageFromTexture says it accepts a WebGLTexture here: https://github.com/google/skia/blob/main/modules/canvaskit/npm_build/types/index.d.ts It's certainly possible to share a WebGL context with multiple libraries. In this case you wouldn't want to have both rendering directly the canvas, but you could have Three.JS render into a frame buffer and then using that frame buffer as a texture that you pass to Skia. Since you can't share textures across WebGL contexts, that's really the only way you could ever really take advantage of the native textures using the Texture element. https://threejs.org/docs/#api/en/renderers/WebGLRenderer That said, Three.js was just an example. Supporting raw textures would also allow for loading of MediaElements into the textures without having to go through the internal methods like lazyMakeImageFromTextureSource (that's what lazyMakeImageFromTextureSource is doing under the covers anyway): https://developer.mozilla.org/en-US/docs/Web/API/WebGL_API/Tutorial/Using_textures_in_WebGL I do think it's useful for the higher level APIs to be exposed, but all the Ck stuff should probably be moved into it's own package that can be imported directly if people want to do that. Being able to cast Canvas to CkCanvas in a CustomPainter for a web specific renderer would be a cleaner way to expose that, instead of making them use the Texture element, which should be lower level. |

That's good to know. I was under the impression that it was not possible. There is another problem if we go this way. When It seems possible to use |

|

Golden file changes have been found for this pull request. Click here to view and triage (e.g. because this is an intentional change). If you are still iterating on this change and are not ready to resolve the images on the Flutter Gold dashboard, consider marking this PR as a draft pull request above. You will still be able to view image results on the dashboard, commenting will be silenced, and the check will not try to resolve itself until marked ready for review. |

Well I think that's actually THE problem. Because all this stuff is internal and hidden in the plugin, there is currently no way to know what canvas to use when trying to get access to the correct webGL context. But it's right there as a property of the Surface that is used to create the Canvas for the render frame. It's not that it can't be known so much as that there aren't currently any hooks to know. I think the only way to really allow a useful implementation of Texture on the web is to solve that problem, because you need access to the WebGL context if you want to do anything with native Textures on web. I don't think it's a hard problem to solve, just requires a package somewhere that can be imported and a cast. Maybe something like: |

On iOS there is the FlutterTexture protocol which implementers can implement to be able to use the TextureRegistry (https://api.flutter.dev/objcdoc/Protocols/FlutterTexture.html). Perhaps another way to solve the problem would be to do something similar and have an abstract class that must be implemented and passed to the TextureRegistry when registering the texture? // web_ui/texture.dart -- looks like the current idea is for this kind of thing to be in web_ui package?

class TextureContext {

final CanvasElement canvas;

final WebGLRenderingContext gl;

}

class ImageInfo {

final AlphaType alphaType;

final ColorSpace colorSpace;

final ColorType colorType;

final int height;

final int width;

}

abstract class FlutterTexture {

FlutterTexture(this.info);

final ImageInfo info;

void copy(TextureContext context, WebGLTexture destination);

}From here a user could manually do whatever they need to their texture using the standard WebGL apis, and SkSurface.makeImageFromTexture can be used to get it into CanvasKit. This is probably a better mapping to how things work on the other platforms with regard to textures. |

Maybe then |

|

Yeah, let's hold off adding texture support until we know how to deal with multiple GL contexts. I see this resolving in a few ways:

|

|

MakeLazyImageFromTextureSource can already do what's needed for SVG and VideoFrames can't it? Maybe an alternative pathway could be simply to have a helper exposed in dart:web_ui that returns a CkImage and allows it to be updated similar to CkBrowserImageDecoder, but against html.MediaElement instead of a byte array. Not being able to render things like SVG or Video without introducing new GL contexts is a serious problem for us. Texture element is one potential way to solve it, but it's not the only one. That could allow the Texture implementation to be pushed out a bit, and is probably a better match for what this pull request was attempting to do. |

|

@yjbanov what do you think about exposing a helper to create / update an Image from a media element somewhere as an alternative? We are basically stuck here. Either we move off Flutter, or we just can't do video conferencing with more than a couple participants due to the same issue. Not having any way currently to stop Flutter from creating additional webGL contexts and crashing the renderer is a huge problem for so many basic scenarios. We need some kind of escape hatch. |

|

Has this rotted? |

|

@kevmoo no real progress from flutter team on this issue yet. Put together a proof of concept pull request to work around some problems (#41562), but no feedback on it so not sure if the team cares much about the problem. It's definitely a massive problem for us and seems bad to us that it's so easy to crash the renderer doing basic things, but we can work in a fork with the proof of concept if the team doesn't want to solve it. We definitely can't ship anything into prod with flutter web that uses the official flutter bits because of this. |

|

This is mostly waiting for the results of the single GL context investigation that @hterkelsen is doing right now. Once we have a good understanding of how to manage GL contexts we should be able to fit this in. I'm thinking we should start with supporting |

|

@yjbanov renderer with single context #41562 with the z-index moving the layers behind and clearing canvas works great in our prototype, maybe not the best fit for non rectangular elements (for instance SVG with transparent backgrounds) where you'd need a mask to do the appropriate cut in many cases. Support for |

|

@harryterkelsen @yjbanov what should happen with this PR? |

|

This is still waiting for the single GL context design to land. Other than that the overall code looks OK. |

what is the progress of the design |

|

Follow progress of the Single GL Context PR here: |

|

@Hixie @yjbanov still think this PR is the wrong approach to solve the SVG problem (vs just an image constructor that takes a media element), but don't see how #42672 would impact this much since it still will blow up when you reach an overlay limit. It seems to just give a different error for the same fundamental architectural problem in the renderer. |

|

@eyebrowsoffire I think this commit should probably be closed due to #45256 landing. This change is just another way of implementing the same thing, not really a Texture implementation. |

Agree. I feel

What's your idea about these? @eyebrowsoffire |

|

@xtyxtyx Even if the call were synchronous, the textures get asynchronously uploaded to the graphics card by teximage2d, so there is definitely some delay when putting frames out. The promise resolve happens pretty immediately though, not sure what the reason was behind making the value a promise. Looks to me at first glance like it's pretty much always available right on the next microtask: teximage2d can certainly accept the dom element directly and bypass that promise resolution (maybe Skia has a more direct call that does this), but not sure if it would make any difference in practice for how long it takes the texture to get uploaded to the GPU. We used to do something similar with video elements in pixi.js with video, here's what they do for reference, which works well enough in practice: |

|

@xtyxtyx also note that videos have requestVideoFrameCallback to notify when a new frame is available, so for updating a video texture, the process would be something like request a video frame callback, createImageBitmap, request video frame callback, etc. so you already do have some waiting built into the process and some time for the promise to resolve between video frames already, but yeah if you didn't have to wait for a promise to resolve because the texture was being updated with the webgl calls directly from the DOM element, the code would be a little easier to deal with and you could definitely imagine the video maybe being a frame behind where it would be otherwise due to the promise resolution and a setstate after it's ready. Maybe there is a slightly easier variation of what createImageBitmap is doing. Certainly it's possible with the current browser APIs to skip the promise and do the same thing. |

Chromium-based browsers have a fast path for this operation, but Firefox does not. In Firefox, such operation will always involve a GPU-to-CPU-to-GPU copy if you suspect it might. Here is a demo to show this: Update: After carefully looking at the picture, I realised that <!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Document</title>

</head>

<body>

<canvas id="canvas2d" width="2048" height="2048"></canvas>

<canvas id="canvas3d" width="2048" height="2048"></canvas>

<script>

const canvas2d = document.getElementById('canvas2d');

const ctx2d = canvas2d.getContext('2d');

const canvas3d = document.getElementById('canvas3d');

const ctx3d = canvas3d.getContext('webgl');

ctx3d.clearColor(0, 0, 0, 1);

ctx3d.clear(ctx3d.COLOR_BUFFER_BIT);

const vertexShaderSource = `

attribute vec4 a_position;

void main() {

gl_Position = a_position;

}

`;

const fragmentShaderSource = `

precision mediump float;

uniform sampler2D u_image;

uniform vec2 u_textureSize;

uniform vec2 u_resolution;

void main() {

vec2 onePixel = vec2(1.0, 1.0) / u_textureSize;

vec2 coord = gl_FragCoord.xy / u_resolution;

vec4 color = texture2D(u_image, coord);

gl_FragColor = color;

}

`;

const program = ctx3d.createProgram();

const vertexShader = ctx3d.createShader(ctx3d.VERTEX_SHADER);

const fragmentShader = ctx3d.createShader(ctx3d.FRAGMENT_SHADER);

ctx3d.shaderSource(vertexShader, vertexShaderSource);

ctx3d.shaderSource(fragmentShader, fragmentShaderSource);

ctx3d.compileShader(vertexShader);

ctx3d.compileShader(fragmentShader);

ctx3d.attachShader(program, vertexShader);

ctx3d.attachShader(program, fragmentShader);

ctx3d.linkProgram(program);

ctx3d.useProgram(program);

var frame = 0;

async function copy2dTo3d() {

ctx2d.clearRect(0, 0, canvas2d.width, canvas2d.height);

ctx2d.fillStyle = 'red';

ctx2d.font = '30px Arial';

ctx2d.fillText(`frame: ${frame++}`, 10, 50);

const bitmap = await createImageBitmap(canvas2d);

const positionLocation = ctx3d.getAttribLocation(program, 'a_position');

const positionBuffer = ctx3d.createBuffer();

ctx3d.bindBuffer(ctx3d.ARRAY_BUFFER, positionBuffer);

ctx3d.bufferData(ctx3d.ARRAY_BUFFER, new Float32Array([

-1, -1,

-1, 1,

1, -1,

1, -1,

-1, 1,

1, 1,

]), ctx3d.STATIC_DRAW);

ctx3d.enableVertexAttribArray(positionLocation);

ctx3d.vertexAttribPointer(positionLocation, 2, ctx3d.FLOAT, false, 0, 0);

const texture = ctx3d.createTexture();

ctx3d.bindTexture(ctx3d.TEXTURE_2D, texture);

ctx3d.texImage2D(ctx3d.TEXTURE_2D, 0, ctx3d.RGBA, ctx3d.RGBA, ctx3d.UNSIGNED_BYTE, bitmap);

ctx3d.texParameteri(ctx3d.TEXTURE_2D, ctx3d.TEXTURE_WRAP_S, ctx3d.CLAMP_TO_EDGE);

ctx3d.texParameteri(ctx3d.TEXTURE_2D, ctx3d.TEXTURE_WRAP_T, ctx3d.CLAMP_TO_EDGE);

ctx3d.texParameteri(ctx3d.TEXTURE_2D, ctx3d.TEXTURE_MIN_FILTER, ctx3d.NEAREST);

ctx3d.texParameteri(ctx3d.TEXTURE_2D, ctx3d.TEXTURE_MAG_FILTER, ctx3d.NEAREST);

const textureSizeLocation = ctx3d.getUniformLocation(program, 'u_textureSize');

ctx3d.uniform2f(textureSizeLocation, bitmap.width, bitmap.height);

const resolutionLocation = ctx3d.getUniformLocation(program, 'u_resolution');

ctx3d.uniform2f(resolutionLocation, canvas3d.width, canvas3d.height);

ctx3d.drawArrays(ctx3d.TRIANGLES, 0, 6);

requestAnimationFrame(copy2dTo3d)

}

requestAnimationFrame(copy2dTo3d);

</script>

</body>

</html> |

|

This is good discussion. I will say I don't have all the answers here off the top of my head. I considered allowing types other than an

We could (for other non- This is actually may be an argument for why the new Another reason to actually support Another argument is simply convenience. While this isn't strictly necessary, having the engine deal with the mutability of the underlying image source for you and refreshing the contents of the texture is less work for clients, so I can see why it might be desirable. On the other hand, I am hesitant to add a complex system for updating textures to the web engine unless there is a real concrete reason to do so. Code that is in the engine is likely to ship with everyone's apps whether they need it or not. Dart's tree-shaking helps with this, but in many cases (and I suspect in this case) it won't insightful enough to remove a lot of the machinery that deals with this updating. So all users end up paying the price (in binary size at least) for a feature that few will use. Conversely, if this can actually be done with a package, then the price is basically opt-in. The other thing that concerns me is that I'm not sure that the engine will be able to know whether a texture needs to be updated, which will lead to redundant updates of the texture object. In contrast, the client code may have a higher level understanding of when the image source might actually need updating. Perhaps there is a way to special-case this in the engine for things like video elements, but again that's more complicated logic that people are paying for that most may not use. The last complication I will mention is that in the Skwasm renderer, we are using a multi-threaded rendering strategy, which means the actual My general takeaway personally is that I'd like to see some experimentation with the simple Anyway, sorry for the mountain of text, this is just a brain dump of all my thoughts on the subject. I hope some of it is useful! |

|

Updating the video_player package to use the new API could be a good test to see whether the new API is sufficient enough. I don't think it's a bad idea to support textures, but I do think that if textures are to be supported then it seems like the texture ID should map directly to a webGL texture, not some DOM object that can be used to create a texture. Why create a complicated system for managing and updating textures instead of just letting people use the APIs that already exist for creating and updating textures. Isn't that the real point of having Texture support? |

|

I filed a follow on issue about the possibility of some way to have textures that can be updated: flutter/flutter#139271 But I think the work in this PR is superseded by the addition of the |

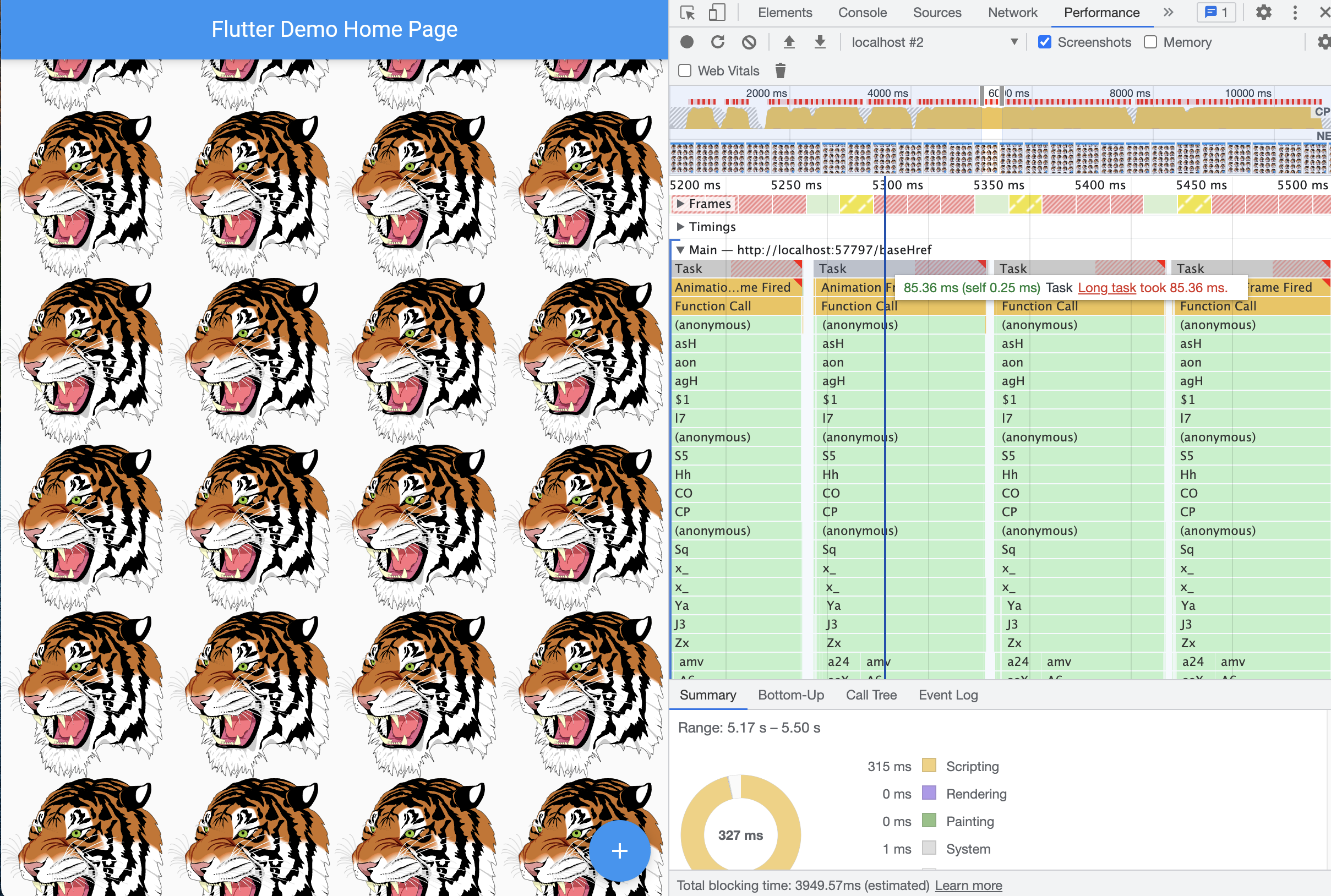

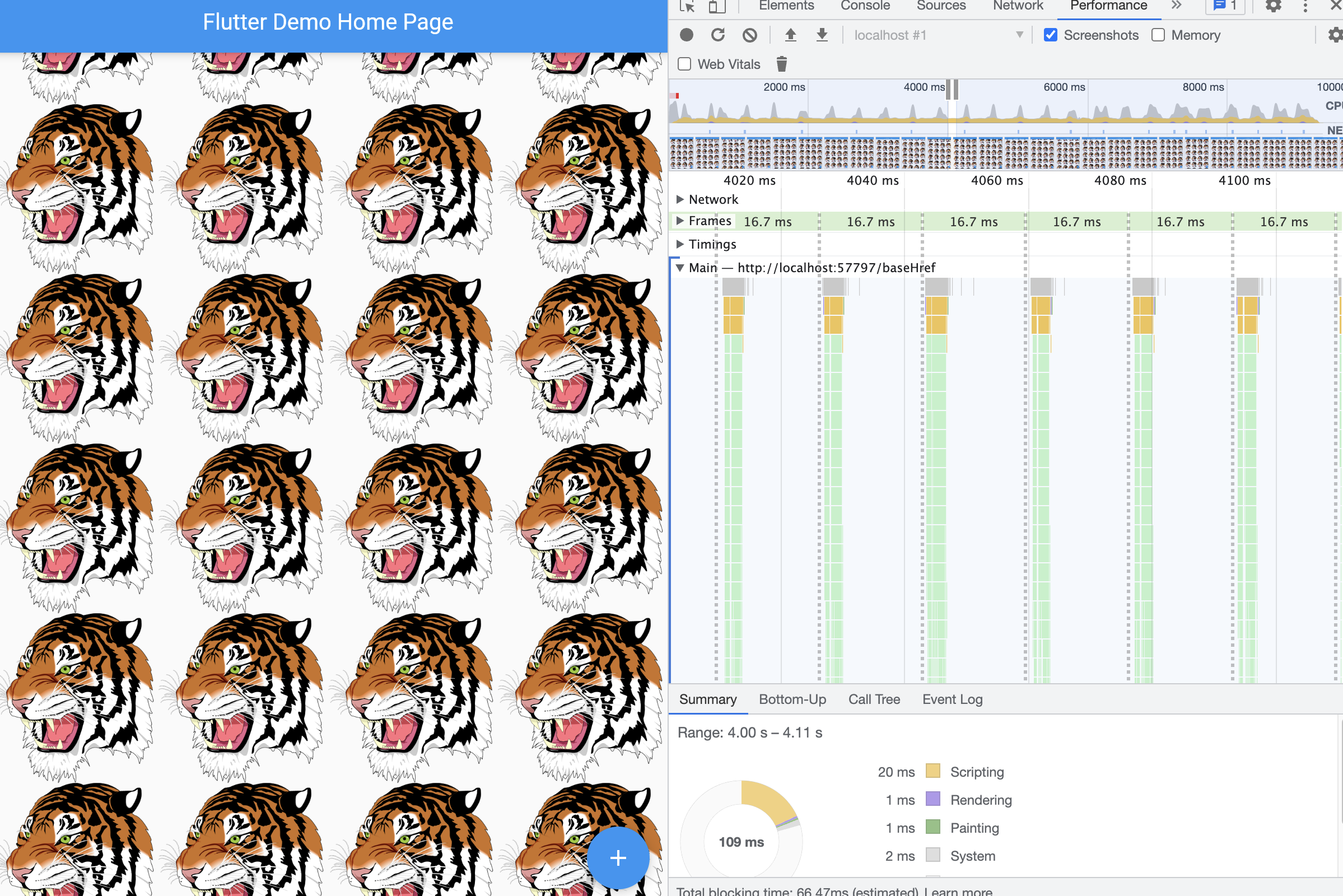

This PR adds the ability to use the Texture widget on web when using the CanvasKit renderer.

Fixes flutter/flutter#119649

While HtmlElementView works well on the html renderer, it consumes significantly more GPU memory on the CanvasKit renderer (around 100mb per element) because the CanvasKit renderer needs to create one more surface per HtmlElementView.

Also due to the limit that browsers have on the number of WebGL contexts. It's impossible to have many HtmlElementView, for example VideoPlayers, to render at the same time. flutter/flutter#113699

Textures can also help to make images render faster on browsers that don't have ImageDecoder. flutter/flutter#113713

With textures it's also easier to render SVG images performantly on web browsers:

Render svgs with flutter_svg:

Render svgs with Texture and HTMLImageElement:

Implementing a Texture based Image widget with the new API: