Derive max composite buffers from max content len #29448

With this commit we determine the maximum number of buffers that Netty keeps while accumulating one HTTP request based on the maximum content length. Previously, we kept the default value of 1024, which is too small for bulk requests and leads to unnecessary copies of byte buffers internally.
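To illustrate why a low component limit causes extra copies, here is a minimal sketch of a composite buffer that merges all of its parts into one array whenever the number of parts exceeds a limit. `CompositeModel` and its methods are hypothetical names for this illustration; it is a simplified model of the behaviour described above, not Netty's `CompositeByteBuf`.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified stand-in for a composite buffer (hypothetical, NOT Netty's implementation). */
class CompositeModel {
    private final int maxComponents;
    private final List<byte[]> components = new ArrayList<>();
    private long bytesCopied = 0;

    CompositeModel(int maxComponents) {
        this.maxComponents = maxComponents;
    }

    /** Appends a chunk without copying; merges everything once the limit is exceeded. */
    void add(byte[] chunk) {
        components.add(chunk);
        if (components.size() > maxComponents) {
            consolidate();
        }
    }

    /** Copies all current components into a single array and counts the copied bytes. */
    private void consolidate() {
        int total = 0;
        for (byte[] c : components) {
            total += c.length;
        }
        byte[] merged = new byte[total];
        int offset = 0;
        for (byte[] c : components) {
            System.arraycopy(c, 0, merged, offset, c.length);
            offset += c.length;
        }
        bytesCopied += total;
        components.clear();
        components.add(merged);
    }

    long bytesCopied() {
        return bytesCopied;
    }

    int componentCount() {
        return components.size();
    }

    /** Feeds a number of equally sized chunks and reports how many bytes were copied. */
    static long simulate(int maxComponents, int chunks, int chunkSize) {
        CompositeModel model = new CompositeModel(maxComponents);
        for (int i = 0; i < chunks; i++) {
            model.add(new byte[chunkSize]);
        }
        return model.bytesCopied();
    }

    public static void main(String[] args) {
        // 3000 chunks of 1500 bytes, once with the old limit and once with the new default.
        System.out.println("limit 1024:  " + simulate(1024, 3000, 1500) + " bytes copied");
        System.out.println("limit 69905: " + simulate(69905, 3000, 1500) + " bytes copied");
    }
}
```

In this model a request that stays below the limit is never copied, whereas a request that crosses the limit repeatedly re-copies the bytes it has already merged.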

Pinging @elastic/es-core-infra

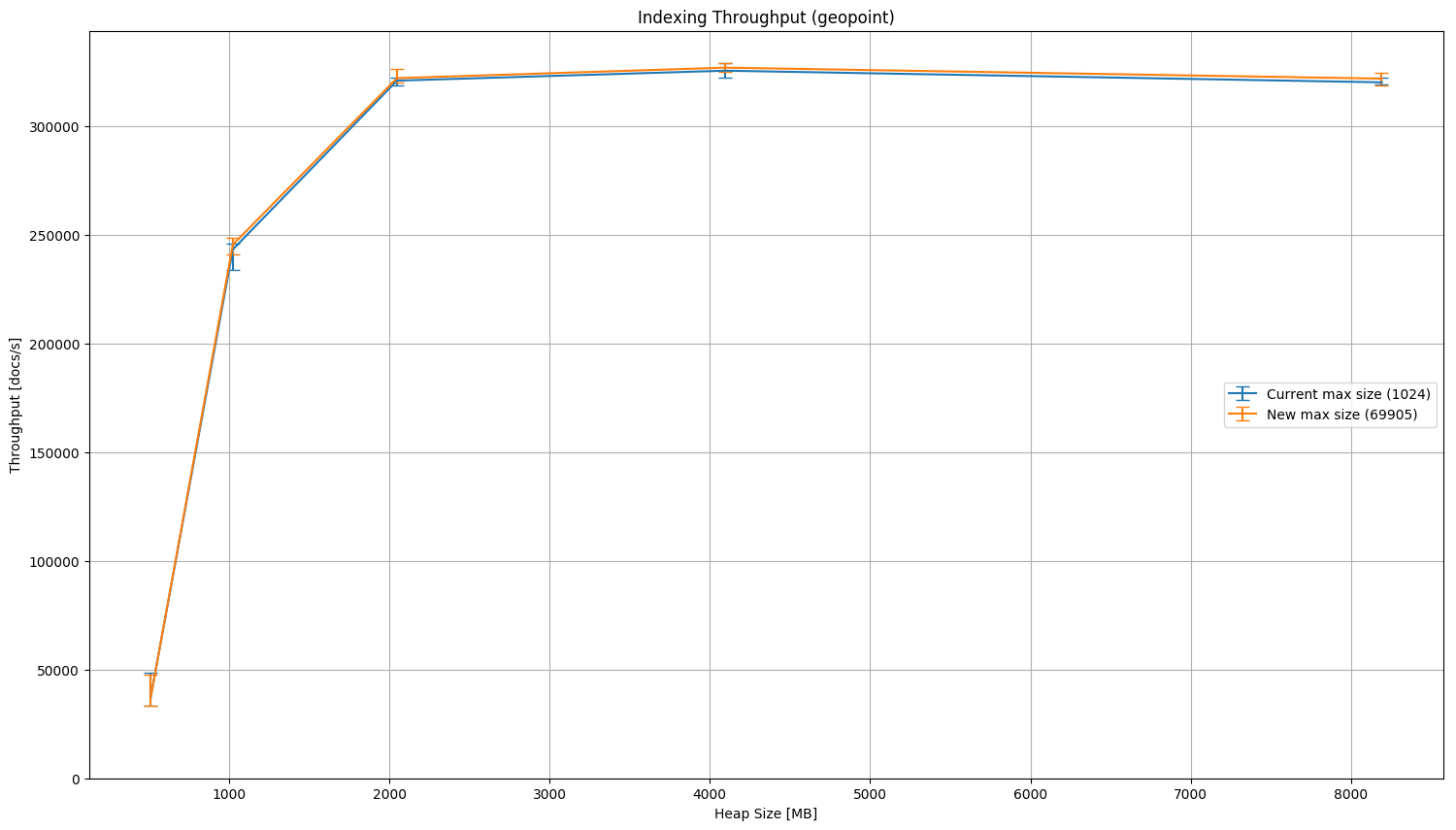

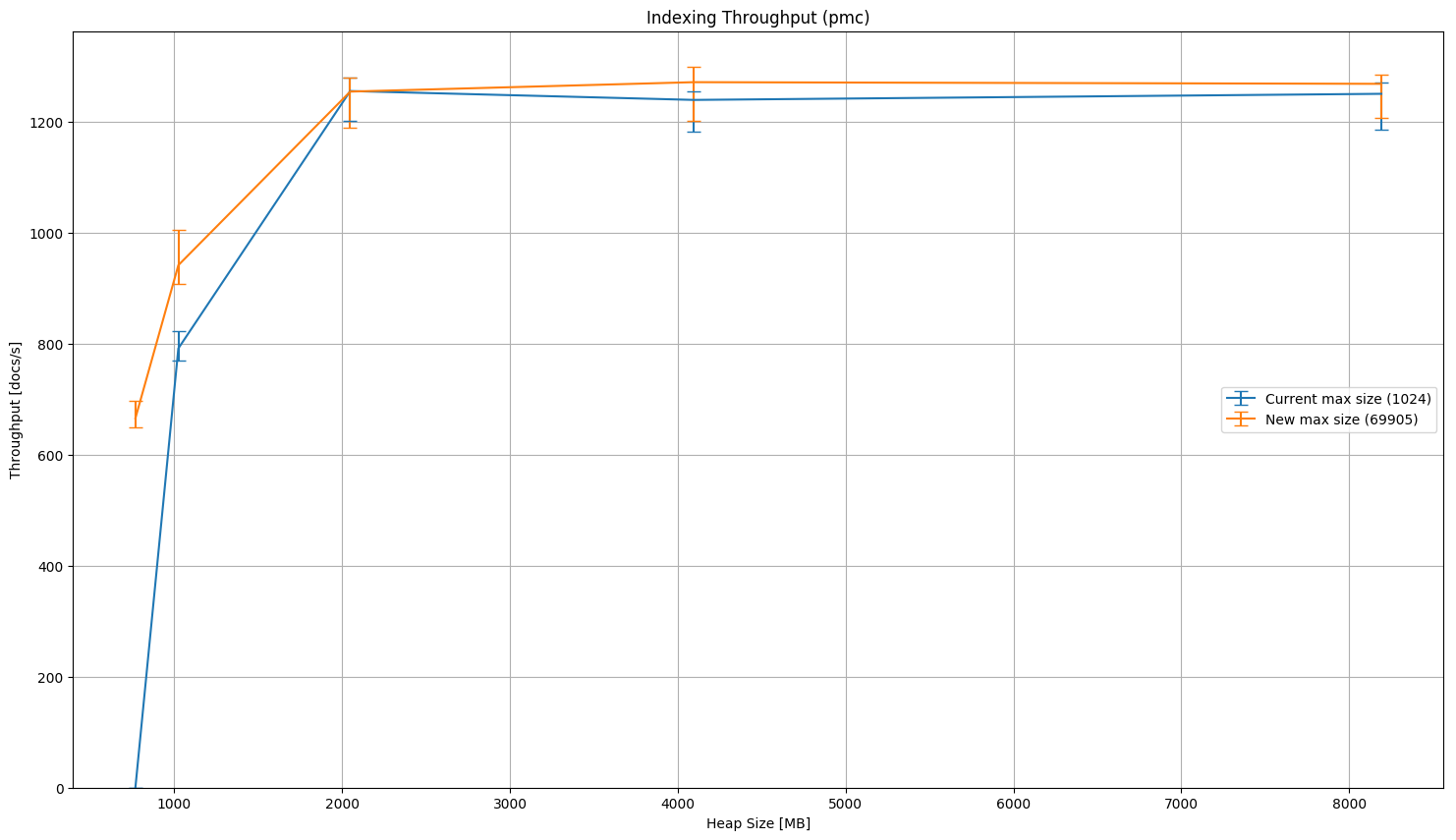

I have now run several benchmarks in our benchmarking suite with different heap sizes. Below are the results for two extreme cases:

I ran these benchmarks with different heap sizes to see for which configurations the max cumulation buffer changes have an effect. The results match the expectation that with these changes, Elasticsearch performs better under low memory conditions while performing similarly to or slightly better than before when more heap memory is available. One data point where this is very apparent is that we could successfully finish

The graphs below show the achieved median indexing throughput for those tracks along with error bars for the minimum and maximum throughput. In blue we see the current default value of at most 1024 cumulation buffer components whereas in orange we see the new default of 69905 cumulation buffer components assuming a default HTTP max content length of 100MB (this value is derived from the HTTP max content length; see the code for details).

I intend to merge these changes to master after successful review, let them bake there for a while and then backport them to 6.x.

jasontedor

left a comment

I left a question.

    // Note that we are *not* pre-allocating any memory based on this setting but rather determine the CompositeByteBuf's capacity.
    // The tradeoff is between less (but larger) buffers that are contained in the CompositeByteBuf and more (but smaller) buffers.
    // With the default max content length of 100MB and a MTU of 1500 bytes we would allow 69905 entries.
    long maxBufferComponentsEstimate = Math.round((double) (maxContentLength.getBytes() / MTU_ETHERNET.getBytes()));

We can get the interface MTU from NetworkInterface API; should we use this here?

I had a look at this and I really like your idea. However, I am not sure it is feasible: I guess we need to resolve the network interface that is associated with the publish address (leveraging NetworkService).

While I also dislike having the MTU specified as a constant here (instead of retrieving it from the network interface), it serves as an input for an estimation. The "worst" practical MTU is 1500 bytes, another typical one is 65536 bytes for loopback (that's not always the case for loopback but it is usually higher than for Ethernet). What happens if we assume an MTU of 1500 for our estimation in the loopback case? Our estimate would allow more buffer components than are expected for loopback. But in practice we do not expect to reach that theoretical capacity. A specific example for the loopback case (with a max content length of 100 MB):

- Estimated maximum number of buffers: 100 MB / 1500 byte = 69905

- Actual maximum number of buffers: 100 MB / 65536 byte = 1600

This means that we expect to reserve space for at most 1600 buffers in the HttpObjectAggregator but overestimate it to at most 69905 (which we do not reach in practice).
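The arithmetic above can be reproduced with a short sketch. `BufferComponentEstimate` and `estimate` are hypothetical names, and the clamping to the range `[2, Integer.MAX_VALUE]` is an assumption made for this illustration, not something shown in the diff under review.

```java
/** Hypothetical helper that reproduces the estimate discussed in this thread. */
class BufferComponentEstimate {

    /**
     * Estimates the number of buffer components as max content length divided by MTU.
     * The long division truncates, so 104857600 / 1500 yields 69905.
     */
    static int estimate(long maxContentLengthBytes, long mtuBytes) {
        if (mtuBytes <= 0) {
            throw new IllegalArgumentException("MTU must be positive");
        }
        long estimate = maxContentLengthBytes / mtuBytes;
        // Assumption for this sketch: keep the result within a sane int range.
        return (int) Math.max(2, Math.min(estimate, Integer.MAX_VALUE));
    }

    public static void main(String[] args) {
        long maxContentLength = 100L * 1024 * 1024; // 100 MB
        System.out.println("Ethernet (1500):    " + estimate(maxContentLength, 1500));
        System.out.println("Jumbo frame (9000): " + estimate(maxContentLength, 9000));
        System.out.println("Loopback (65536):   " + estimate(maxContentLength, 65536));
    }
}
```

A larger MTU lowers the estimate, so assuming 1500 bytes errs on the side of allowing more components than a loopback or jumbo-frame setup would need.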

To summarize, I see two possibilities:

- Determine the publish address with `NetworkService`, find the matching network interface and determine its MTU. This is correct but more complex.
- Rename the constant `MTU_ETHERNET` to something like `SMALLEST_EXPECTED_MTU` (while RFC 791 states 68 bytes as the minimum, I'd stick to 1500 for practical purposes). It's not ideal but in very special cases, users can explicitly define this expert setting (`http.netty.max_composite_buffer_components`) directly.
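For reference, the first option could look roughly like the sketch below, which uses only the JDK's `java.net.NetworkInterface` API. `MtuLookup`, `mtuFor` and the fallback parameter are hypothetical names for this illustration; resolving the publish address through Elasticsearch's `NetworkService` is deliberately left out, and the loopback address merely stands in for it.

```java
import java.net.InetAddress;
import java.net.NetworkInterface;
import java.net.SocketException;

/** Hypothetical helper: looks up the MTU of the interface bound to an address. */
class MtuLookup {

    /**
     * Returns the MTU of the interface that owns the given address, or the
     * fallback if no interface matches, the lookup fails, or the platform
     * reports a non-positive value.
     */
    static int mtuFor(InetAddress address, int fallbackMtu) {
        try {
            NetworkInterface nic = NetworkInterface.getByInetAddress(address);
            if (nic != null) {
                int mtu = nic.getMTU();
                if (mtu > 0) {
                    return mtu;
                }
            }
        } catch (SocketException e) {
            // Fall through to the fallback value below.
        }
        return fallbackMtu;
    }

    public static void main(String[] args) {
        // The loopback address is a placeholder for the resolved publish address.
        InetAddress loopback = InetAddress.getLoopbackAddress();
        System.out.println("MTU for " + loopback + ": " + mtuFor(loopback, 1500));
    }
}
```

The fallback keeps the estimate usable when the address cannot be matched to an interface, which is one of the complications mentioned above.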

@jasontedor wdyt about the above?

I think that jumbo frames are common enough that we should try to go the extra mile here. If it's not possible to do cleanly, I would at least like to see a system property that can be set to set the MTU to 9000.

Thanks for the suggestion. I have now introduced a system property with a default of 1500 (bytes).

    //
    // Note that we are *not* pre-allocating any memory based on this setting but rather determine the CompositeByteBuf's capacity.
    // The tradeoff is between less (but larger) buffers that are contained in the CompositeByteBuf and more (but smaller) buffers.
    // With the default max content length of 100MB and a MTU of 1500 bytes we would allow 69905 entries.

It is a nit, but would you please use the multi-line style?

```
/*
 *
 */
```

Sure. Addressed in b5c14fd.

@elasticmachine retest this please

At some point it may be worth benchmarking this with TLS/SSL enabled as some of the assumptions (1500 byte buffer sizes making it to

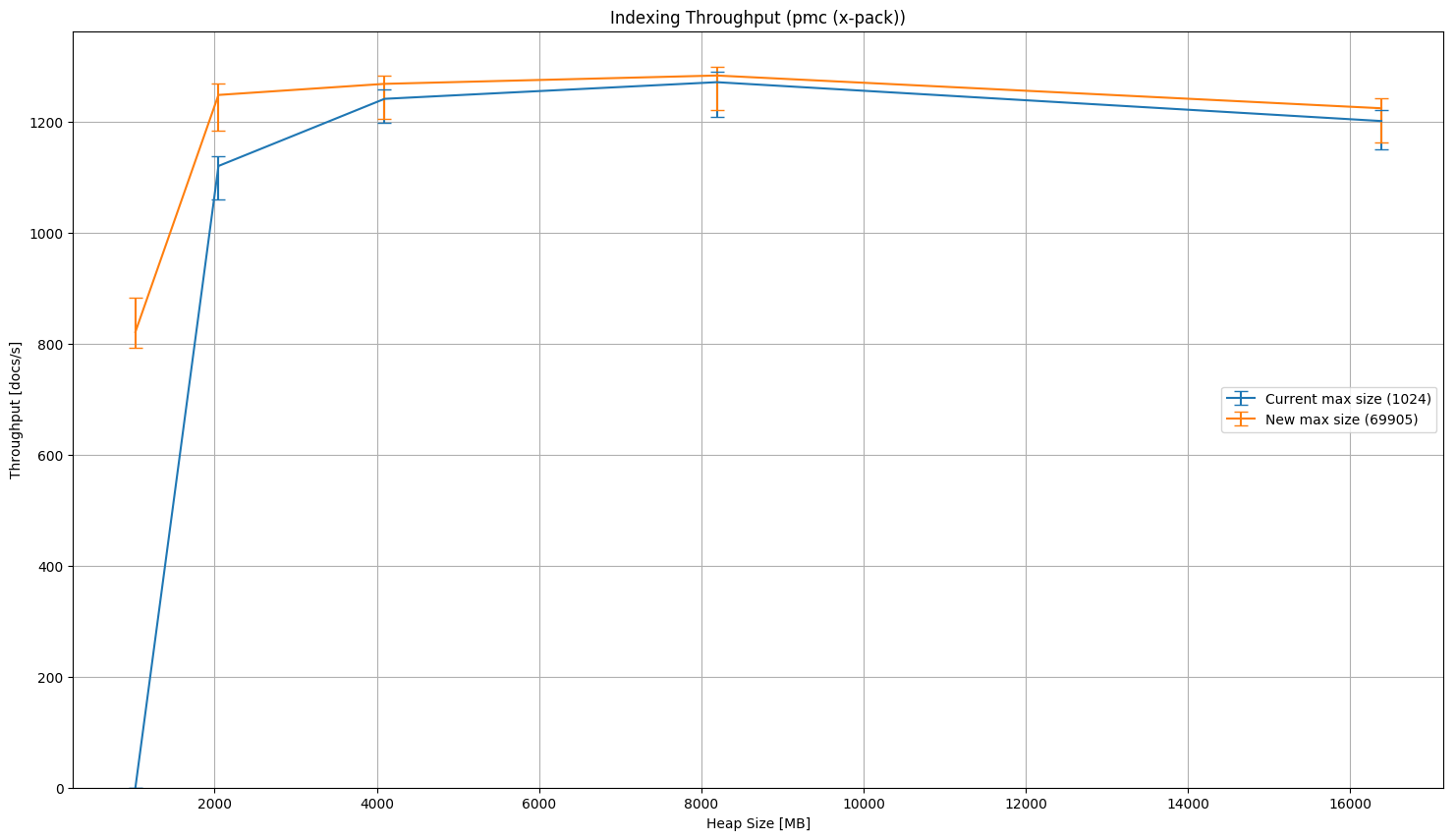

Good point @tbrooks8. I have now run the PMC benchmarks with x-pack and the results are similar to Elasticsearch without x-pack (i.e. we still see a benefit from this change):

The number that is adjusted here defines at which point the original (small) buffers get copied into one larger buffer. We do not preallocate any memory based on that number. I agree that we might have more elements in the

@elasticmachine retest this please

jasontedor

left a comment

I left one comment but LGTM to me now.

     *
     * By default we assume the Ethernet MTU (1500 bytes) but users can override it with a system property.
     */
    private static final ByteSizeValue MTU = new ByteSizeValue(Long.valueOf(System.getProperty("es.net.mtu", "1500")));

Nit: it does not really matter here but this boxes and this should instead be Long.parseLong.

Thanks, I fixed it in 635c9fb.
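As a small illustration of the nit, the sketch below reads the `es.net.mtu` system property with `Long.parseLong`, which returns a primitive `long`, instead of `Long.valueOf`, which returns a boxed `Long` that is then unboxed again. `MtuProperty` and `readMtuBytes` are hypothetical names, and the sketch omits Elasticsearch's `ByteSizeValue` wrapper to stay self-contained.

```java
/** Hypothetical helper showing the non-boxing way to read the MTU property. */
class MtuProperty {

    /** Property name taken from the diff under review. */
    static final String PROPERTY = "es.net.mtu";

    /** Returns the configured MTU in bytes, defaulting to the Ethernet MTU of 1500. */
    static long readMtuBytes() {
        // Long.parseLong yields a primitive long; Long.valueOf would allocate or
        // look up a Long object only for it to be unboxed immediately.
        return Long.parseLong(System.getProperty(PROPERTY, "1500"));
    }

    public static void main(String[] args) {
        System.out.println("MTU in bytes: " + readMtuBytes());
    }
}
```

Running with `-Des.net.mtu=9000` would make the sketch report a jumbo-frame MTU instead of the default.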

🚢

Thanks for the review @jasontedor and for your comments @tbrooks8! I will let this bake on master for a while and then backport it to 6.x.

@elasticmachine retest this please

@danielmitterdorfer I do not think this change should be backported to the 6.3 branch.

With this commit we determine the maximum number of buffers that Netty keeps while accumulating one HTTP request based on the maximum content length and the MTU (default 1500 bytes, overridable with the system property `es.net.mtu`). Previously, we kept the default value of 1024, which is too small for bulk requests and leads to unnecessary copies of byte buffers internally. Relates #29448

Agreed w.r.t. releases. Backported to 6.x in 71d3297.

With this commit we determine the maximum number of buffers that Netty keeps while accumulating one HTTP request based on the maximum content length. Previously, we kept the default value of 1024 which is too small for bulk requests which leads to unnecessary copies of byte buffers internally.