-

Notifications

You must be signed in to change notification settings - Fork 28.9k

[SPARK-31030][SQL] Backward Compatibility for Parsing and formatting Datetime #27830

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Test build #119451 has finished for PR 27830 at commit

|

docs/sql-ref-datetime-pattern.md

Outdated

|

|

||

| - Number/Text: If the count of pattern letters is 3 or greater, use the Text rules above. Otherwise use the Number rules above. | ||

|

|

||

| - Fraction: Outputs the nano-of-second field as a fraction-of-second. The nano-of-second value has nine digits, thus the count of pattern letters is from 1 to 9. If it is less than 9, then the nano-of-second value is truncated, with only the most significant digits being output. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Currently, Spark doesn't support fraction in nanosecond precision. It can mislead users.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the comment, update the fraction section in 621a00e.

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

Outdated

Show resolved

Hide resolved

| // parse. When it is successfully parsed, throw an exception and ask users to change | ||

| // the pattern strings or turn on the legacy mode; otherwise, return NULL as what Spark | ||

| // 2.4 does. | ||

| .replace("u", "e") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

'u' can be escaped in the pattern like 'update time' uuuu-MM-dd. Replacing every 'u' will lead to wrong pattern, and nothing matches to it.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actually the quoted text has been considered, let me add comments to emphasize.

|

Test build #119477 has finished for PR 27830 at commit

|

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

Outdated

Show resolved

Hide resolved

|

|

||

| /** | ||

| * Since the Proleptic Gregorian calendar is de-facto calendar worldwide, as well as the chosen | ||

| * one in ANSI SQL standard, Spark 3.0 switches to it by using Java 8 API classes. However, the |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It's a bit confusing to say java 7 & 8 as the old APIs are also available in java 8.

How about SimpleDateFormat and DateTimeFormatter?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks, done in e846fbb.

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

Outdated

Show resolved

Hide resolved

docs/sql-ref-datetime-pattern.md

Outdated

|

|

||

| The count of pattern letters determines the format. | ||

|

|

||

| - Text: The text style is determined based on the number of pattern letters used. Less than 4 pattern letters will use the short form. Exactly 4 pattern letters will use the full form. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

how about more than 5 letters?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We'll get IllegalArgumentException.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Let's document it.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure, done in e846fbb.

docs/sql-ref-datetime-pattern.md

Outdated

|

|

||

| - Fraction: Outputs the micro-of-second field as a fraction-of-second. The micro-of-second value has six digits, thus the count of pattern letters is from 1 to 6. If it is less than 6, then the micro-of-second value is truncated, with only the most significant digits being output. | ||

|

|

||

| - Year: The count of letters determines the minimum field width below which padding is used. If the count of letters is two, then a reduced two digit form is used. For printing, this outputs the rightmost two digits. For parsing, this will parse using the base value of 2000, resulting in a year within the range 2000 to 2099 inclusive. If the count of letters is less than four (but not two), then the sign is only output for negative years. Otherwise, the sign is output if the pad width is exceeded. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Otherwise, the sign is output if the pad width is exceeded.

This is not true when G is present, right?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Right, emphasize in e846fbb.

|

Test build #119557 has finished for PR 27830 at commit

|

|

Test build #119558 has finished for PR 27830 at commit

|

| } | ||

|

|

||

| /** | ||

| * Since the Proleptic Gregorian calendar is de-facto calendar worldwide, as well as the chosen |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In Spark 3.0, we switch to the Proleptic Gregorian calendar and use DateTimeFormatter for parsing/formatting datetime values. The pattern string is incompatible with the one defined by SimpleDateFormat in Spark 2.4 and earlier. This function ...

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks, done in 5382508

| pattern.split("'").zipWithIndex.map { | ||

| case (patternPart, index) => | ||

| if (index % 2 == 0) { | ||

| // The meaning of 'u' was day number of week in Java 7, it was changed to year in Java 8. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Java 8 -> DateTimeFormatter

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks, also rephrase the whole comment.

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeUtils.scala

Outdated

Show resolved

Hide resolved

|

Test build #119613 has finished for PR 27830 at commit

|

|

Test build #119620 has finished for PR 27830 at commit

|

|

thanks, merging to master/3.0! |

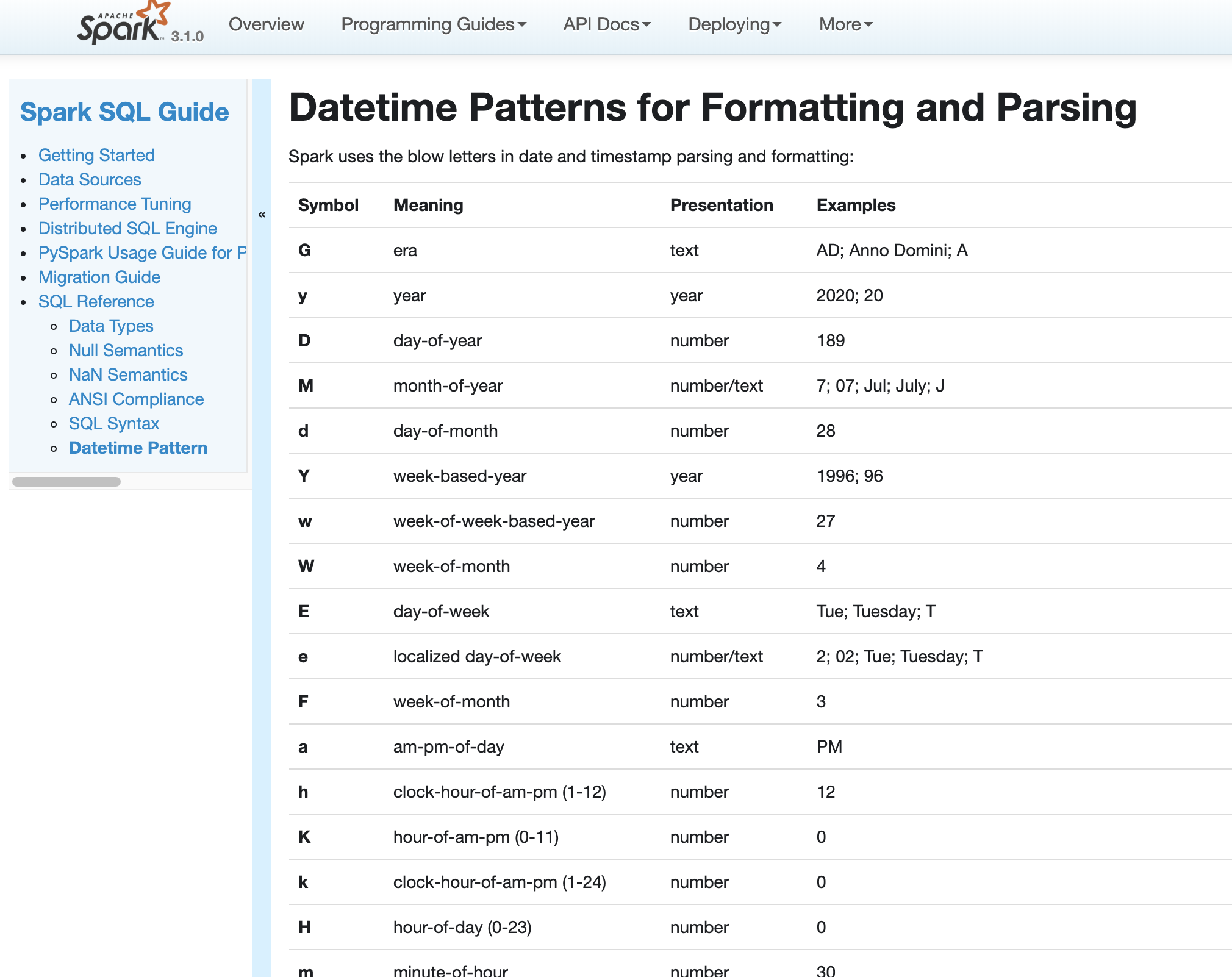

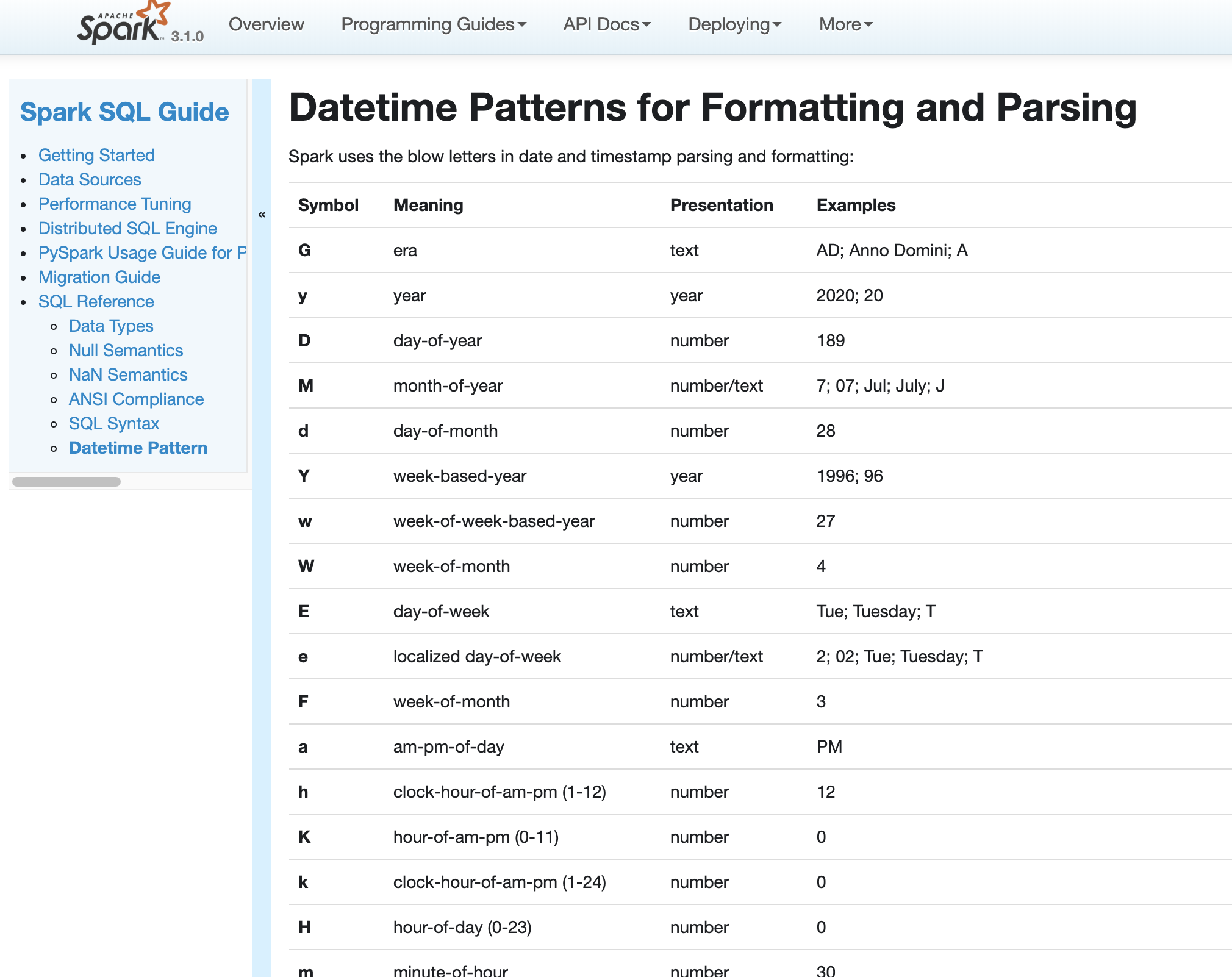

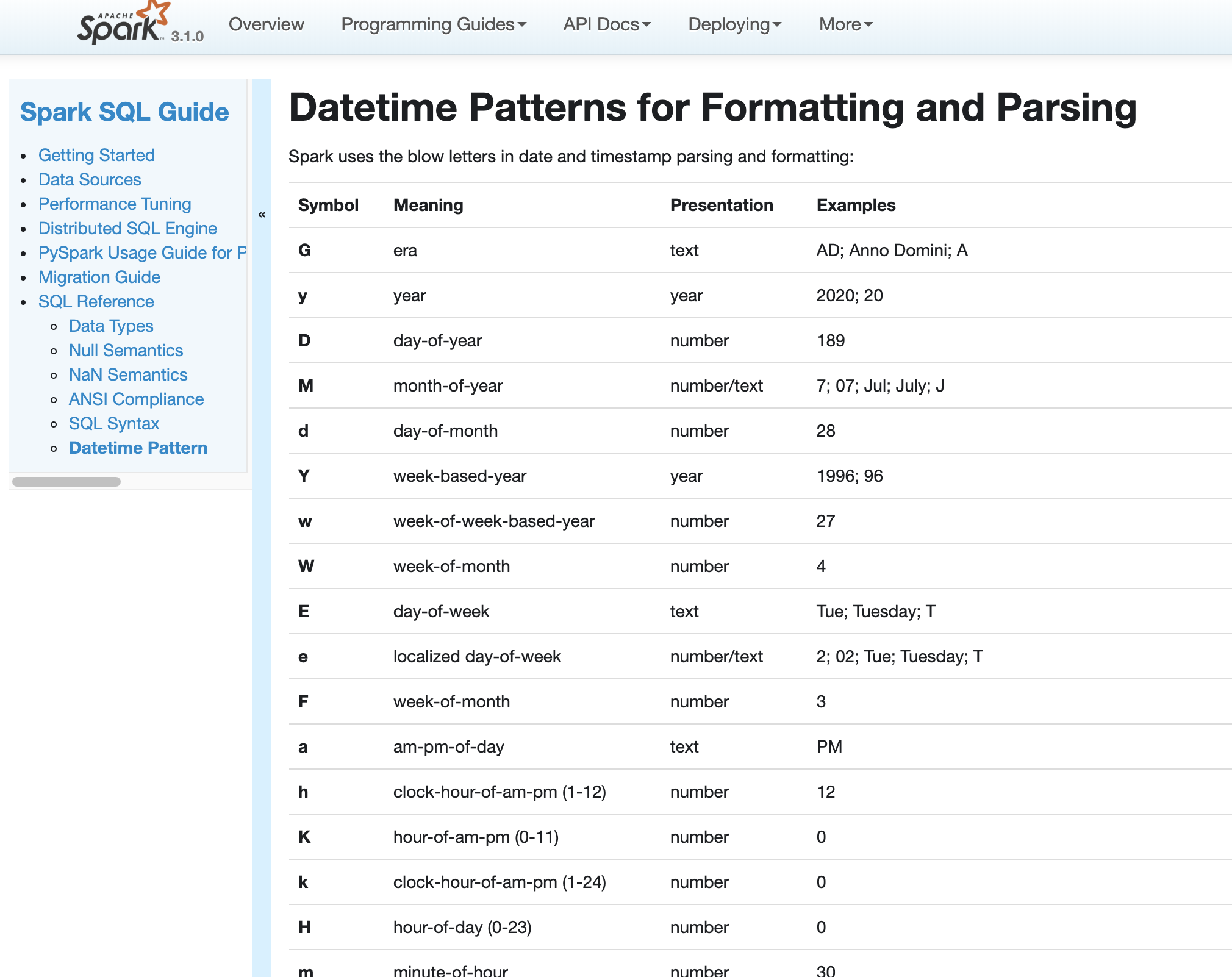

…Datetime In Spark version 2.4 and earlier, datetime parsing, formatting and conversion are performed by using the hybrid calendar (Julian + Gregorian). Since the Proleptic Gregorian calendar is de-facto calendar worldwide, as well as the chosen one in ANSI SQL standard, Spark 3.0 switches to it by using Java 8 API classes (the java.time packages that are based on ISO chronology ). The switching job is completed in SPARK-26651. But after the switching, there are some patterns not compatible between Java 8 and Java 7, Spark needs its own definition on the patterns rather than depends on Java API. In this PR, we achieve this by writing the document and shadow the incompatible letters. See more details in [SPARK-31030](https://issues.apache.org/jira/browse/SPARK-31030) For backward compatibility. No. After we define our own datetime parsing and formatting patterns, it's same to old Spark version. Existing and new added UT. Locally document test:  Closes #27830 from xuanyuanking/SPARK-31030. Authored-by: Yuanjian Li <[email protected]> Signed-off-by: Wenchen Fan <[email protected]> (cherry picked from commit 3493162) Signed-off-by: Wenchen Fan <[email protected]>

|

Thanks for the review! |

|

so I only skimmed this but I ran into the config: val LEGACY_TIME_PARSER_ENABLED = buildConf("spark.sql.legacy.timeParser.enabled") in SQLConf. I assume that can be removed with this change? |

|

yea it has been removed in #27889 |

…Datetime ### What changes were proposed in this pull request? In Spark version 2.4 and earlier, datetime parsing, formatting and conversion are performed by using the hybrid calendar (Julian + Gregorian). Since the Proleptic Gregorian calendar is de-facto calendar worldwide, as well as the chosen one in ANSI SQL standard, Spark 3.0 switches to it by using Java 8 API classes (the java.time packages that are based on ISO chronology ). The switching job is completed in SPARK-26651. But after the switching, there are some patterns not compatible between Java 8 and Java 7, Spark needs its own definition on the patterns rather than depends on Java API. In this PR, we achieve this by writing the document and shadow the incompatible letters. See more details in [SPARK-31030](https://issues.apache.org/jira/browse/SPARK-31030) ### Why are the changes needed? For backward compatibility. ### Does this PR introduce any user-facing change? No. After we define our own datetime parsing and formatting patterns, it's same to old Spark version. ### How was this patch tested? Existing and new added UT. Locally document test:  Closes apache#27830 from xuanyuanking/SPARK-31030. Authored-by: Yuanjian Li <[email protected]> Signed-off-by: Wenchen Fan <[email protected]>

What changes were proposed in this pull request?

In Spark version 2.4 and earlier, datetime parsing, formatting and conversion are performed by using the hybrid calendar (Julian + Gregorian).

Since the Proleptic Gregorian calendar is de-facto calendar worldwide, as well as the chosen one in ANSI SQL standard, Spark 3.0 switches to it by using Java 8 API classes (the java.time packages that are based on ISO chronology ). The switching job is completed in SPARK-26651.

But after the switching, there are some patterns not compatible between Java 8 and Java 7, Spark needs its own definition on the patterns rather than depends on Java API.

In this PR, we achieve this by writing the document and shadow the incompatible letters. See more details in SPARK-31030

Why are the changes needed?

For backward compatibility.

Does this PR introduce any user-facing change?

No.

After we define our own datetime parsing and formatting patterns, it's same to old Spark version.

How was this patch tested?

Existing and new added UT.

Locally document test: