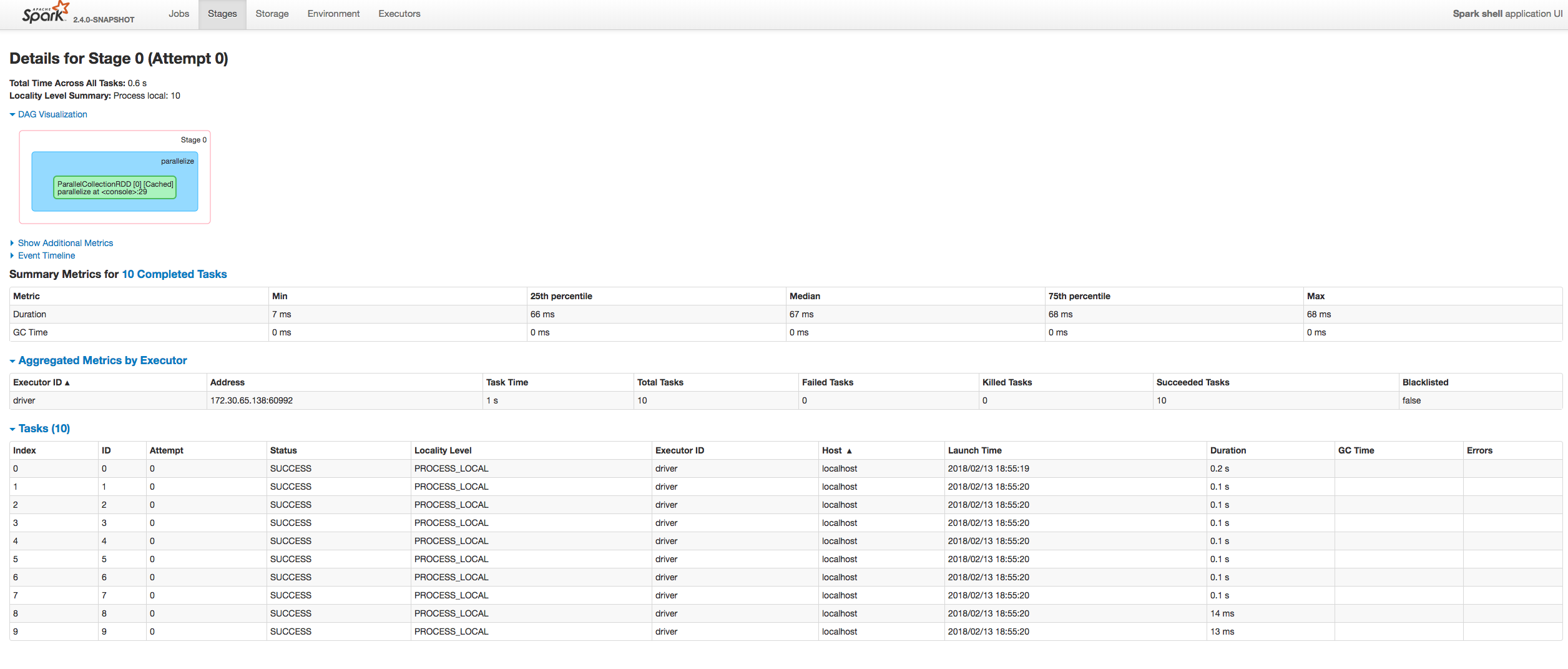

[SPARK-23413][UI] Fix sorting tasks by Host / Executor ID at the Stage page

## What changes were proposed in this pull request?

Fixes the exception thrown when sorting tasks by Host / Executor ID:

```
java.lang.IllegalArgumentException: Invalid sort column: Host
    at org.apache.spark.ui.jobs.ApiHelper$.indexName(StagePage.scala:1017)
    at org.apache.spark.ui.jobs.TaskDataSource.sliceData(StagePage.scala:694)
    at org.apache.spark.ui.PagedDataSource.pageData(PagedTable.scala:61)
    at org.apache.spark.ui.PagedTable$class.table(PagedTable.scala:96)
    at org.apache.spark.ui.jobs.TaskPagedTable.table(StagePage.scala:708)
    at org.apache.spark.ui.jobs.StagePage.liftedTree1$1(StagePage.scala:293)
    at org.apache.spark.ui.jobs.StagePage.render(StagePage.scala:282)
    at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82)
    at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82)
    at org.apache.spark.ui.JettyUtils$$anon$3.doGet(JettyUtils.scala:90)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:687)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:790)
    at org.spark_project.jetty.servlet.ServletHolder.handle(ServletHolder.java:848)
    at org.spark_project.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:584)
```

In addition, this change refactors the code to avoid similar problems: it introduces a constant for each header name and reuses those constants when looking up the corresponding sort index.
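
As a rough illustration of the idea (not the actual code in `StagePage.scala`), the sketch below shares one constant per header between the table and the sort lookup, so a header cannot be renamed in one place and missed in the other. The object name `TaskHeaders`, the constant names, and the index names `"host"`, `"executorId"` and `"duration"` are all hypothetical.

```scala
// Minimal sketch with hypothetical names; the real patch may be structured differently.
object TaskHeaders {
  // One constant per column header, used both for rendering and for sorting.
  val HeaderHost = "Host"
  val HeaderExecutor = "Executor ID"
  val HeaderDuration = "Duration"

  // Maps each sortable header to the (hypothetical) name of its sort index.
  private val sortIndexByHeader: Map[String, String] = Map(
    HeaderHost -> "host",
    HeaderExecutor -> "executorId",
    HeaderDuration -> "duration"
  )

  // Returns the sort index for a header, or fails for an unknown column.
  def indexName(sortColumn: String): String =
    sortIndexByHeader.getOrElse(
      sortColumn,
      throw new IllegalArgumentException(s"Invalid sort column: $sortColumn"))
}
```

With this layout, a lookup such as `TaskHeaders.indexName(TaskHeaders.HeaderHost)` succeeds because the table header and the map key are the same constant, while an unmapped column name still raises `IllegalArgumentException`.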

## How was this patch tested?

Manually.

(cherry picked from commit 1dc2c1d)

Author: “attilapiros” <[email protected]>

Closes #20623 from squito/fix_backport.
