Commit 3d6b68b

[SPARK-25313][SQL] Fix regression in FileFormatWriter output names

## What changes were proposed in this pull request?

Consider the following example:

```scala
val location = "/tmp/t"
val df = spark.range(10).toDF("id")
df.write.format("parquet").saveAsTable("tbl")
spark.sql("CREATE VIEW view1 AS SELECT id FROM tbl")
// The location must be a quoted string literal in the DDL.
spark.sql(s"CREATE TABLE tbl2(ID long) USING parquet LOCATION '$location'")
spark.sql("INSERT OVERWRITE TABLE tbl2 SELECT ID FROM view1")
// Inspect the schema that was actually written to the files.
println(spark.read.parquet(location).schema)
spark.table("tbl2").show()
```

The output column name in the written schema is `id` instead of `ID`, so the last query shows nothing from `tbl2`.

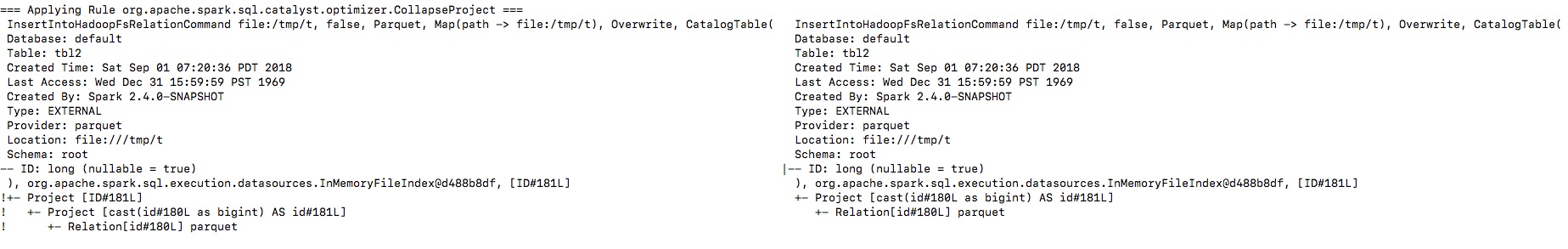

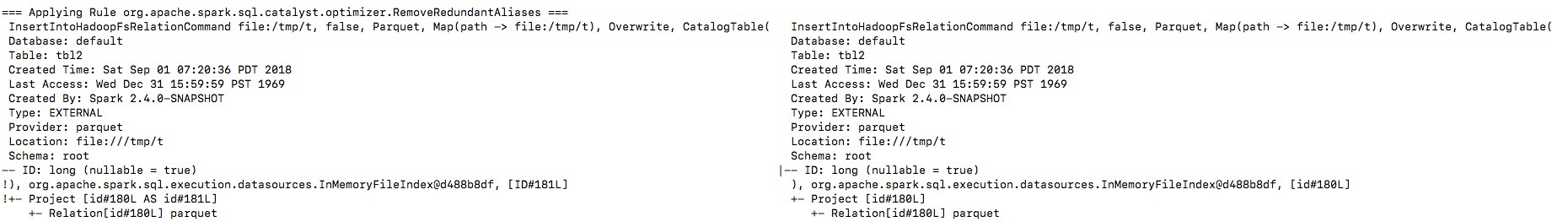

Enabling debug logging shows that the output name changes from `ID` to `id`: the `outputColumns` in `InsertIntoHadoopFsRelationCommand` are rewritten by the `RemoveRedundantAliases` rule.

**To guarantee correctness**, we should change the output columns from `Seq[Attribute]` to `Seq[String]` so that the optimizer cannot replace their names.

I will fix project elimination related rules in #22311 after this one.
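The reasoning behind switching from `Seq[Attribute]` to `Seq[String]` can be illustrated with a minimal sketch. This is a toy model, not Spark code: `Attr`, `WriteWithAttrs`, `WriteWithNames`, and `rewrite` are hypothetical names invented for illustration, and `rewrite` only imitates the general idea of a rule that substitutes one attribute for another with the same expression ID.

```scala
// Toy model only: these types are NOT Spark's classes; they mimic the idea.
final case class Attr(name: String, exprId: Long)

// A write command that stores its output columns as attributes.
final case class WriteWithAttrs(outputColumns: Seq[Attr])

// A write command that stores only the output column names.
final case class WriteWithNames(outputColumnNames: Seq[String])

object OutputNamesSketch {
  // Stand-in for an optimizer rule: replace each attribute with the child
  // attribute that shares its exprId, which may differ in name casing.
  def rewrite(attrs: Seq[Attr], child: Map[Long, Attr]): Seq[Attr] =
    attrs.map(a => child.getOrElse(a.exprId, a))

  def main(args: Array[String]): Unit = {
    val requested = Seq(Attr("ID", 1L))       // name requested by the INSERT
    val fromView  = Map(1L -> Attr("id", 1L)) // same column as named in the view

    // Attributes are visible to the rewrite, so the name becomes "id".
    val before = WriteWithAttrs(requested)
    val after  = before.copy(outputColumns = rewrite(before.outputColumns, fromView))
    println(after.outputColumns.map(_.name))

    // Plain strings are not attributes, so the rewrite has nothing to replace.
    val byName = WriteWithNames(requested.map(_.name))
    println(byName.outputColumnNames)
  }
}
```

In this sketch the command holding attributes ends up with the lower-case name, while the command holding strings keeps `ID`, which is the property the patch relies on.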

## How was this patch tested?

Unit test.

Closes #22320 from gengliangwang/fixOutputSchema.

Authored-by: Gengliang Wang <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

1 parent 3e03303 commit 3d6b68b

File tree

11 files changed, +189 -25 lines changed

- sql
  - core/src
    - main/scala/org/apache/spark/sql/execution
      - command
      - datasources
    - test/scala/org/apache/spark/sql/test
  - hive/src
    - main/scala/org/apache/spark/sql/hive
      - execution
    - test/scala/org/apache/spark/sql/hive/execution

Lines changed: 40 additions & 3 deletions

Lines changed: 2 additions & 2 deletions

Lines changed: 10 additions & 6 deletions

Lines changed: 2 additions & 2 deletions

Lines changed: 3 additions & 3 deletions

Lines changed: 74 additions & 0 deletions

Lines changed: 3 additions & 3 deletions

Lines changed: 5 additions & 4 deletions

Lines changed: 1 addition & 1 deletion

Lines changed: 1 addition & 1 deletion
