|

1 | | -# Cerebras Planning and Optimization (CePO) |

| 1 | +# optiLLM |

2 | 2 |

|

3 | | -CePO is an inference-time computation method designed to enhance the accuracy of large language models (LLMs) on tasks requiring reasoning and planning, such as solving math or coding problems. It integrates several advanced techniques, including Best of N, Chain of Thought (CoT), Self-Reflection, Self-Improvement, and Prompt Engineering. |

4 | | - |

5 | | -If you have any questions or want to contribute, please reach out to us on [cerebras.ai/discord](https://discord.com/channels/1085960591052644463/1325883896964841582) |

6 | | - |

7 | | -## Methodology |

8 | | - |

9 | | -In CePO, the Best of N technique is applied to `bestofn_n` solution candidates. Each solution is generated through the following four steps: |

10 | | - |

11 | | -**Step 1**: Plan Generation |

12 | | -The model generates a detailed, step-by-step plan to solve the problem, along with its confidence level for each step. |

13 | | - |

14 | | -**Step 2**: Initial Solution |

15 | | -Using the plan from Step 1, the model produces an initial solution. |

16 | | - |

17 | | -Steps 1 and 2 are repeated `planning_n` times to generate multiple solution proposals. |

18 | | -If the model exceeds the token budget during Step 1 or 2, the plan/solution is marked as incomplete, rejected, and regenerated. A maximum of `planning_m` attempts is made to generate `planning_n` valid proposals. |

19 | | - |

20 | | -**Step 3**: Plan Refinement |

21 | | -The model reviews all generated solution proposals and their associated plans, identifying inconsistencies. Based on this analysis, a refined, final step-by-step plan is constructed. |

22 | | - |

23 | | -**Step 4**: Final Solution |

24 | | -The model uses the refined plan from Step 3 to produce the final answer. |

25 | | - |

26 | | -## Current Status |

27 | | - |

28 | | -This project is a work in progress, and the provided code is in an early experimental stage. While the proposed approach works well across the benchmarks we tested, further improvements can be achieved by task-specific customizations to prompts. |

29 | | - |

30 | | -## Results |

31 | | - |

32 | | -### Comparison of CePO with default settings and base model |

33 | | - |

34 | | -| Method | Math-L5 | MMLU-Pro (Math) | GPQA | CRUX | LiveCodeBench (pass@1) | Simple QA | |

35 | | -| -------------------------: | :-----: | :-------------: | :--: | :--: | :--------------------: | :-------: | |

36 | | -| Llama 3.1 70B | 41.6 | 72.9 | 41.7 | 64.2 | 24.5 | 14.7 | |

37 | | -| Llama 3.3 70B | 51.0 | 78.6 | 49.1 | 72.6 | 27.1 | 20.9 | |

38 | | -| Llama 3.1 405B | 49.8 | 79.2 | 50.7 | 73.0 | 31.8 | 13.5 | |

39 | | -| CePO (using Llama 3.3 70B) | 69.6 | 84.8 | 55.5 | 80.1 | 31.9 | 22.6 | |

40 | | - |

41 | | -### Ablation studies |

42 | | - |

43 | | -We conducted ablation studies to evaluate the impact of various hyperparameters in the CePO framework. Our results indicate that the chosen hyperparameter settings strike a good balance between computational cost and accuracy. |

44 | | - |

45 | | -Interestingly, the self-critique and quality improvement capabilities of existing off-the-shelf models do not always scale proportionally with increased inference compute. Addressing this limitation remains a key focus, and we plan to explore custom model fine-tuning as a potential solution in the future. |

46 | | - |

47 | | -| bestofn_n | planning_n | planning_m | bestofn_rating_type | Math-L5 | MMLU-Pro (Math) | GPQA | CRUX | Comments | |

48 | | -| :-------: | :--------: | :--------: | :-----------------: | :-----: | :-------------: | :---: | :---: | :------------- | |

49 | | -| 3 | 3 | 6 | absolute | 69.6 | 84.8 | 55.5 | 80.1 | Default config | |

50 | | -| 3 | 3 | 6 | pairwise | 67.7 | 83.5 | 55.6 | 79.8 | | |

51 | | -| 3 | 2 | 5 | absolute | 67.1 | 85.1 | 55.1 | 79.0 | | |

52 | | -| 3 | 5 | 8 | absolute | 69.4 | 84.3 | 55.6 | 81.1 | | |

53 | | -| 5 | 3 | 6 | absolute | 68.7 | 85.4 | 54.8 | 79.9 | | |

54 | | -| 7 | 3 | 6 | absolute | 69.6 | 82.8 | 54.7 | 78.4 | | |

55 | | -| 9 | 3 | 6 | absolute | 68.9 | 83.4 | 55.7 | 80.6 | | |

56 | | - |

57 | | -# Implemented with OptiLLM |

58 | | - |

59 | | -optillm is an OpenAI API compatible optimizing inference proxy which implements several state-of-the-art techniques that can improve the accuracy and performance of LLMs. The current focus is on implementing techniques that improve reasoning over coding, logical and mathematical queries. It is possible to beat the frontier models using these techniques across diverse tasks by doing additional compute at inference time. |

| 3 | +optillm is an OpenAI API compatible optimizing inference proxy which implements several state-of-the-art techniques that can improve the accuracy and performance of LLMs, including CePO. The current focus is on implementing techniques that improve reasoning over coding, logical and mathematical queries. It is possible to beat the frontier models using these techniques across diverse tasks by doing additional compute at inference time. |

60 | 4 |

|

61 | 5 | [](https://huggingface.co/spaces/codelion/optillm) |

62 | 6 | [](https://colab.research.google.com/drive/1SpuUb8d9xAoTh32M-9wJsB50AOH54EaH?usp=sharing) |

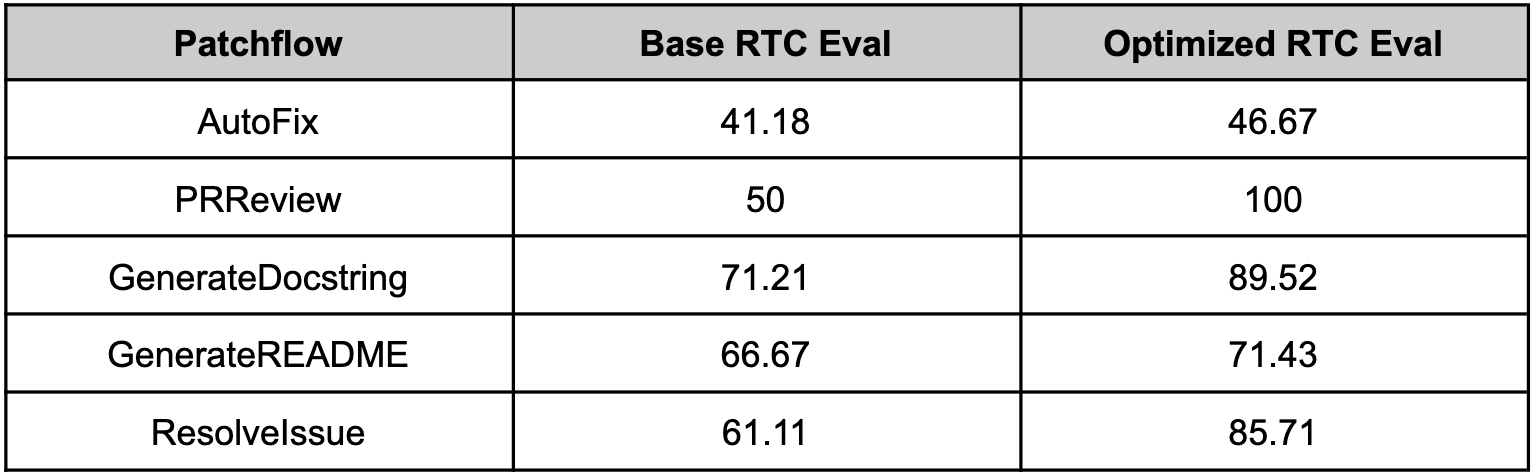

@@ -419,6 +363,62 @@ called patchflows. We saw huge performance gains across all the supported patchf |

419 | 363 |

|

420 | 364 |  |

421 | 365 |

|

| 366 | +## The Cerebras Planning and Optimization (CePO) Method |

| 367 | + |

| 368 | +CePO is an inference-time computation method designed to enhance the accuracy of large language models (LLMs) on tasks requiring reasoning and planning, such as solving math or coding problems. It integrates several advanced techniques, including Best of N, Chain of Thought (CoT), Self-Reflection, Self-Improvement, and Prompt Engineering. |

| 369 | + |

| 370 | +If you have any questions or want to contribute, please reach out to us on [cerebras.ai/discord](cerebras.ai/discord) |

| 371 | + |

| 372 | +### CePO Methodology |

| 373 | + |

| 374 | +In CePO, the Best of N technique is applied to `bestofn_n` solution candidates. Each solution is generated through the following four steps: |

| 375 | + |

| 376 | +**Step 1**: Plan Generation |

| 377 | +The model generates a detailed, step-by-step plan to solve the problem, along with its confidence level for each step. |

| 378 | + |

| 379 | +**Step 2**: Initial Solution |

| 380 | +Using the plan from Step 1, the model produces an initial solution. |

| 381 | + |

| 382 | +Steps 1 and 2 are repeated `planning_n` times to generate multiple solution proposals. |

| 383 | +If the model exceeds the token budget during Step 1 or 2, the plan/solution is marked as incomplete, rejected, and regenerated. A maximum of `planning_m` attempts is made to generate `planning_n` valid proposals. |

| 384 | + |

| 385 | +**Step 3**: Plan Refinement |

| 386 | +The model reviews all generated solution proposals and their associated plans, identifying inconsistencies. Based on this analysis, a refined, final step-by-step plan is constructed. |

| 387 | + |

| 388 | +**Step 4**: Final Solution |

| 389 | +The model uses the refined plan from Step 3 to produce the final answer. |

| 390 | + |

| 391 | +### CePO Current Status |

| 392 | + |

| 393 | +This project is a work in progress, and the provided code is in an early experimental stage. While the proposed approach works well across the benchmarks we tested, further improvements can be achieved by task-specific customizations to prompts. |

| 394 | + |

| 395 | +### CePO Results |

| 396 | + |

| 397 | +#### Comparison of CePO with default settings and base model |

| 398 | + |

| 399 | +| Method | Math-L5 | MMLU-Pro (Math) | GPQA | CRUX | LiveCodeBench (pass@1) | Simple QA | |

| 400 | +| -------------------------: | :-----: | :-------------: | :--: | :--: | :--------------------: | :-------: | |

| 401 | +| Llama 3.1 70B | 41.6 | 72.9 | 41.7 | 64.2 | 24.5 | 14.7 | |

| 402 | +| Llama 3.3 70B | 51.0 | 78.6 | 49.1 | 72.6 | 27.1 | 20.9 | |

| 403 | +| Llama 3.1 405B | 49.8 | 79.2 | 50.7 | 73.0 | 31.8 | 13.5 | |

| 404 | +| CePO (using Llama 3.3 70B) | 69.6 | 84.8 | 55.5 | 80.1 | 31.9 | 22.6 | |

| 405 | + |

| 406 | +#### CePO Ablation studies |

| 407 | + |

| 408 | +We conducted ablation studies to evaluate the impact of various hyperparameters in the CePO framework. Our results indicate that the chosen hyperparameter settings strike a good balance between computational cost and accuracy. |

| 409 | + |

| 410 | +Interestingly, the self-critique and quality improvement capabilities of existing off-the-shelf models do not always scale proportionally with increased inference compute. Addressing this limitation remains a key focus, and we plan to explore custom model fine-tuning as a potential solution in the future. |

| 411 | + |

| 412 | +| bestofn_n | planning_n | planning_m | bestofn_rating_type | Math-L5 | MMLU-Pro (Math) | GPQA | CRUX | Comments | |

| 413 | +| :-------: | :--------: | :--------: | :-----------------: | :-----: | :-------------: | :---: | :---: | :------------- | |

| 414 | +| 3 | 3 | 6 | absolute | 69.6 | 84.8 | 55.5 | 80.1 | Default config | |

| 415 | +| 3 | 3 | 6 | pairwise | 67.7 | 83.5 | 55.6 | 79.8 | | |

| 416 | +| 3 | 2 | 5 | absolute | 67.1 | 85.1 | 55.1 | 79.0 | | |

| 417 | +| 3 | 5 | 8 | absolute | 69.4 | 84.3 | 55.6 | 81.1 | | |

| 418 | +| 5 | 3 | 6 | absolute | 68.7 | 85.4 | 54.8 | 79.9 | | |

| 419 | +| 7 | 3 | 6 | absolute | 69.6 | 82.8 | 54.7 | 78.4 | | |

| 420 | +| 9 | 3 | 6 | absolute | 68.9 | 83.4 | 55.7 | 80.6 | | |

| 421 | + |

422 | 422 | ## References |

423 | 423 |

|

424 | 424 | - [Chain of Code: Reasoning with a Language Model-Augmented Code Emulator](https://arxiv.org/abs/2312.04474) - [Implementation](https://github.com/codelion/optillm/blob/main/optillm/plugins/coc_plugin.py) |

|

0 commit comments